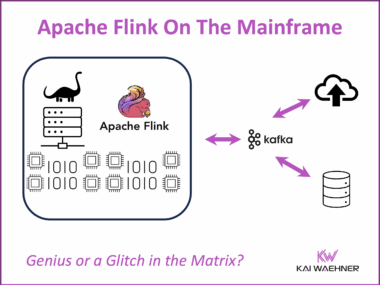

Stream Processing on the Mainframe with Apache Flink: Genius or a Glitch in the Matrix?

Running Apache Flink on a mainframe may sound surprising, but it is already happening and for good reason. As modern mainframes like IBM z17 evolve to support Linux, Kubernetes, and AI workloads, they are becoming a powerful platform for real-time stream processing. This blog explores why enterprises are deploying Apache Flink on IBM LinuxONE, how it works in practice, and what business value it brings. With Kafka providing the data backbone, Flink enables intelligent processing close to where business-critical data lives. The result is a modern hybrid architecture that connects core systems with cloud-based innovation without needing to fully migrate off the mainframe.