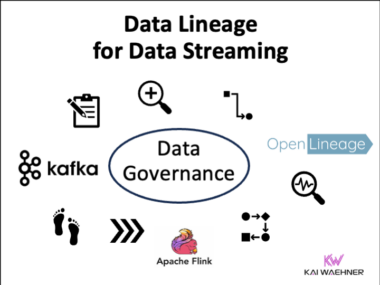

Open Standards for Data Lineage: OpenLineage for Batch AND Streaming

One of the greatest wishes of companies is end-to-end visibility in their operational and analytical workflows. Where does data come from? Where does it go? To whom am I giving access to? How can I track data quality issues? The capability to follow the data flow to answer these questions is called data lineage. This blog post explores market trends, efforts to provide an open standard with OpenLineage, and how data governance solutions from vendors such as IBM, Google, Confluent and Collibra help fulfil the enterprise-wide data governance needs of most companies, including data streaming technologies such as Apache Kafka and Flink.