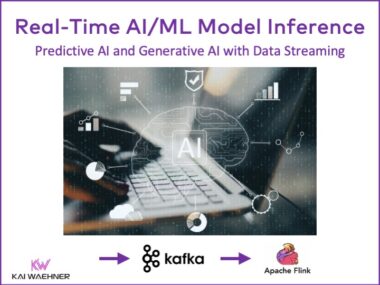

Real-Time Model Inference with Apache Kafka and Flink for Predictive AI and GenAI

Artificial Intelligence (AI) and Machine Learning (ML) are transforming business operations by enabling systems to learn from data and make intelligent decisions for predictive and generative AI use cases. Two essential components of AI/ML are model training and model inference. This blog post explores how data streaming with Apache Kafka and Apache Flink enhances the performance and reliability of model predictions. Whether the use case is real-time fraud detection, smart customer service applications, or predictive maintenance, understanding the value of data streaming for model inference is crucial for leveraging AI/ML effectively.