I added a new example to my “Machine Learning + Kafka Streams Examples” GitHub project:

“Python + Keras + TensorFlow + DeepLearning4j + Apache Kafka + Kafka Streams”.

This blog post discusses the motivation and explains why this is a great combination of technologies for scalable, reliable Machine Learning infrastructures. For more details about building Machine Learning / Deep Learning infrastructures that leverage the Apache Kafka open source ecosystem, check out these two blog posts:

- How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka

- Using Apache Kafka to Drive Cutting-Edge Machine Learning – Hybrid ML Architectures, AutoML, and more…

Deep Learning with Python and Keras

The goal was to show how you can easily deploy a model developed with Python and Keras to a Java / Kafka ecosystem. Keras lets you use different Deep Learning backends under the hood: TensorFlow, CNTK, or Theano. It acts as a high-level wrapper and is very easy to use, even for people new to Machine Learning. For these reasons, Keras is getting a lot of traction these days.

Deployment of Keras Models to Java / Kafka Ecosystem with Deeplearning4J (DL4J)

Machine Learning frameworks offer different options for integrating with the Java platform (and therefore with the Apache Kafka ecosystem), such as native Java APIs to load models or RPC interfaces to their framework-specific model servers.

Deeplearning4j seems to be becoming the de facto standard for deploying Keras models (if you trust Google search). This Deep Learning framework, built specifically for the Java platform, has added many features and bug fixes to its Keras Model Import. It already supports many Keras concepts and gets better with every new (beta) release. I used 1.0.0-beta3 for my GitHub example. Please check here for a complete list of supported Keras features in Deeplearning4j, such as Layers, Loss Functions, Activation Functions, Initializers and Optimizers.

You can either fully train a model with Keras and “just” embed it into a Java application for model inference, or re-use the model or parts of it to improve the model with DL4J’s Java API (aka transfer learning).

Example: Python + Keras + TensorFlow + Apache Kafka + DL4J

I implemented a simple but still impressive example:

An analytic model is developed and trained with Python, Keras and TensorFlow, then deployed to the Java and Kafka ecosystem. It is simple to implement (as you can see in the source code), but powerful, scalable and reliable.

There is no business case this time, just a technical demonstration of a simple ‘Hello World’ Keras model. Feel free to replace the model with any other Keras model trained with your backend of choice. You just need to replace the model binary (and use a model that is compatible with DeepLearning4J’s model importer). Then you can embed it into your Kafka application for real-time model inference.

Machine Learning Technologies

- Python

- DeepLearning4J

- Keras – a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano.

- TensorFlow – used as the backend under the hood of Keras

- DeepLearning4J’s KerasModelImport feature is used for importing the Keras / TensorFlow model into Java. The model used is its ‘Hello World’ example model.

- The Keras model was trained with this Python script.

Apache Kafka and DL4J for Real Time Predictions

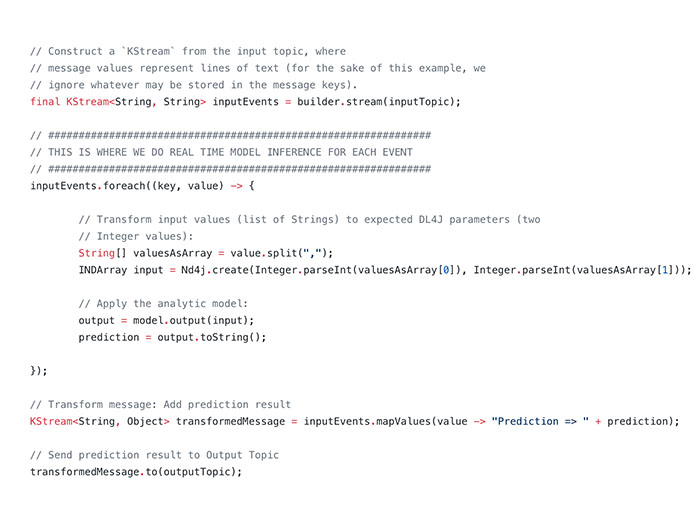

The trained model is embedded into a Kafka Streams application for real-time predictions. The core Kafka Streams logic uses the Deeplearning4j API to compute a prediction for each incoming record.
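The pattern can be sketched without any Kafka or DL4J dependencies. The following is a minimal illustration, not the code from the GitHub project: the `Model` interface is a hypothetical stand-in for the network imported through DL4J's Keras model import, the comma-separated message format is an assumption, and a plain Java list simulates the input topic. In a real Kafka Streams topology, the logic returned by `predictionMapper` is the kind of code that would run inside a `mapValues` step.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

public class EmbeddedModelSketch {

    /** Hypothetical stand-in for the imported network (a DL4J model in the real project). */
    interface Model {
        double[] output(double[] features);
    }

    /** Parses a comma-separated message value such as "1.0,2.0" (assumed format). */
    static double[] parseFeatures(String value) {
        String[] parts = value.split(",");
        double[] features = new double[parts.length];
        for (int i = 0; i < parts.length; i++) {
            features[i] = Double.parseDouble(parts[i].trim());
        }
        return features;
    }

    /** Builds the per-record function; the model is captured once and reused for every record. */
    static Function<String, String> predictionMapper(Model model) {
        return value -> {
            double[] prediction = model.output(parseFeatures(value));
            return "Prediction => " + Arrays.toString(prediction);
        };
    }

    /** Simulates the input topic with a list and applies the mapper to each record value. */
    static List<String> process(List<String> inputRecords, Model model) {
        Function<String, String> mapper = predictionMapper(model);
        return inputRecords.stream().map(mapper).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Toy model (assumption): returns the sum of its inputs as a single output value.
        Model sumModel = features -> new double[] { Arrays.stream(features).sum() };
        process(List.of("1.0,2.0", "0.5,0.25"), sumModel).forEach(System.out::println);
    }
}
```

Because the model is created once and captured by the mapper, each record only pays for inference, not for loading the model again.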

The full source code of my unit test is here: Kafka Streams + DeepLearning4j + Keras + TensorFlow. Just “git clone” the GitHub project and run the Maven build; it should work out of the box without any configuration.