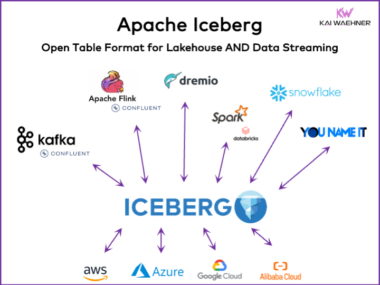

Apache Iceberg – The Open Table Format for Lakehouse AND Data Streaming

An open table format framework like Apache Iceberg is essential in the enterprise architecture to ensure reliable data management and sharing, seamless schema evolution, efficient handling of large-scale datasets and cost-efficient storage. This blog post explores market trends, adoption of table format frameworks like Iceberg, Hudi, Paimon, Delta Lake and XTable, and the product strategy of leading vendors of data platforms such as Snowflake, Databricks (Apache Spark), Confluent (Apache Kafka / Flink), Amazon Athena and Google BigQuery.