Fraud detection is becoming increasingly challenging across all industries in a digital world. Real-time data processing with Apache Kafka has become the de facto standard for correlating events and preventing fraud continuously before it happens. This blog post explores case studies for fraud prevention from companies such as PayPal, Capital One, ING Bank, Grab, and Kakao Games that leverage stream processing technologies like Kafka Streams, KSQL, and Apache Flink.

Fraud detection and the need for real-time data

Fraud detection and prevention is the adequate response to illicit activities in companies, such as fraud, embezzlement, and loss of assets caused by employee actions.

An anti-fraud management system (AFMS) comprises fraud auditing, prevention, and detection tasks. Larger companies use it as a company-wide system to prevent, detect, and adequately respond to fraudulent activities. These distinct elements can be interconnected or exist independently. An integrated solution is usually more effective if the architecture considers the interdependencies during planning.

Real-time data beats slow data across business domains and industries in almost all use cases, and there are few better examples than fraud prevention and fraud detection. Detecting fraud in your data warehouse or data lake hours or even minutes after the fact is not helpful, as the money is already lost. This “too late architecture” increases risk and revenue loss and leads to a lousy customer experience.

It is no surprise that most modern payment platforms and anti-fraud management systems implement real-time capabilities with streaming analytics technologies for these transactional and analytical workloads. The Kappa architecture powered by Apache Kafka has become the de facto standard, replacing the Lambda architecture.

A stream processing example in payments

Stream processing is the foundation for implementing fraud detection and prevention while the data is in motion (and relevant) instead of just storing data at rest for analytics (too late).

No matter what modern stream processing technology you choose (e.g., Kafka Streams, KSQL, Apache Flink), it enables continuous real-time processing and correlation of different data sets. Often, the combination of real-time and historical data helps find the right insights and correlations to detect fraud with a high probability.

Let’s look at a few examples of stateless and stateful stream processing for real-time data correlation with the Kafka-native tools Kafka Streams and ksqlDB. Similarly, Apache Flink or other stream processing engines can be combined with the Kafka data stream. Each option has pros and cons. While Flink might be the better fit for some projects, it is another engine and infrastructure you need to combine with Kafka.

Ensure you understand your end-to-end SLAs and requirements regarding latency, exactly-once semantics, potential data loss, etc. Then use the right combination of tools for the job.

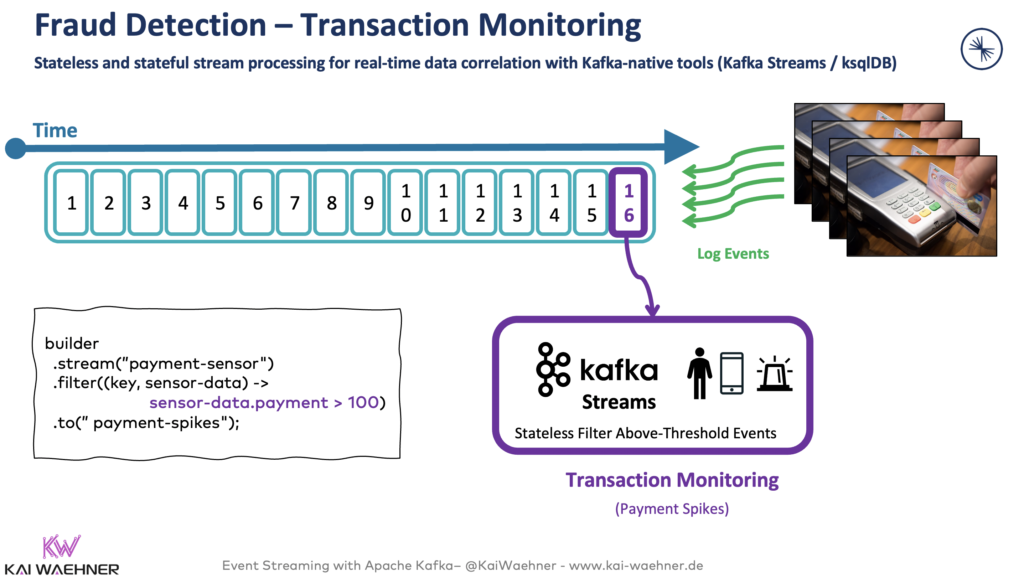

Stateless transaction monitoring with Kafka Streams

A Kafka Streams application, written in Java, processes each payment event in a stateless fashion one by one:

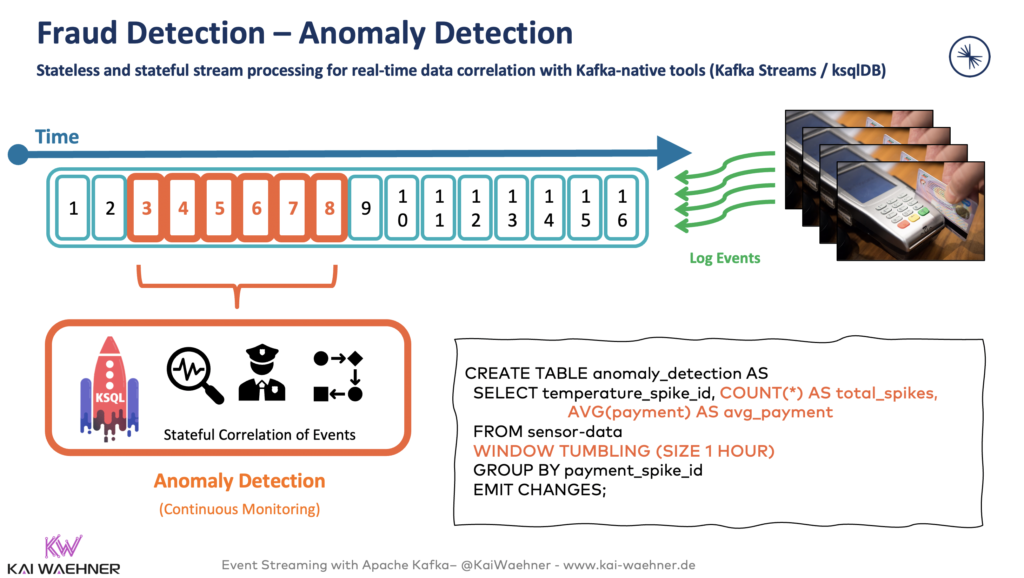
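As a rough text companion to the figure, the stateless step might be sketched in plain Java as follows. This is a minimal sketch, not the application shown above: it uses no Kafka dependency, and the `Payment` class, its fields, and the `THRESHOLD` rule are assumptions made for illustration. In an actual Kafka Streams topology, a predicate like `isSuspicious` would typically be passed to `KStream#filter`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java stand-in for a stateless filter step (no Kafka dependency).
class StatelessPaymentMonitor {

    // Hypothetical payment event; the field names are assumptions.
    static final class Payment {
        final String customerId;
        final double amount;

        Payment(String customerId, double amount) {
            this.customerId = customerId;
            this.amount = amount;
        }
    }

    // Assumed rule: a single payment above this amount is suspicious.
    static final double THRESHOLD = 10_000.0;

    // Stateless predicate: it looks only at the current event.
    static boolean isSuspicious(Payment p) {
        return p.amount > THRESHOLD;
    }

    // Processes events one by one and keeps the suspicious ones.
    static List<Payment> flag(List<Payment> events) {
        List<Payment> flagged = new ArrayList<>();
        for (Payment p : events) {
            if (isSuspicious(p)) {
                flagged.add(p);
            }
        }
        return flagged;
    }

    public static void main(String[] args) {
        List<Payment> events = Arrays.asList(
                new Payment("c1", 25.0),
                new Payment("c2", 12_500.0),
                new Payment("c1", 10_000.0));
        for (Payment p : flag(events)) {
            System.out.println("suspicious payment from " + p.customerId);
        }
    }
}
```

Because the predicate keeps no state between events, such a step can be scaled out across partitions without any coordination.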

Stateful anomaly detection with Kafka and KSQL

A ksqlDB application, written in SQL, continuously analyzes the transactions of the last hour per customer ID to identify malicious behavior:

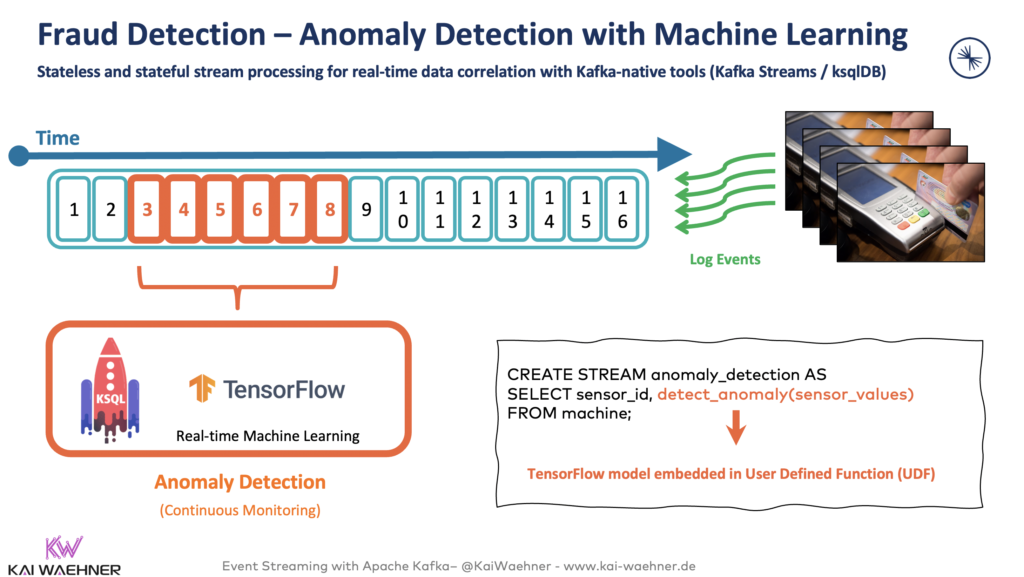
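The idea behind such a stateful check can be illustrated in plain Java. The sketch below is not the ksqlDB query from the figure: it keeps a sliding one-hour window of timestamps per customer in memory and flags a customer once the count exceeds a limit. The class name, the `maxPerWindow` limit, and the assumption that events arrive in timestamp order per customer are all hypothetical. In ksqlDB, the equivalent state would be managed by a windowed aggregation grouped by customer ID.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Plain-Java sketch of a per-customer, one-hour velocity check.
class HourlyVelocityDetector {

    static final long WINDOW_MS = 60L * 60L * 1000L; // one hour

    final int maxPerWindow; // assumed limit, not from the source
    final Map<String, Deque<Long>> recent = new HashMap<>();

    HourlyVelocityDetector(int maxPerWindow) {
        this.maxPerWindow = maxPerWindow;
    }

    // Returns true when this event pushes the customer over the limit.
    // Assumes timestamps arrive in non-decreasing order per customer.
    boolean onTransaction(String customerId, long timestampMs) {
        Deque<Long> times = recent.computeIfAbsent(customerId, k -> new ArrayDeque<>());
        // Evict timestamps that have fallen out of the one-hour window.
        while (!times.isEmpty() && times.peekFirst() <= timestampMs - WINDOW_MS) {
            times.pollFirst();
        }
        times.addLast(timestampMs);
        return times.size() > maxPerWindow;
    }

    public static void main(String[] args) {
        HourlyVelocityDetector detector = new HourlyVelocityDetector(3);
        long tenMinutes = 10L * 60L * 1000L;
        for (int i = 0; i < 4; i++) {
            boolean flagged = detector.onTransaction("c1", i * tenMinutes);
            System.out.println("event " + i + " flagged=" + flagged);
        }
    }
}
```

Unlike the stateless filter, this check depends on earlier events, which is why a stream processor needs fault-tolerant state stores to run it reliably.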

Kafka and Machine Learning with TensorFlow for real-time scoring for fraud detection

A KSQL UDF (user-defined function) embeds an analytic model trained with TensorFlow for real-time fraud prevention:
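A ksqlDB UDF is a Java class whose annotated method is called once per row, so the core of such a function is a scoring method. The sketch below shows only that scoring step in plain Java. The trained TensorFlow model is not available here, so a hand-written logistic function with made-up weights stands in for it; the class name, features, and weights are all assumptions for illustration.

```java
// Plain-Java stand-in for the scoring logic a UDF might wrap.
class FraudScorer {

    // Made-up weights standing in for a trained model (not from the source).
    static final double BIAS = -4.0;
    static final double W_AMOUNT = 0.0005; // weight per currency unit
    static final double W_FOREIGN = 2.0;   // weight for a foreign-country payment

    // Returns a fraud probability between 0 and 1 for one transaction.
    static double score(double amount, boolean foreignCountry) {
        double z = BIAS + W_AMOUNT * amount + (foreignCountry ? W_FOREIGN : 0.0);
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Turns the probability into a decision using a caller-supplied threshold.
    static boolean isFraud(double amount, boolean foreignCountry, double threshold) {
        return score(amount, foreignCountry) >= threshold;
    }

    public static void main(String[] args) {
        System.out.println("small domestic payment: " + score(20.0, false));
        System.out.println("large foreign payment:  " + score(8_000.0, true));
    }
}
```

Embedding the model in the stream processor avoids a remote call per event, which keeps scoring latency low enough to act before a transaction completes.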

Case studies across industries

Several case studies exist for fraud detection with Kafka. It is usually combined with stream processing technologies, such as Kafka Streams, KSQL, and Apache Flink. Here are a few real-world deployments across industries, including financial services, gaming, and mobility services:

– PayPal processes billions of messages with Kafka for fraud detection.

– Capital One views events as running its entire business (powered by Confluent). Its stream processing prevents $150 of fraud per customer on average per year by preventing personally identifiable information (PII) violations of in-flight transactions.

– ING Bank started many years ago by implementing real-time fraud detection with Kafka, Flink, and embedded analytic models.

– Grab is a mobility service in Asia that leverages fully managed Confluent Cloud, Kafka Streams, and ML for stateful stream processing in its internal GrabDefence SaaS service.

– Kakao Games, a South Korean gaming company, uses data streaming to detect and handle anomalies with 300+ patterns through KSQL.

Let’s explore the last case study in more detail.

Deep dive into fraud prevention with Kafka and KSQL in mobile gaming

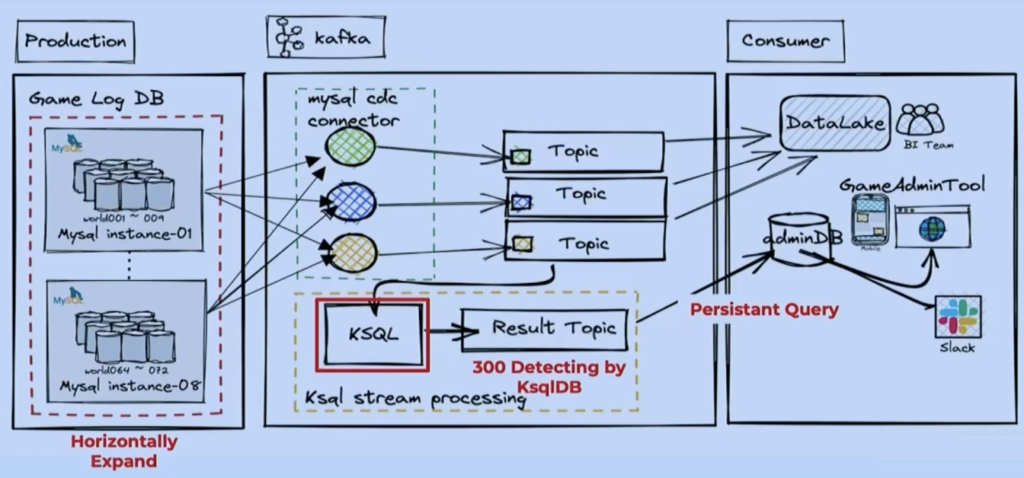

Kakao Games is a South Korea-based global video game publisher specializing in games across various genres for PC, mobile, and VR platforms. The company presented at Current 2022 – The Next Generation of Kafka Summit in Austin, Texas.

Here is a detailed summary of their compelling use case and architecture for fraud detection with Kafka and KSQL.

Use case: Detect malicious behavior by gamers in real-time

The challenge is evident when you understand the company’s history: Kakao Games has many outsourced games purchased via third-party game studios. Each game has its own log with its own structure and message format. Reliable real-time data integration at scale is required as a foundation for analytical business processes like fraud detection.

The goal is to analyze game logs and telemetry data in real-time. This capability is critical for preventing and remediating threats or suspicious actions from users.

Architecture: Change data capture and streaming analytics for fraud prevention

The Confluent-powered event streaming platform supports game log standardization. ksqlDB analyzes the incoming telemetry data for in-game abuse and anomaly detection.

Implementation: SQL recipes for data streaming with KSQL

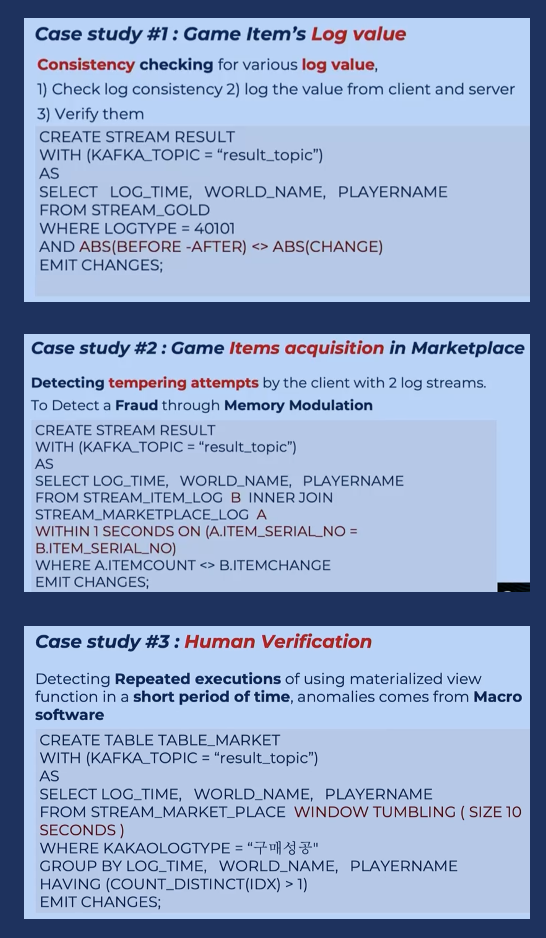

Kakao Games detects anomalies and prevents fraud with 300+ patterns through KSQL. Use cases include bonus abuse, multiple account usage, account takeover, chargeback fraud, and affiliate fraud.
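To make one of these patterns concrete, multiple account usage might be detected by tracking the distinct accounts seen per device. The following plain-Java sketch is an illustration only, not one of Kakao Games' patterns: the class name, the `deviceId` and `accountId` fields, and the limit are assumptions.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Plain-Java sketch of a "multiple accounts per device" check.
class MultiAccountDetector {

    final int maxAccountsPerDevice; // assumed limit, not from the source
    final Map<String, Set<String>> accountsByDevice = new HashMap<>();

    MultiAccountDetector(int maxAccountsPerDevice) {
        this.maxAccountsPerDevice = maxAccountsPerDevice;
    }

    // Returns true when the device has been used by more distinct
    // accounts than the configured limit allows.
    boolean onLogin(String deviceId, String accountId) {
        Set<String> accounts = accountsByDevice.computeIfAbsent(deviceId, k -> new HashSet<>());
        accounts.add(accountId); // repeated logins of the same account do not count twice
        return accounts.size() > maxAccountsPerDevice;
    }

    public static void main(String[] args) {
        MultiAccountDetector detector = new MultiAccountDetector(2);
        String[] accounts = {"a", "b", "a", "c"};
        for (String account : accounts) {
            boolean flagged = detector.onLogin("device-1", account);
            System.out.println("login " + account + " flagged=" + flagged);
        }
    }
}
```

In a streaming SQL engine, the same rule would usually be expressed as a grouped aggregation over login events rather than as hand-written state.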

Here are a few code examples written with SQL code using KSQL:

Results: Reduced risk and improved customer experience

Kakao Games can do real-time data tracking and analysis at scale. Business benefits are faster time to market, increased active users, and more revenue thanks to a better gaming experience.

Fraud detection only works in real-time

Ingesting data with Kafka into a data warehouse or a data lake is only part of a good enterprise architecture. Tools like Apache Spark, Databricks, Snowflake, or Google BigQuery enable finding insights within historical data. But real-time fraud prevention is only possible if you act while the data is in motion. Otherwise, the fraud has already happened by the time you detect it.

Stream processing provides a scalable and reliable infrastructure for real-time fraud prevention. Choosing the right technology is essential, but all major frameworks, like Kafka Streams, KSQL, or Apache Flink, are very good. Hence, the case studies of PayPal, Capital One, ING Bank, Grab, and Kakao Games look different in detail. Still, they share the same foundation: data streaming powered by the de facto standard Apache Kafka to reduce risk, increase revenue, and improve customer experience.

If you want to learn more about streaming analytics with the Kafka ecosystem, check out how Apache Kafka helps in cybersecurity to create situational awareness and threat intelligence and how to learn from a concrete fraud detection example with Apache Kafka in the crypto and NFT space.

How do you leverage data streaming for fraud prevention and detection? What does your architecture look like? What technologies do you combine? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.