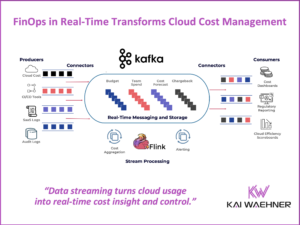

Kafka and MQTT are two complementary technologies. Together they allow you to build end-to-end IoT integration from the edge to the data center, whether on premises or in the public cloud. I talked about this topic at Kafka Summit SF in San Francisco in October 2018: “Processing IoT Data from End to End with MQTT and Apache Kafka”. The main goal was to discuss different Kafka-native approaches and their trade-offs for integrating Kafka and MQTT.

As preparation for the talk, I created two demo projects on GitHub. I want to share them here, plus a video recording that demos them. The slide deck from my talk is also shared below. The full video recording will be available for free on the website of Kafka Summit.

Motivation for using Apache Kafka and MQTT together

MQTT is a widely used ISO standard (ISO/IEC PRF 20922) publish-subscribe-based messaging protocol. MQTT has many implementations such as Mosquitto or HiveMQ. For a good overview, see Wikipedia’s comparison of MQTT brokers. MQTT is mainly used in Internet of Things scenarios (like connected cars or smart home). But it is also used more and more in mobile devices due to its support for WebSockets.

However, MQTT is not built for high scalability, long-term storage or easy integration with legacy systems. Apache Kafka is a highly scalable distributed streaming platform. Kafka ingests, stores, processes and forwards high volumes of data from thousands of IoT devices.

Therefore, MQTT and Apache Kafka are a perfect combination for end-to-end IoT integration from edge to data center (and back, of course, i.e. bi-directional).

Let’s take a look at two different Kafka-native alternatives for building this integration. Both solutions allow highly scalable and mission-critical integration of IoT scenarios, leveraging the features and benefits of the Apache Kafka open source ecosystem. However, they use different concepts, each with its own trade-offs.

MQTT Broker + Apache Kafka + Kafka Connect MQTT Connector

This project focuses on the integration of MQTT sensor data into Kafka via MQTT Broker and Kafka Connect for further processing:

In this approach, Kafka Connect pulls the data from the MQTT broker and writes it to the Kafka broker. You can leverage all features of Kafka Connect, such as built-in fault tolerance, load balancing, Converters, Single Message Transforms (SMTs) for routing and filtering, running different connectors in one Connect worker instance, and other Kafka Connect benefits.
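To make the Kafka Connect approach more concrete, here is a minimal sketch of how the JSON body for registering an MQTT source connector through the Kafka Connect REST API (`POST /connectors`) could be assembled. The connector class and the `mqtt.*` / `kafka.topic` property names are assumptions based on the Confluent MQTT source connector; the broker URI, topic names and the helper function are purely illustrative. Your connector may need further properties, so check its documentation before using this.

```python
import json


def build_mqtt_source_connector(name, mqtt_uri, mqtt_topics, kafka_topic, tasks_max=1):
    """Build the JSON body for POST /connectors on a Kafka Connect worker."""
    return {
        "name": name,
        "config": {
            # Assumed connector class and property names; verify them against
            # the documentation of the MQTT connector you actually install.
            "connector.class": "io.confluent.connect.mqtt.MqttSourceConnector",
            "tasks.max": str(tasks_max),
            "mqtt.server.uri": mqtt_uri,
            "mqtt.topics": ",".join(mqtt_topics),
            "kafka.topic": kafka_topic,
            # MQTT payloads are raw bytes, so pass them through unchanged.
            "value.converter": "org.apache.kafka.connect.converters.ByteArrayConverter",
        },
    }


if __name__ == "__main__":
    # Hypothetical broker address and topic names, for illustration only.
    body = build_mqtt_source_connector(
        name="mqtt-source",
        mqtt_uri="tcp://localhost:1883",
        mqtt_topics=["car/engine/temperature", "car/engine/rpm"],
        kafka_topic="car-sensors",
    )
    print(json.dumps(body, indent=2))
```

The printed JSON can then be posted to a Connect worker, for example with `curl` against the worker's REST port. Converters and Single Message Transforms would be added as further entries in the same `config` map.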

Here is the GitHub project, including a demo script to try it out yourself:

Apache Kafka + Kafka Connect + MQTT Connector + Sensor Data

MQTT Proxy + Apache Kafka (no MQTT Broker)

As an alternative to using Kafka Connect, you can leverage Confluent MQTT Proxy to integrate data from IoT devices directly, without the need for an MQTT Broker:

In this approach, the devices push the data directly to the Kafka broker via the Confluent MQTT Proxy, instead of using the pull approach of Kafka Connect (where a source connector subscribes to the MQTT broker as a client and writes to Kafka with producers under the hood). You can scale MQTT Proxy easily with a load balancer (similar to Confluent REST Proxy). The huge advantage is that you do not need an additional MQTT Broker in the middle. This reduces effort and cost significantly, and it makes MQTT Proxy the better approach when the goal is simply to push data into Kafka.
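Without an MQTT Broker in between, the proxy has to decide which Kafka topic each incoming MQTT message belongs to. Confluent MQTT Proxy is configured for this with a list of `kafkaTopic:regex` pairs (the `topic.regex.list` property, as far as I know). The following sketch only illustrates that mapping idea in plain Python; it is not the proxy's implementation. The function names are hypothetical, and the first-match and full-match semantics are assumptions.

```python
import re


def parse_topic_regex_list(value):
    """Parse 'kafkaTopic:regex, kafkaTopic:regex' into ordered (topic, pattern) pairs.

    Simplification: entries are split on commas, so a regex that itself
    contains a comma is not supported by this sketch.
    """
    rules = []
    for entry in value.split(","):
        kafka_topic, _, pattern = entry.strip().partition(":")
        rules.append((kafka_topic, re.compile(pattern)))
    return rules


def resolve_kafka_topic(rules, mqtt_topic):
    """Return the Kafka topic of the first rule whose regex matches, else None."""
    for kafka_topic, pattern in rules:
        # Assumption: the whole MQTT topic name must match the regex.
        if pattern.fullmatch(mqtt_topic):
            return kafka_topic
    return None


if __name__ == "__main__":
    rules = parse_topic_regex_list("temperature:.*temperature, brightness:.*brightness")
    for mqtt_topic in ["car/engine/temperature", "home/livingroom/brightness", "car/engine/rpm"]:
        print(mqtt_topic, "->", resolve_kafka_topic(rules, mqtt_topic))
```

In this sketch, an MQTT topic that matches no rule resolves to `None`. How the real proxy treats such messages is something to check in its documentation.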

Here is the GitHub project, including a demo script to try it out yourself:

Deep Learning UDF for KSQL for Streaming Anomaly Detection of MQTT IoT Sensor Data

Both approaches have their trade-offs. The good thing is that you have the choice. Choose the right tool and architecture for your use case.

Slide Deck from Kafka Summit SF 2018

Here is the slide deck from my presentation at Kafka Summit 2018 in San Francisco. The focus is on discussing different Kafka-native approaches and their trade-offs for implementing MQTT-Kafka integration with Kafka Connect, MQTT Proxy or REST / HTTP.

Video Recording: Kafka MQTT Integration via Kafka Connector / Confluent MQTT Proxy

Here is a 15-minute live demo showing both options:

Conclusion: MQTT and Apache Kafka are complementary and integrate well

Both MQTT and Apache Kafka have great benefits for their own use cases, but neither of them is an all-rounder for everything. Combined, they are a powerful solution for building end-to-end IoT scenarios from edge to data center and back.

Different approaches exist to integrate MQTT and Apache Kafka end-to-end. I am not just talking about connectivity, but also about data processing, filtering, routing, etc. You should compare Kafka Connect + MQTT Broker vs. MQTT Proxy without MQTT Broker vs. REST / HTTP integration. All have their trade-offs. The key advantage they all share, however, is their Kafka-native integration: they leverage the features of Kafka under the hood, enabling high throughput, scalability and reliability.

By the way: If you want to see an IoT example with Kafka sink applications like Elasticsearch / Grafana, please take a look at the project “Kafka and KSQL for Streaming IoT Data from MQTT to the Real Time UI”. This example shows how to implement the integration with Elasticsearch and Grafana via Kafka Connect.