FinOps in Real Time: How Data Streaming Transforms Cloud Cost Management

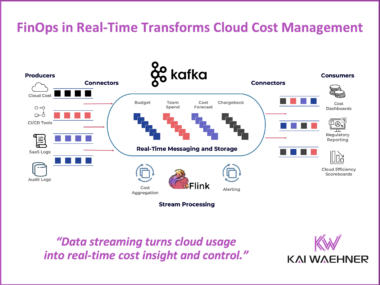

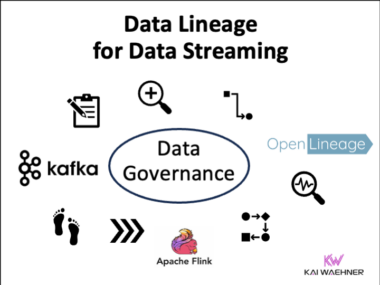

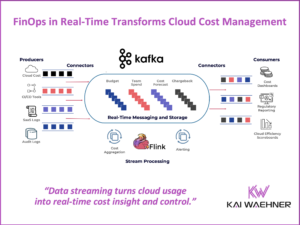

FinOps bridges the gap between finance and engineering to control cloud spend in real time. However, many organizations still rely on delayed, batch-driven data pipelines that limit visibility and slow down decisions. This blog post explores how Apache Kafka and Apache Flink enable real-time, governed FinOps by streaming cloud usage data as it is generated. It covers the challenges of data governance, compliance, and cross-functional accountability, and how a streaming architecture addresses them. Real-world examples from Bitvavo and SumUp show how financial services companies use event-driven platforms to scale securely, build cost-aware teams, and improve agility.