The public sector includes many different areas. Some groups, such as the military, leverage cutting-edge technology. Others, like public administration, are years or even decades behind. This blog series explores how the public sector leverages data in motion powered by Apache Kafka to add value through innovative new applications and the modernization of legacy IT infrastructures. This post is part 3: Use cases and architectures for Government and Citizen Services.

Blog series: Apache Kafka in the Public Sector and Government

This blog series explores why many governments and public infrastructure sectors leverage event streaming for various use cases. Learn about real-world deployments and different architectures for Kafka in the public sector:

- Life is a Stream of Events

- Smart City

- Citizen Services (THIS POST)

- Energy and Utilities

- National Security

Subscribe to my newsletter to get updates immediately after publication. I will also update the above list with direct links to the posts of this blog series once they are published.

As a side note: If you wonder why healthcare is not on the above list, healthcare is a blog series of its own. While the government can provide public health care through national healthcare systems, it is part of the private sector in many other cases.

Government and Citizen Services powered by Apache Kafka

We talk a lot about customer 360 and customer experience in the private sector. The public sector is different. Increasing revenue is usually not the primary motivation. For that reason, most countries’ citizen-related processes are terrible experiences.

Nevertheless, the same holds true as in the private sector: Real-time data beats slow data! It increases efficiency and makes citizens happy. I want to share some real-world examples from the US and Europe, where data in motion improved processes and reduced bureaucracy.

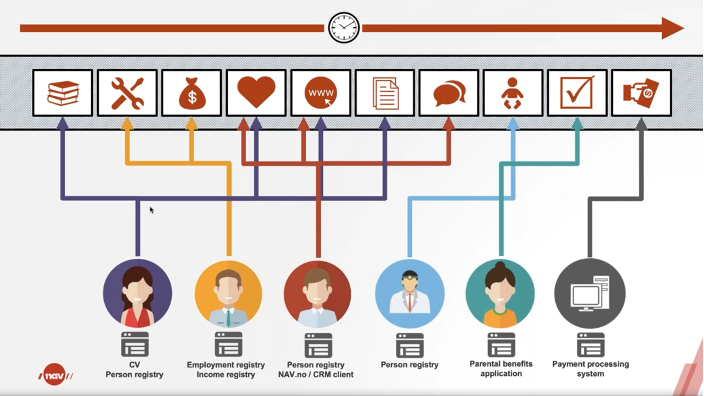

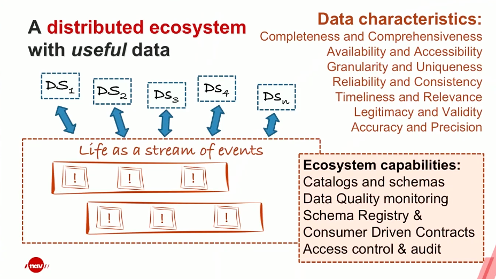

Norwegian Work and Welfare Department (NAV) – Personal Life as a Stream of Events

The Norwegian Work and Welfare Department (NAV) is responsible for unemployment benefits, health insurance, social security, pensions, and parental benefits. The organization has 23,000 employees and disburses USD 50Bn per year. They assist people through all phases of life within work, family, health, retirement, and social security.

NAV had an impressive vision: Imagine a government that knows just enough about you to provide services without you applying for them first.

This vision is a reality today. NAV presented the implementation at Kafka Summit 2018. NAV implemented the “life is a stream of events” concept by leveraging event streaming technology. Citizens get a tremendous real-time experience, while the public administration has optimized processes and reduced costs.

NAV’s real-time event streaming infrastructure provides several significant benefits to the enterprise architecture:

- The integration infrastructure enables proper decoupling between the applications with domain-driven design (DDD) powered by Apache Kafka. This approach supports diverging forces in the government.

- Treating data as a product makes it possible to announce the arrival of events so that the various business domains can trigger processes and update domain-specific data. You might know this concept from the new buzzword “data mesh”.

- Digitalization reduced bureaucracy, not by turning paper into digital forms, but by reengineering and optimizing the business processes (by avoiding spaghetti architectures).

- Data privacy and accuracy are ensured, including compliance with laws like GDPR via data minimization, purpose limitation, and data portability.
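The decoupling described in the list above can be illustrated with a tiny in-memory sketch. It does not use Kafka or any Kafka client; it only mimics the idea of an append-only log that several business domains read independently, each at its own offset. The class `Topic`, the topic name `life_events`, the group names, and the event fields are all hypothetical and are not taken from NAV's implementation.

```python
from collections import defaultdict


class Topic:
    """Append-only event log; each consumer group tracks its own read offset."""

    def __init__(self):
        self.log = []                    # events are only ever appended
        self.offsets = defaultdict(int)  # consumer group -> next index to read

    def publish(self, event):
        self.log.append(event)

    def poll(self, group):
        """Return the events this group has not seen yet and advance its offset."""
        start = self.offsets[group]
        self.offsets[group] = len(self.log)
        return self.log[start:]


life_events = Topic()  # hypothetical topic of citizen life events
life_events.publish({"citizen": "c-1", "type": "child_born"})
life_events.publish({"citizen": "c-2", "type": "retired"})

# Two domains consume the same log independently; the producer knows neither.
parental_benefits = [e for e in life_events.poll("parental") if e["type"] == "child_born"]
pensions = [e for e in life_events.poll("pensions") if e["type"] == "retired"]
```

Because every group keeps its own offset, a new domain can be added later and read the full history without any change to the producer or to the other consumers.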

U.S. Department of Veterans Affairs (VA) – Improved Benefit Services

The United States Department of Veterans Affairs (VA) is a department of the federal government charged with providing life-long healthcare services to eligible military veterans.

Event streaming powered by Apache Kafka improved the government’s veteran benefit services for ratings, awards, and claims. The business value is enormous for veterans, their families, and the government:

- Assess, route, and verify the service request in real-time.

- Improve the experience for veterans and their families.

- Reduce management and operations complexity.

Government providing Omnichannel Claim Management leveraging Kafka

Let’s take a look at the claim example. Several ways exist to file a claim with the VA: in person (VSO), via the internet, or by postal mail. In the retail industry, such a challenge is called omnichannel customer experience. The IT infrastructure needs to handle changes in the status of a claim, requests for additional documentation, context when calling into the call center, due benefits when checking in at a hospital, and so on.

Event Streaming powered by Apache Kafka is a great approach to implement omnichannel requirements due to the unique combination of real-time data processing and storage, not just in retail but also in government services. VA chose Confluent for true decoupling, real-time integration, and omnichannel communication. Components include Kafka Streams, ksqlDB, Oracle CDC, Tiered Storage, etc.
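To make the omnichannel idea more concrete, here is a minimal sketch in plain Python, without Kafka. It folds an ordered stream of claim events from different channels into a table holding the latest state per claim, which is conceptually similar to what a table abstraction in a stream processor provides. The function name, field names, channel names, and status values are assumptions for illustration and do not come from the VA's system.

```python
def latest_claim_status(events):
    """Fold an ordered event stream into a table: claim_id -> latest state."""
    table = {}
    for event in events:
        # A later event for the same claim overwrites the earlier state.
        table[event["claim_id"]] = {
            "status": event["status"],
            "channel": event["channel"],
        }
    return table


# Hypothetical events arriving from different channels, in order.
events = [
    {"claim_id": "A1", "channel": "mail", "status": "filed"},
    {"claim_id": "B2", "channel": "web", "status": "filed"},
    {"claim_id": "A1", "channel": "call_center", "status": "documents_requested"},
]

status = latest_claim_status(events)
```

Every channel (call center, web portal, hospital check-in) can look up the same table, so a veteran sees a consistent claim status no matter where the last update came from.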

Here is an excellent quote from the Kafka Summit presentation: “Implementing Confluent enables our agile teams to create reusable solutions that unlock Veteran data; provide real-time actionable information all while reducing complexity and load on existing data sources.”

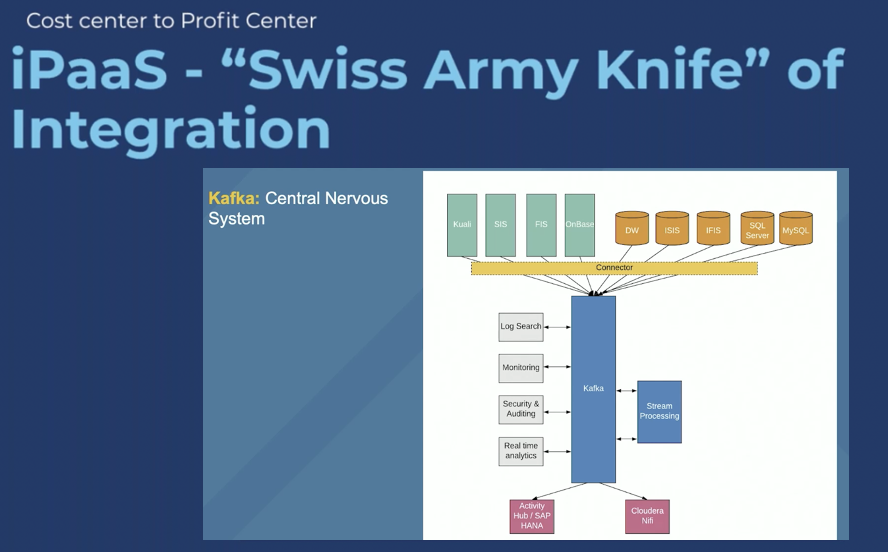

University of California, San Diego – Integration Platform as a Service (iPaaS)

The University of California, San Diego (UC) is one of the world’s leading public research universities, located in beautiful La Jolla, California. The COVID-19 pandemic forced them to do a “once in a lifetime transition”.

Due to the pandemic, UC had to build out their online learning platform and add a new #1 priority: a comfortable and reliable student testing process. The biggest challenge in this process is the integration between many legacy applications and modern technologies.

Apache Kafka is the de facto standard for modern integration and middleware projects today. You might have seen my content discussing the difference between traditional middleware like MQ, ETL, ESB, iPaaS, and the Apache Kafka ecosystem. For similar reasons, the University of California, San Diego chose Confluent as the cloud-native Integration-platform-as-a-service (iPaaS) middleware layer to set data in motion for 90 million records a day.

iPaaS “Swiss Army Knife” of Integration for the Government with Kafka

A key benefit is the faster time to market with agile development and decoupled applications. Additionally, this opens up new revenue streams by serving other UC campuses, including the UC Office of the President, and new use cases such as tracing student health.

Government Benefit: From Cost Center to Profit Center with Kafka

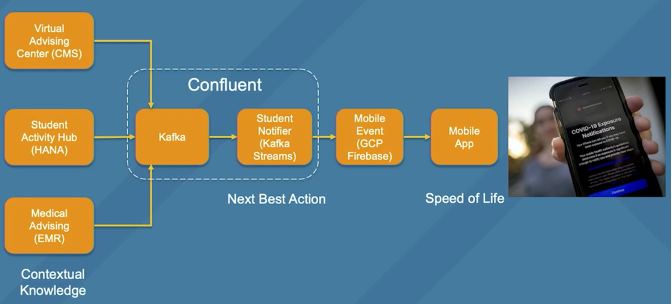

A modern, scalable, and reliable real-time middleware layer enables new use cases beyond integration. A great example from the UC is their use case to provide the next best action and contextual knowledge in real-time.

Continuous data processing from different sources in motion (aka stream processing or streaming analytics) makes these use cases possible. UC leverages Kafka Streams. Kafka-native tools such as Kafka Streams or ksqlDB enable end-to-end data processing at scale in real-time without the need for yet another big data framework (like Storm, Flink, or Spark Streaming).
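As a rough illustration of what stream processing means, the following plain-Python sketch counts events per key in tumbling (fixed-size, non-overlapping) time windows. It processes a small list in one pass, whereas a real stream processor such as Kafka Streams updates such aggregates continuously as events arrive and keeps the state fault-tolerant. The function name, event names, and the 60-second window are assumptions and are not taken from UC's deployment.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) for fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp, key in events:
        # Align the timestamp to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[(window_start, key)] += 1
    return counts


# Hypothetical (timestamp_in_seconds, event_type) pairs.
events = [(0, "login"), (12, "login"), (61, "login"), (65, "quiz_submitted")]
counts = tumbling_window_counts(events, 60)
```

With these inputs, the two logins at seconds 0 and 12 fall into the window starting at 0, while the events at seconds 61 and 65 fall into the window starting at 60.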

Data in Motion for Comfortable Citizen Services and Reduced Government Bureaucracy

Real-time data beats slow data. That’s not just true for the private sector. This post showed several real-world examples of how the government can improve processes, reduce costs and bureaucracy, and improve citizens’ experience.

Apache Kafka and its ecosystem provide the capabilities to implement a modern, scalable, reliable real-time middleware layer. Additionally, stream processing allows building new innovative applications that have not been possible before.

How do you leverage event streaming in the public sector? Are you working on citizen services or other government projects? What technologies and architectures do you use? What projects have you already worked on or are you planning? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.