Event Streaming with Apache Kafka disrupts the retail industry. Walmart’s real-time inventory system and Target’s omnichannel distribution and logistics are two great examples. This blog post explores a key use case for postmodern retail companies: Real-time omnichannel retail and customer 360.

Disruption of the Retail Industry with Apache Kafka

Various deployments across the globe leverage event streaming with Apache Kafka for very different use cases. Consequently, Kafka is the right choice, whether you need to optimize the supply chain, disrupt the market with innovative business models, or build a context-specific customer experience.

I discussed the use cases of Apache Kafka in retail in a dedicated blog post: “The Disruption of Retail with Event Streaming and Apache Kafka”. Learn about the real-time inventory system from Walmart, omnichannel distribution and logistics at Target, context-specific customer 360 at AO.com, and much more.

This post explores a specific use case in more detail: Real-time omnichannel retail and customer 360 with Apache Kafka. Learn about a possible architecture to deploy this scenario across the whole supply chain: From design and manufacturing to sales and aftersales. The architecture is very flexible. Any infrastructure (one or multiple cloud providers and/or on-premise data centers, bare metal, containers, Kubernetes) can be used.

‘My Porsche’ – A Global Omnichannel Platform for customers, fans, and enthusiasts

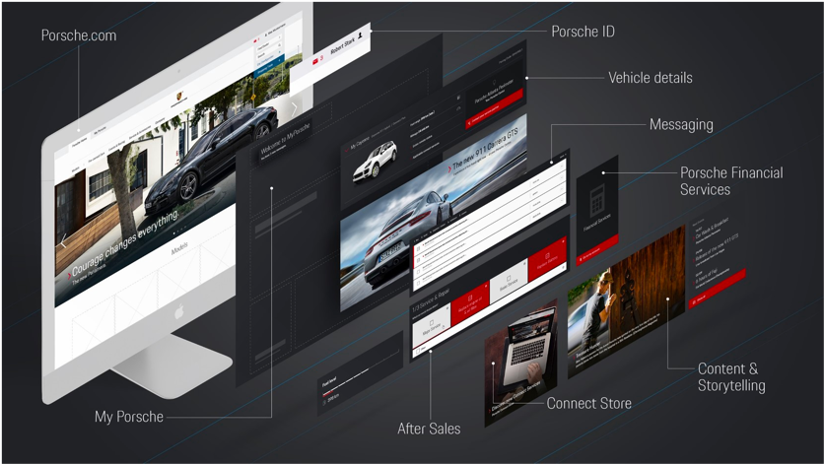

Let’s start with a great example from the automotive industry: ‘My Porsche’ is the innovative and modern digital omnichannel platform from Porsche for keeping a great relationship with their customers. Porsche can describe it better than I can:

“The way automotive customers interact with brands has changed, accompanied by a major transformation of customer needs and requirements. Today’s brand experience expands beyond the car and other offline touchpoints to include various digital touchpoints. Automotive customers expect a seamless brand experience across all channels — both offline and online.”

The ‘Porsche Dev’ group from Porsche published a few great posts about their architecture. Here is a good overview:

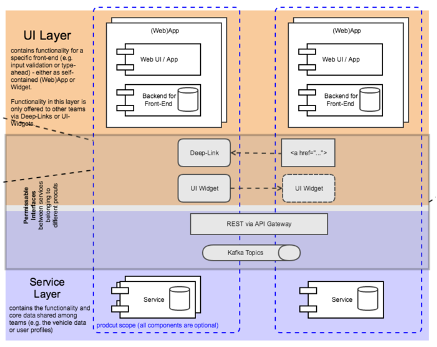

Kafka provides real decoupling between applications. Hence, Kafka became the de facto standard for microservices and Domain-Driven Design (DDD) in many companies. It allows building independent and loosely coupled but scalable, highly available, and reliable applications.

That’s exactly what Porsche describes for their usage of Apache Kafka throughout its supply chain:

“The recent rise of data streaming has opened new possibilities for real-time analytics. At Porsche, data streaming technologies are increasingly applied across a range of contexts, including warranty and sales, manufacturing and supply chain, connected vehicles, and charging stations,” writes Sridhar Mamella (Platform Manager Data Streaming at Porsche).

As you can see in the above architecture, there is no need to have a fight between REST / HTTP and Event Streaming / Kafka enthusiasts! As I explained in detail before, most microservice architectures need Apache Kafka and API Management for REST. HTTP and Kafka complement each other very well!

Last but not least, a great podcast link: The Porsche guys talked about “Apache Kafka and Porsche: Fast Cars and Fast Data” to explain their event streaming platform called Streamzilla.

Omnichannel Retail and Customer 360 with Kafka

After exploring an example, let’s take a look at an omnichannel architecture with Apache Kafka from different points of view: Marketing+Sales, Analytics, and Manufacturing.

The good news first: All business units can use the same Kafka cluster! That’s actually pretty common and a key reason why Kafka is used so much today: Events generated in one domain are consumed for very different use cases by different departments and stakeholders. Kafka stores events durably in its log, so each consumer reads them at its own pace and can even replay older events. This real decoupling is quite different from HTTP/REST web services or traditional message queues like RabbitMQ.

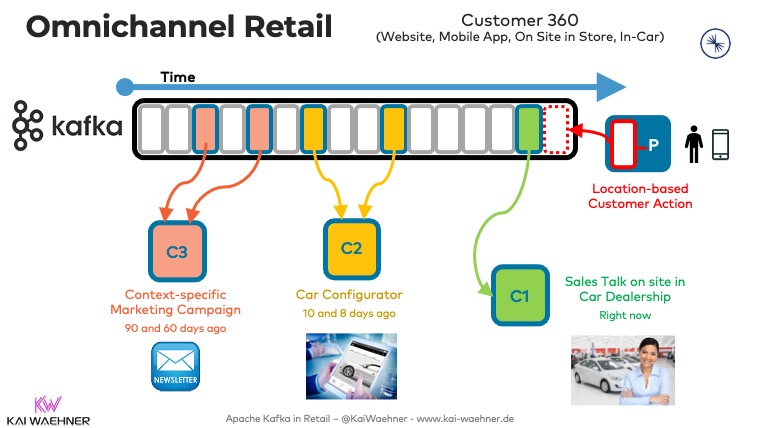
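To make the decoupling idea more concrete, here is a minimal sketch in plain Python. It is not the Kafka client API. The `EventLog` class, the group names, and the event fields are all hypothetical stand-ins, assuming a single topic partition that lives in memory. It only illustrates the principle: events stay in the log after being read, and each consumer group keeps its own read position.

```python
from collections import defaultdict


class EventLog:
    """In-memory stand-in for one Kafka topic partition (illustration only)."""

    def __init__(self):
        self._events = []                 # append-only; reading never removes events
        self._offsets = defaultdict(int)  # next offset to read, tracked per consumer group

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1      # offset of the stored event

    def poll(self, group, max_events=10):
        """Return the next unread events for a group and advance only that group's offset."""
        start = self._offsets[group]
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch


log = EventLog()
log.append({"customer": "c-1", "type": "car_configured"})
log.append({"customer": "c-1", "type": "order_placed"})

# Marketing reads both events right away.
marketing = log.poll("marketing")

# A third event arrives before analytics has read anything.
log.append({"customer": "c-1", "type": "car_delivered"})

# Analytics starts later and still sees the full history from offset 0.
analytics = log.poll("analytics")

# Marketing only receives what it has not seen yet.
marketing_next = log.poll("marketing")

print(len(marketing), len(analytics), len(marketing_next))
```

With a classic message queue, the first reader would typically have removed the messages. Here the analytics group joins late and still reads everything, without the marketing group noticing.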

Marketing, Sales, and Aftersales (aka Customer 360)

From a marketing and sales perspective, companies need to correlate all the customer actions. No matter how old. No matter if online or offline channels. The following shows such a retail process for selling a car:

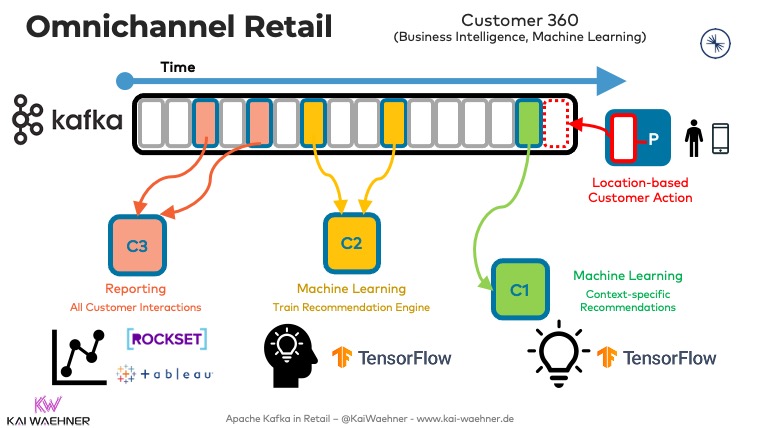
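Correlating customer actions across channels can be sketched as grouping events by customer and ordering them by time. The following plain-Python example is only an illustration under stated assumptions: the field names (`customer_id`, `channel`, `action`, `ts`) and the sample events are made up, and a real deployment would do this continuously with a stream processing engine on top of Kafka rather than on a list in memory.

```python
from collections import defaultdict

# Hypothetical events from different channels; all field names are assumptions.
events = [
    {"customer_id": "c-42", "channel": "web", "action": "configured_car", "ts": 3},
    {"customer_id": "c-42", "channel": "dealership", "action": "test_drive", "ts": 5},
    {"customer_id": "c-7", "channel": "mobile_app", "action": "viewed_offer", "ts": 4},
    {"customer_id": "c-42", "channel": "newsletter", "action": "clicked_link", "ts": 1},
]


def build_customer_360(events):
    """Group events by customer and order each customer's history by timestamp."""
    timelines = defaultdict(list)
    for event in events:
        timelines[event["customer_id"]].append(event)
    return {
        customer: sorted(history, key=lambda e: e["ts"])
        for customer, history in timelines.items()
    }


view = build_customer_360(events)
for event in view["c-42"]:
    print(event["ts"], event["channel"], event["action"])
```

The result is one timeline per customer that mixes online and offline touchpoints, no matter in which order or from which channel the events arrived.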

Reporting, Analytics, and Machine Learning

The data science team also uses the same events used for marketing and sales to create reports for management and train analytic models for making better decisions and recommendations in the future. The new, improved analytic model is then deployed back to the production pipeline of the marketing and sales channel.

In this example, Kafka is used together with TensorFlow for streaming machine learning. The reporting is done via Rockset’s native Kafka integration supporting ANSI SQL. This allows easy integration with business intelligence tools such as Tableau, Qlik, or Power BI in the same way as with other databases and batch data lakes.

Obviously, a key strength of Kafka is that it works together with any other technology. No matter if open source or proprietary. SaaS or self-managed, you choose. And no matter if real-time or batch. In most real-world Kafka deployments, the connectivity to systems changes over time.

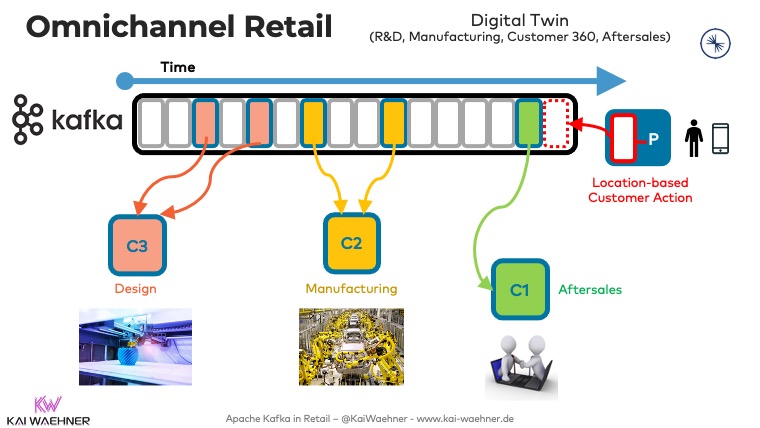

Design and Manufacturing

Here is a pretty cool fact about some postmodern omnichannel retail solutions: Customers can monitor the whole manufacturing and delivery process. When buying a car, you can track the manufacturing steps on your mobile app to know the exact status of your future car. When you finally pick up the car, all future data is also stored (for you and the carmaker) for various use cases.

Of course, this does not end when you pick up the car. Aftersales will become super important for carmakers in the future. Check out what you can already do today with a Tesla (which is actually more software on wheels than just hardware).

Apache Kafka as Digital Twin for Customer 360 and Omnichannel Aftersales

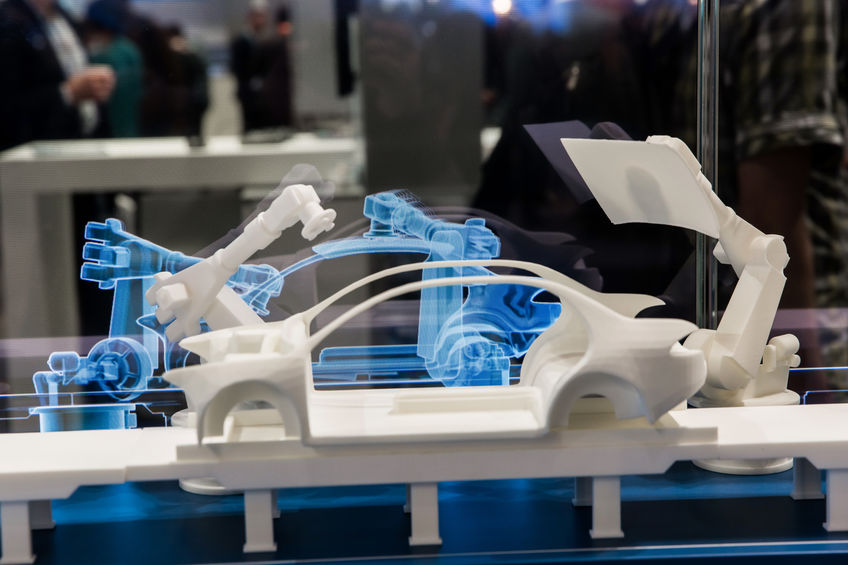

A key buzzword that has to be mentioned here is the digital twin or digital thread. The automotive industry is the perfect example for building a digital twin of each car. Both the carmaker and the buyer can get huge benefits. Use cases like predictive maintenance or context-specific upselling of new features are now possible.

Apache Kafka is the heart of this digital twin in many cases. Often, projects also combine Kafka with other technologies. For instance, Kafka can be the data integration pipeline into MongoDB. The latter stores the data of the cars. Check out the following two posts to learn more about this topic:

- Apache Kafka as Digital Twin for Open, Scalable, Reliable Industrial IoT (IIoT)

- IoT Architectures for Digital Twin with Apache Kafka
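The digital twin idea can be sketched as folding the event stream of each car into its latest known state. The example below is plain Python and purely illustrative: the event types, field names, and VIN values are hypothetical, and in a real project the resulting state would live in a database such as MongoDB, fed from Kafka, instead of a dictionary in memory.

```python
def apply_event(twin, event):
    """Return a new twin state with one event applied (the old state is not mutated)."""
    updated = dict(twin)
    kind = event["type"]
    if kind == "manufacturing_step":
        updated["status"] = event["step"]
    elif kind == "delivered":
        updated["status"] = "delivered"
    elif kind == "telemetry":
        updated["mileage_km"] = event["mileage_km"]
    elif kind == "feature_activated":
        updated["features"] = twin.get("features", []) + [event["feature"]]
    return updated


def build_twins(events):
    """Fold all events, in order, into one state record per car (keyed by VIN)."""
    twins = {}
    for event in events:
        vin = event["vin"]
        twins[vin] = apply_event(twins.get(vin, {"vin": vin}), event)
    return twins


# Hypothetical event stream covering manufacturing, delivery, and aftersales.
car_events = [
    {"vin": "VIN-001", "type": "manufacturing_step", "step": "painting"},
    {"vin": "VIN-002", "type": "manufacturing_step", "step": "assembly"},
    {"vin": "VIN-001", "type": "delivered"},
    {"vin": "VIN-001", "type": "telemetry", "mileage_km": 1200},
    {"vin": "VIN-001", "type": "feature_activated", "feature": "lane_assist"},
]

twins = build_twins(car_events)
print(twins["VIN-001"])
print(twins["VIN-002"])
```

Because the twin is derived only from events, replaying the same stream rebuilds the same state. Use cases like predictive maintenance or upselling can then work on the per-car record.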

Omnichannel Customer 360 with Kafka for Happy Customers and Increased Revenue

In conclusion, Event Streaming with Apache Kafka plays a key role in this evolution of re-inventing the retail business. Walmart, Target, and many other retail companies rely on Apache Kafka and its ecosystem to provide a real-time infrastructure to make the customer happy, increase revenue, and stay competitive in this tough industry. Omnichannel and customer 360, end-to-end and in real time, are key for success in a postmodern retail company. No matter if you operate in your own data center or in one or more public clouds.

What are your experiences and plans for event streaming in the retail industry? Did you already build applications with Apache Kafka for omnichannel, aftersales, or customer 360? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.