Natural Language Processing (NLP) helps many real-world projects in service desk automation, customer conversations with chatbots, content moderation in social networks, and many other use cases. Apache Kafka has become the predominant orchestration layer in these machine learning platforms for integrating various data sources, processing at scale, and real-time model inference. This article shows how companies across different industries, such as the carmaker BMW, the online travel and booking company Expedia, and the dating app Tinder, leverage the combination of event streaming and machine learning for reliable real-time conversational AI, NLP, and chatbots.

Natural Language Processing (NLP)

Natural language processing is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of “understanding” the contents of text, documents, and speech, including the contextual nuances of the language within them. The technology can then accurately extract information and insights from the text and categorize and organize the text itself.

Use cases for natural language processing frequently involve speech recognition, natural language understanding, translation, and natural language generation.

Like most other ML disciplines, NLP can draw on a vast range of algorithms. NLP took off in the 2010s, when representation learning and deep neural network-style machine learning methods became widespread. Modern ML frameworks such as TensorFlow, combined with the elastic compute power of the public cloud, made NLP usable for companies of any size.

Conversational AI has become mainstream because of Deep Learning

A chatbot is a software application used to conduct an online chat conversation via text or text-to-speech instead of direct contact with a live human agent. Chatbot systems are designed to convincingly simulate how a human behaves as a conversational partner; they typically require continuous tuning and testing, and many in production still cannot converse adequately. Chatbots are used in dialog systems for various purposes, including customer service, request routing, and information gathering.

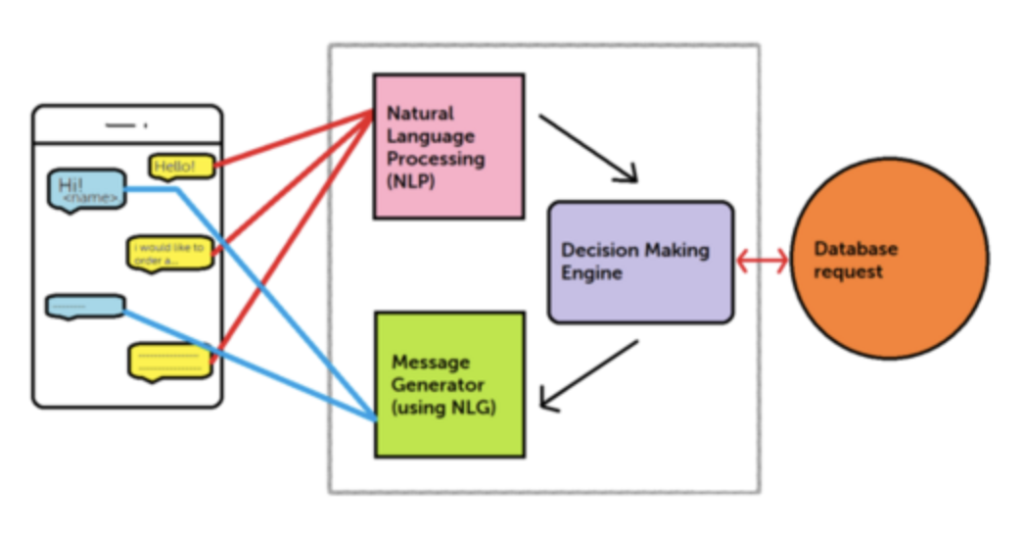

discover.bot published an excellent article explaining how a chatbot works behind the scenes using NLP. A chatbot combines Natural Language Understanding (NLU), which interprets what the user says, and Natural Language Generation (NLG), which produces the response.
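To make the NLU/NLG split concrete, here is a deliberately tiny sketch. It assumes a toy word-overlap "model" in place of a real trained NLU model and fixed response templates in place of real NLG; the intents, example utterances, and function names (`train`, `understand`, `respond`) are hypothetical and not taken from discover.bot or any chatbot product. It also separates a training step from a scoring step.

```python
"""Toy chatbot sketch: word-overlap NLU plus template-based NLG.

Hypothetical illustration only; real chatbots use trained statistical or
neural models instead of counting shared words.
"""
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and replace punctuation with spaces before splitting.
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return cleaned.split()


def train(examples: dict[str, list[str]]) -> dict[str, Counter]:
    # "Training": count word frequencies per intent from labeled utterances.
    return {intent: Counter(w for u in utterances for w in tokenize(u))
            for intent, utterances in examples.items()}


def understand(model: dict[str, Counter], text: str) -> str:
    # NLU: pick the intent whose vocabulary overlaps most with the message.
    words = tokenize(text)
    scores = {intent: sum(counts[w] for w in words) for intent, counts in model.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


RESPONSES = {  # NLG: one hypothetical response template per intent
    "layover": "Let me check the layover time on your itinerary.",
    "hotel_amenities": "Let me look up the amenities of your hotel.",
    "unknown": "Sorry, I did not understand. Let me connect you to an agent.",
}


def respond(model: dict[str, Counter], text: str) -> str:
    # Scoring: apply the trained model to a new message, then generate a reply.
    return RESPONSES[understand(model, text)]


if __name__ == "__main__":
    model = train({
        "layover": ["How long is my layover?", "When does my connecting flight leave?"],
        "hotel_amenities": ["Does my hotel have a pool?", "Is there a gym at the hotel?"],
    })
    print(respond(model, "how long is the layover in Denver"))
```

Running it prints the layover reply, because the message shares more words with the layover examples than with the hotel examples.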

Like any machine learning or deep learning application, a chatbot requires model training (teaching the chatbot) and model scoring (applying the chatbot in a dialog with a human). Building a chatbot is therefore a machine learning problem, with the related tools, APIs, and cloud services. How does event streaming with Kafka fit into this story?

Machine Learning / NLP and Kafka – An Impedance Mismatch

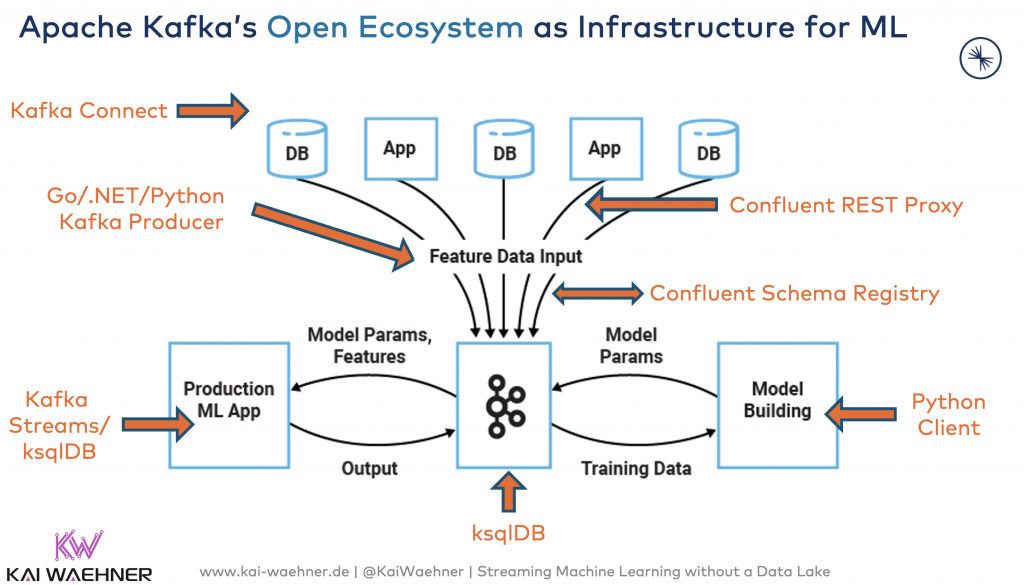

I have written a lot about machine learning and Kafka in the past. TL;DR: Machine learning requires data integration and processing at scale, and model predictions often require reliable and robust real-time applications. That’s where Kafka and its ecosystem fit into the story.

For more details:

- How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka

- Using Apache Kafka to Drive Cutting-Edge Machine Learning

- Model Deployment and Real-Time Analytics with Kafka Streams and ksqlDB

- Streaming Machine Learning with Kafka-native Model Deployment

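These articles describe different workarounds for the impedance mismatch between the Python-centric world of data scientists and production infrastructure built on other programming platforms. A few examples: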

- Some companies let their data science teams deploy Python code in Docker containers in production and integrate it with other applications and programming platforms like Java, Go, or .NET / C++.

- A few projects use Faust as a Kafka-native stream processing library for Python (with several limitations compared to Kafka Streams or ksqlDB).

- Embedding NLP models (trained with any machine learning framework using any programming language, including Python) into a native Kafka application for robust model scoring is a well-known option.

- Last but not least, many model servers have added Kafka-native streaming interfaces that use the Kafka protocol directly, as an alternative to RPC communication via HTTP or gRPC.
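The embedded-model option can be sketched as a consume-score-produce loop. This is a minimal sketch under stated assumptions: in-memory lists stand in for the input and output Kafka topics, and `score_sentiment` is a hypothetical keyword-based placeholder for a real NLP model loaded into the application. With a real Kafka client such as confluent-kafka, the loop would read records via `Consumer.poll()` and write them via `Producer.produce()` instead.

```python
import json
from typing import Callable, Iterable, Iterator

POSITIVE = {"great", "thanks", "love"}   # hypothetical keyword lists
NEGATIVE = {"broken", "angry", "cancel"}


def score_sentiment(text: str) -> float:
    # Placeholder for an embedded NLP model: returns a score in [-1.0, 1.0].
    words = text.lower().split()
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return (pos - neg) / max(pos + neg, 1)


def process(records: Iterable[bytes],
            model: Callable[[str], float]) -> Iterator[bytes]:
    # Consume -> score -> produce. The model runs in-process, so scoring an
    # event needs no remote HTTP/gRPC call to a separate model service.
    for raw in records:
        event = json.loads(raw)
        event["sentiment"] = model(event["text"])
        yield json.dumps(event).encode("utf-8")


if __name__ == "__main__":
    input_topic = [  # hypothetical chat events serialized as JSON bytes
        json.dumps({"user": "u1", "text": "Thanks great service"}).encode("utf-8"),
        json.dumps({"user": "u2", "text": "My booking is broken"}).encode("utf-8"),
    ]
    output_topic = list(process(input_topic, score_sentiment))
    for record in output_topic:
        print(record.decode("utf-8"))
```

Embedding the model keeps latency low and removes a runtime dependency on a separate model service; the trade-off is that a new model version requires redeploying or reloading the application.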

Let’s now look at a few real-world examples for machine learning, NLP, chatbots, and the Kafka ecosystem in companies such as BMW, Expedia, and Tinder.

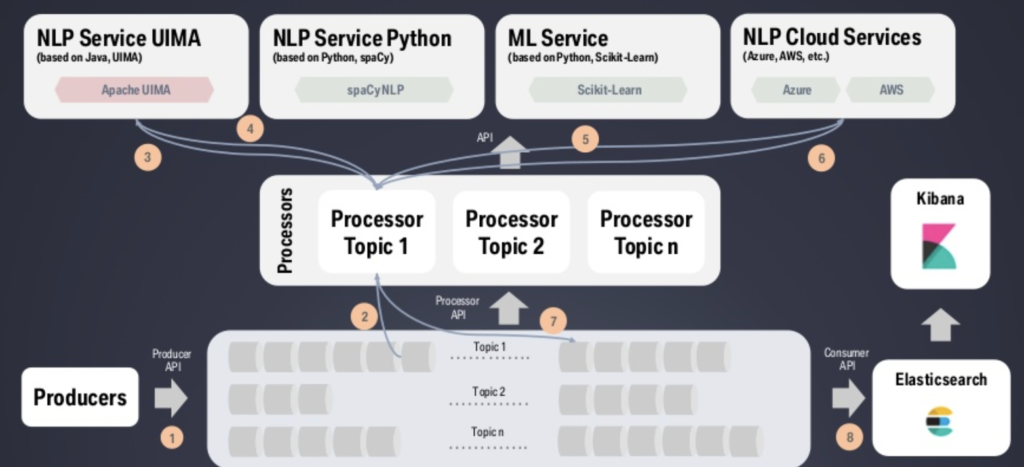

BMW – Kafka as Orchestration Layer for NLP and Chatbots

BMW uses NLP for several use cases:

- Service desk automation

- Speech analysis of customer interaction center (CIC) calls to improve service quality

- Self-service using smart knowledge bases

- Agent support

- Chatbots

Key characteristics of BMW's NLP platform:

- Flexible integration: Multiple supported interfaces for different deployment scenarios, including various machine learning technologies, programming languages, and cloud providers

- Modular end-to-end pipelines: Services can be connected to provide full-fledged NLP applications.

- Configurability: High agility for each deployment scenario

Expedia – Conversations Platform powered by Cloud-native Kafka

Expedia is a leading online travel and booking company. They have many use cases for machine learning. One of my favorite examples is their Conversations Platform, built on Kafka and Confluent Cloud to provide an elastic cloud-native application.

The goal of Expedia’s Conversations Platform was simple: Enable millions of travelers to have natural language conversations with an automated agent via text, Facebook, or their channel of choice. Let them book trips, make changes or cancellations, and ask questions:

- “How long is my layover?”

- “Does my hotel have a pool?”

- “How much will I get charged if I want to bring my golf clubs?”

The platform then takes everything known about that customer across all of Expedia’s brands and applies machine learning models to give customers what they are looking for, automatically and in real time, whether a straightforward answer or a complex new itinerary.

Real-time Orchestration Realized in Four Months

Such a platform is no place for batch jobs, back-end processing, or offline APIs. To quickly make decisions that incorporate contextual information, the platform needs data in near real-time, and it needs it from a wide range of services and systems. Meeting these needs meant architecting the Conversations Platform around a central nervous system based on Confluent Cloud and Apache Kafka. Kafka made it possible to orchestrate data from loosely coupled systems, enrich data as it flows between them so that by the time it reaches its destination, it is ready to be acted upon, and surface aggregated data for analytics and reporting.
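Enriching data as it flows is commonly implemented as a stream-table join. The following sketch illustrates the idea without a Kafka cluster: it assumes a single ordered list of events in which "profile" events update a table (the latest value per key wins, as in a compacted topic) and "message" events are joined with the current profile. The event shapes and field names are hypothetical, not Expedia's data model; in the Kafka ecosystem, Kafka Streams or ksqlDB would typically perform such a join.

```python
from typing import Iterable, Iterator


def enrich(events: Iterable[tuple[str, tuple]]) -> Iterator[dict]:
    # Table state: latest profile per customer, built from a changelog stream.
    profiles: dict[str, dict] = {}
    for kind, payload in events:
        if kind == "profile":
            customer_id, profile = payload
            profiles[customer_id] = profile  # upsert: newest value wins
        elif kind == "message":
            customer_id, text = payload
            # Join the message with the profile known at this point in time,
            # so downstream ML models receive a context-ready record.
            yield {"customer_id": customer_id, "text": text,
                   "profile": profiles.get(customer_id, {})}


if __name__ == "__main__":
    events = [  # hypothetical events in arrival order
        ("profile", ("c42", {"tier": "gold", "upcoming_trip": "LIS"})),
        ("message", ("c42", "How long is my layover?")),
        ("message", ("c7", "Does my hotel have a pool?")),
    ]
    for record in enrich(events):
        print(record)
```

Customer `c7` has no profile yet, so its message is enriched with an empty dict. A later profile update does not change messages already emitted, which matches the semantics of a stream-table join.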

Expedia built this platform from zero to production in four months. That’s the tremendous advantage of using a fully managed serverless event streaming platform as the foundation. The project team can focus on the business logic.

The Covid pandemic put the elastic platform to the test: Companies were hit with a tidal wave of customer questions, cancellations, and re-bookings. Throughout this once-in-a-lifetime event, the Conversations Platform proved up to the challenge, auto-scaling as necessary and taking much of the load off live agents.

Expedia’s Migration from MQ to Kafka as Foundation for Real-time Machine Learning and Chatbots

I have explained several times that event streaming is more than just a (scalable) message queue. Check out my old (but still accurate and relevant) comparison between MQ and Kafka, or the newer comparison between cloud-native iPaaS and Kafka.

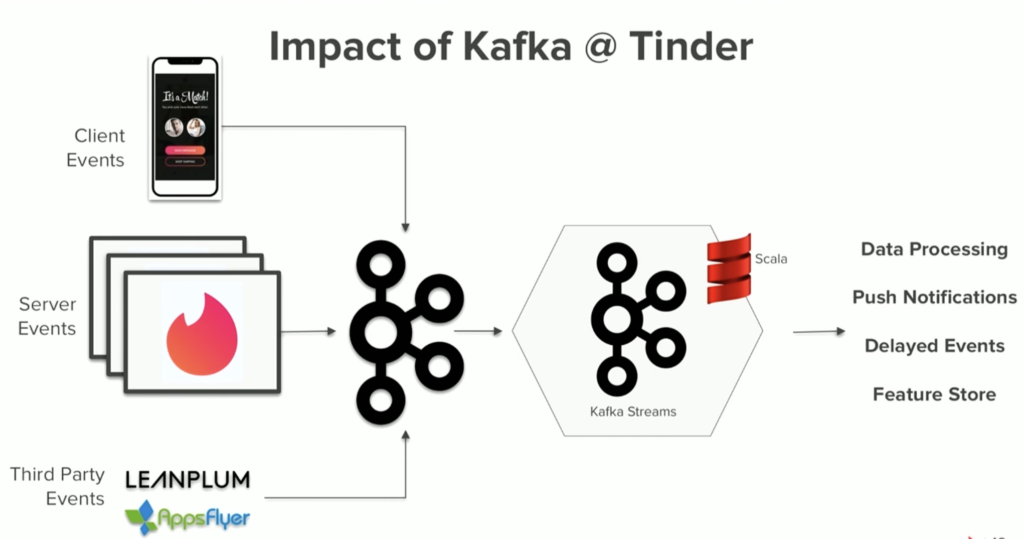

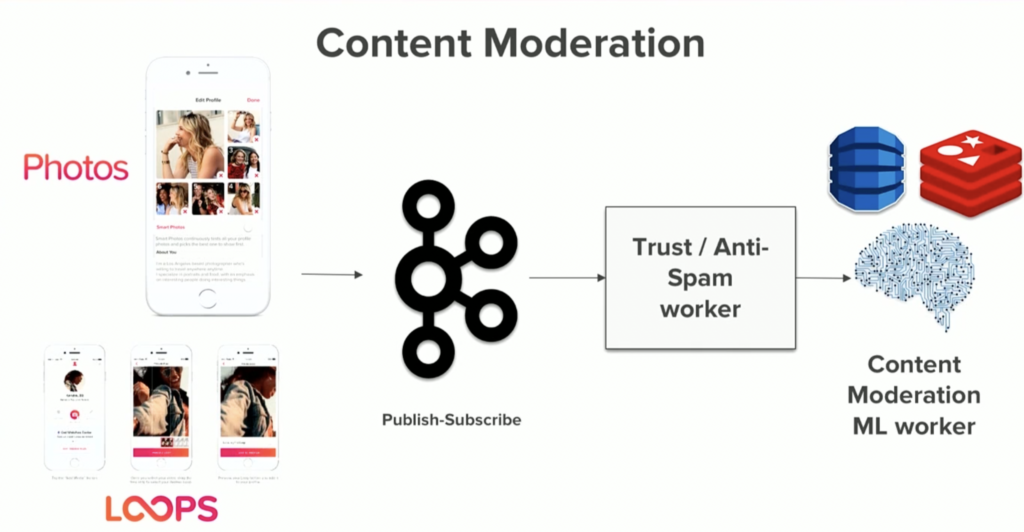

Tinder – Content Moderation with Kafka Streams and ML

The dating app Tinder is a great example for which I can think of dozens of NLP use cases. At a past Kafka Summit, Tinder talked about their Kafka-powered machine learning platform.

Tinder is a massive user of Kafka and its ecosystem for various use cases, including content moderation, matching, recommendations, reminders, and user reactivation. They used Kafka Streams as a Kafka-native stream processing engine for metadata processing and correlation in real time at scale:

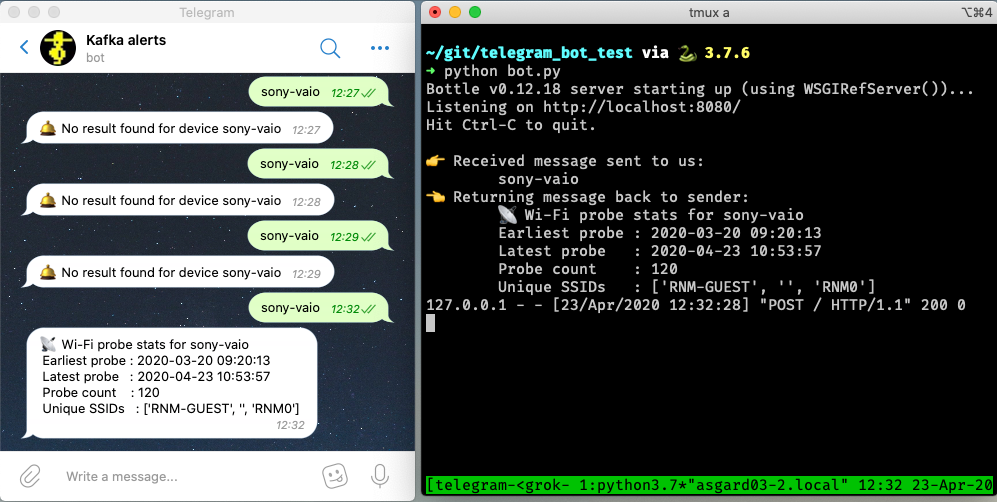
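Correlating moderation events per user over time is a typical windowed aggregation. The sketch below counts flagged messages per user in tumbling windows; the event layout, the 60-second window, and the threshold of 3 are hypothetical values for illustration and do not describe Tinder's implementation. In Kafka Streams, the corresponding building blocks are `groupByKey()`, `windowedBy(...)`, and `count()`.

```python
from collections import defaultdict


def flagged_users(events, window_seconds=60, threshold=3):
    """Return sorted (user_id, window_start) pairs with >= threshold flags.

    Each event is (timestamp_seconds, user_id, flagged), where `flagged` is
    the output of a hypothetical content-moderation model.
    """
    counts = defaultdict(int)
    for timestamp, user_id, flagged in events:
        if flagged:
            # Tumbling window: align the event to the start of its window.
            window_start = timestamp - timestamp % window_seconds
            counts[(user_id, window_start)] += 1
    return sorted(key for key, n in counts.items() if n >= threshold)


if __name__ == "__main__":
    events = [
        (0, "a", True), (10, "a", True), (50, "a", True),  # 3 flags in [0, 60)
        (70, "a", True),                                   # 1 flag in [60, 120)
        (5, "b", True), (20, "b", False),
    ]
    print(flagged_users(events))
```

Unlike this batch function over a finite list, Kafka Streams updates the counts continuously as events arrive and can accept late events within a configurable grace period.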

Telegram Bot API – ML in a Streaming App

Reddit Text Processing – NLP with Streaming Model Predictions

The drawback of the Telegram example above is that the chatbot is integrated via a REST API. Remote procedure calls (RPC) are still predominant in machine learning. However, RPC is an anti-pattern in the event streaming world, as it creates challenges concerning robustness, latency, scalability, and error handling. RPC integration is fine for many use cases, but with Kafka-native alternatives available, RPC calls are often unnecessary.

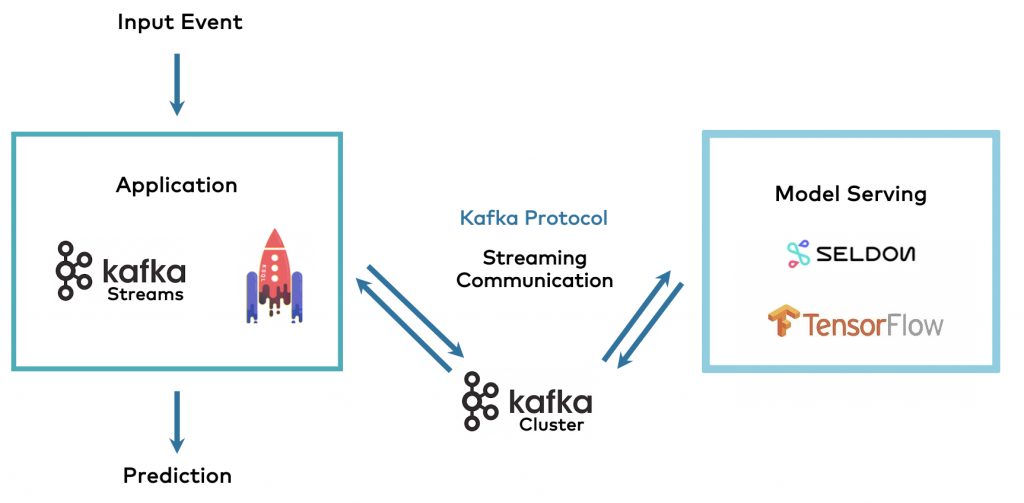

Kafka-native streaming machine learning is an alternative for model predictions. The two deployment options are embedding an analytic model into the Kafka application, or using a model server that supports event streaming in addition to RPC (HTTP/gRPC) calls. I wrote a detailed article on the pros and cons of both approaches for model predictions in Kafka applications.

The following shows an example of a Kafka-native integration between a model server and other applications using the Kafka protocol:

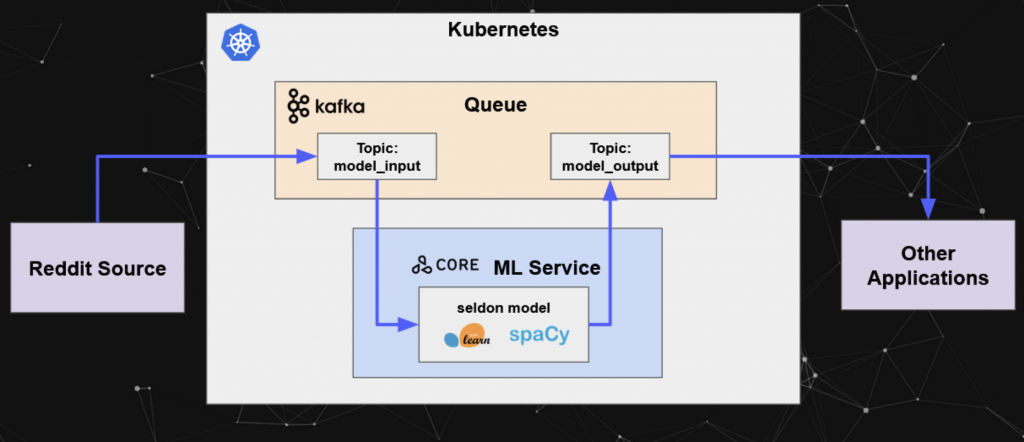
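The request/response pattern behind such an integration can be sketched without RPC: the client writes a prediction request with a correlation ID to a request topic, the model server consumes it and writes the result to a response topic, and the client matches results by correlation ID. In this minimal sketch, `InMemoryTopic` is a hypothetical stand-in for a Kafka topic and `toy_model` a hypothetical placeholder classifier; neither reflects the API of Seldon or any other model server.

```python
import uuid
from collections import deque


class InMemoryTopic:
    """Hypothetical stand-in for a Kafka topic: records are read in order."""

    def __init__(self):
        self._records = deque()

    def produce(self, key, value):
        self._records.append((key, value))

    def poll(self):
        # Return the next (key, value) record, or None if nothing is pending.
        return self._records.popleft() if self._records else None


def toy_model(text):
    # Placeholder classifier standing in for a deployed NLP model.
    return "negative" if "cancel" in text.lower() else "positive"


def model_server_step(requests, responses, model):
    # The model server consumes requests from a topic instead of serving
    # HTTP/gRPC calls, and publishes each prediction under the same key.
    while (record := requests.poll()) is not None:
        correlation_id, text = record
        responses.produce(correlation_id, model(text))


if __name__ == "__main__":
    requests, responses = InMemoryTopic(), InMemoryTopic()
    pending = {}
    for text in ["Great trip, thanks!", "Please cancel my booking"]:
        correlation_id = str(uuid.uuid4())
        pending[correlation_id] = text
        requests.produce(correlation_id, text)

    model_server_step(requests, responses, toy_model)

    while (record := responses.poll()) is not None:
        correlation_id, label = record
        print(pending.pop(correlation_id), "->", label)
```

Because client and model server share only topics, the client never blocks on a synchronous call, and the model server can be restarted or replaced independently; with real Kafka, a consumer group would distribute requests across multiple model server instances.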

The Seldon model server is an example that already supports a Kafka interface. The Seldon team demoed how they train and deploy a machine learning model leveraging a scalable stream processing architecture for an automated text prediction use case. They use scikit-learn (sklearn) and spaCy to train an ML model on the Reddit Content Moderation dataset and deploy that model using Seldon Core for real-time processing of text data from Kafka streams.

Kafka-native NLP to build the next Conversational AI and Chatbot

Apache Kafka has become the de facto standard for event streaming. One pillar of Kafka use cases is ML platforms, including NLP-related applications such as conversational AI, chatbots, and speech translation for improving and automating service desks, content moderation, and plenty of other use cases.

A Kafka-based orchestration and integration layer provides true decoupling in a scalable real-time platform. The benefits for ML platforms include back-pressure handling, pre-processing, and aggregations in real time at any scale. Another benefit is the capability to connect different communication paradigms and technologies. The examples from BMW, Expedia, and Tinder show what Kafka-based NLP infrastructure can look like.

How do you build conversational AI, chatbots, and other NLP applications? What technologies and architectures do you use? Are event streaming and Kafka part of the architecture? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.