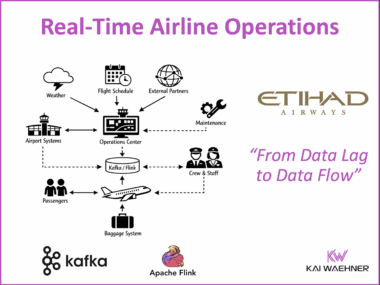

Etihad Airways Makes Airline Operations Real-Time with Data Streaming

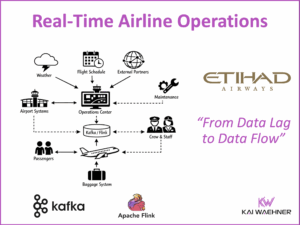

Airlines face constant pressure to deliver reliable service while managing complex operations and rising customer expectations. This blog post explores how Etihad Airways uses real-time data streaming with Apache Kafka and Apache Flink to improve operational efficiency and the passenger experience. Based on a presentation at the Data Streaming World Tour in Dubai, it highlights how Etihad built an event-driven platform to move from delayed insights to real-time action. The post also connects this story to other data streaming success stories in the aviation industry, including Lufthansa, Cathay Pacific, Virgin Australia, and Schiphol Airport in Amsterdam.