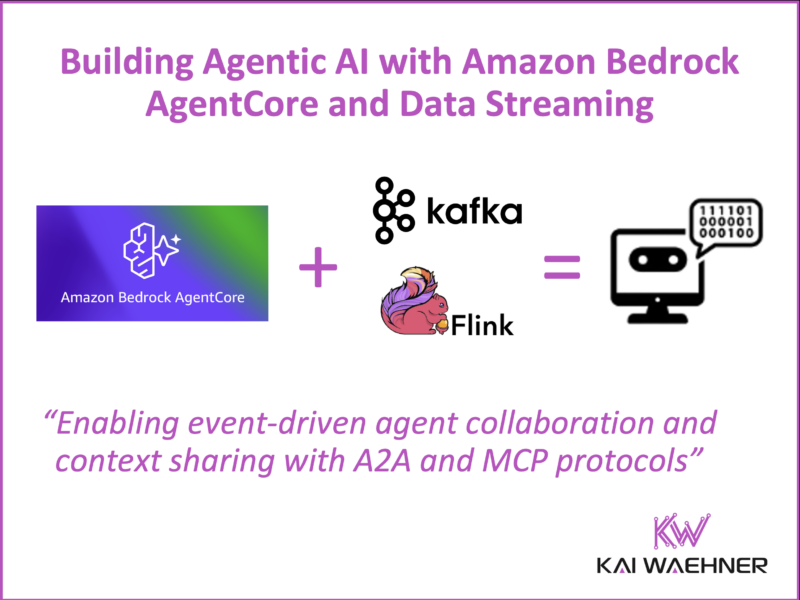

The AI journey for many started with chatbots. But agentic AI goes far beyond that. These are autonomous systems that observe, reason, and act—continuously and in real time. At the AWS Summit New York 2025, Amazon introduced Bedrock AgentCore, a new foundation for building and operating enterprise-grade agents. It’s a major step forward. But models and agents alone aren’t enough.

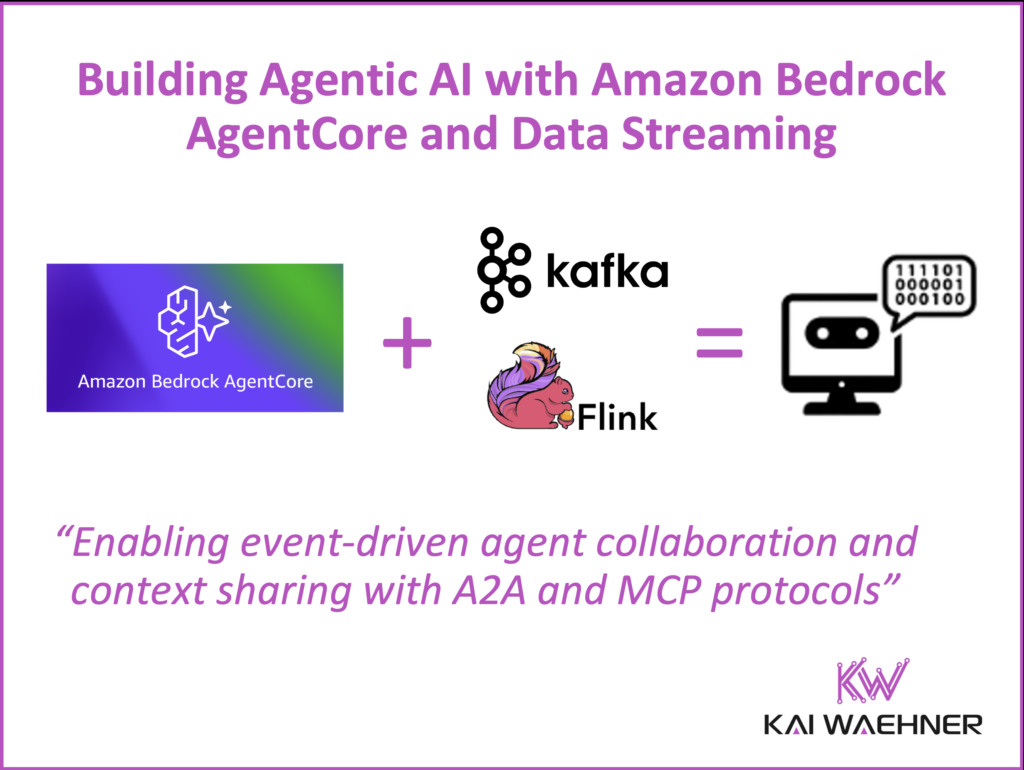

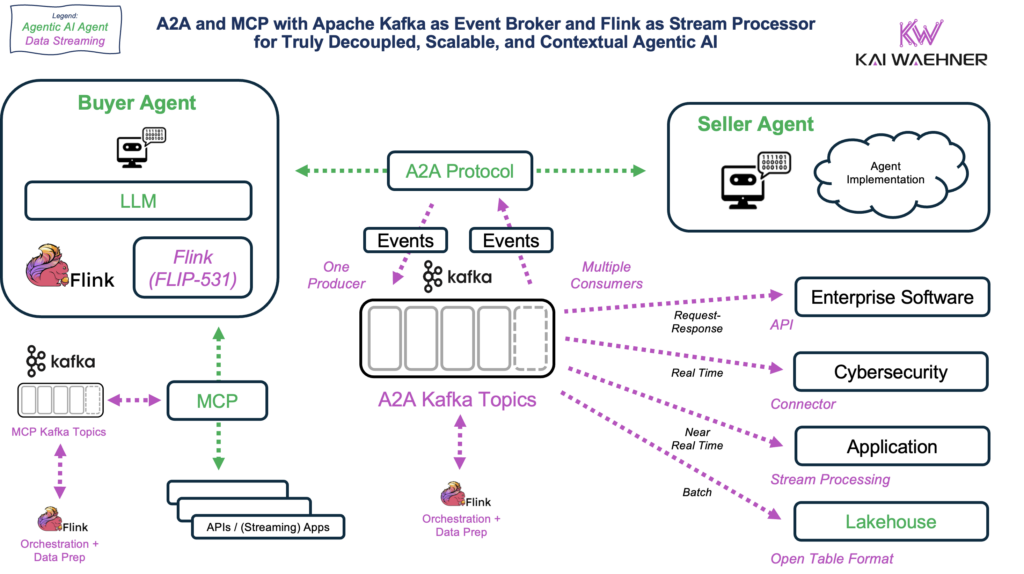

To succeed in production, agents need real-time data, asynchronous communication, and scalable integration across systems. That’s where data streaming with Apache Kafka and Apache Flink comes in. Paired with Amazon Bedrock AgentCore and using standard protocols like MCP and A2A, they provide the event-driven architecture needed for true agentic automation.

This post explores how these technologies work together to enable intelligent, always-on agents in real enterprise environments.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. And make sure to download my free book about data streaming use cases, including various AI examples across industries.

From Chatbots to Autonomous Agents: The Rise of Agentic AI

Agentic AI is no longer just an experimental technology. It’s evolving into a practical foundation for building intelligent, autonomous, and collaborative systems in production. These agents go far beyond chatbots. They reason, plan, and take actions independently across business systems—continuously and in real time.

At the AWS Summit New York 2025, Amazon introduced several major innovations to support this vision. The most prominent is Amazon Bedrock AgentCore. This is not just another platform for developers. It is a full suite of services for deploying secure, scalable AI agents across industries.

Amazon Bedrock AgentCore: AWS Strategy for Enterprise AI Agents

AgentCore is designed to bridge the gap between AI prototypes and scalable production agents. It includes core services such as:

- Secure runtime for long-lived, asynchronous workloads

- Memory components for context-aware decision-making

- Identity and access controls for agent interactions

- Gateway tools to turn existing APIs into agent-compatible interfaces

- Code execution and browser tools for dynamic tasks

- Observability features powered by Amazon CloudWatch

These features enable agents to work reliably across internal tools, external services, and dynamic data flows.

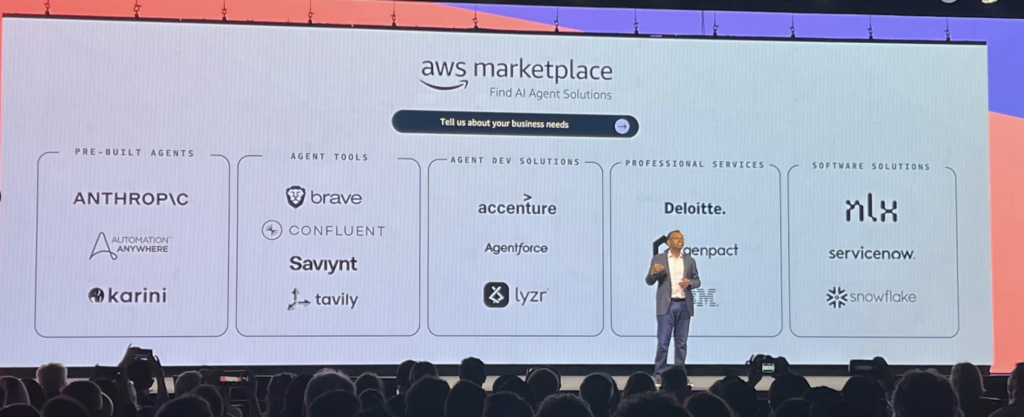

AWS highlighted a diverse mix of partners leading the charge in this new era, including:

- Anthropic – Pre-built agents (LLMs)

- ServiceNow – Customer support SaaS

- Deloitte and Accenture – Professional services to build and scale agent systems

And then a surprise to many:

- Confluent – Providing the agent tools powered by real-time data streaming

Why Event-Driven Architecture Matters for Autonomous Agents

Autonomous agents are NOT request-response tools. They are event-driven and reactive by nature.

Agents need to monitor signals across a system, evaluate conditions, and take action without waiting for a human prompt. This requires a continuous stream of data, not periodic updates.

Apache Kafka: Event Broker for Decoupling and Asynchronous Communication

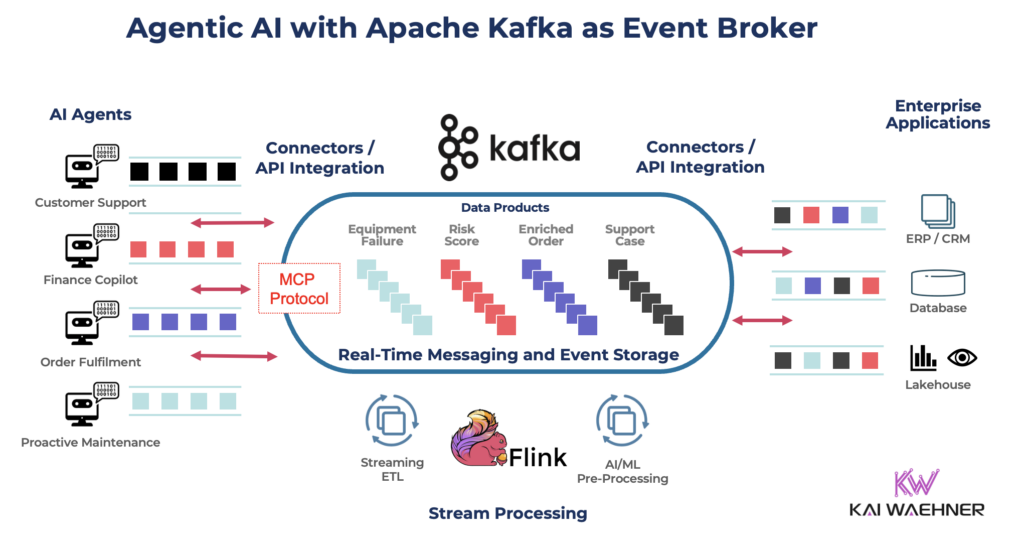

Apache Kafka plays a key role here. It serves as the event broker that connects agents running on Amazon Bedrock with the rest of the enterprise. Kafka provides a shared event backbone across departments, applications, and even partner ecosystems.

With Apache Kafka, agents can:

- Subscribe to business events in real time

- Maintain shared state across distributed systems

- Publish updates and decisions asynchronously

- React to changes with minimal latency

Apache Kafka replaces fragile point-to-point APIs with a scalable, decoupled architecture. It ensures every part of the system stays in sync, even as new tools, services, and agents are added.
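To make the decoupling idea concrete, here is a minimal in-memory sketch of the pattern. It is not Kafka and does not use a Kafka client. The class `InMemoryLog`, its methods, and the topic and group names are hypothetical and exist only for illustration. The sketch assumes an append-only log per topic and one offset per consumer group, which is the core mechanic that lets several agents read the same events independently and replay them later.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any


@dataclass
class InMemoryLog:
    """Toy stand-in for an event broker: append-only topics plus per-group offsets."""

    topics: dict[str, list[Any]] = field(default_factory=lambda: defaultdict(list))
    offsets: dict[tuple[str, str], int] = field(default_factory=dict)

    def publish(self, topic: str, event: Any) -> int:
        """Append an event to the topic and return its offset."""
        self.topics[topic].append(event)
        return len(self.topics[topic]) - 1

    def poll(self, group: str, topic: str) -> list[Any]:
        """Return the events this group has not read yet and advance its offset."""
        start = self.offsets.get((group, topic), 0)
        events = self.topics[topic][start:]
        self.offsets[(group, topic)] = start + len(events)
        return events

    def seek_to_beginning(self, group: str, topic: str) -> None:
        """Rewind one group so it can replay the topic from the first event."""
        self.offsets[(group, topic)] = 0


log = InMemoryLog()
log.publish("orders", {"order_id": "o-1", "amount": 120})
log.publish("orders", {"order_id": "o-2", "amount": 9500})

# Two independent agents read the same events without knowing about each other.
fraud_events = log.poll("fraud-agent", "orders")
pricing_events = log.poll("pricing-agent", "orders")
```

The producer never calls an agent directly. Adding a third agent means adding a new consumer group, not changing the producer or the existing agents.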

Apache Flink: Powering Always-On Intelligence with Continuous Stream Processing

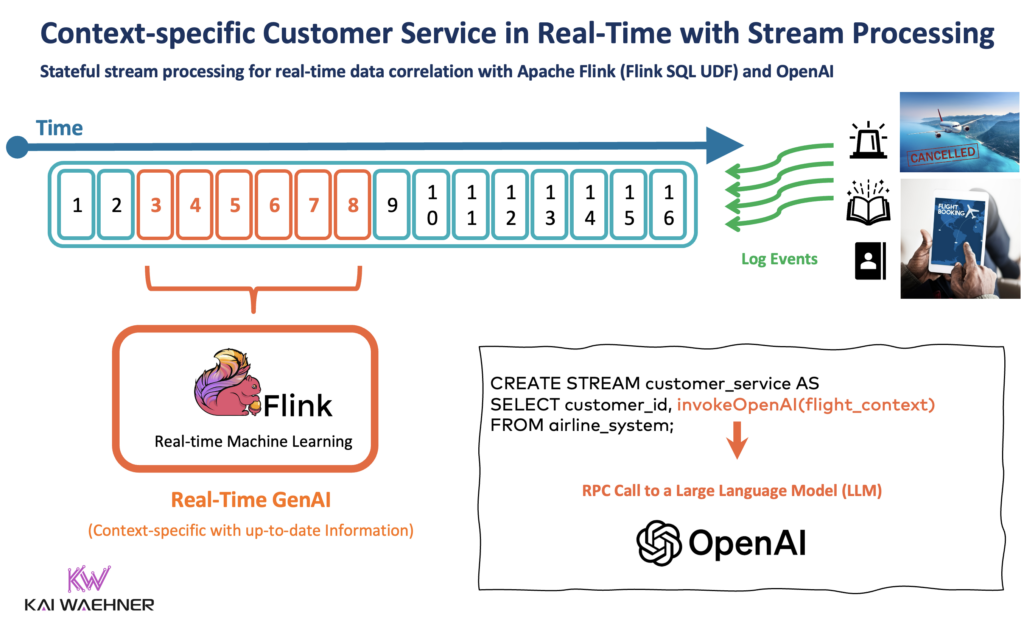

While Kafka connects the system, Apache Flink adds real-time intelligence to the stream.

Many production agents don’t just wait for a prompt. They run constantly in the background, observing patterns, detecting anomalies, and triggering actions. Think of fraud detection, supply chain optimization, or real-time personalization.

One example is using Flink with OpenAI’s LLM. I built such an example a few quarters ago. Amazon Bedrock AgentCore can be integrated in the same way.

Flink enables this by:

- Processing events as they happen

- Maintaining complex application state over time

- Enriching, filtering, and correlating events from multiple sources

- Running business logic and AI models within the data stream

These agents become embedded services, not just LLM wrappers. They act as digital sentinels, always listening and ready to respond – automatically and intelligently.
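The idea of keyed state over an unbounded stream can be sketched in plain Python. This is not Flink code and does not use the Flink API. The `Payment` type, the function `detect_spikes`, and the thresholds are assumptions made for illustration. The sketch keeps a running mean per account and flags a payment that exceeds a multiple of that mean, which is roughly the kind of logic a stateful stream processor would run continuously for fraud detection.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Payment:
    account: str
    amount: float


def detect_spikes(
    events: Iterable[Payment], factor: float = 3.0, min_history: int = 3
) -> Iterator[Payment]:
    """Yield payments that exceed `factor` times the running mean of their account."""
    count: dict[str, int] = defaultdict(int)      # keyed state: events seen per account
    total: dict[str, float] = defaultdict(float)  # keyed state: sum of amounts per account
    for event in events:
        seen = count[event.account]
        # Only judge an account once it has enough history to form a baseline.
        if seen >= min_history and event.amount > factor * (total[event.account] / seen):
            yield event
        # Update the state after the check so the event is compared to past data only.
        count[event.account] += 1
        total[event.account] += event.amount


stream = [
    Payment("a", 10.0), Payment("a", 12.0), Payment("b", 500.0),
    Payment("a", 11.0), Payment("a", 90.0), Payment("b", 510.0),
]
alerts = list(detect_spikes(stream))
```

Because the function is a generator, it processes one event at a time and never needs the full stream in memory. A real deployment would additionally need fault-tolerant state and event-time handling, which this sketch leaves out.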

And with FLIP-531, Apache Flink goes beyond orchestration and data preparation in Agentic AI environments. It now provides a native runtime to build, run, and manage autonomous AI agents at scale, with support for the A2A and MCP standard protocols.

Standardized Agent Communication: MCP and A2A

For agentic AI to scale, systems need a common language.

Two new protocols are addressing this:

- Model Context Protocol (MCP) – from Anthropic but widely adopted by many model and service providers across the AI community. MCP standardizes how agents receive inputs, maintain context, and interact with tools. It abstracts away the implementation details of each model or tool.

- Agent2Agent (A2A) – from Google (and handed over to the Linux Foundation). A2A defines how autonomous agents communicate with each other, delegate tasks, and share results without hardcoded integrations.

An event-driven architecture with data streaming powered by Apache Kafka and Flink provides the perfect foundation for both protocols.

Instead of synchronous APIs, agents communicate through Kafka topics—publishing and subscribing to structured events defined by MCP or A2A. This model is fully decoupled, highly scalable, and easy to monitor.

Kafka topics act as a data substrate between agents and systems using MCP and A2A, which are otherwise stateless protocols. Without Kafka, replayability and traceability aren’t possible, so interoperability in production becomes infeasible. Kafka allows enterprises to mix and match implementations across languages, clouds, and teams without breaking downstream workflows.
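As a rough illustration of a structured event carrying a protocol message, the sketch below builds a JSON-RPC 2.0 request in the shape MCP uses for `tools/call` and wraps it in an event envelope. The envelope fields (`reply_topic`, `payload`), the topic name, and the `lookup_order` tool are assumptions for illustration only. Carrying MCP messages over Kafka topics is a design choice of this sketch, not a transport defined by the MCP specification.

```python
import json
import uuid
from typing import Any, Callable


def mcp_tool_call(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for `tools/call`."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def to_event(message: dict[str, Any], reply_topic: str) -> bytes:
    """Wrap the message in a hypothetical envelope and serialize it for a topic."""
    envelope = {"reply_topic": reply_topic, "payload": message}
    return json.dumps(envelope).encode("utf-8")


def handle_event(raw: bytes, tools: dict[str, Callable[..., str]]) -> tuple[str, bytes]:
    """Consume one request event, run the tool, and return (reply_topic, response event)."""
    envelope = json.loads(raw)
    request = envelope["payload"]
    params = request["params"]
    text = tools[params["name"]](**params["arguments"])
    response = {
        "jsonrpc": "2.0",
        "id": request["id"],  # the same id lets the caller correlate the async reply
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }
    return envelope["reply_topic"], json.dumps(response).encode("utf-8")


# Hypothetical tool registry on the responding agent's side.
tools = {"lookup_order": lambda order_id: f"order {order_id} shipped"}

request = mcp_tool_call("lookup_order", {"order_id": "o-1"})
reply_topic, reply = handle_event(to_event(request, "agent.replies"), tools)
```

The request `id` doubles as a correlation key. Since the caller and the responder only share topics, either side can be replaced or scaled without the other noticing, and the stored events can be inspected later for tracing.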

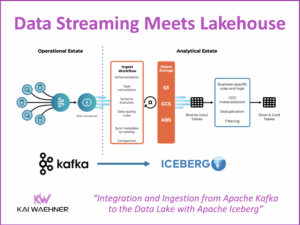

Confluent Cloud: Serverless Real-Time Data and Application Integration at Enterprise Scale

AWS supports a broad ecosystem of tools for AI. But Confluent is critical for one key reason: real-time integration combined with an event-driven architecture for true decoupling and flexibility.

With Confluent Cloud providing serverless Kafka and Flink, organizations can:

- Stream data between Amazon Bedrock AgentCore and operational systems like Oracle, ServiceNow, SAP, Salesforce, and so on

- Build asynchronous agents directly on the data streaming platform using Flink

- Ensure data consistency, reliability, and observability at scale

- Integrate both SaaS tools and on-premises systems into the same event-driven fabric

This transforms Confluent from a data pipeline into an agent platform—not just supporting agents, but enabling the creation of new ones.

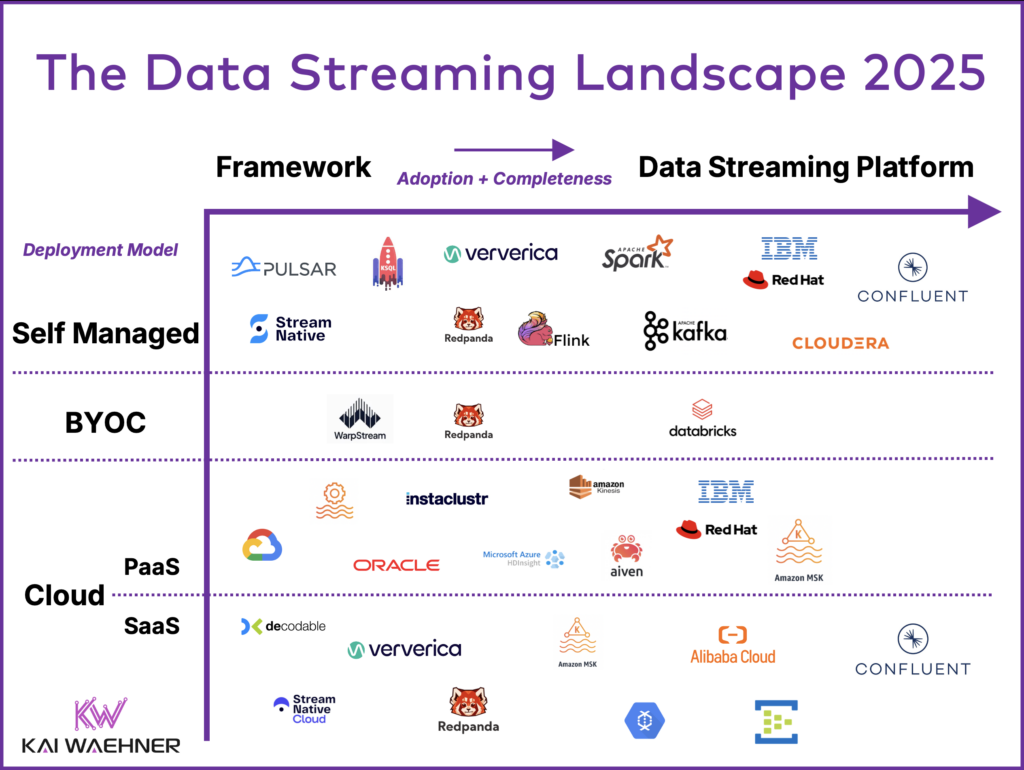

Obviously, many other frameworks, solutions, and vendors play a role in the data streaming landscape (but Confluent is the clear leader).

Don’t just take my word for it (I work at Confluent). Do your own research, evaluation, and cost analysis. Look at Forrester Waves, technical comparisons, and independent benchmarks. Just make sure to look at the total cost of ownership (TCO). And think about time to market, too.

Amazon Bedrock AgentCore + Confluent Cloud = Foundation for Production-Grade Agentic AI

Agentic AI signals a new era of enterprise automation where intelligent software doesn’t just respond to prompts, but observes, reasons, and acts autonomously across systems.

To move from prototype to production, enterprises need:

- A secure and composable platform like Amazon Bedrock AgentCore

- A real-time event backbone with Apache Kafka

- Embedded, always-on intelligence via Apache Flink

- Open protocols like MCP and A2A for cross-agent collaboration (for example, between a Bedrock AgentCore agent and a Flink agent)

- Seamless integration with core systems through event streaming, not brittle request-response APIs

Together, AWS and Confluent provide this foundation. Bedrock AgentCore delivers the platform for building enterprise-grade agents. Confluent Cloud provides the real-time, event-driven architecture to connect those agents with tools, data, and systems.

And importantly, Confluent runs wherever your business runs. Whether you’re all-in on AWS, operating in Azure, Google Cloud, Alibaba Cloud, on-premises, at the edge, or across a hybrid architecture, Confluent’s data streaming platform offers consistent deployment, management, and security.

The agentic future will be real-time, decoupled, and distributed.

And it runs on events.
