Agentic AI is changing how enterprises think about automation and intelligence. Agents are no longer reactive systems. They are goal-driven, context-aware, and capable of autonomous decision-making. But to operate effectively, agents must be connected to the real-time pulse of the business. This is where data streaming with Apache Kafka and Apache Flink becomes essential.

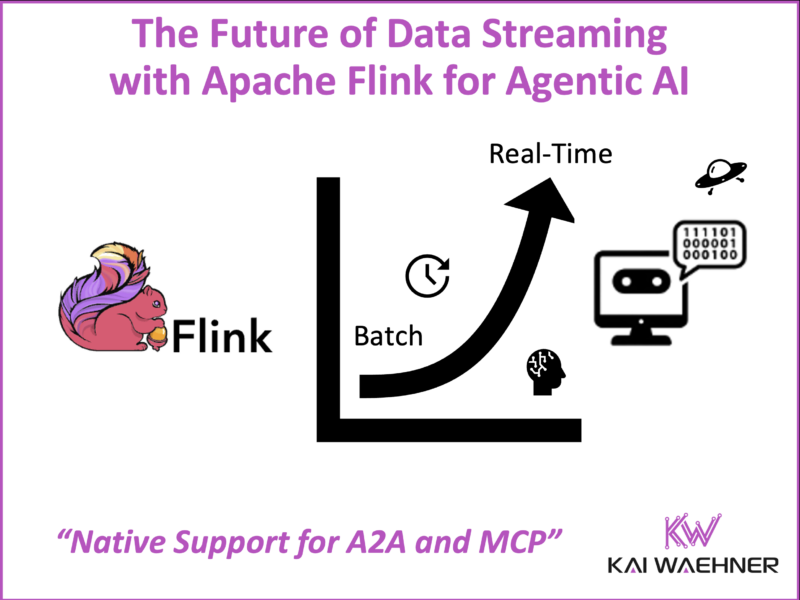

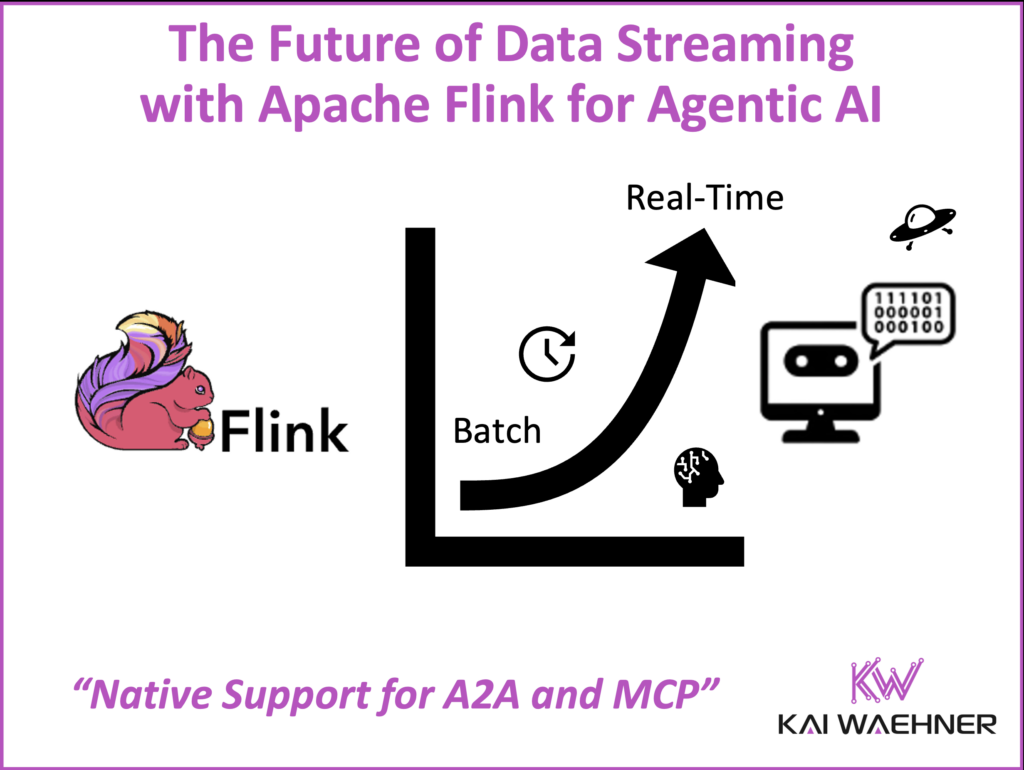

Apache Flink is entering a new phase with the proposal of Flink Agents, a sub-project designed to power system-triggered, event-driven AI agents natively within Flink’s streaming runtime. Let’s explore what this means for the future of agentic systems in the enterprise.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. And make sure to download my free book about data streaming use cases, including various real-world examples of AI-related topics like fraud detection and generative AI for customer service.

The State of Agentic AI

Agentic AI is no longer experimental. It is still at an early stage of the adoption lifecycle but is starting to move into production for the first critical use cases.

Agents today are expected to:

- Make real-time decisions

- Maintain memory across interactions

- Use tools autonomously

- Collaborate with other agents

But these goals face real infrastructure challenges. Existing frameworks like LangChain or LlamaIndex are great for prototyping. But on their own, without the help of other tools, they are not designed for long-running, system-triggered workflows that need high availability, fault tolerance, and deep integration with enterprise data systems.

The real problem is integration. Agents must operate on live data, interact with tools and models, and work across systems. This complexity demands a new kind of runtime: one that is real-time, event-driven, and deeply contextual.

Open Standards and Protocols for Agentic AI: MCP and A2A

Standards are emerging to build scalable, interoperable AI agents. Two of the most important are:

- Model Context Protocol (MCP) by Anthropic: A standardized interface for agents to access context, use tools, and generate responses. It abstracts how models interact with their environment, enabling plug-and-play workflows.

- Agent2Agent (A2A) protocol by Google: A protocol for communication between autonomous agents. It defines how agents discover each other, exchange messages, and collaborate asynchronously.
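To make the MCP side more concrete: MCP messages are JSON-RPC 2.0, and an agent invokes a tool with a `tools/call` request that carries the tool `name` and its `arguments`. The Python sketch below only builds such a request. It omits the transport and the initialization handshake a real MCP client performs, and the tool name `lookup_order_status` and its argument are hypothetical.

```python
import itertools
import json

# JSON-RPC 2.0 requests carry an id so the response can be matched to the request.
_ids = itertools.count(1)

def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

# Hypothetical tool name and arguments, for illustration only.
msg = mcp_tool_call("lookup_order_status", {"order_id": "A-1001"})
print(msg)
```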

These standards help define what agents do and how they do it. But protocols alone are not enough. Enterprises need a runtime to execute these agent workflows in production—with consistency, scale, and reliability. This is where Flink fits in.

Similar to microservices a decade ago, Agentic AI and protocols like MCP and A2A risk creating tight coupling and point-to-point spaghetti architectures if used in isolation. An event-driven data streaming backbone ensures these standards deliver scalable, resilient, and governed agent ecosystems instead of repeating past mistakes.
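A back-of-the-envelope count shows why point-to-point integration turns into spaghetti. Assuming, for illustration, that each of n agents must be able to reach every other agent directly, the number of links grows quadratically, whereas a shared event backbone needs only one connection per agent:

```latex
L_{\text{p2p}}(n) = \binom{n}{2} = \frac{n(n-1)}{2},
\qquad
L_{\text{backbone}}(n) = n
```

For n = 10 agents that is 45 links versus 10; for n = 50 it is 1,225 links versus 50.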

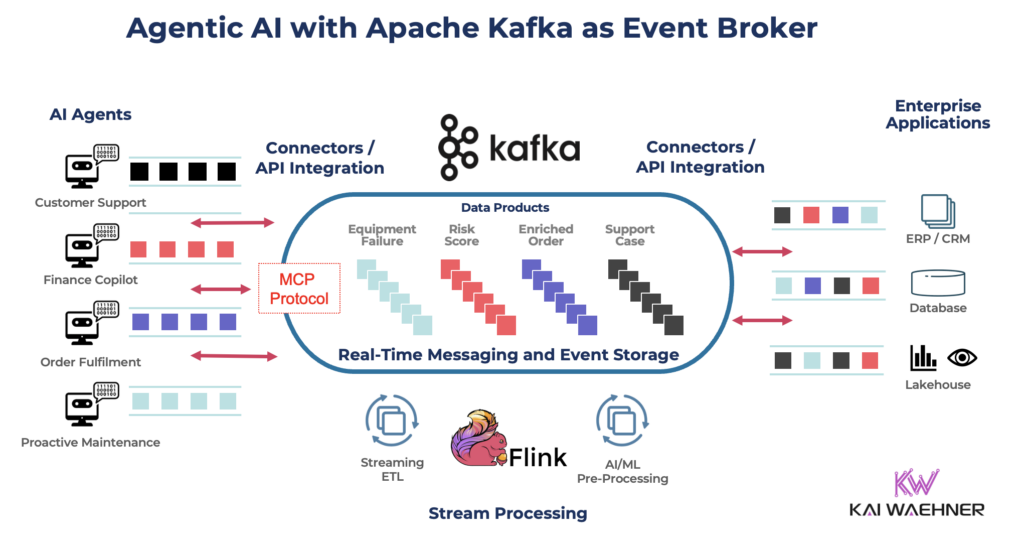

The Role of Data Streaming for Agentic AI with Kafka and Flink

Apache Kafka and Flink together form the event-driven backbone for Agentic AI.

- Apache Kafka provides durable, replayable, real-time event streams. It decouples producers and consumers, making it ideal for asynchronous agent communication and shared context.

- Apache Flink provides low-latency, fault-tolerant stream processing. It enables real-time analytics, contextual enrichment, complex event processing, and now—agent execution.

Agentic AI requires real-time data ingestion to ensure agents can react instantly to changes as they happen. It also depends on stateful processing to maintain memory across interactions and decision points. Coordination between agents is essential so that tasks can be delegated, results can be shared, and workflows can be composed dynamically. Finally, seamless integration with tools, models, and APIs allows agents to gather context, take action, and extend their capabilities within complex enterprise environments.

Apache Flink provides all of these natively. Instead of stitching together multiple tools, Flink can host the entire agentic workflow:

- Ingest event streams from Kafka

- Enrich and process data with Flink’s Table and DataStream APIs

- Trigger LLMs or external tools via UDFs

- Maintain agent memory with Flink state

- Enable agent-to-agent messaging using Kafka or Flink’s internal mechanisms
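The following plain-Python simulation walks through those steps for a single agent. It is not Flink code and uses no Flink API: the loop stands in for a Kafka source, the `memory` dictionary stands in for Flink keyed state, `call_model` is a deterministic stand-in for an LLM or tool invoked from a UDF, and all names (`Event`, `AgentMemory`, `enrich`, `run_agent`) are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class Event:
    customer_id: str
    text: str

@dataclass
class AgentMemory:
    # Per-key memory, analogous to what Flink keyed state would hold for one key.
    history: list = field(default_factory=list)

def enrich(event: Event, profiles: dict) -> dict:
    # Enrichment step: join the event with reference data (here, a customer tier).
    return {"event": event, "tier": profiles.get(event.customer_id, "standard")}

def call_model(prompt: str) -> str:
    # Stand-in for an LLM or tool call made from a UDF; deterministic for the demo.
    return "escalate" if "refund" in prompt.lower() else "auto_reply"

def run_agent(events, profiles):
    memory = defaultdict(AgentMemory)  # keyed by customer_id
    actions = []
    for event in events:  # in Flink, a Kafka source would deliver these
        ctx = enrich(event, profiles)
        mem = memory[event.customer_id]
        mem.history.append(event.text)  # update the agent's memory for this key
        prompt = f"tier={ctx['tier']} history={mem.history}"
        decision = call_model(prompt)
        actions.append((event.customer_id, decision))  # would go to a sink topic
    return actions, memory

events = [
    Event("c1", "Where is my order?"),
    Event("c2", "I want a refund"),
    Event("c2", "Any update?"),
]
actions, memory = run_agent(events, {"c2": "gold"})
print(actions)  # [('c1', 'auto_reply'), ('c2', 'escalate'), ('c2', 'escalate')]
```

Because memory is kept per customer, the second message from `c2` is still escalated even though it does not mention a refund. In a real Flink job, this state would be checkpointed and restored after a failure.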

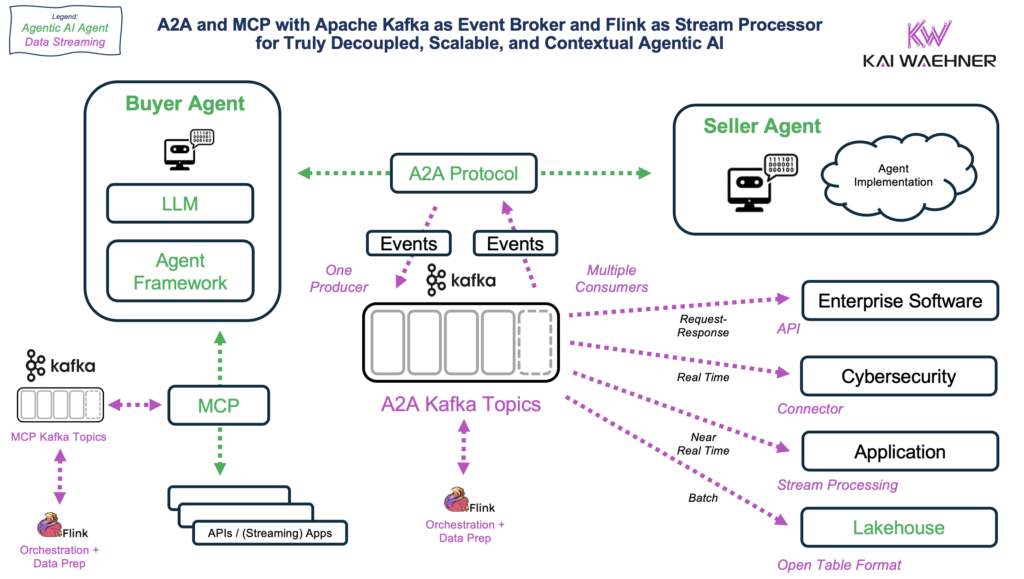

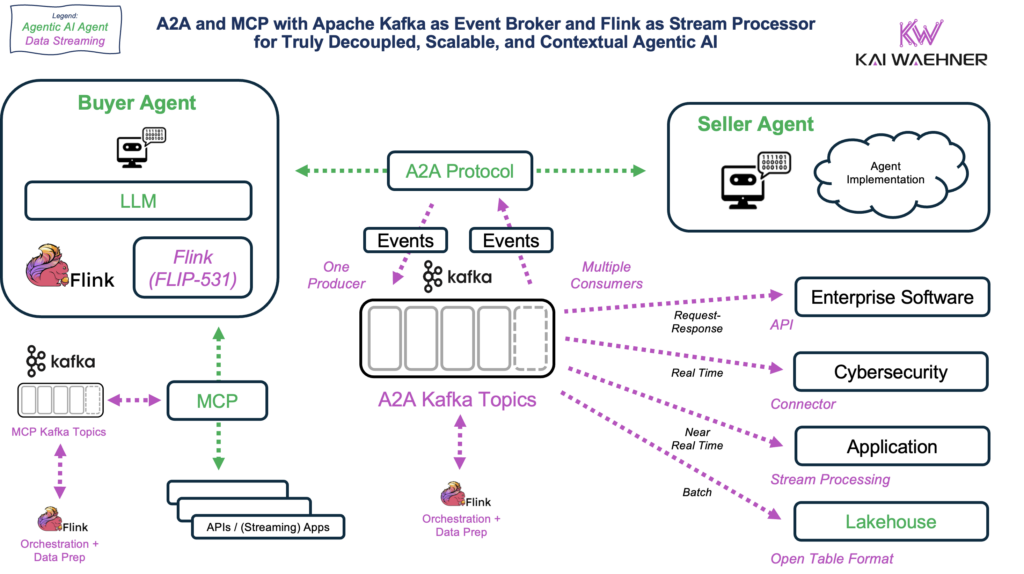

A2A and MCP with Apache Kafka and Flink

A2A and MCP define how autonomous agents communicate and access context. With Kafka as the event broker and Flink as the stream processor, enterprises can build scalable, decoupled, and context-aware Agentic AI systems.

Agents can still communicate point-to-point with each other via protocols like A2A. But their information must often reach many systems, and multiple point-to-point links create brittle architectures. Kafka solves this by acting as the scalable, decoupled event backbone.

More details: Agentic AI with the Agent2Agent Protocol (A2A) and MCP using Apache Kafka as Event Broker.
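A tiny in-memory model of a topic illustrates this decoupling. It is a sketch, not Kafka: one partition, no retention, no persistence, and the `Topic` class is hypothetical. The producer appends to a log without knowing who reads it, each consumer group tracks its own offset, and a group that joins late can still read the full history.

```python
from collections import defaultdict

class Topic:
    """Minimal in-memory stand-in for a single-partition Kafka topic."""

    def __init__(self):
        self.log = []                     # append-only event log
        self.offsets = defaultdict(int)   # next offset to read, per consumer group

    def produce(self, record):
        self.log.append(record)
        return len(self.log) - 1          # offset of the new record

    def poll(self, group):
        # Return everything this group has not read yet, then advance its offset.
        start = self.offsets[group]
        records = self.log[start:]
        self.offsets[group] = len(self.log)
        return records

topic = Topic()
topic.produce({"agent": "pricing", "result": "discount_approved"})
billing = topic.poll("billing-agent")
topic.produce({"agent": "pricing", "result": "discount_revoked"})
analytics = topic.poll("analytics")        # joined late, still sees both records
billing_next = topic.poll("billing-agent") # only the record it has not seen yet
```

Adding another consuming agent here means adding a consumer group, not another point-to-point link from the producer.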

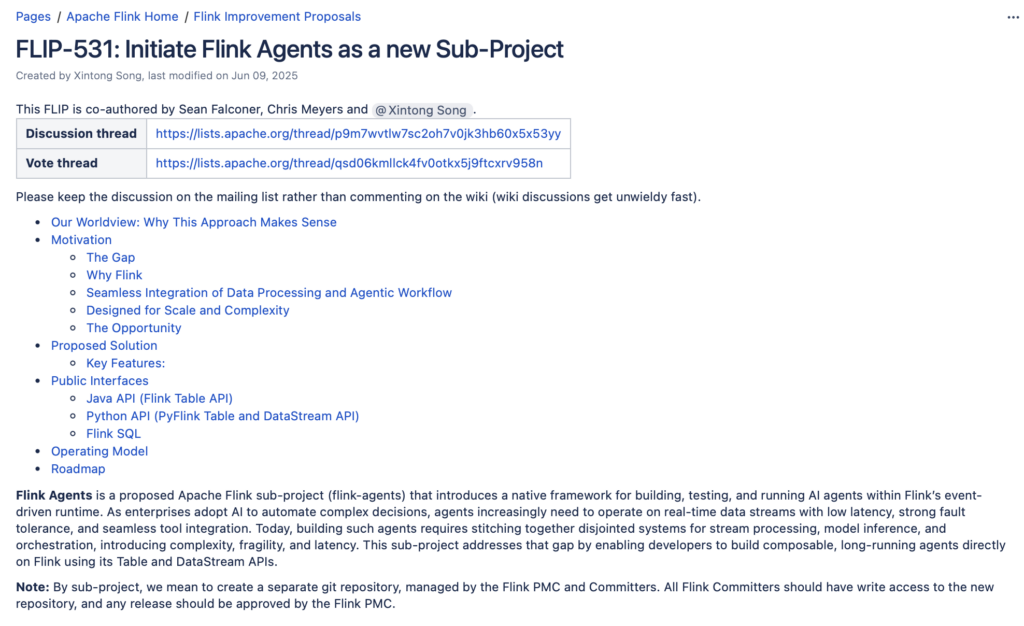

FLIP-531: Building and Running AI Agents in Flink

While such architectures can already be implemented today, Flink still needs more built-in support for standard protocols like MCP and A2A, along with native AI and ML capabilities, to fully meet the demands of enterprise-grade agentic systems.

FLIP-531: Initiate Flink Agents as a new Sub-Project is an exciting “Flink Improvement Proposal” led by Xintong Song, Sean Falconer, and Chris Meyers. It introduces a native framework for building and running AI agents within Flink.

Key Objectives

- Provide an execution framework for event-driven, long-running agents

- Integrate with LLMs, tools, and context providers via MCP

- Support agent-to-agent communication (A2A)

- Leverage Flink’s state management as agent memory

- Enable replayability for testing and auditing

- Offer familiar Java, Python, and SQL APIs for agent development
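One way to think about replayability: if each model response is logged alongside the input events, re-running the agent over the log reproduces the same decisions even though the live LLM is non-deterministic. The sketch below assumes exactly that design; it is a hypothetical illustration, and FLIP-531 may realize replay differently.

```python
import random

def live_model(prompt: str) -> str:
    # Stand-in for a non-deterministic LLM call.
    return random.choice(["approve", "review"])

def process(events, model, recorded=None):
    """Run agent decisions; when `recorded` is given, reuse logged model outputs."""
    state = {"approved": 0}
    decisions = []
    for i, event in enumerate(events):
        if recorded is not None:
            answer = recorded[i]               # replay: reuse the logged response
        else:
            answer = model(f"assess {event}")  # live run: call the model
        if answer == "approve":
            state["approved"] += 1
        decisions.append(answer)
    return decisions, state

events = ["txn-1", "txn-2", "txn-3"]
original, original_state = process(events, live_model)
replayed, replayed_state = process(events, live_model, recorded=original)
assert replayed == original and replayed_state == original_state
```

Replaying against the live model instead of the recorded responses could yield different decisions, which is why logging the outputs matters for testing and auditing.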

With FLIP-531, Apache Flink goes beyond orchestration and data preparation in Agentic AI environments. It now provides a native runtime to build, run, and manage autonomous AI agents at scale.

Developer Experience

Flink Agents will extend familiar Flink constructs. Developers will be able to define agents using upcoming extensions to Flink’s Table API or DataStream API. They can connect to large language model (LLM) endpoints, register models, call tools, and manage context, all from within Flink.

Sample APIs are already available for Java, Python (PyFlink), and SQL. These include support for:

- Agent workflows with tools and prompts

- UDF-based tool invocation

- Integration with MCP and external model providers

- Stateful agent logic and multi-step workflows
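The FLIP's sample APIs are not reproduced here. Instead, the following hypothetical Python sketch shows the general shape of a multi-step agent workflow with tool invocation, in the ReAct style of alternating actions and observations. `TOOLS`, `scripted_model`, and `react_loop` are illustrative names, and the scripted model replaces a real LLM so the example is deterministic. It requires Python 3.9+ for `str.removeprefix`.

```python
from typing import Callable, Dict

# Hypothetical tool registry; in Flink Agents, tools might be UDFs or MCP tools.
TOOLS: Dict[str, Callable[[str], str]] = {
    "inventory": lambda sku: "3" if sku == "SKU-42" else "0",
}

def scripted_model(prompt: str) -> str:
    # Stand-in for an LLM: requests a tool first, then answers from the observation.
    if "Observation:" not in prompt:
        return "Action: inventory[SKU-42]"
    count = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Final: {count} units in stock"

def react_loop(task: str, model, tools, max_steps: int = 5) -> str:
    prompt = f"Task: {task}"
    for _ in range(max_steps):
        reply = model(prompt)
        if reply.startswith("Final:"):
            return reply.removeprefix("Final:").strip()
        # Parse "Action: tool[input]" and invoke the named tool.
        name, arg = reply.removeprefix("Action:").strip().rstrip("]").split("[", 1)
        observation = tools[name](arg)
        prompt += f"\n{reply}\nObservation: {observation}"
    raise RuntimeError("agent did not finish within max_steps")

print(react_loop("How many SKU-42 are in stock?", scripted_model, TOOLS))  # 3 units in stock
```

The `max_steps` bound keeps a misbehaving model from looping forever, a concern that matters even more for long-running agents.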

Roadmap Milestones

The Flink Agents project is moving fast with a clear roadmap focused on rapid delivery and community-driven development:

- Q2 2025: MVP design finalized

- Q3 2025: MVP with model support, replayability, and tool invocation

- Q4 2025: Multi-agent communication and example agents

- Late 2025: First formal release and community expansion

The team is prioritizing execution and fast iteration, with a GitHub-based development model and lightweight governance to accelerate innovation.

Event-Driven Flink Agents: The Future of Always-On Intelligence

The most impactful agents in the enterprise aren’t chatbots or assistants waiting for user input. They are always-on components, embedded in infrastructure, continuously observing and acting on real-time business events.

Apache Flink Agents are built for this model. Instead of waiting for a request, these agents run asynchronously as part of an event-driven architecture. They monitor streams of data, maintain memory, and trigger actions automatically—similar to observability agents, but for decision-making and automation.

This always-on approach is critical for modern use cases. Enterprises can’t afford delays in fraud detection, equipment failures, customer engagement, or supply chain response. Agents must act instantly—based on the data flowing through the system—not hours later via batch processing or human intervention.

Apache Flink provides the ideal foundation. Its low-latency, stateful stream processing enables agents to:

- Observe and react to real-time signals from events, APIs, databases, or external SaaS requests

- Maintain state across workflows and business processes

- Collaborate asynchronously

- Trigger tools, request-response APIs, or downstream actions
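As a concrete example of an always-on agent, here is a plain-Python sketch of a fraud-style monitor that keeps per-card state across events and triggers an action when a card is used more than three times within 60 seconds. The thresholds and the `monitor` function are hypothetical. It assumes events arrive in timestamp order; a Flink job would instead use keyed state, timers, and watermarks to handle out-of-order events.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # illustrative threshold values (assumptions)
MAX_TXNS = 3

def monitor(events):
    """Yield an action whenever a card has more than MAX_TXNS events in the window."""
    recent = defaultdict(deque)  # per-card timestamps, analogous to keyed state
    for ts, card in events:      # events assumed ordered by timestamp
        window = recent[card]
        window.append(ts)
        # Drop timestamps that fall outside (ts - WINDOW_SECONDS, ts].
        while window and window[0] <= ts - WINDOW_SECONDS:
            window.popleft()
        if len(window) > MAX_TXNS:
            yield ("block_card", card, ts)

stream = [(0, "A"), (10, "A"), (20, "B"), (30, "A"), (45, "A"), (100, "A")]
print(list(monitor(stream)))  # [('block_card', 'A', 45)]
```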

These aren’t chatbot wrappers—they’re autonomous services embedded in production. They function as part of your business nervous system, adapting in real time to changing conditions and continuously improving outcomes.

This architecture is what enterprises truly need: automation that is fast, reliable, and context-aware. It reduces time to detect and resolve issues, improves SLA adherence, and enables proactive decisions across the organization.

Always-on, embedded agents are the future of AI in business. Apache Flink is ready to power them. Let’s explore a few excellent use case examples across different industries in the next section.

Use Cases for Agentic AI with Apache Kafka and Flink Across Industries

Agentic AI use cases are emerging across industries. These systems demand real-time responsiveness, contextual intelligence, and full autonomy. Traditional REST APIs, manual orchestration, and batch jobs fall short in these environments. Instead, enterprises need infrastructure that is continuous, stateful, event-driven, and always-on, as discussed in the previous section.

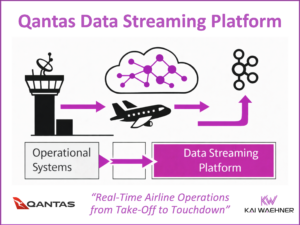

Apache Kafka and Apache Flink already power the data backbone of many digital-native organizations. For example:

- OpenAI uses Kafka and Flink to build the infrastructure behind its GenAI and Agentic AI offerings.

- TikTok relies on Flink and Kafka for its real-time recommendation engine and online model training architecture.

With Flink Agents, developers can build:

- Always-on and ReAct-style agents with structured workflows

- Retrieval-augmented agents with semantic search

- Long-lived stateful agents with memory

- Fully autonomous systems with tool and API access

Here are some examples where Flink Agents will shine:

Finance

- Fraud detection and risk scoring

- Compliance monitoring

- Adaptive trading systems with feedback loops

Manufacturing

- Predictive maintenance for industrial equipment

- Smart factory optimization

- Supply chain agents managing demand and inventory

Retail

- Real-time product tagging and catalog enrichment

- Personalized promotion based on customer behavior

- Inventory rebalancing and logistics agents

Healthcare

- Patient monitoring and alerting

- Claims processing and document triage

- Compliance auditing

Telecommunications

- Self-healing networks

- Customer support automation with feedback loops

- Dynamic QoS optimization

Gaming

- Adaptive AI opponents that respond to player behavior

- Dynamic content generation for evolving game environments

- Real-time moderation for abuse and cheating detection

Public Sector

- Traffic and energy optimization in a smart city

- Automated citizen service assistants

- Public safety agents for emergency detection and response

The Future of Agentic AI is Event-Driven

The rise of Agentic AI means a shift in infrastructure priorities.

It’s not enough to invest in model quality or prompt engineering. Enterprises must also modernize their data and execution layer.

Point-to-point communication between agents is fine for direct interaction, but at scale the real value comes from an event-driven backbone like Kafka and Flink that ensures information reliably reaches all required systems.

Flink Agents aims to offer a production-grade, enterprise-ready foundation for agentic systems. It is designed to turn brittle demos into reliable applications by providing:

- Consistent real-time data via Kafka

- Stateful, fault-tolerant execution via Flink

- Standardized protocols via MCP and A2A

- Developer productivity via familiar APIs

This combination reduces time-to-market, increases system resilience, and lowers operational costs. It gives developers and architects the tools to build agents like real software: scalable, testable, and observable.

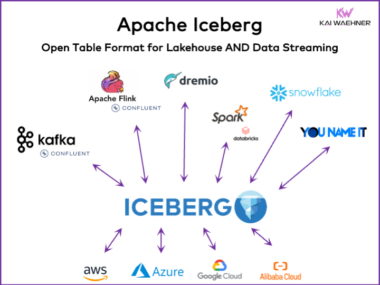

This shift is NOT a replacement for data lakes, lakehouses, or AI platforms. It complements them by enabling real-time, event-driven execution alongside batch and analytical workloads.

To explore how streaming and AI platforms work together in practice, check out my Confluent + Databricks blog series, which highlights the value of combining Kafka, Flink, and lakehouse architectures for modern AI use cases.

The future of Agentic AI is event-driven. Apache Flink is ready to power it.
