Telecom is at a turning point. Networks are becoming software-defined, customer expectations are rising, and AI is moving from pilot projects into large-scale operations. The TM Forum Innovate Americas event in Dallas brought these trends into sharp focus. Leaders from AT&T, Verizon, and many others shared how AI is driving growth, how autonomous networks are moving from vision to execution, and how data streaming underpins it all. This article captures the highlights of the event, explores the key themes shaping the telecom industry, and explains why data streaming is becoming the central nervous system of modern telcos.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. You can also download my free book about data streaming use cases, which includes a dedicated chapter about the telco industry.

TM Forum and the Innovate Americas Event

TM Forum is a global non-profit association. It has shaped the telecom industry since the 1980s. Its members include service providers, vendors, and academic partners. The Forum is known for its collaborative model. It creates standards, frameworks, and APIs while encouraging open knowledge sharing across the ecosystem.

Innovate Americas continued this tradition in September 2025 in Dallas, Texas. The event brought together leading telcos, vendors, and innovators. Sessions were highly interactive, often with multiple speakers or panel discussions. This format encouraged dialogue, not just presentations.

I was on site in Dallas with a few colleagues to meet customers, prospects, partners, and the broader telecom community. We had great conversations and learned a lot.

Two themes dominated: AI as a growth engine and the vision of autonomous networks. Both depend on one essential capability: data streaming. Real-time, governed data flowing across systems enables AI to act with context and networks to operate with intelligence.

AI Trends in Telco

TM Forum CEO Nik Willetts explored the path to successful AI adoption in the telecom sector:

- From data chaos to data product catalogs: turn scattered data into structured, reusable assets.

- From fragmented technology to agentic AI and ODA: build networks and systems that are open, composable, and intelligent.

- From security risks to governance and a common language: ensure trust, compliance, and collaboration across the ecosystem.

- From talent gaps to culture and common pathways: equip people and teams to adopt AI responsibly and at scale.

What Stood Out on Stage: AT&T, Verizon, and More Industry Leaders

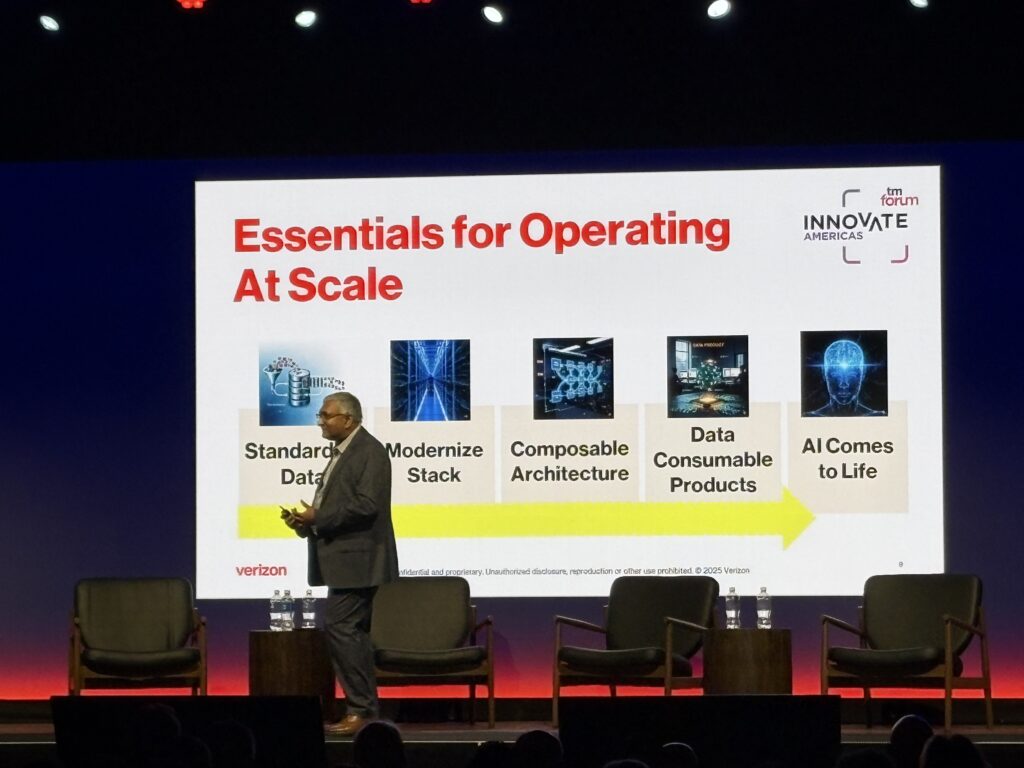

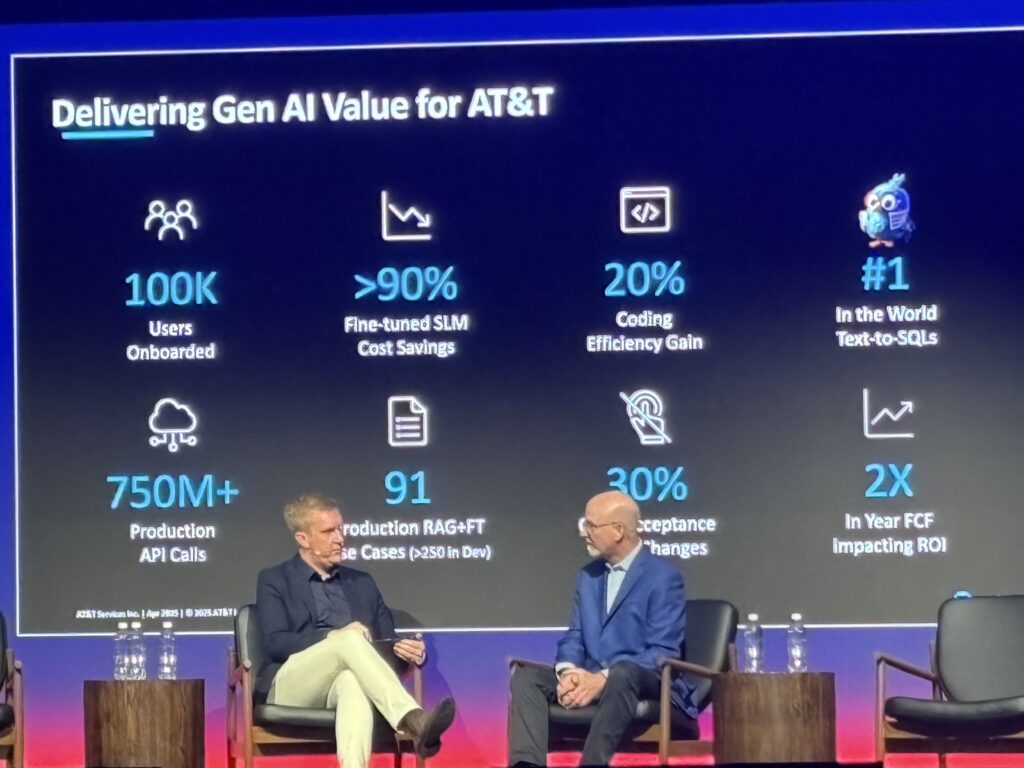

The keynotes made one thing clear: AI at scale is real in telecom. AT&T, Verizon, and Lumen showed how large models and real-time analytics are reshaping pricing, customer experience, and security. Verizon highlighted AI for network planning, while AT&T focused on monetization and data-driven growth.

Autonomous networks were another strong theme. Sessions with AT&T, AWS, and Tech Mahindra explained how to move from automated processes to fully agent-driven, self-optimizing operations. The message was practical: stream telemetry, detect anomalies, trigger AI agents, and scale step by step.
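The loop described above (stream telemetry, detect anomalies, trigger an agent) can be sketched in a few lines. This is a minimal illustration, not how any speaker's system works: the rolling z-score rule, the `detect_anomalies` and `trigger_agent` names, and the sample latency values are all assumptions made for the example. In production the readings would arrive from a stream such as a Kafka topic rather than a Python list.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far outside the recent rolling window."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip the check when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
                continue  # keep the outlier out of the baseline window
        recent.append(value)


def trigger_agent(index, value):
    """Hypothetical hook: a real system would hand the event to an AI agent."""
    return f"agent triggered for reading {index}: latency={value}ms"


# Hypothetical latency telemetry in milliseconds with one obvious spike.
latency_ms = [20, 21, 19, 22, 20, 21, 95, 20, 19, 21]
actions = [trigger_agent(i, v) for i, v in detect_anomalies(latency_ms)]
print(actions)
```

Because the detector is a generator, it processes one reading at a time and never needs the full history, which is the property that lets this kind of logic run continuously on a stream.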

Security also stood out. Panelists discussed AI-powered defense for telco-specific threats like signaling storms and bot-driven fraud. The common factor was streaming analytics: live data enables faster detection and automated action.

Vendors Driving Innovation: Telco Specialists, Horizontal Platforms, and Global System Integrators

Telco-specific providers like Amdocs, Netcracker, Nokia, and Ericsson showed blueprints for autonomous operations and digital twins. Emerging players such as Resolve, Ribbon, and Grokstream shared automation and assurance use cases.

At the same time, horizontal platforms are gaining ground. Snowflake, Databricks, Pure Storage, Red Hat, NVIDIA, and Dell presented data and AI infrastructure now being applied to telco workloads. These vendors bring scale and cloud-native agility, while telcos adapt them for OSS, BSS, and NOC needs.

Why Data Streaming Matters

All of these stories depend on continuous data in motion. Telemetry from networks, events from OSS/BSS, and customer signals all need to flow in real time. A data streaming platform like Confluent connects these sources, applies governance, and feeds AI models and agents with fresh context. Without it, AI and autonomous networks remain PowerPoint slides.

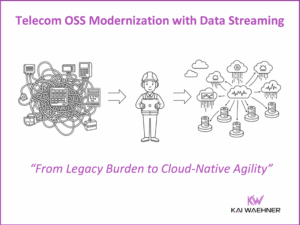

I explored in a deep dive how telcos can change: “Telecom OSS Modernization with Data Streaming: From Legacy Burden to Cloud-Native Agility”.

Data Streaming in Telco: The “Next Gen TIBCO”

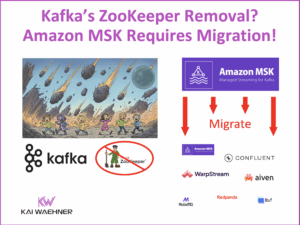

For many telecom professionals, the simplest way to explain data streaming with Confluent or other vendors is as the “next-generation TIBCO.” Most telcos have relied on TIBCO for event-driven architecture, real-time messaging, and integration over the past two decades.

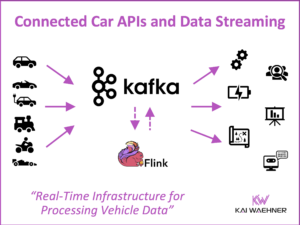

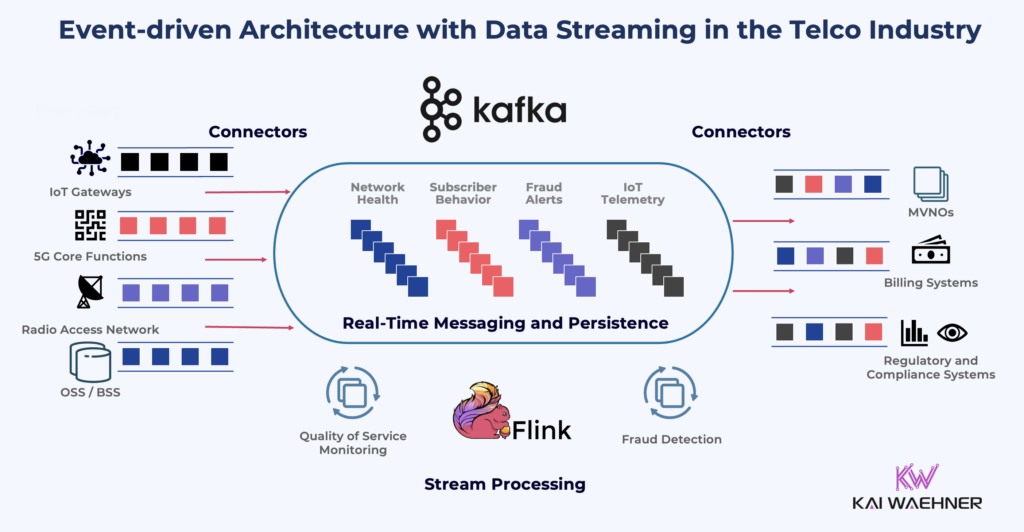

Data streaming with Apache Kafka and Flink provides the same foundation: messaging, integration, and stream processing. The difference is that a data streaming platform runs at much larger scale, handling gigabytes per second of data, and supports cloud-native and hybrid multi-cloud environments.

Key benefits of data streaming compared to TIBCO and other legacy middleware in the telco space:

- Open source core with an enterprise-grade platform and/or cloud service around it

- Supported by a wide ecosystem of vendors, not just one provider

- Scales across both operational and analytical workloads

- Includes governance, lineage, and security by design

- Focus on an event log as the persistence layer and on continuous stateless and stateful stream processing, not only publish/subscribe messaging
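The last point is the least intuitive for teams coming from classic messaging, so here is a toy sketch of the idea. It is not Kafka's API: `EventLog`, `Consumer`, and the dropped-call events are hypothetical names and data chosen for illustration, and a real platform adds partitioning, replication, and retention on top.

```python
from collections import defaultdict


class EventLog:
    """Toy append-only log: events are kept after they are read."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read(self, offset):
        """Return all events from the given offset onward."""
        return self._events[offset:]


class Consumer:
    """Tracks its own offset, so each consumer reads independently."""

    def __init__(self, log, offset=0):
        self.log = log
        self.offset = offset

    def poll(self):
        events = self.log.read(self.offset)
        self.offset += len(events)
        return events


log = EventLog()
for cell, dropped in [("cell-1", 2), ("cell-2", 0), ("cell-1", 3)]:
    log.append({"cell": cell, "dropped_calls": dropped})

# Stateless processing: each event is filtered on its own.
alerts = [e for e in Consumer(log).poll() if e["dropped_calls"] > 0]

# Stateful processing: a running total per cell is kept across events.
totals = defaultdict(int)
for e in Consumer(log).poll():
    totals[e["cell"]] += e["dropped_calls"]

# A consumer that starts later can still replay the full history.
late = Consumer(log)
replayed = late.poll()
```

In a pure publish/subscribe broker, a subscriber that connects late would typically miss the earlier messages. Here the late consumer simply starts at offset 0 and replays them, which is what makes the log usable as a persistence layer.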

With these capabilities, data streaming with the de facto standard Apache Kafka has become the central nervous system of modern telcos.

Telcos can connect existing TIBCO systems to a data streaming platform or migrate step by step. Many are already in the process, while some have fully shut down TIBCO because it did not scale, was not cloud native, and lacked both SaaS options and a clear product vision.

Key Trends in the Telecom Industry and the Role of Data Streaming

The discussions at TM Forum Innovate Americas highlighted four major trends shaping the telecom industry, each of them relying on real-time data streaming as a critical enabler.

AI as a Growth Engine

AI is no longer experimental. Verizon and AT&T showcased how AI drives customer engagement, network optimization, and new revenue models. But the success of AI depends on fresh, high-quality data. A data streaming platform delivers this data in real time. It connects systems across the telco landscape, ensures quality and lineage, and feeds AI models continuously. Without an event-driven architecture and data streaming, AI works with stale snapshots and loses business value. Data streaming powers many AI workloads, whether the use case is mainframe modernization or a cutting-edge agentic AI scenario.

Open Digital Architecture (ODA)

ODA is about interoperability. It breaks down silos and allows telcos to expose and consume services consistently. Data streaming acts as the event-driven fabric beneath ODA. It integrates legacy and modern systems, moves data across domains, and supports APIs with real-time streams. This way, telcos can modernize without shutting down their existing operations.

Cybersecurity in the Age of AI

Telcos face rising cyber threats, from DDoS attacks to targeted strikes on IoT, edge, and network slicing. AI helps detect anomalies faster, but only if it can analyze live data. Data streaming provides this live feed, enabling early detection and automated defense. For enterprises and the public sector, real-time security monitoring powered by streaming platforms is already becoming a requirement. I explored this topic in an entire blog series about data streaming as the backbone for cybersecurity.
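As a rough illustration of detection on live data, the sketch below flags a source that sends too many events within a sliding time window, the kind of rule that could sit in front of a signaling storm or bot-driven fraud response. The `RateDetector` class, the thresholds, and the device names are assumptions for this example. It also assumes timestamps arrive in order, which a real stream processor would not take for granted.

```python
from collections import defaultdict, deque


class RateDetector:
    """Flags sources that exceed max_events within a sliding time window."""

    def __init__(self, window_seconds=10, max_events=3):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self._seen = defaultdict(deque)  # source -> recent timestamps

    def observe(self, source, timestamp):
        """Record one event; return True if the source is over the limit."""
        times = self._seen[source]
        times.append(timestamp)
        # Drop timestamps that fall outside (timestamp - window, timestamp].
        while times and times[0] <= timestamp - self.window_seconds:
            times.popleft()
        return len(times) > self.max_events


# Hypothetical signaling events as (source, timestamp in seconds).
events = [
    ("dev-a", 0), ("dev-b", 1), ("dev-a", 2), ("dev-a", 3),
    ("dev-a", 4), ("dev-b", 20), ("dev-a", 30),
]

detector = RateDetector(window_seconds=10, max_events=3)
flagged = [(src, ts) for src, ts in events if detector.observe(src, ts)]
print(flagged)
```

The detector decides at the moment each event arrives, so an automated defense can react while the burst is still in progress instead of after a batch report.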

Autonomous Networks and Agentic AI

The vision is clear: networks that configure, heal, and optimize themselves. Autonomous networks rely on AI agents that make decisions in real time. Streaming data is the lifeblood of these agents. It ensures they operate on current conditions, not outdated reports. To reach this vision, telcos must modernize. Event-driven microservice architectures supported by a data streaming platform allow them to innovate while keeping core services running. A great starting point to learn more: “How Data Streaming with Apache Kafka and Flink Power Event-Driven Agentic AI in Real Time”.

Real-World Telco Success Stories with Data Streaming

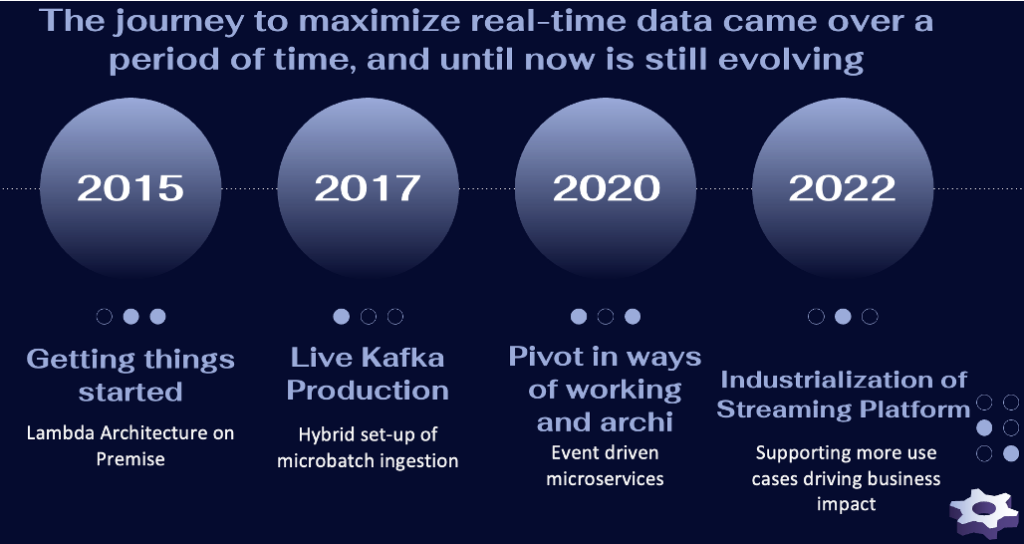

Telcos around the world are at different stages of adopting data streaming. Some are building greenfield 5G networks, others are modernizing decades-old systems, and many are focusing on customer experience. A few concrete success stories show how this shift is already delivering business value.

EchoStar / Dish Network (United States)

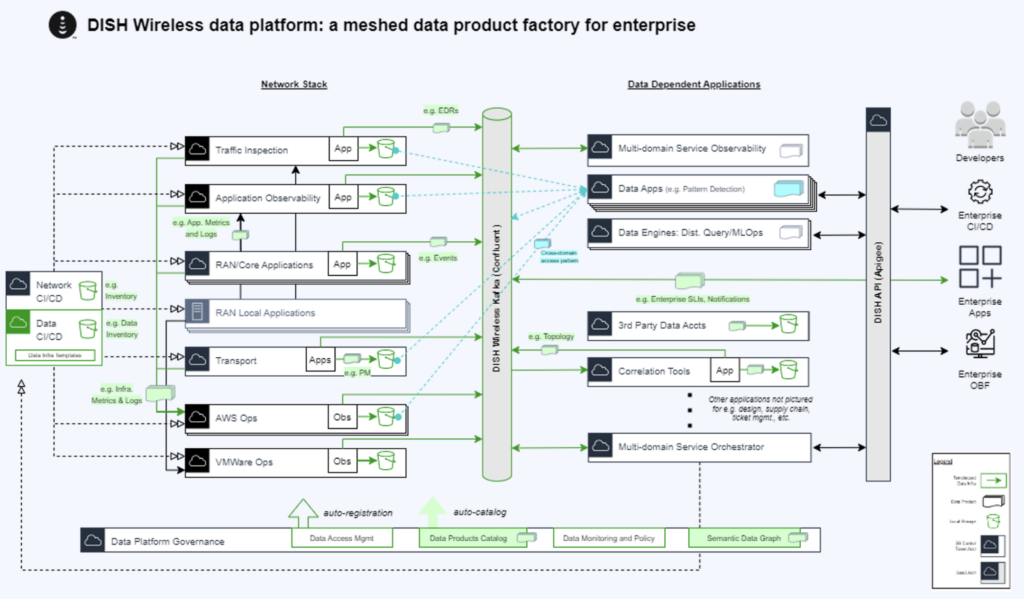

Dish built the first fully cloud-native 5G network in the US. From the start, data streaming was a core architectural choice. Confluent enables Dish to handle network events, device telemetry, and operational data in real time. This gives the company flexibility to scale rapidly and introduce new services such as IoT connectivity and on-demand slicing. Because streaming is central, Dish can integrate with partners and cloud platforms more easily than traditional telcos locked into legacy OSS and BSS stacks.

British Telecom (Europe)

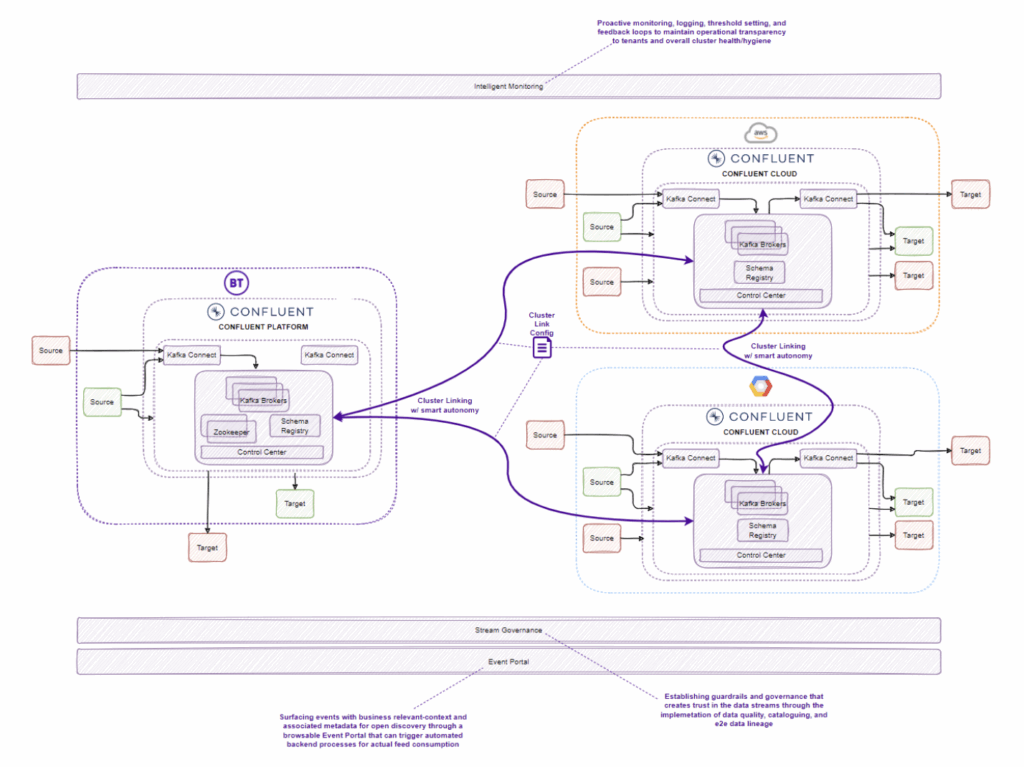

British Telecom (BT) faces the challenge of modernizing a large, established telco. The company is moving toward an event-driven architecture that allows it to decouple legacy systems and speed up new service delivery. Kafka-based streaming pipelines connect OSS, BSS, and customer-facing applications. This provides a real-time backbone for both operational data and analytics. With this hybrid multi-cloud architecture, BT can introduce new products faster, simplify IT integration, and reduce the cost and risk of modernizing brownfield environments.

Globe Telecom (Asia)

Globe Telecom uses data streaming to improve customer experience across digital and mobile services. By processing events in real time, Globe can personalize offers, detect issues, and respond to customer needs instantly. For example, when network problems occur, streaming pipelines feed monitoring systems and trigger automated actions. When customers interact with mobile apps, event data is processed in real time to tailor promotions and detect fraud. This event-driven approach improves both satisfaction and revenue growth for many telecom organizations.

Outlook: AI, Autonomous Networks, and the Rise of Data Streaming

AI and autonomous networks will dominate telco strategy. Data streaming will become the foundation of both infrastructure and customer-facing innovation.

The Blockers Holding Telcos Back

Despite strong momentum, several challenges slow down adoption of data streaming, AI, and autonomous networks:

- Legacy brownfield systems are difficult to integrate

- Operations cannot risk downtime during modernization

- Skills and culture must evolve to embrace streaming and AI

- Governance and security frameworks need to mature for AI-driven networks

The Path Forward: Balancing Continuity and Innovation

Telcos must balance continuity with innovation. Integrating legacy systems into an event-driven architecture allows modernization without disruption. Deploying a data streaming platform alongside existing systems creates flexibility. Training staff on streaming, event-driven architecture, and AI ensures sustainable progress. Governance must be embedded from the start.

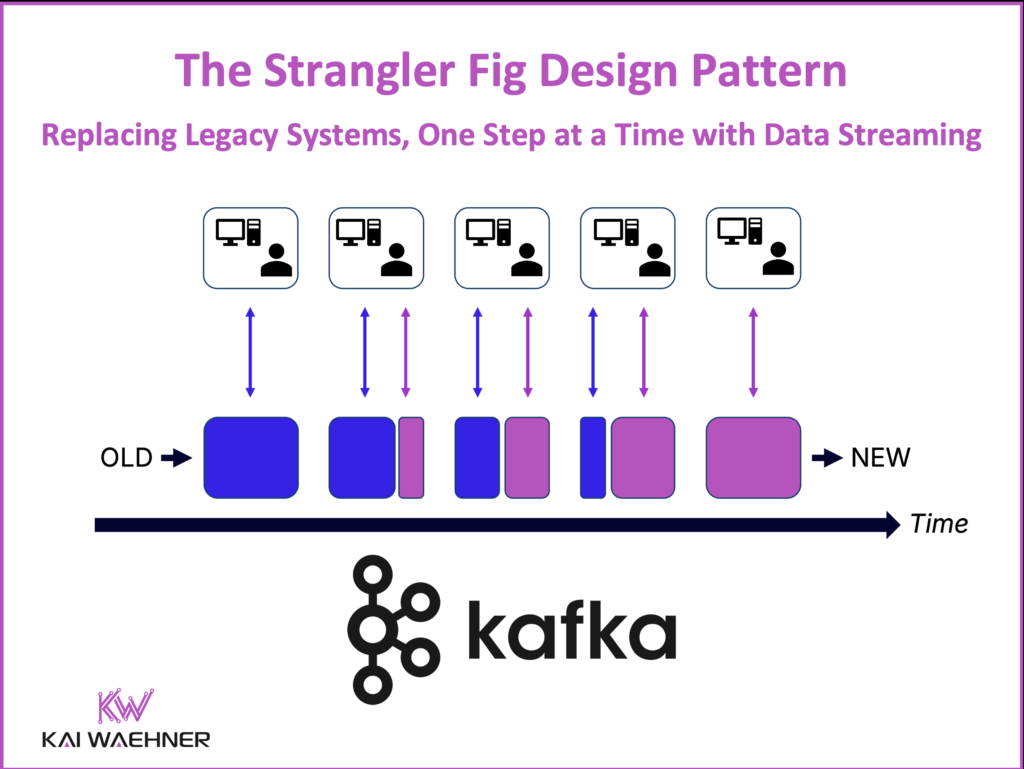

A strangler approach lets telcos replace legacy systems gradually: streaming is first fitted around them, and components are then replaced one at a time.
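One way to picture the strangler approach is a router that sends each event type to the legacy system until that type has been migrated. The sketch below is only an illustration under that assumption: `StranglerRouter`, `legacy_oss`, `streaming_service`, and the event types are hypothetical names, and a real migration would usually do this routing on the streaming platform itself.

```python
class StranglerRouter:
    """Routes events to the legacy handler until their type is migrated."""

    def __init__(self, legacy_handler, modern_handler):
        self.legacy_handler = legacy_handler
        self.modern_handler = modern_handler
        self.migrated = set()  # event types already moved to the new service

    def migrate(self, event_type):
        """Switch one event type over to the modern handler."""
        self.migrated.add(event_type)

    def route(self, event):
        if event["type"] in self.migrated:
            return self.modern_handler(event)
        return self.legacy_handler(event)


def legacy_oss(event):
    """Stand-in for an existing legacy system (hypothetical)."""
    return f"legacy:{event['type']}"


def streaming_service(event):
    """Stand-in for a new event-driven service (hypothetical)."""
    return f"streaming:{event['type']}"


router = StranglerRouter(legacy_oss, streaming_service)
before = router.route({"type": "billing"})

# Migrate one component; everything else keeps using the legacy path.
router.migrate("billing")
after = router.route({"type": "billing"})
untouched = router.route({"type": "provisioning"})
```

Each call to `migrate` moves a single component, so the legacy system keeps serving everything else and the switch can be reversed by removing the type from the migrated set.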

TM Forum Innovate Americas in Dallas confirmed a clear direction. AI is the growth engine, autonomous networks are the vision, and data streaming is the enabler. Without real-time, governed, and scalable data flows, neither AI nor self-managing networks can succeed.

Telcos that embrace this foundation will move faster, protect better, and serve customers in new ways. Those that hesitate will risk falling behind as the industry transforms.
