I had the pleasure of delivering the keynote at OOP 2020 in Munich, Germany. This is a well-known international conference covering topics like agility, architecture, security, programming languages and soft skills. My keynote had the title “The Rise Of Event Streaming – Why Apache Kafka Changes Everything”. Here I share some impressions and details of the talk…

Abstract of the Keynote Presentation

Business digitalization covers trends like microservices, the Internet of Things or Machine Learning. This is driving the need to process events at a whole new scale, speed and efficiency. Traditional solutions like ETL / data integration or messaging are not built to serve these needs.

Today, the open source project Apache Kafka is being used by thousands of companies including over 60% of the Fortune 100. These companies power and innovate their businesses by focusing their data strategies around event-driven architectures leveraging event streaming.

We will discuss the market and technology changes that have given rise to Kafka and to event-driven architectures. The audience learns the key aspects of building a platform for stream processing with Kafka. Examples of production use cases from the automotive, manufacturing and transportation sectors will showcase the power of event streaming.

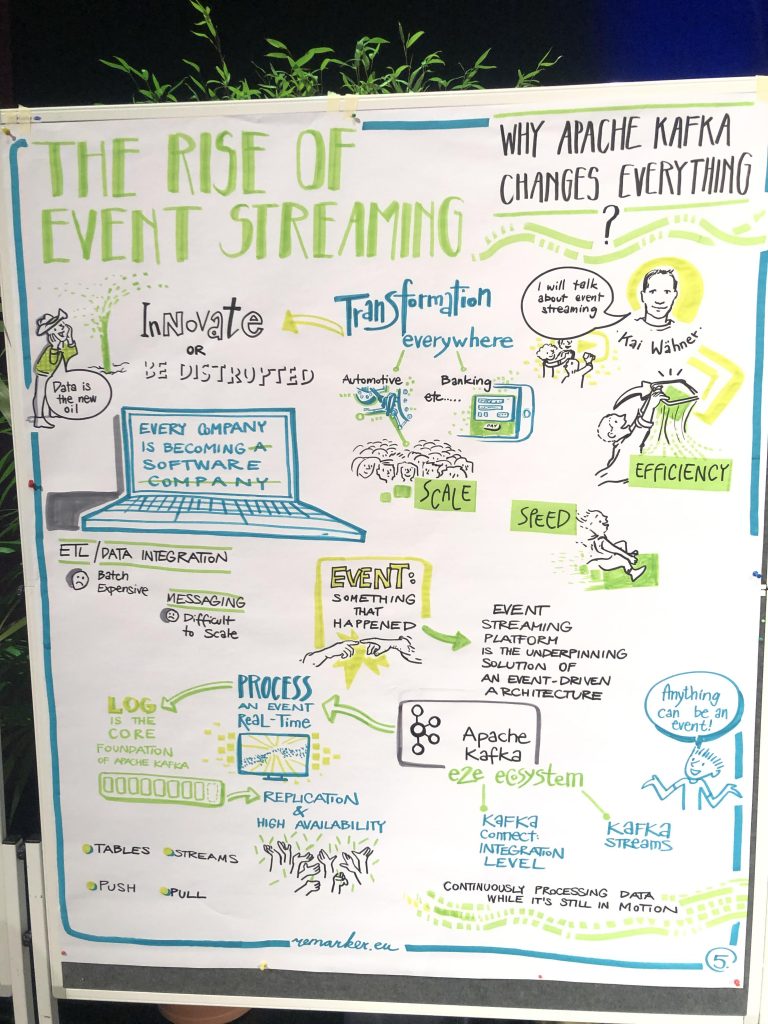

Event Streaming Whiteboard – A Fantastic Live Drawing

My session was drawn live during the presentation by so-called “graphic recorders” from remarker. Here is the impressive outcome:

Slide Deck

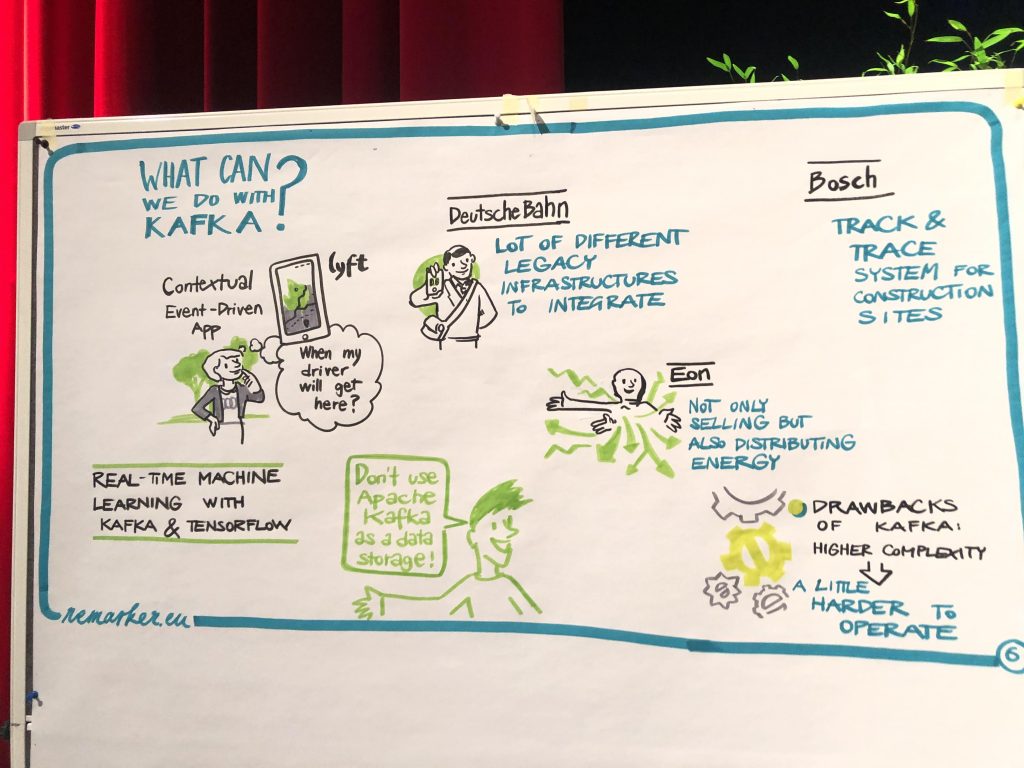

Here is the slide deck of my presentation. It covers:

- the history of Apache Kafka and Event Streaming

- core design principles and architecture of Apache Kafka

- use cases from Lyft, Audi, Deutsche Bahn, Bosch, EON, and more


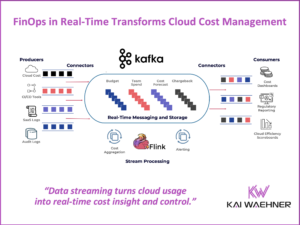

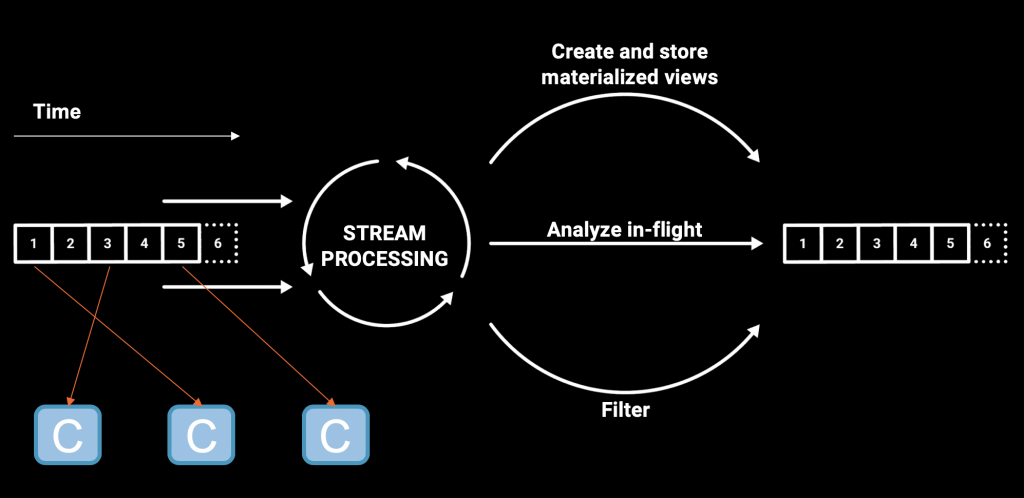

A specific focus of the presentation was on “stream processing”, a core feature and design concept of event streaming platforms. It lets you continuously process massive volumes of data in stateless or stateful applications in real time.
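To make the difference between stateless and stateful processing a bit more concrete, here is a minimal sketch in plain Python. It does not use Kafka, Kafka Streams or KSQL at all; the event shape (`sensor_id`, `temperature`), the function names and the threshold are assumptions invented purely for illustration. A stateless step looks at each event in isolation, while a stateful step keeps something (here a running count per key) across events.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shape for this sketch: (sensor_id, temperature)
Event = Tuple[str, float]


def stateless_filter(events: Iterable[Event], threshold: float) -> Iterator[Event]:
    """Stateless step: each event is judged on its own, nothing is remembered."""
    for sensor_id, temperature in events:
        if temperature > threshold:
            yield sensor_id, temperature


def stateful_count(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Stateful step: a running count per key is kept across events."""
    counts: Dict[str, int] = defaultdict(int)
    for sensor_id, _ in events:
        counts[sensor_id] += 1
        # Emit the updated count every time a new event arrives for that key.
        yield sensor_id, counts[sensor_id]


def demo_stream() -> Iterator[Event]:
    """Stand-in for an unbounded event stream (finite here so the sketch terminates)."""
    yield from [("s1", 71.5), ("s2", 99.0), ("s1", 101.2), ("s1", 105.0)]


# Chain both steps: keep only hot readings, then count them per sensor.
alerts = list(stateful_count(stateless_filter(demo_stream(), threshold=90.0)))
print(alerts)
```

In a real event streaming platform the state would have to be partitioned, persisted and recovered after failures, which is exactly the part a stream processing framework takes care of; the in-memory dictionary above only illustrates the idea.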

You can check out my presentation and video recording about KSQL at Big Data Spain 2018 (and many other resources on the web) for more details about stream processing with the Apache Kafka ecosystem.