Financial services companies need to process data in real time, not just for trading but also for observability, log analytics, and compliance. Diskless Kafka is emerging as a powerful and cost-efficient option for these workloads. By combining the Kafka protocol with cloud-native object storage, it reduces infrastructure costs, improves elasticity, and simplifies operations. This article explores how Robinhood, a leading fintech company, uses Diskless Kafka with WarpStream to power its real-time architecture, optimize log analytics, and scale observability pipelines while significantly reducing cost.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. And make sure to download my free book about data streaming, which includes many use cases and case studies from the financial services sector.

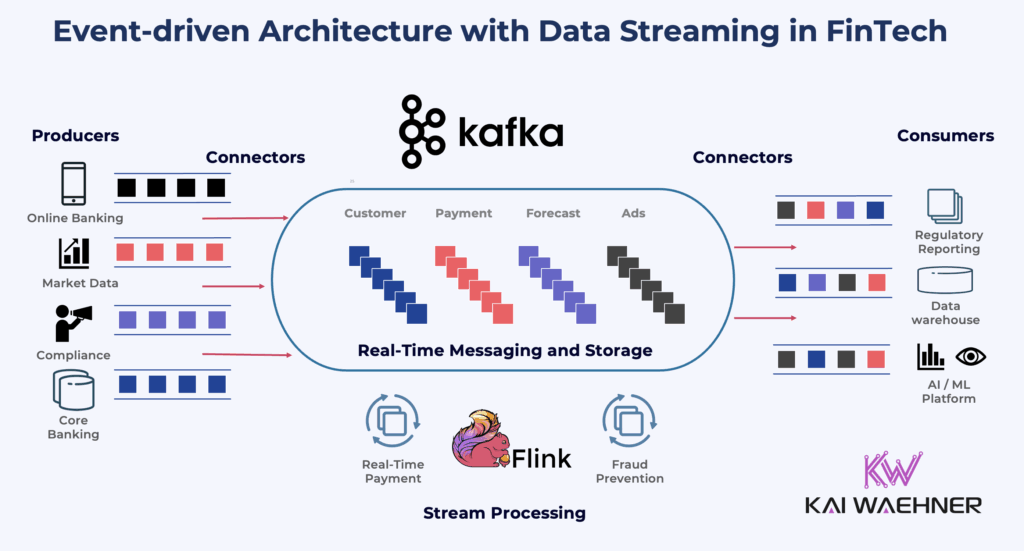

Data Streaming with Apache Kafka and Flink in Financial Services

The financial services industry runs on data. But batch systems are too slow. They introduce delays, increase risk, and limit innovation.

That’s why data streaming is becoming essential. With technologies like Apache Kafka and Apache Flink, firms process events continuously. Not hours or days later.

This enables use cases like:

- Fraud detection and prevention at Capital One

- Regulatory reporting at ING

- Customer analytics at Citigroup

- Mainframe offloading at RBC

These banks and many other financial services organizations use a Data Streaming Platform to move data between systems, process it in motion, and govern it across the enterprise.

The result: faster decisions, lower risk, and better customer experience.

Learn more: How Data Streaming with Apache Kafka and Flink Drives the Top 10 Innovations in FinServ.

Robinhood: The FinTech That Democratizes Finance with Real-Time Data

Robinhood is a fintech company based in the US. Its mission is to “Democratize finance for all.” The company gives retail investors access to stock, crypto, and options trading with zero commission.

Its mobile-first experience disrupted the traditional brokerage model.

Behind the scenes, Robinhood is a real-time business. All trading and pricing flows must complete in under one second. System availability, accuracy, and latency are critical.

Robinhood uses a microservices architecture. Each service is built for a specific task. These services are loosely coupled and communicate over Kafka, which acts as the scalable, reliable event broker.

Robinhood processes more than 10 terabytes of data per day. It serves over 14 million monthly active users.

Data Streaming at Robinhood with Apache Kafka and Flink in the Cloud

Apache Kafka is central to Robinhood’s architecture. It is used for mission-critical systems and analytics.

Every trade order and price update flows through Kafka. This includes:

- Stock trading

- Clearing

- Crypto trading

- Push notifications

Kafka ensures high throughput, low latency, and resilience at scale. Data streaming helps optimize this further, with compliance and governance in mind. After using open source Kafka for some time, Robinhood invested in a stable and scalable future with Confluent’s Data Streaming Platform.

Robinhood also uses Apache Flink for stream processing. It runs more than 250 Flink applications in production.

Key use cases:

- Real-time data ingestion

- Fraud detection

- Shareholder position tracking

- Internal analytics

- Alerting and monitoring

Flink allows Robinhood to analyze and act on data in motion. This supports fast reactions to market events, suspicious behavior, or system failures.

Kafka and Flink together build the foundation for Robinhood’s real-time platform.

Log Analytics and Observability with Diskless Kafka Using WarpStream at Robinhood

Kafka also supports Robinhood’s log analytics and observability pipelines. Application and infrastructure logs were pushed through Apache Kafka and processed with Datadog Vector.

But there was a challenge. Traffic follows market hours. During trading times, logs spike sharply. At night and on weekends, traffic drops.

This meant Robinhood had to overprovision Kafka clusters for peak traffic, even though the resources sat idle much of the time.

To solve this, Robinhood migrated its logging workloads from open-source Kafka to WarpStream. Watch the video recording and review the slides from Robinhood’s presentation at Current New Orleans to learn more.

WarpStream is a Kafka-compatible, diskless streaming platform. It offers elastic scale and stateless infrastructure, optimized for cloud environments.
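To make "Kafka-compatible" concrete, here is a minimal sketch of what compatibility can mean at the client level: the producer configuration stays the same and only the bootstrap endpoint changes. The hostnames, the `producer_config` helper, and the client name are hypothetical and not taken from Robinhood's setup. The property names are standard Kafka client settings. A real migration will likely involve additional tuning.

```python
# Hypothetical endpoints; neither hostname comes from the article.
KAFKA_BOOTSTRAP = "kafka-broker-1.example.internal:9092"
WARPSTREAM_BOOTSTRAP = "warpstream-agent.example.internal:9092"


def producer_config(bootstrap_servers: str) -> dict:
    """Build a producer config using standard Kafka client property names."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "client.id": "log-shipper",   # hypothetical client name
        "compression.type": "lz4",    # standard Kafka producer setting
        "linger.ms": 100,             # batch for up to 100 ms before sending
    }


before = producer_config(KAFKA_BOOTSTRAP)
after = producer_config(WARPSTREAM_BOOTSTRAP)

# Collect the keys whose values differ between the two configurations.
changed = {key for key in before if before[key] != after[key]}
print(changed)
```

In this sketch the only differing key is `bootstrap.servers`. Existing producers and consumers keep speaking the same protocol, and the storage layer behind the endpoint is what changes.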

Results after Robinhood’s migration from open source Apache Kafka to WarpStream:

- 45% total cost reduction

- 99% savings on inter-AZ networking

- 36% lower compute costs

- 13% lower storage costs

The elasticity of WarpStream allowed Robinhood to scale capacity up and down with real workload needs, instead of keeping a static Kafka cluster running all the time.

Logs still follow the same traffic patterns. But now, Robinhood only pays for what it uses. This improves efficiency and makes log analytics more sustainable at scale.
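The difference between provisioning for peak and paying for actual usage can be illustrated with a toy calculation. The sketch below assumes a made-up weekly traffic profile (busy during weekday market hours, quiet otherwise). All numbers and function names are hypothetical, so the result does not correspond to the savings Robinhood reported.

```python
# Hypothetical traffic profile; these numbers are illustrative assumptions,
# not Robinhood's figures.
MARKET_HOURS = range(9, 17)   # assumed busy window on weekdays
PEAK_MBPS = 100.0             # assumed load during market hours
OFF_PEAK_MBPS = 10.0          # assumed load at night and on weekends


def hourly_load(day: int, hour: int) -> float:
    """Return the assumed load for a given day (0=Mon) and hour (0-23)."""
    is_weekday = day < 5
    if is_weekday and hour in MARKET_HOURS:
        return PEAK_MBPS
    return OFF_PEAK_MBPS


def weekly_capacity_hours(elastic: bool) -> float:
    """Sum provisioned capacity (MB/s-hours) over one week.

    Static: capacity is pinned at the weekly peak for every hour.
    Elastic: capacity follows the load hour by hour.
    """
    loads = [hourly_load(d, h) for d in range(7) for h in range(24)]
    if elastic:
        return sum(loads)
    return max(loads) * len(loads)


static = weekly_capacity_hours(elastic=False)
elastic = weekly_capacity_hours(elastic=True)
savings = 1 - elastic / static
print(f"static={static:.0f} elastic={elastic:.0f} savings={savings:.0%}")
```

The gap between the two totals grows as the ratio of peak to off-peak traffic grows, which is why workloads tied to market hours benefit so much from elastic capacity.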

Read more about the migration from open source Apache Kafka to WarpStream (Diskless BYOC) in the related blog post.

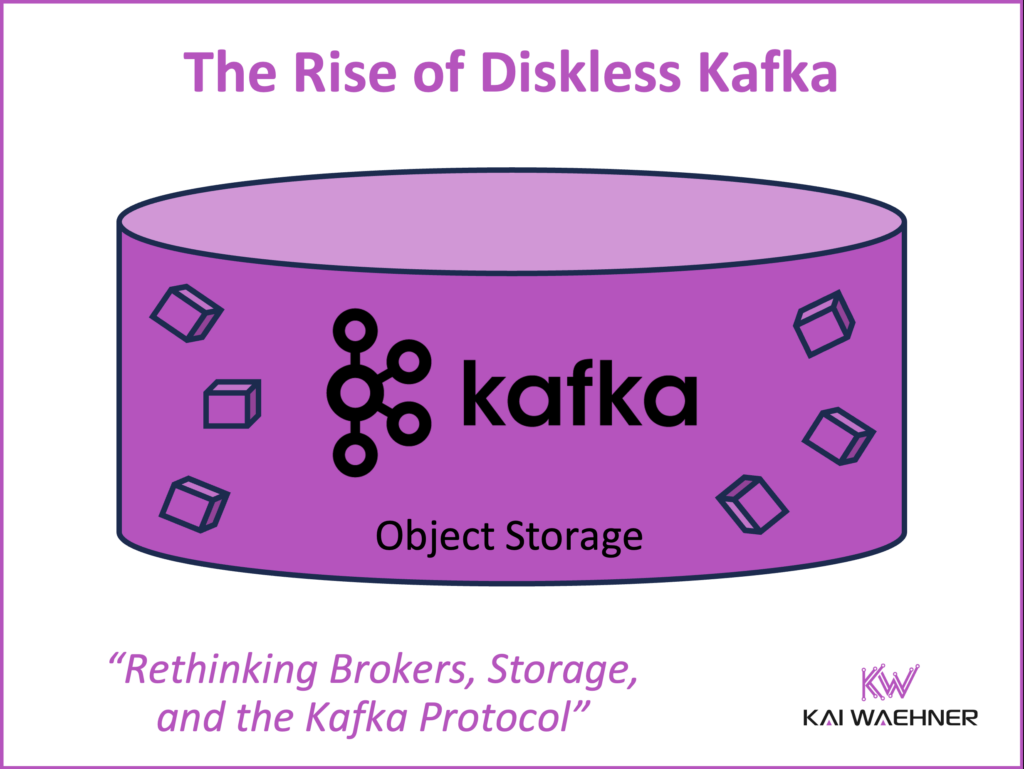

The Rise of Diskless Kafka for Cost Savings and Simplicity

Diskless Kafka marks a major shift in data streaming: brokers no longer rely on local disks and instead store all data directly in cloud object storage such as Amazon S3, while still speaking the Kafka protocol.

Several vendors are driving this forward:

- WarpStream is the clear leader and innovator. It is already running in production with many customers and combines diskless Kafka with a BYOC (Bring Your Own Cloud) model for full control and security.

- Other solutions, from Aiven, Slack, and AutoMQ as well as Confluent’s Freight Clusters, are exploring similar paths.

- While Confluent is in production with WarpStream and Freight Clusters at many customers, others are still mostly working on proposals. KIP-1150 (Aiven), KIP-1176 (Slack), and KIP-1183 (AutoMQ) highlight different approaches to modernizing Kafka’s storage architecture.

This innovation simplifies operations, improves elasticity, and drastically cuts costs, especially for log analytics, observability, and long-term retention workloads. As the Kafka protocol becomes the common layer across platforms, diskless Kafka is shaping the next generation of scalable, cloud-native event streaming.

However, diskless architectures may not meet the ultra-low-latency needs of certain real-time transactional use cases. Object storage introduces higher read and write latencies, which makes this model better suited to asynchronous or analytical workloads than to latency-sensitive applications where every millisecond matters. That said, innovations like WarpStream’s integration with Amazon S3 Express One Zone make diskless Kafka fast enough for most use cases.

Diskless Kafka at Scale: A New Standard for Streaming in Fintech for Log Analytics and Observability

Robinhood shows how a modern fintech can use real-time data streaming to support innovation, performance, and cost efficiency.

Kafka and Flink power everything from trade execution to fraud detection. Diskless Kafka with WarpStream improves cost control for infrastructure monitoring, log analytics and observability. All of this supports Robinhood’s mission to make finance accessible and fast.

For other financial services firms, this is a clear signal. Data streaming is not just a backend upgrade. It’s a foundation for digital products, customer trust, and competitive advantage.

Architects and engineers can drive this change. But to succeed, they must also show business leaders the value. That’s the path forward for financial services. Robinhood is already on it.
