This blog post explores the state of data streaming for financial services. The evolution of capital markets, retail banking and payments requires easy information sharing and open architecture. Data streaming allows integrating and correlating data in real-time at any scale. I look at trends to explore how data streaming helps as a business enabler. The foci are trending enterprise architectures in the FinServ industry for mainframe offloading, omnichannel customer 360 and fraud detection at scale, combined with data streaming customer stories from Capital One, Singapore Stock Exchange, Citigroup, and many more. A complete slide deck and on-demand video recording are included.

General trends in the financial services industry

Researchers, analysts, startups, and last but not least, labs and the first real-world rollouts of traditional players show a few upcoming trends in the financial services industry:

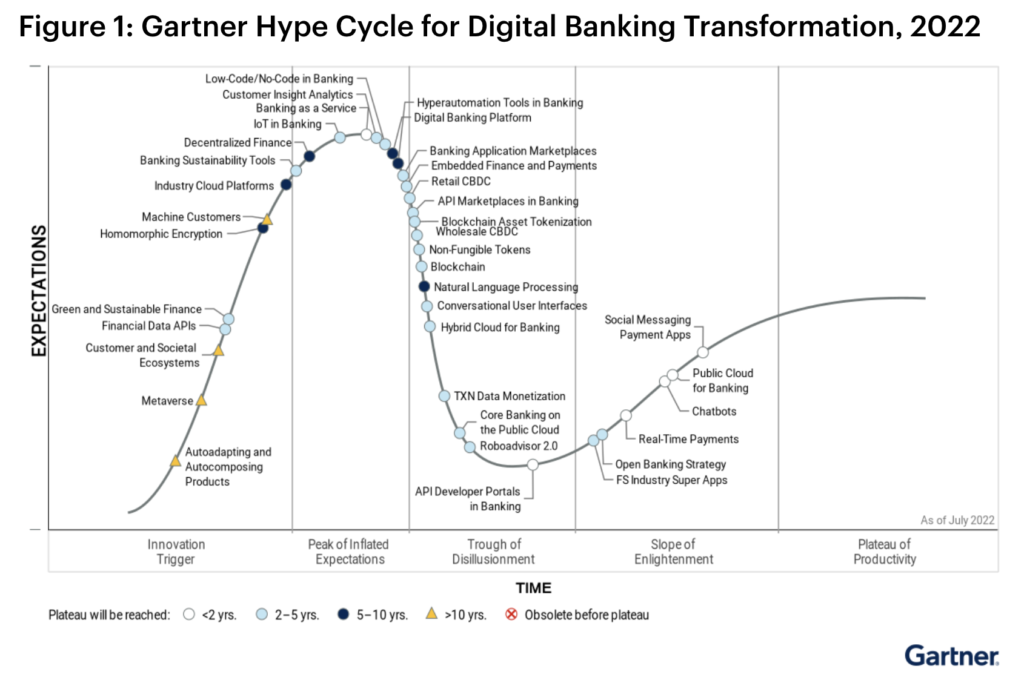

- Digital Transformation in Financial Services (see Gartner’s Hype Cycle for Digital Banking Transformation)

- Mainframe: A Critical Part Of Modern IT Strategies (see the Forrester report published by Deloitte about the value and support of mainframes in the modern IT world)

- Public Cloud and Open Banking APIs (see Forrester’s article about the history of banks moving to the cloud)

Let’s explore the goals and impact of these trends.

Innovation: Digital banking transformation

Gartner says that four technologies have the potential for high levels of transformation in the banking sector and are likely to mature within the next couple of years:

- Banking as a Service (BaaS) can be a discrete or broad set of financial service functions exposed by chartered banks or regulated entities to power new business models deployed by other banking market participants – fintechs, neobanks, traditional banks, and other third parties.

- Chatbots in banks will affect all areas of communication between machines and humans.

- Public Cloud for Banking is becoming highly transformational to the banking industry since banks can achieve greater efficiency and agility by moving workloads to the cloud.

- Social Messaging Payment Apps rely on instant messaging platforms to originate payment transactions. The messaging app interface is used to register payment accounts and to initiate and monitor related transactional activity.

Gartner’s Hype Cycle for Digital Banking Transformation shows the state of these and other trends in the financial services sector:

Mainframe: A critical part of modern IT strategies

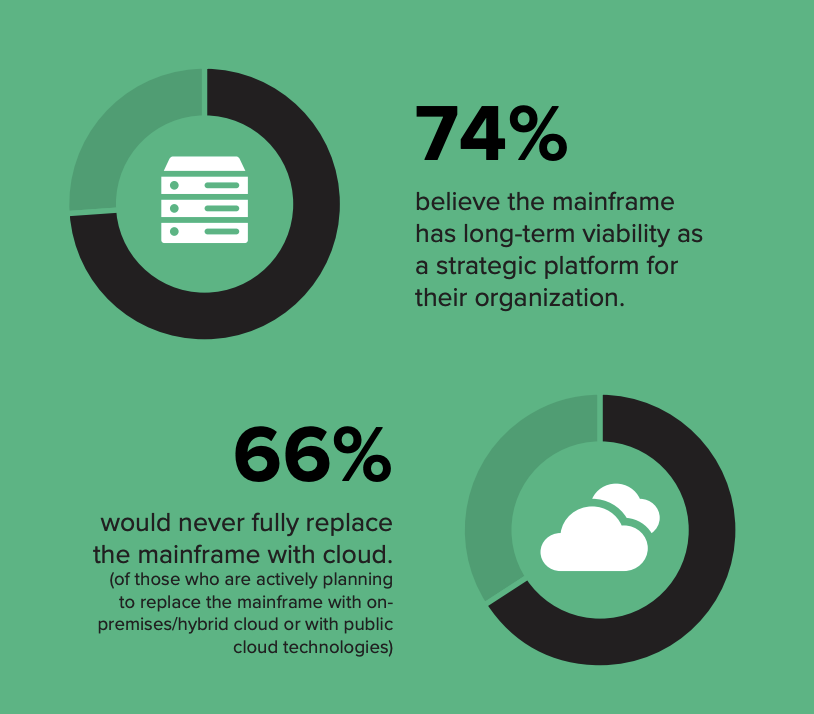

Deloitte published an interesting analysis by Forrester. It confirms my experience from customer meetings: “Unparalleled processing power and high security make the mainframe a strategic component of hybrid environments”. In other words, the mainframe is here to stay.

The mainframe has many disadvantages: Monolithic, legacy protocols and programming languages, inflexible, expensive, etc. However, the mainframe is battle-tested and works well for many mission-critical applications worldwide.

And look at the specifications of a modern mainframe: The IBM z15 was announced in 2019 with up to 40TB RAM and 190 Cores. Wow. Impressive! But it typically costs millions $$$ (variable software costs not included).

Public cloud and Open API: Data sharing in real-time at elastic scale

Many banks worldwide – usually more conservative enterprises – have a cloud-first strategy in 2023. Not just for analytics or reporting (that’s a great way to get started, though), but also for mission-critical workloads like core banking. And we are talking about the public cloud here (e.g., AWS, Azure, GCP, Alibaba). Not just private cloud deployments in their own data center built with Kubernetes or similar container-based, cloud-native technologies.

The Forrester article “A Short History Of Financial Services In The Cloud” is an excellent reminder: “But in 2015, Capital One shocked the world with its pronouncement of going all in on Amazon Web Services (AWS) and even migrating existing applications — an unheard-of notion for any company across any industry. Capital One claimed AWS could better secure its workloads than Capital One’s highly qualified security staff. Following this announcement, an onslaught of digital-native banks followed suit.”

The cloud is not cheaper. But it provides a flexible and elastic infrastructure to focus on business and innovation, not operations of IT. Open APIs are normal in cloud-native infrastructure. Open Banking trends and standards like PSD2 (European regulation for electronic payment services) make payments more secure, ease innovation, and enable easier integration between B2B partners.

Data streaming in financial services

Adopting trends like mobile social payment apps or open banking APIs is only possible if enterprises in the financial services world can provide and correlate information at the right time in the proper context. Real-time, which means using the information in milliseconds, seconds, or minutes, is almost always better than processing data later (whatever later means):

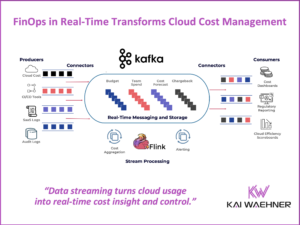

Data streaming combines the power of real-time messaging at any scale with storage for true decoupling, data integration, and data correlation capabilities. Apache Kafka is the de facto standard for data streaming.

“Apache Kafka in the Financial Services Industry” is a good article for starting with an industry-specific point of view on data streaming.

This is just one example. Data streaming with the Apache Kafka ecosystem and cloud services is used throughout the supply chain of the finance industry. Search my blog for various articles related to this topic: Search Kai’s blog.

Data streaming as a business enabler

Forbes published a great article about “high-frequency data and event streaming in banking”. Here are a few of Forbes’ pivotal use cases for data streaming in financial services:

Retail Banking

- From a limited view of the customer to a 360-degree view of the customer.

- Hyper-personalized customer experiences.

- App-first customer interactions.

Payments Processing

- From typical T+1 or T+2 batch settlement cycles to real-time transfers.

- Stringent event-driven infrastructure with decoupled producers and consumers.

Capital Markets

- From overnight pricing models to real-time sensitivities & market risk.

- Managing the complexities of timing and volume related to co-located execution

- From T+1 trade settlement to automated clearing and settlement (T+0).

Open API for flexibility and faster time to market

Real-time data beats slow data in almost all use cases. But just as essential are data consistency and an Open API approach across all systems, including non-real-time legacy systems and modern request-response APIs.

Apache Kafka’s most underestimated feature is the storage component based on the append-only commit log. It enables loose coupling for domain-driven design with microservices and independent data products in a data mesh.
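To make the decoupling idea concrete, here is a minimal sketch in plain Python. It is a toy model, not the Kafka API: the class names `CommitLog` and `Consumer` and the sample payments are assumptions for illustration only. It shows the two properties that matter here: records are only appended, and every consumer keeps its own read position (offset).

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Toy append-only log: records are only appended, never modified."""
    records: list = field(default_factory=list)

    def append(self, record) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records starting at offset; reading removes nothing."""
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Each consumer tracks its own offset, independent of all other consumers."""
    log: CommitLog
    offset: int = 0

    def poll(self, max_records: int = 10) -> list:
        batch = self.log.read(self.offset, max_records)
        self.offset += len(batch)
        return batch


log = CommitLog()
for payment in (
    {"id": 1, "amount": 20.0},
    {"id": 2, "amount": 950.0},
    {"id": 3, "amount": 5.5},
):
    log.append(payment)

fraud_service = Consumer(log)      # hypothetical consumer reading in small batches
reporting_service = Consumer(log)  # hypothetical consumer that starts later at offset 0

first_batch = fraud_service.poll(max_records=2)
replayed = reporting_service.poll()
```

Because reading does not remove data, the slower or later consumer still sees every record, and neither consumer needs to know that the other exists.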

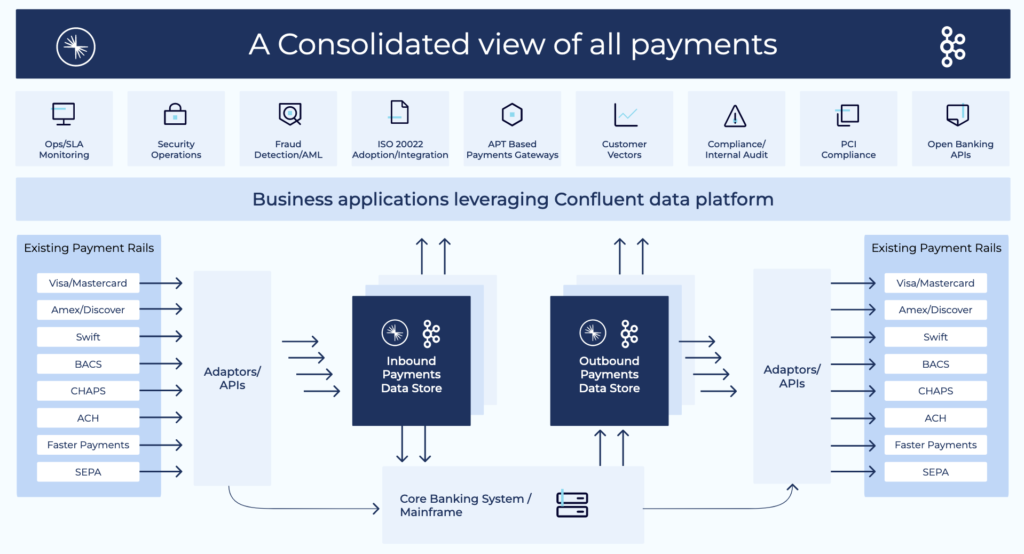

Here is an example of an open banking architecture for all payment information systems and external interfaces:

Architecture trends for data streaming

The financial services industry applies various trends for enterprise architectures for cost, flexibility, security, and latency reasons. The four major topics I see these days at customers are:

- Legacy modernization by offloading and migrating from monoliths to cloud-native

- Hyper-personalized customer experience with omnichannel banking and context-specific decisions

- Real-time analytics with stateless and stateful data correlation for transactional and analytical workloads

- Mission-critical data streaming across data centers and clouds for high availability and compliance

Legacy modernization and data offloading

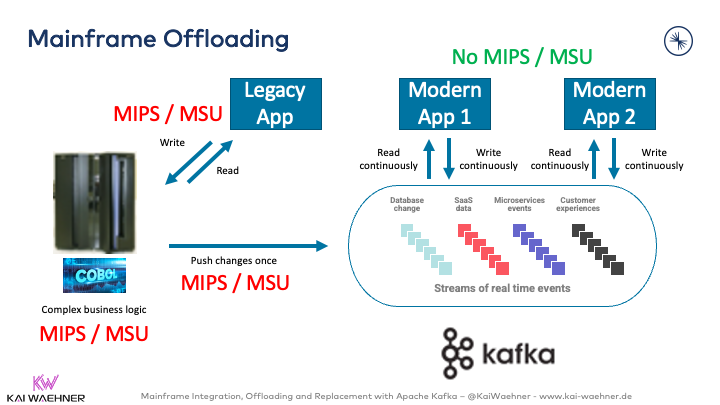

I covered the steps for a successful legacy modernization in various blog posts. Mainframe offloading is an excellent example. While existing transactional workloads still run on the mainframe using IBM DB2, VSAM, CICS, etc., event changes are pushed to the data streaming platform and stored in the Kafka log. New applications can be built with any technology and communication paradigm to access the data:

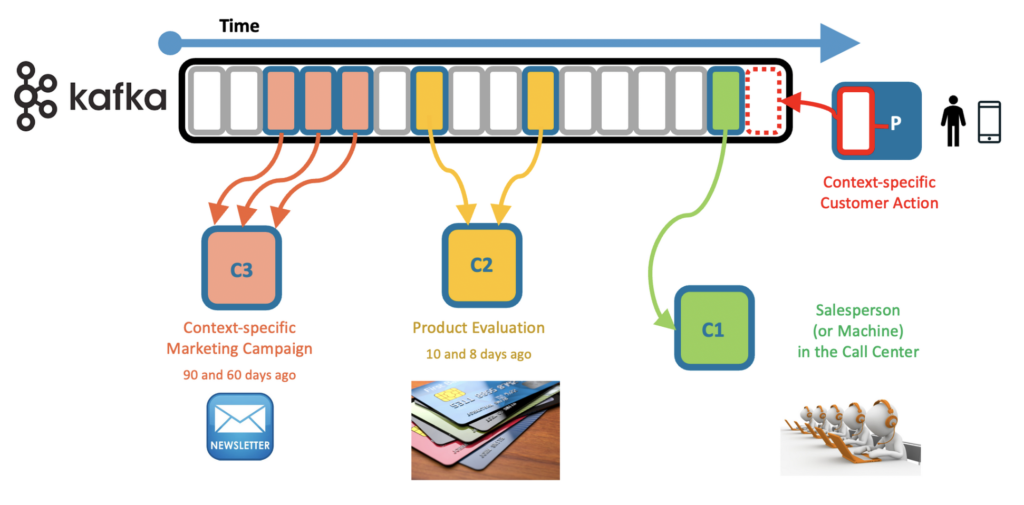
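The offloading pattern can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: the change event layout (`op`, `key`, `after`) and the account data are hypothetical and do not match the format of any specific change data capture connector. The point is that a new application can rebuild its own view of the data by replaying the change events, without querying the mainframe.

```python
def apply_change(view: dict, change: dict) -> dict:
    """Apply one change event to a local, read-only view of the source data."""
    key = change["key"]
    if change["op"] in ("insert", "update"):
        # Merge the changed fields into the existing row (or create the row).
        view[key] = {**view.get(key, {}), **change["after"]}
    elif change["op"] == "delete":
        view.pop(key, None)
    return view


# Hypothetical change events as they could be stored in the log, oldest first.
change_log = [
    {"op": "insert", "key": "acc-1", "after": {"owner": "Alice", "balance": 100.0}},
    {"op": "insert", "key": "acc-2", "after": {"owner": "Bob", "balance": 50.0}},
    {"op": "update", "key": "acc-1", "after": {"balance": 75.0}},
    {"op": "delete", "key": "acc-2", "after": None},
]

# A new application replays the log to materialize its own view.
accounts_view: dict = {}
for change in change_log:
    apply_change(accounts_view, change)
```

After the replay, the view contains only `acc-1` with the updated balance. Any number of new applications can build such views independently from the same stored events.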

Hyper-personalized customer experience

Customers expect a great customer experience across devices (like a web browser or mobile app) and human interactions (e.g., in a bank branch). Data streaming enables a context-specific omnichannel banking experience by correlating real-time and historical data at the right time in the right context:

“Omnichannel Retail and Customer 360 in Real Time with Apache Kafka” goes into more detail. This is a great example where the finance sector can learn from other industries that had to innovate a few years earlier to solve a specific challenge or business problem.
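The correlation of real-time and historical data can be illustrated with a small sketch in plain Python. The profile table, the field names, and the events are hypothetical; a real deployment would typically use a stream processing engine for this kind of stream-table join. Each incoming event is enriched with what is already known about the customer, and the stored context is then updated for the next event.

```python
# Historical context per customer, e.g. built up from past events (hypothetical data).
customer_profiles = {
    "c-1": {"segment": "premium", "last_channel": "branch"},
    "c-2": {"segment": "standard", "last_channel": "mobile"},
}


def enrich(event: dict, profiles: dict) -> dict:
    """Join one real-time event with the stored profile of the same customer."""
    profile = profiles.get(event["customer_id"], {})
    return {**event, **profile}


def update_profile(event: dict, profiles: dict) -> None:
    """Remember the channel of the latest interaction for future events."""
    profiles.setdefault(event["customer_id"], {})["last_channel"] = event["channel"]


live_events = [
    {"customer_id": "c-1", "channel": "mobile", "action": "open_app"},
    {"customer_id": "c-1", "channel": "web", "action": "view_loan_offer"},
]

enriched_events = []
for event in live_events:
    enriched_events.append(enrich(event, customer_profiles))
    update_profile(event, customer_profiles)
```

The second event arrives on the web channel but already carries the information that the customer was last seen in the mobile app, which is the kind of context an omnichannel experience depends on.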

Real-time analytics with stream processing

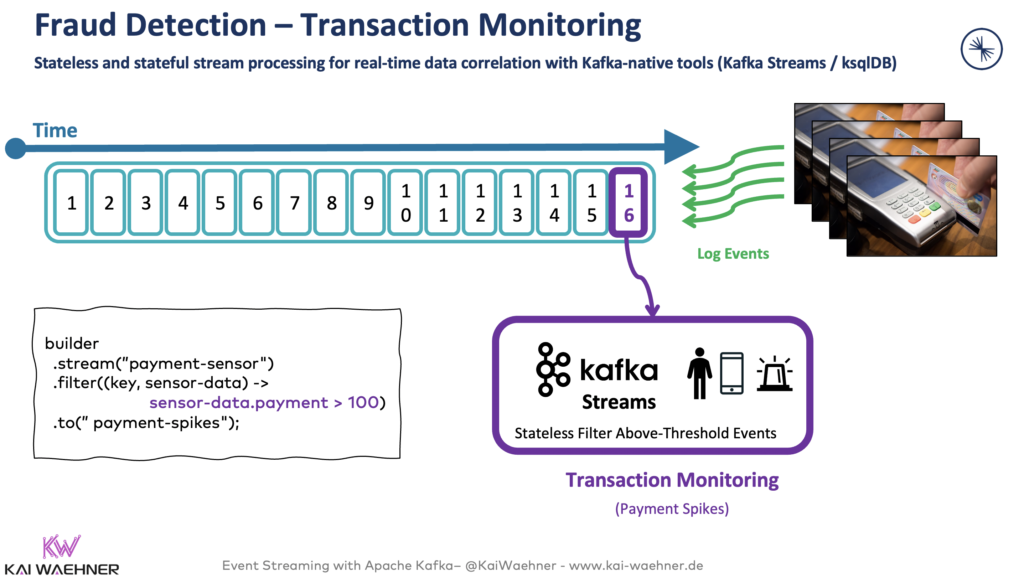

Real-time data beats slow data. That’s true for most analytics scenarios. If you detect fraud after the fact in your data warehouse, it is nice… But too late! Instead, you need to detect and prevent fraud before it happens.

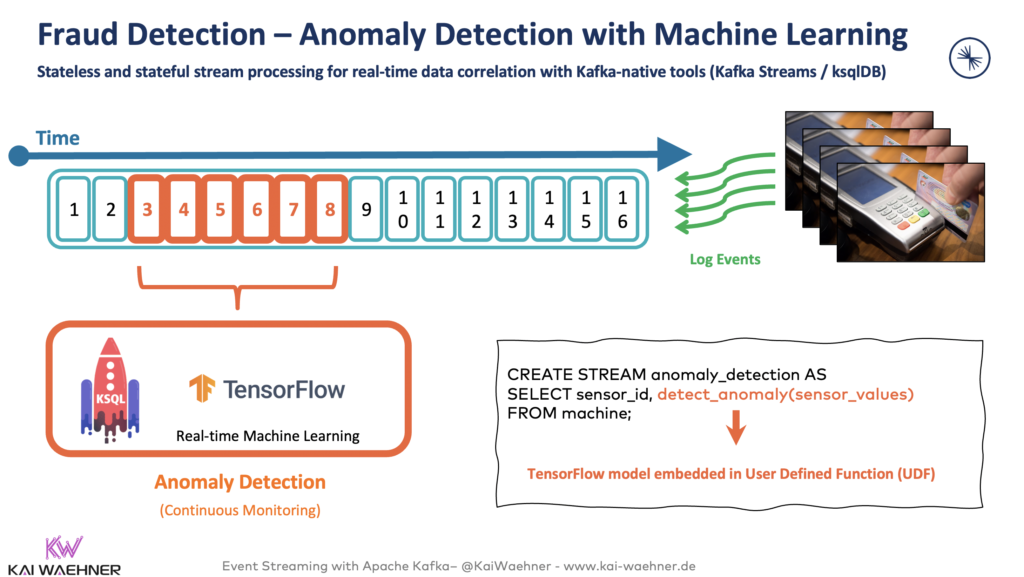

This requires intelligent decision-making in real time (< 1 second end-to-end). The stream processing capabilities of data streaming technologies like Kafka Streams, KSQL, or Apache Flink are built for these use cases. Stream processing scales to millions of transactions per second and is reliable enough to guarantee zero data loss.

Here is an example of stateless transaction monitoring of payment spikes (i.e., looking at one event at a time):
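As a minimal sketch of the stateless idea in plain Python (not the Kafka Streams, KSQL, or Flink API), the following filter decides about each payment using only that payment. The threshold value, the field names, and the sample payments are assumptions for illustration.

```python
from typing import Iterable, Iterator

AMOUNT_THRESHOLD = 10_000.0  # hypothetical limit, not taken from any real rule set


def flag_large_payments(
    events: Iterable[dict], threshold: float = AMOUNT_THRESHOLD
) -> Iterator[dict]:
    """Stateless check: every event is judged on its own, no history is kept."""
    for event in events:
        if event["amount"] > threshold:
            yield {**event, "alert": "amount_above_threshold"}


payments = [
    {"id": 1, "account": "A", "amount": 120.0},
    {"id": 2, "account": "B", "amount": 25_000.0},
    {"id": 3, "account": "A", "amount": 9_999.0},
]

alerts = list(flag_large_payments(payments))
```

Because the function keeps no state between events, it can be run in parallel on any number of partitions without coordination.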

More powerful stateful stream processing aggregates and correlates events from one or more data sources together continuously in real time:
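A stateful check needs memory across events. The sketch below, again plain Python rather than a stream processing API, counts payments per account in fixed 60-second windows (a tumbling window) and raises an alert when an account exceeds a limit. The window size, the limit, and the events are hypothetical values chosen for the example.

```python
from collections import defaultdict

WINDOW_SECONDS = 60          # hypothetical tumbling window size
MAX_PAYMENTS_PER_WINDOW = 3  # hypothetical limit per account and window


def detect_payment_spikes(
    events,
    window_seconds: int = WINDOW_SECONDS,
    max_payments: int = MAX_PAYMENTS_PER_WINDOW,
) -> list:
    """Stateful check: count payments per (account, window) across events."""
    counts = defaultdict(int)  # state: (account, window_start) -> number of payments
    alerts = []
    for event in events:
        # Assign the event to the window that contains its timestamp.
        window_start = event["ts"] - event["ts"] % window_seconds
        key = (event["account"], window_start)
        counts[key] += 1
        # Alert once, at the moment the limit is exceeded.
        if counts[key] == max_payments + 1:
            alerts.append({"account": event["account"], "window_start": window_start})
    return alerts


events = [
    {"account": "A", "ts": 0},
    {"account": "A", "ts": 10},
    {"account": "B", "ts": 15},
    {"account": "A", "ts": 20},
    {"account": "A", "ts": 30},  # fourth payment of account A in the first window
    {"account": "A", "ts": 65},  # belongs to the next window
]

spike_alerts = detect_payment_spikes(events)
```

In a real stream processor the `counts` state would be managed, partitioned, and made fault-tolerant by the engine; here it is simply a dictionary in memory.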

The article “Fraud Detection with Apache Kafka, KSQL and Apache Flink” explores stream processing for real-time analytics in more detail, shows an example with embedded machine learning, and covers several real-world case studies.

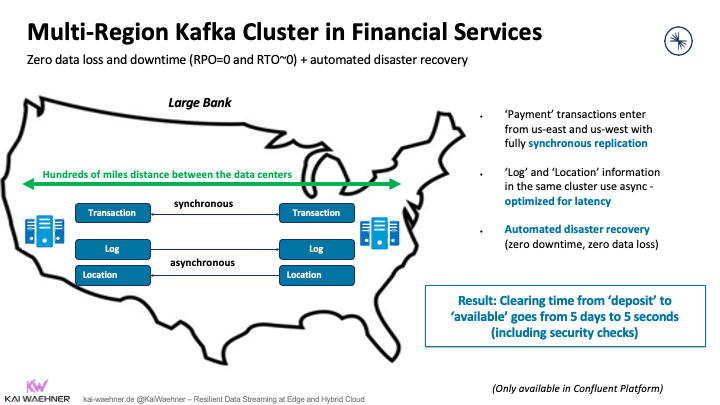

Mission-critical data streaming with a stretched Kafka cluster across regions

The most critical use cases require business continuity, even if disaster strikes and a data center or complete cloud region goes down.

Data streaming powered by Apache Kafka enables various architectures and deployments for different SLAs. Multi-cluster and cross-data center deployments of Apache Kafka have become the norm rather than an exception. A single Kafka cluster stretched across regions (like the US East, Central, and West) is the most resilient way to deploy data streaming:

Please note that this capability of Multi-Region Clusters (MRC) for Kafka is only available in the Confluent Platform as a commercial product.

In my other blog post, learn about architecture patterns for Apache Kafka that may require multi-cluster solutions and see real-world examples with their specific requirements and trade-offs. That blog explores scenarios such as disaster recovery, aggregation for analytics, cloud migration, mission-critical stretched deployments, and global Kafka.

New customer stories for data streaming in the financial services industry

So much innovation is happening in the financial services industry. Automation and digitalization change how we process payments, prevent fraud, communicate with partners and customers, and so much more.

Most FinServ enterprises use a cloud-first (but still hybrid) approach to improve time-to-market, increase flexibility, and focus on business logic instead of operating IT infrastructure.

Here are a few customer stories from worldwide FinServ enterprises across industries:

- Erste Bank: Hyper-personalized mobile banking

- Singapore Stock Exchange (SGX): Modernized trading platform with a hybrid architecture

- Citigroup: Global payment applications with redundancy and scalability to support 99.9999% uptime

- Raiffeisen Bank International: Hybrid data mesh across countries with end-to-end data governance

- Capital One: Context-specific fraud detection and prevention in real-time

- 10X Banking: Cloud-native core banking platform using a modern Kappa architecture

Find more details about these case studies in the slide deck and video recording below.

Resources to learn more

This blog post is just the starting point. Learn more in the following on-demand webinar recording, the related slide deck, and further resources, including pretty cool lightboard videos about use cases.

On-demand video recording

The video recording explores the FinServ industry’s trends and architectures for data streaming. The primary focus is the data streaming case studies. Check out our on-demand recording:

Slides

If you prefer learning from slides, check out the deck used for the above recording:

Case studies and lightboard videos for data streaming in financial services

The state of data streaming for financial services in 2023 is fascinating. New use cases and case studies come up every month. This includes better data governance across the entire organization, collecting and processing data from payment interfaces in real-time, data sharing and B2B partnerships with Open Banking APIs for new business models, and many more scenarios.

We recorded lightboard videos showing the value of data streaming simply and effectively. These five-minute videos explore the business value of data streaming, related architectures, and customer stories. Here are the videos for the FinServ sector. Each one includes a case study:

- Episode 1: Data Streaming in Real Life: Banking

- Episode 2: Core Banking

- Episode 3: Fraud Detection

- Episode 4: Cost Reduction Through Mainframe Offloading

- Episode 5: Data Mesh

And this is just the beginning. Every month, we will talk about the status of data streaming in a different industry. Manufacturing was the first. Financial services second, then retail, and so on…

Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.