A smart city is an urban area that uses different types of electronic Internet of Things (IoT) sensors to collect data and then use insights gained from that data to manage assets, resources, and services efficiently. This blog post explores how Apache Kafka fits into a smart city architecture, along with its benefits and use cases.

What is a Smart City?

That’s a good question. Here is a sophisticated definition:

Okay, that’s too easy, right? No worries… Many more definitions exist for the term “Smart City”, so make sure to define the term before discussing it. The following is a great summary from the book “Smart Cities for Dummies” by Jonathan Reichental:

“A smart city is an approach to urbanization that uses innovative technologies to enhance community services and economic opportunities, improve city infrastructure, reduce costs and resource consumption, and increase civic engagement.”

A smart city provides many benefits for citizens and for city management. Some of the goals are:

- Improved Pedestrian Safety

- Improved Vehicle Safety

- Proactively Engaged First Responders

- Reduced Traffic Congestion

- Connected / Autonomous Vehicles

- Improved Customer Experience

- Automated Business Processes

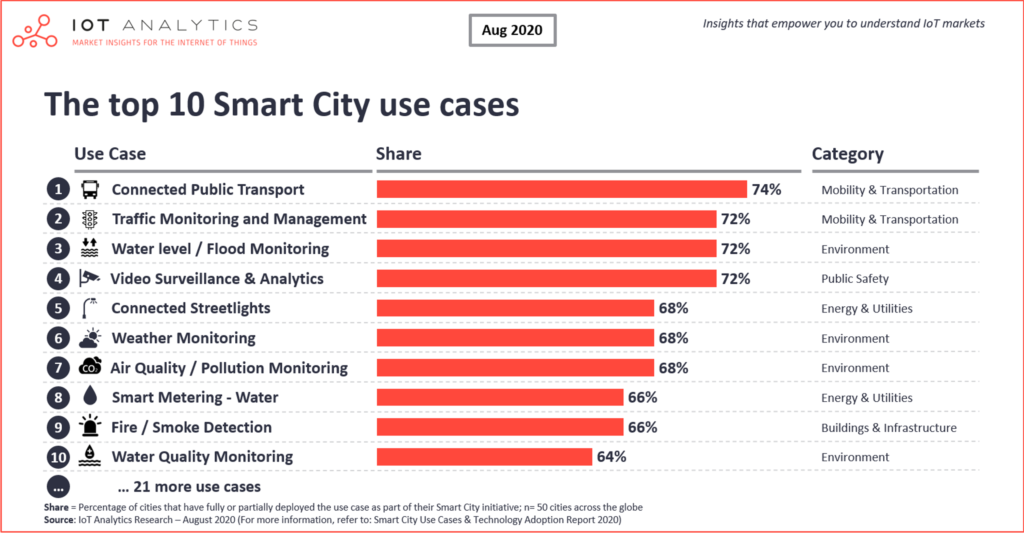

The research company IoT Analytics describes the top use cases of 2020:

These use cases indicate a significant detail about smart cities: The need for collaboration between various stakeholders.

Collaboration is Key for Success in a Smart City

A smart city requires the collaboration of many stakeholders. For this reason, smart city initiatives usually involve cities, vendors, and operators, such as the following:

- Public sector (including government, cities, communes) providing laws, regulations, initiatives, and often budgets.

- Hardware makers (including cars, scooters, drones, traffic lights, etc.) and their suppliers providing smart things.

- Telecom industry providing stable networks with high bandwidth and low latency (including 5G, Wi-Fi hotspots, etc.).

- Mobility services providing innovative apps and solutions like ride-hailing, car-sharing, map routing, etc.

- Cloud providers like AWS, GCP, Azure, Alibaba providing cloud-native infrastructure for the backend applications and services.

- Software providers providing SaaS and/or edge and mobile applications for CRM, analytics, payment, location-based services, and many other use cases.

Different stakeholders are often in competition or coopetition. For instance, cities have a strong interest in building their own mobility services because these are the main gateway to end users.

Funding is another issue, as IoT Analytics states: “Cities typically rely either on public or private funding for realizing their Smart City projects. To overcome funding-related limitations, successful Smart Cities tend to encourage and facilitate collaboration between the public and private sectors (i.e., Public-Private-Partnerships (PPPs)) in the development and implementation of Smart City projects.”

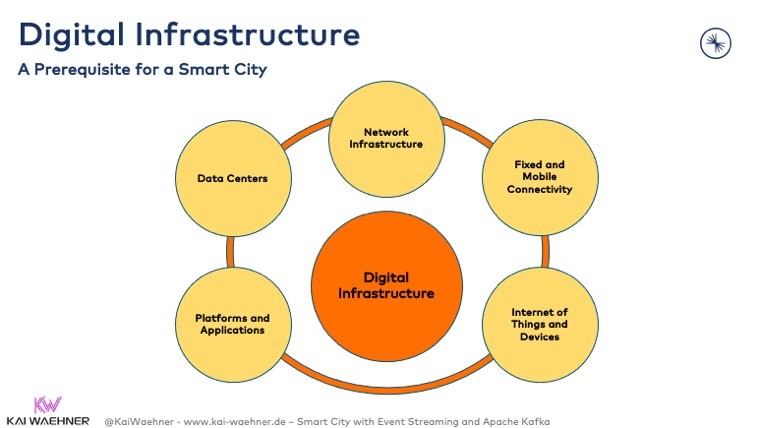

Digital Infrastructure as Prerequisite for a Smart City

A smart city is not possible without a digital infrastructure. No way around this. That includes various components:

A digital infrastructure enables building a smart city. Hence, let’s take a look at how to do that next…

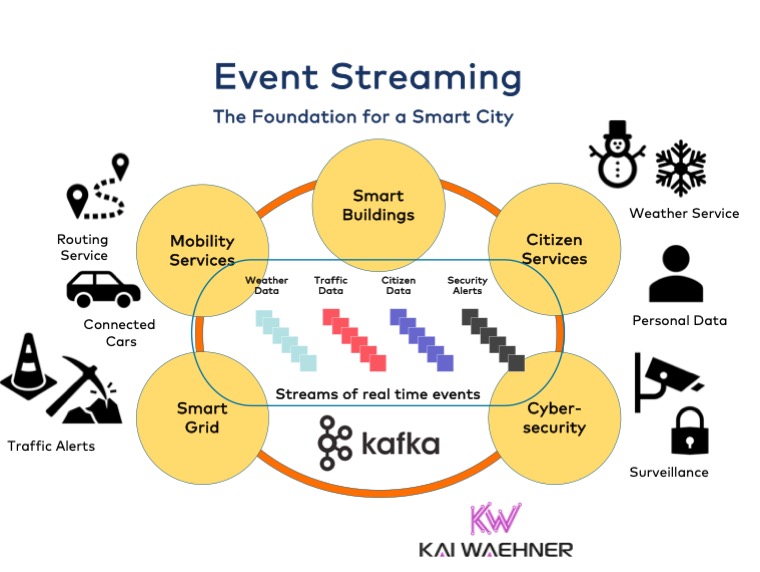

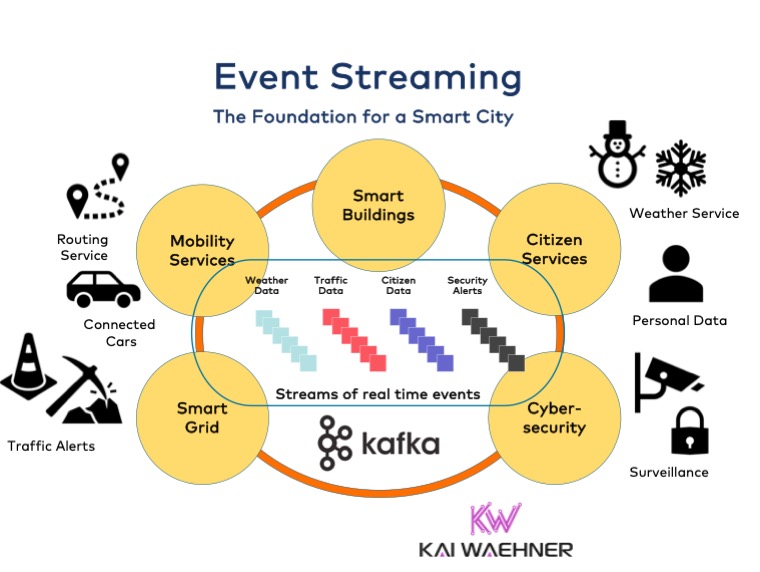

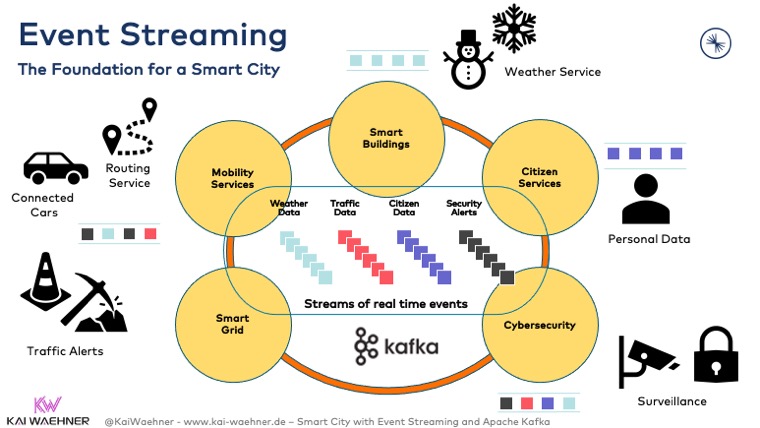

Event Streaming as Foundation for a Smart City

A smart city has to work with various interfaces, data structures, and technologies. Many data streams have to be integrated, correlated, and processed in real-time. Many of them are high volume, so scalability and an elastic infrastructure are essential for success. Many also carry mission-critical workloads, so characteristics like reliability, zero data loss, and persistence are just as important.
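To make the scalability point a bit more concrete: Kafka spreads a topic across partitions, and records with the same key land in the same partition, which keeps the events of one device in order while consumers scale out. The following is a minimal sketch in plain Python, not Kafka client code. The `SensorEvent` fields, the sensor names, and the CRC32 hash are assumptions for illustration only (Kafka's default partitioner uses a different hash function).

```python
import json
import zlib
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SensorEvent:
    """Hypothetical event envelope for a smart city sensor reading."""
    sensor_id: str      # e.g. a traffic light or parking sensor (made-up naming)
    kind: str           # e.g. "vehicle_count"
    value: float
    timestamp_ms: int


def serialize(event: SensorEvent) -> bytes:
    """Encode the event as UTF-8 JSON, a common wire format for records."""
    return json.dumps(asdict(event), sort_keys=True).encode("utf-8")


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition.

    CRC32 is only a stand-in hash for this sketch. The property that matters
    is that the same key always maps to the same partition.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


if __name__ == "__main__":
    event = SensorEvent("traffic-light-17", "vehicle_count", 12.0, 1_700_000_000_000)
    print(partition_for(event.sensor_id, 6), serialize(event))
```

Using the sensor ID as the key is a design choice: it preserves ordering per device, at the cost of uneven load if a few devices produce most of the traffic.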

An event streaming platform based on Apache Kafka and its ecosystem provides all these capabilities:

Smart city use cases often include hybrid architectures. Some parts have to run at the edge, i.e., closer to the streets, buildings, cameras, and many other interfaces, for high availability, low latency, and lower cost. Check out the “use cases for Kafka in edge and hybrid architectures” for more details.
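One reason edge processing can lower cost is that raw readings can be summarized locally before anything is sent to a data center or the cloud. The sketch below illustrates that idea with a tumbling-window summary in plain Python. The tuple layout `(sensor_id, timestamp_ms, value)` and the sensor names are assumptions, and a real edge deployment would read from and write to Kafka topics rather than Python lists.

```python
from collections import defaultdict
from statistics import mean


def summarize_at_edge(readings, window_ms):
    """Collapse raw readings into one summary per sensor and time window.

    readings: iterable of (sensor_id, timestamp_ms, value) tuples (assumed layout).
    window_ms: size of the tumbling (non-overlapping) window in milliseconds.
    """
    buckets = defaultdict(list)
    for sensor_id, timestamp_ms, value in readings:
        # Align the timestamp to the start of its window.
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        buckets[(sensor_id, window_start)].append(value)

    return [
        {
            "sensor_id": sensor_id,
            "window_start_ms": window_start,
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for (sensor_id, window_start), values in sorted(buckets.items())
    ]


if __name__ == "__main__":
    raw = [
        ("air-1", 0, 10.0),
        ("air-1", 500, 20.0),
        ("air-1", 1000, 30.0),
        ("noise-7", 200, 55.0),
    ]
    for summary in summarize_at_edge(raw, window_ms=1000):
        print(summary)
```

Only the summaries would need to leave the edge site, while the raw readings can stay local for low-latency decisions.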

Public Sector Use Cases for Event Streaming

Smart cities and the public sector are usually looked at together as they are closely related. Here are a few use cases that can be improved significantly by leveraging event streaming:

Citizen Services

- Health services, e.g., hospital modernization, track and trace, COVID-19 distance control

- Efficient and digital citizen engagement, e.g., the personal ID application process

- Open exchange, e.g., mobility services (working with partners such as car makers)

Smart City

- Smart driving, parking, buildings, environment

- Waste management

- Mobility services

Energy and Utilities

- Smart grid and utility infrastructure (energy distribution, smart home, smart meters, smart water, etc.)

Security

- Law enforcement, surveillance

- Defense, military

- Cybersecurity

This is just a small number of possible scenarios for event streaming. Many use cases from other industries can also be applied to the public sector and smart cities. Check out “real-life examples across industries for use cases and architectures leveraging Apache Kafka” to learn about real-world deployments from Audi, BMW, Disney, Generali, PayPal, Tesla, Unity, Walmart, William Hill, and many more.

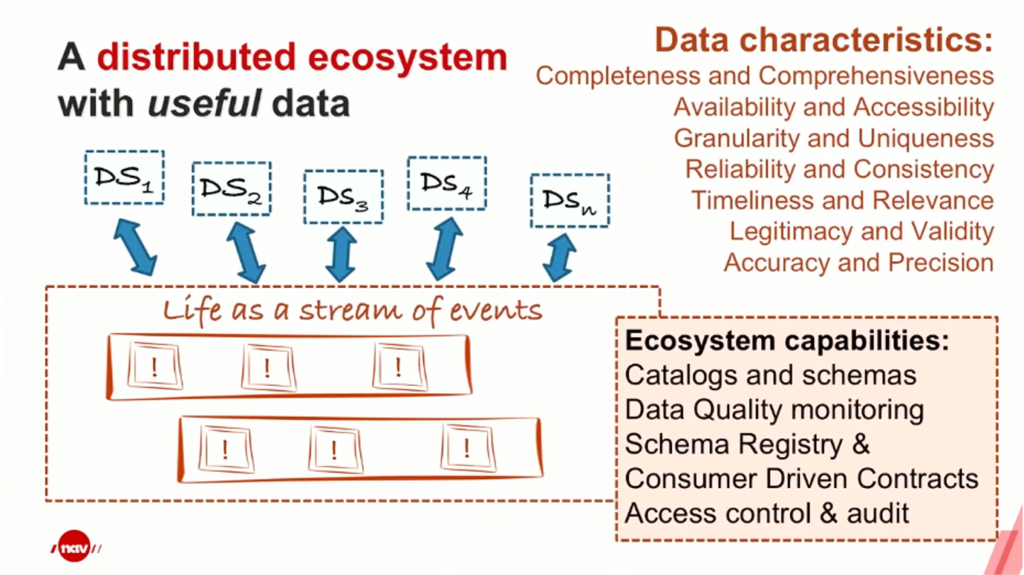

NAV (Norwegian Work and Welfare Department) – Life is a Stream of Events

Here is a great example from the public sector: The Norwegian Work and Welfare Department (NAV) models the life of its citizens as a stream of events:

The consequence is a digital twin of citizens. This enables various use cases for real-time and batch processing of citizen data. For instance, new ID applications can be processed and monitored in real-time. At the same time, anonymized citizen data allows the aggregation for improving city services (e.g., by hiring the correct number of people for each department).
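The digital twin idea can be sketched as a fold over an event stream: each event updates the current state of one citizen, and aggregates are derived from those states. The code below is an illustration only and does not describe NAV's actual implementation. The event types (`moved`, `id_application_submitted`, `id_application_approved`) and field names are hypothetical.

```python
from collections import Counter


def apply_event(twin, event):
    """Return a new twin state with one life event applied (no mutation)."""
    state = dict(twin)
    kind = event["type"]
    if kind == "moved":
        state["district"] = event["district"]
    elif kind == "id_application_submitted":
        state["id_application"] = "pending"
    elif kind == "id_application_approved":
        state["id_application"] = "approved"
    # Unknown event types are ignored in this sketch.
    return state


def build_twins(events):
    """Replay events in order and keep the latest state per citizen."""
    twins = {}
    for event in events:
        citizen_id = event["citizen_id"]
        twins[citizen_id] = apply_event(twins.get(citizen_id, {}), event)
    return twins


def pending_applications_by_district(twins):
    """Aggregate without exposing individuals: count pending ID applications."""
    return Counter(
        twin.get("district", "unknown")
        for twin in twins.values()
        if twin.get("id_application") == "pending"
    )


if __name__ == "__main__":
    stream = [
        {"citizen_id": "c1", "type": "moved", "district": "north"},
        {"citizen_id": "c1", "type": "id_application_submitted"},
        {"citizen_id": "c2", "type": "moved", "district": "south"},
    ]
    print(pending_applications_by_district(build_twins(stream)))
```

Because the state is derived by replaying events, the same stream can serve both the real-time view (one citizen's current status) and batch-style aggregates (workload per district).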

Obviously, such a use case is only possible with security and data governance in mind. Authentication, authorization, encryption, role-based access control, audit logs, data lineage, and other concepts need to be applied end-to-end using the event streaming platform.
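As one small piece of that governance picture, citizen identifiers can be replaced with keyed hashes before events reach analytics consumers. The sketch below uses HMAC-SHA256 from the Python standard library. The field names `citizen_id`, `name`, and `address` are assumptions, and this is pseudonymization rather than full anonymization: anyone holding the secret key can recompute the tokens, so key management and access control still matter.

```python
import hashlib
import hmac

# Hypothetical direct identifiers that analytics consumers should never see.
DIRECT_IDENTIFIERS = ("name", "address")


def pseudonymize(event, secret_key, id_field="citizen_id"):
    """Return a copy of the event with the ID tokenized and direct identifiers dropped.

    The same ID and key always yield the same token, so events of one
    citizen can still be correlated downstream.
    """
    token = hmac.new(
        secret_key,
        str(event[id_field]).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    cleaned = {k: v for k, v in event.items() if k not in DIRECT_IDENTIFIERS}
    cleaned[id_field] = token
    return cleaned


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    raw = {"citizen_id": "12345", "name": "Jane Doe", "type": "moved", "district": "north"}
    print(pseudonymize(raw, key))
```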

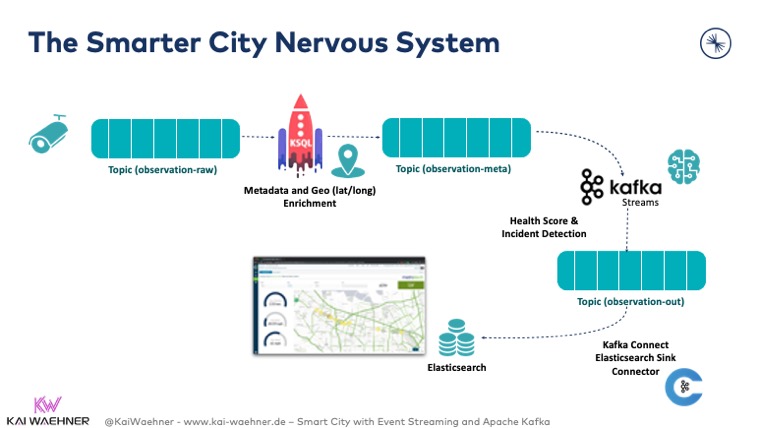

The Smarter City Nervous System

A smart city requires more than real-time data integration and real-time messaging. Many use cases are only possible if the data is also processed continuously in real-time. That’s where Kafka-native stream processing frameworks like Kafka Streams and ksqlDB come into play. Here is an example of receiving images or videos from surveillance cameras to do monitoring and alerting in real-time at scale:

For more details about data integration and stream processing with the Kafka ecosystem, check out the post “Stream Processing in a Smart City with Kafka Connect and KSQL”.
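The continuous processing described above can be illustrated with a small stateful alerting rule. In a real deployment this logic would typically live in a stream processing application such as Kafka Streams or ksqlDB; plain Python is used here only to show the idea. The sketch assumes that an upstream component has already turned camera frames into detection events, and the fields `camera_id`, `person_count`, and `timestamp_ms` are hypothetical.

```python
from collections import defaultdict


def crowd_alerts(detections, threshold, min_consecutive):
    """Yield one alert when a camera exceeds the threshold several times in a row.

    detections: iterable of dicts with camera_id, person_count, timestamp_ms.
    The per-camera streak counter is the state a stream processor would keep.
    """
    streak = defaultdict(int)
    for detection in detections:
        camera_id = detection["camera_id"]
        if detection["person_count"] > threshold:
            streak[camera_id] += 1
            # Fire exactly once per streak, at the moment it becomes long enough.
            if streak[camera_id] == min_consecutive:
                yield {
                    "camera_id": camera_id,
                    "timestamp_ms": detection["timestamp_ms"],
                    "person_count": detection["person_count"],
                }
        else:
            streak[camera_id] = 0


if __name__ == "__main__":
    events = [
        {"camera_id": "cam-a", "person_count": 60, "timestamp_ms": 1},
        {"camera_id": "cam-b", "person_count": 60, "timestamp_ms": 2},
        {"camera_id": "cam-a", "person_count": 70, "timestamp_ms": 3},
        {"camera_id": "cam-b", "person_count": 10, "timestamp_ms": 4},
    ]
    for alert in crowd_alerts(events, threshold=50, min_consecutive=2):
        print(alert)
```

Requiring several consecutive readings is one simple way to avoid alerting on a single noisy frame; the right rule depends on the use case.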

Kafka Enables a Scalable Real-Time Smart City Infrastructure

The public sector and smart city architectures leverage event streaming for various use cases. The reasons are the same as in all other industries: Kafka provides an open, scalable, elastic infrastructure. Additionally, it is battle-tested and runs in every infrastructure (edge, data center, cloud, bare metal, containers, Kubernetes, fully-managed SaaS such as Confluent Cloud). But event streaming is not a silver bullet for every problem. Instead, Kafka complements other technologies such as MQTT for edge integration or a cloud data lake for batch analytics.
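When MQTT feeds Kafka, one practical question is how hierarchical MQTT topics map onto Kafka topics and record keys. The sketch below shows one possible convention, assuming the last MQTT topic level is a device ID. The topic layout `city/<name>/<domain>/<device>` and the function name are hypothetical, and real integrations usually configure such a mapping in a connector or bridge rather than in hand-written code.

```python
import re
from typing import Tuple

# Kafka topic names may contain ASCII letters, digits, '.', '_' and '-'.
_INVALID_CHARS = re.compile(r"[^A-Za-z0-9._-]")


def mqtt_to_kafka(mqtt_topic: str) -> Tuple[str, str]:
    """Map an MQTT topic to a (kafka_topic, record_key) pair.

    Assumed convention: every level except the last becomes part of the
    Kafka topic name, and the last level (the device ID) becomes the key.
    """
    levels = [level for level in mqtt_topic.split("/") if level]
    if len(levels) < 2:
        raise ValueError("expected at least '<prefix>/<device>': %r" % mqtt_topic)
    *prefix, device_id = levels
    kafka_topic = ".".join(_INVALID_CHARS.sub("_", level) for level in prefix)
    return kafka_topic, device_id


if __name__ == "__main__":
    print(mqtt_to_kafka("city/berlin/parking/sensor-42"))
```

Keeping the device ID out of the Kafka topic name avoids creating one topic per device, which tends to scale poorly, while the key still preserves per-device ordering.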

What are your experiences and plans for event streaming in smart city use cases? Did you already build applications with Apache Kafka? Let’s connect on LinkedIn and discuss it!