The supply chain in the food and retail industry is complex, error-prone, and slow. This blog post explores real-world deployments across the end-to-end supply chain powered by data streaming with Apache Kafka to improve business processes with real-time services. The examples include manufacturing, logistics, stores, delivery, restaurants, and other parts of the business. Case studies include Walmart, Albertsons, Instacart, Domino’s Pizza, Migros, and more.

The supply chain in the food and retail industry

The food industry is a complex, global network of diverse businesses that supplies most of the food consumed by the world’s population. It is far beyond the following simplified visualization 🙂

The term food industry covers a series of industrial activities directed at the production, distribution, processing, conversion, preparation, preservation, transport, certification, and packaging of foodstuffs.

Today’s food industry has become highly diversified, with manufacturing ranging from small, traditional, family-run activities that are highly labor-intensive to large, capital-intensive, and highly mechanized industrial processes.

This post explores several case studies with use cases and architectures to improve supply chain and business processes with real-time capabilities.

Most of the following companies leverage Kafka for various use cases. They sometimes overlap and compete. I structured the next sections to highlight one specific example from each company. This way, you see a complete real-time supply chain flow using data streaming examples from different industries related to the food and retail business.

Why do the food and retail supply chains need real-time data streaming using Apache Kafka?

Before I start with the real-world examples, let’s discuss why I thought this was the right time for this post to collect a few case studies.

In February 2022, I was in Florida for a week for customer meetings. Let me report on my inconvenient experience as a frequent traveler and customer. Coincidentally, all this happened within one weekend.

A horrible weekend travel experience powered by manual processes and batch systems

- Issue #1 (hotel): Although I had canceled a hotel night a week earlier, I still got emails offering upgrades from the batch system of the hotel’s booking engine. Conversely, I did not get promotions for upgrading my new booking at the same hotel chain.

- Issue #2 (clothing store): The point-of-sale (POS) system was down because of a power outage and had no internet connectivity. The POS could not process payments without the internet. People left without buying items. I needed the new clothes (as my hotel also did not provide laundry service because of the limited workforce).

- Issue #3 (restaurant): I went to a steak house to get dinner. Their POS was down, too, because of the same power outage. The waiter could not take orders. The separate software in the kitchen could not receive manual orders from the waiter either.

- Issue #4 (restaurant): After 30 minutes of waiting, I ordered and had dinner. However, the dessert I chose was not available. The waiter explained that the dish was unavailable because of supply chain issues, but the restaurant could never update the paper menu or online PDF.

- Issue #5 (clothing store): After dinner, I went back to the other store to buy my clothes. It worked. The salesperson asked me for my email address to give a 15 percent discount. I wondered why they did not see my loyalty information already, maybe via a location-based service. Or at least, I would expect to pay and log in via my mobile app.

- Issue #6 (hotel): Back at the hotel, I checked out. The hotel did not add my loyalty bonus (discount on food in the restaurant) as the system only supports batch integration of other applications. Also, the receipt could not display loyalty information and the correct receipt value. I had to accept this, even though this is not even legal by German tax law.

This story shows why the digital transformation is crucial across all parts of the food, retail, and travel industry. I have already covered use cases and architecture for data streaming with Apache Kafka in the Airline, Aviation, and Travel Industry. Hence, this post focuses more on food and retail. But the concepts do not differ across these industries.

As you will see in the following sections, optimizing business processes, automating tasks, and improving customer experience is not complicated with the right technologies.

Digital transformation with automated business processes and real-time services across the supply chain

With this story in mind, I thought it was a good time to share various real-world examples across the food and retail supply chain. Some companies have already started with innovation. They digitalized their business processes and built innovative new real-time services.

Food processing machinery for IoT analytics at Baader

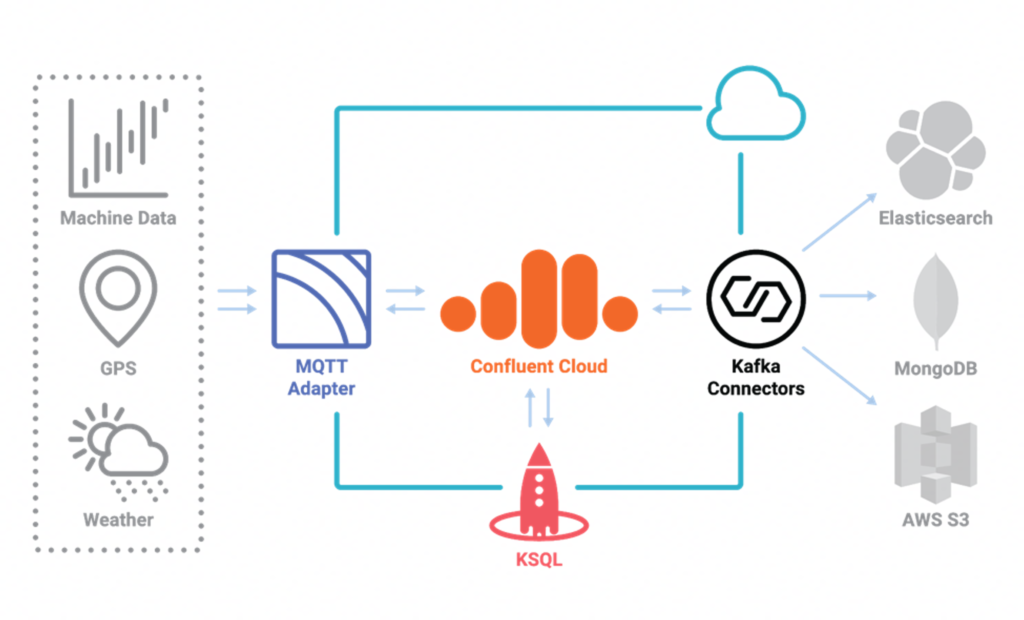

BAADER is a worldwide manufacturer of innovative machinery for the food processing industry. They run an IoT-based and data-driven food value chain on Confluent Cloud.

The Kafka-based infrastructure provides a single source of truth across the factories and regions across the food value chain. Business-critical operations are available 24/7 for tracking, calculations, alerts, etc.

The event streaming platform runs on Confluent Cloud. Hence, Baader can focus on building innovative business applications. The serverless Kafka infrastructure provides mission-critical SLAs and consumption-based pricing for all required capabilities: messaging, storage, data integration, and data processing.

MQTT provides connectivity to machines and GPS data from vehicles at the edge. Kafka Connect connectors integrate MQTT and other IT systems, such as Elasticsearch, MongoDB, and AWS S3. ksqlDB processes the data in motion continuously.
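To make the idea of continuous processing more concrete, here is a minimal sketch in plain Python. It is not Baader's implementation and it does not use ksqlDB or Kafka; it only mimics what a windowed aggregation with an alert could look like. The `Reading` fields, the machine names, the 60-second window, and the 80 °C threshold are all assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    machine_id: str       # hypothetical machine identifier
    timestamp_s: int      # event time in seconds
    temperature_c: float  # hypothetical sensor value


def windowed_averages(readings, window_s=60):
    """Group readings into tumbling windows per machine and average them."""
    sums = defaultdict(lambda: [0.0, 0])  # (machine, window start) -> [sum, count]
    for r in readings:
        window_start = (r.timestamp_s // window_s) * window_s
        bucket = sums[(r.machine_id, window_start)]
        bucket[0] += r.temperature_c
        bucket[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


def alerts(averages, threshold_c=80.0):
    """Return the (machine, window start) keys whose average exceeds the threshold."""
    return sorted(key for key, avg in averages.items() if avg > threshold_c)


readings = [
    Reading("filleting-1", 5, 70.0),
    Reading("filleting-1", 30, 74.0),
    Reading("filleting-1", 65, 90.0),
    Reading("filleting-1", 100, 86.0),
    Reading("packing-2", 10, 40.0),
]
averages = windowed_averages(readings)
print(alerts(averages))  # [('filleting-1', 60)]
```

In a real deployment, the readings would arrive from a Kafka topic fed by an MQTT connector, and the aggregation would run continuously instead of over a finished list.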

Check out my blog series about Kafka and MQTT for other related IoT use cases and examples.

Logistics and track & trace across the supply chain at Migros

Migros is Switzerland’s largest retail company, largest supermarket chain, and largest employer. They leverage MQTT and Kafka for real-time visualization and processing of logistics and transportation information.

As Migros explored in a Swiss Kafka meetup, they optimized their supply chain with a single data streaming pipeline, not just for real-time purposes but also for use cases that require replaying all historical events of the whole day.

The goal was to provide “one global optimum instead of four local optima”. Specific logistics use cases include forecasting the truck arrival time and rescheduling truck tours.
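The combination of replaying a day's events and forecasting truck arrival times can be sketched as follows. This is a plain-Python illustration under my own assumptions, not Migros' algorithm: the `TruckEvent` fields are hypothetical, and the estimate simply extrapolates the average speed observed so far.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TruckEvent:
    truck_id: str        # hypothetical truck identifier
    timestamp_min: int   # minutes since the start of the day
    km_remaining: float  # distance left to the destination


def estimate_arrivals(event_log):
    """Replay the log from the start and estimate the arrival minute per truck.

    Uses the average speed between the first and the latest event of each truck.
    """
    first, latest = {}, {}
    for event in event_log:  # log order is replay order
        first.setdefault(event.truck_id, event)
        latest[event.truck_id] = event

    etas = {}
    for truck_id, last in latest.items():
        start = first[truck_id]
        elapsed = last.timestamp_min - start.timestamp_min
        driven = start.km_remaining - last.km_remaining
        if elapsed <= 0 or driven <= 0:
            continue  # not enough movement to estimate a speed
        speed = driven / elapsed  # km per minute
        etas[truck_id] = last.timestamp_min + last.km_remaining / speed
    return etas


event_log = [
    TruckEvent("truck-7", 480, 120.0),
    TruckEvent("truck-9", 500, 40.0),
    TruckEvent("truck-7", 540, 60.0),
]
etas = estimate_arrivals(event_log)
print(etas)  # {'truck-7': 600.0}
```

Because the function only reads the log, replaying the same events again yields the same result, which is the property that makes reprocessing a full day of history practical.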

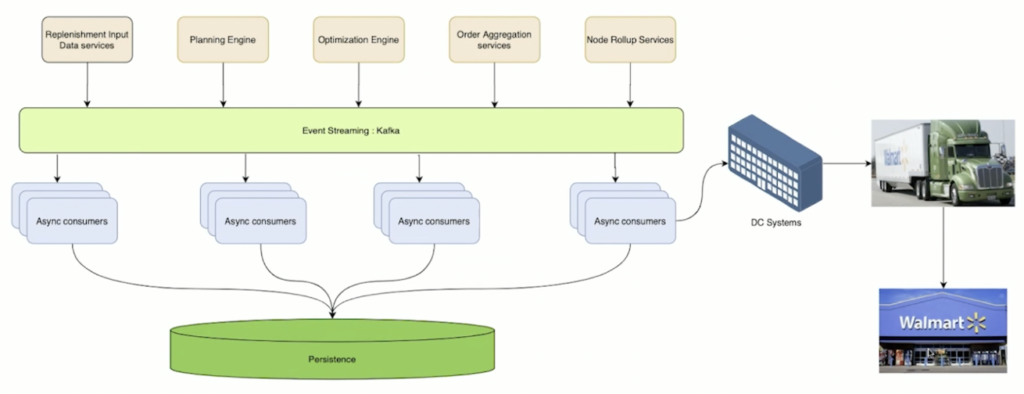

Optimized inventory management and replenishment at Walmart

Walmart operates Kafka across its end-to-end supply chain at a massive scale. The VP of Walmart Cloud says, “Walmart is a $500 billion in revenue company, so every second is worth millions of dollars. Having Confluent as our partner has been invaluable. Kafka and Confluent are the backbone of our digital omnichannel transformation and success at Walmart.”

The real-time supply chain includes Walmart’s inventory system. As part of that infrastructure, Walmart built a real-time replenishment system.

Here are a few notes from Walmart’s Kafka Summit talk covering this topic.

- Caters to millions of its online and walk-in customers

- Ensures optimal availability of needed assortment and timely delivery on online fulfillment

- 4+ billion messages in 3 hours generate an order plan for the entire network of Walmart stores with great accuracy for ordering decisions made daily

- Apache Kafka as the data hub and for real-time processing

- Apache Spark for micro-batches

The business value is enormous from a cost and operations perspective: cycle time reduction, accuracy, speed, reduced complexity, elasticity, scalability, improved resiliency, and reduced cost.
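To illustrate how sales events processed in micro-batches can turn into an order plan, here is a small plain-Python sketch. It is not Walmart's system and uses neither Kafka nor Spark; the item names, the batch size, the reorder point, and the target level are assumptions for illustration only.

```python
from collections import Counter
from itertools import islice


def micro_batches(events, batch_size):
    """Yield consecutive lists of at most batch_size events."""
    it = iter(events)
    while batch := list(islice(it, batch_size)):
        yield batch


def order_plan(stock, sales_events, reorder_point, target_level, batch_size=3):
    """Apply sales in micro-batches, then order up to target_level where stock is low."""
    on_hand = dict(stock)
    for batch in micro_batches(sales_events, batch_size):
        sold = Counter()
        for item, qty in batch:  # aggregate within the batch first
            sold[item] += qty
        for item, qty in sold.items():
            on_hand[item] = on_hand.get(item, 0) - qty
    return {
        item: target_level - qty
        for item, qty in on_hand.items()
        if qty <= reorder_point
    }


stock = {"milk": 20, "bread": 15}
sales = [("milk", 5), ("bread", 2), ("milk", 8), ("milk", 3), ("bread", 1)]
plan = order_plan(stock, sales, reorder_point=5, target_level=20)
print(plan)  # {'milk': 16}
```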

And to be clear: This is just one of many Kafka-powered use cases at Walmart! Check out other Kafka Summit presentations for different use cases and architectures.

Omnichannel order management in restaurants at Domino’s Pizza

Domino’s Pizza is a multinational pizza restaurant chain with ~17,000 stores. They successfully transformed from a traditional pizza company to a data-driven e-commerce organization. They focus on a data-first approach and a relentless focus on customer experience.

Domino’s Pizza provides real-time operation views to franchise owners. This includes KPIs, such as order volume by channel and store efficiency metrics across different ordering channels.
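A KPI such as order volume by channel is essentially a running count that is updated with every incoming order event. The following plain-Python sketch shows that idea; the `OperationsView` class, the store names, and the channel names are hypothetical and not taken from Domino's.

```python
from collections import Counter


class OperationsView:
    """Keeps a running order count per (store, channel) as events arrive."""

    def __init__(self):
        self.volume = Counter()

    def on_order(self, store, channel):
        # Called once per order event; updates the view incrementally.
        self.volume[(store, channel)] += 1

    def by_channel(self, store):
        # Current order volume per channel for one store.
        return {ch: n for (s, ch), n in self.volume.items() if s == store}


view = OperationsView()
orders = [
    ("store-1", "app"),
    ("store-1", "web"),
    ("store-1", "app"),
    ("store-2", "phone"),
]
for store, channel in orders:
    view.on_order(store, channel)

print(view.by_channel("store-1"))  # {'app': 2, 'web': 1}
```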

Domino’s Pizza mentions the following capabilities and benefits of their real-time data hub to optimize their supply chain:

- Improve store operational real-time analytics

- Support global expansion goals via legacy IT modernization

- Implement more personalized marketing campaigns

- Real-time single pane of glass

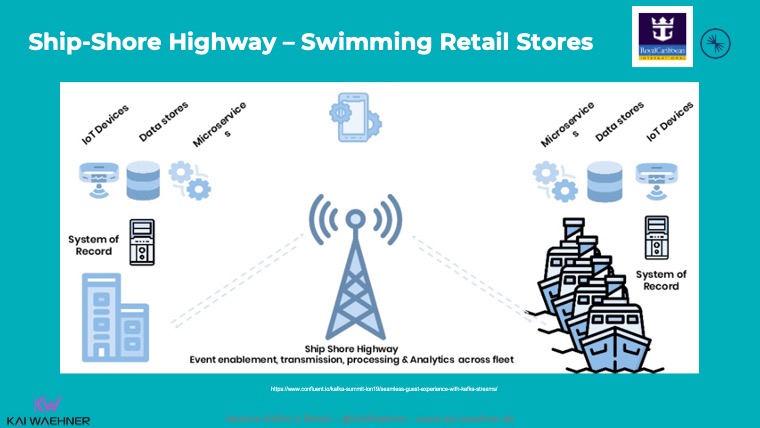

Location-based services and upselling at the point-of-sale at Royal Caribbean

Royal Caribbean is a cruise line. It operates the four largest passenger ships in the world. As of January 2021, the line operates twenty-four ships and has six additional ships on order.

Royal Caribbean implemented one of Kafka’s most famous use cases at the edge. Each cruise ship has a Kafka cluster running locally for use cases such as payment processing, loyalty information, and customer recommendations.

Edge computing on the ship is crucial for an efficient supply chain and excellent customer experience at Royal Caribbean:

- Bad and costly connectivity to the internet

- The requirement to do edge computing in real-time for a seamless customer experience and increased revenue

- Aggregation of all the cruise trips in the cloud for analytics and reporting to improve the customer experience, upsell opportunities, and many other business processes

Hence, a Kafka cluster on each ship enables local processing and reliable mission-critical workloads. The Kafka storage guarantees durability, no data loss, and guaranteed ordering of events – even though they are processed later. Only very critical data is sent directly to the cloud (if there is connectivity at all). All other information is replicated to the central Kafka cluster in the cloud when the ship arrives in a harbor for a few hours. A stable internet connection and high bandwidth are available before leaving for the next trip again.
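The store-and-forward pattern described above can be sketched in a few lines of plain Python. This is only an illustration of the concept, not Royal Caribbean's setup and not Kafka's replication mechanism: `EdgeLog`, its methods, and the event strings are hypothetical names.

```python
class EdgeLog:
    """Append-only log that remembers how far it has been replicated."""

    def __init__(self):
        self._events = []
        self._replicated_upto = 0  # offset of the next event to replicate

    def append(self, event):
        """Store an event locally and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def replicate(self, cloud_log, connected):
        """Copy pending events to the cloud in order; return how many were sent."""
        if not connected:
            return 0  # at sea: keep buffering locally
        pending = self._events[self._replicated_upto:]
        cloud_log.extend(pending)
        self._replicated_upto = len(self._events)
        return len(pending)


ship = EdgeLog()
cloud = []
for event in ["payment:42", "loyalty:7", "payment:43"]:
    ship.append(event)

sent_at_sea = ship.replicate(cloud, connected=False)
sent_in_harbor = ship.replicate(cloud, connected=True)
sent_again = ship.replicate(cloud, connected=True)
print(sent_at_sea, sent_in_harbor, sent_again, cloud)
```

Tracking the replication offset is what keeps the order intact and avoids sending the same event twice once the connection comes back.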

Learn more about use cases and architecture for Kafka at the edge in its dedicated blog post.

Recommendations and discounts using the loyalty platform at Albertsons

Albertsons is the second-largest American grocery company with 2200+ stores and 290,000+ employees. They also operate pharmacies in many stores.

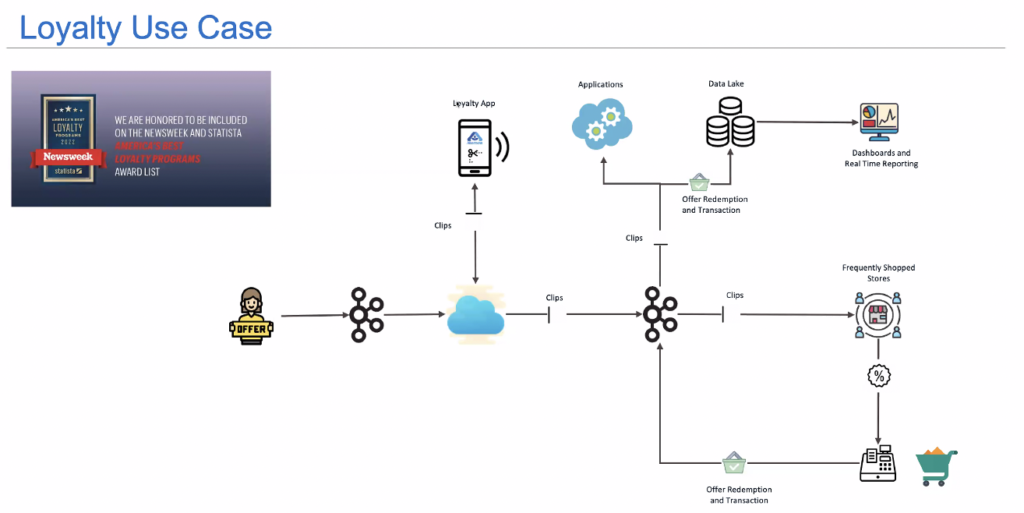

Albertsons operates Kafka powered by Confluent as the real-time data hub for various use cases, including:

- Inventory updates from 2200 stores to the cloud services in near real-time to maintain visibility in the health of the stores and to ensure well managed out-of-stock and substitution recommendations for digital customers

- Distributing offers and customer clips to each store in near real-time and improving store checkout experience.

- Feed the data in real-time to analytics engines for supply chain order forecasts, warehouse order management, delivery, labor forecasting and management, demand planning, and inventory management.

- Ingesting transactions near real-time to the data lake for batch workloads to generate dashboards for associates and business, training data models, and hyper-personalization.

As an example, here is how Albertsons’ data streaming architecture empowers its real-time loyalty platform:

Learn more about Albertsons’ innovative real-time data hub by watching the on-demand webinar I did with two of their leading architects recently.

Payment processing and fraud detection at Grab

Grab is a mobility service in Asia, similar to Uber and Lyft in the United States or FREE NOW (formerly mytaxi) in Europe. The Kafka ecosystem heavily powers all of them. Like FREE NOW, Grab leverages serverless Confluent Cloud to focus on business logic.

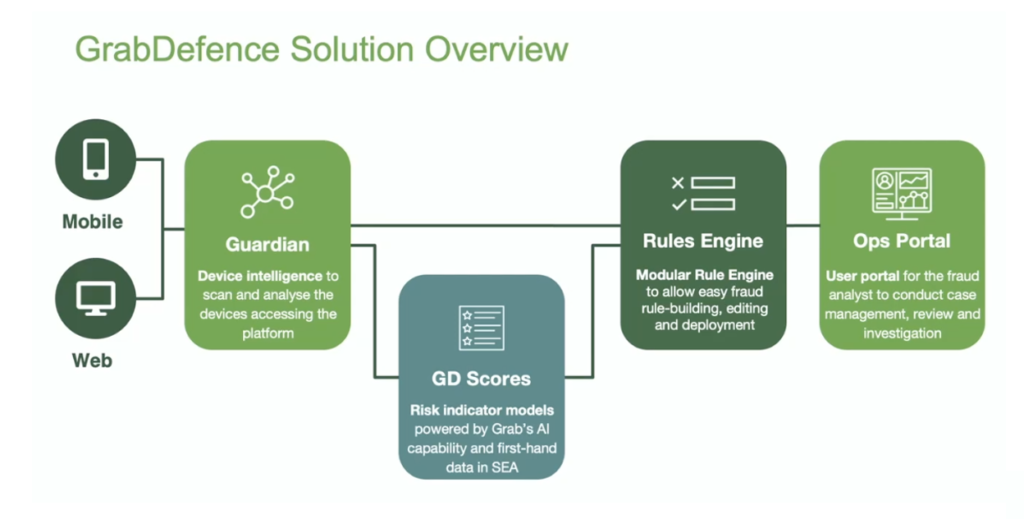

As part of this, Grab has built GrabDefence. This internal service provides a mission-critical payment and fraud detection platform. The platform leverages Kafka Streams and Machine Learning for stateful stream processing and real-time fraud detection at scale.

Grab performs billions of fraud and safety detections daily across millions of transactions. Companies in Southeast Asia lose 1.6% to fraud. Therefore, Grab’s data science and engineering team built a platform to search for anomalous and suspicious transactions and identify high-risk individuals.

Here is one fraud example: An individual who pretends to be both the driver and passenger and makes cashless payments to get promotions.
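A stateful check for this pattern could look like the following plain-Python sketch. It is a simplified, hypothetical rule and not how GrabDefence works: I assume each trip event carries a device identifier for driver and passenger plus a promotion flag, and I flag a device once it shows up on both sides of at least two promotional trips.

```python
from collections import defaultdict


def flag_self_rides(trips, min_trips=2):
    """Flag devices used as both driver and passenger on repeated promo trips."""
    counts = defaultdict(int)  # state: suspicious promo trips per device
    flagged = set()
    for trip in trips:
        same_device = trip["driver_device"] == trip["passenger_device"]
        if trip["promo_applied"] and same_device:
            device = trip["driver_device"]
            counts[device] += 1
            if counts[device] >= min_trips:
                flagged.add(device)
    return flagged


trips = [
    {"driver_device": "dev-A", "passenger_device": "dev-A", "promo_applied": True},
    {"driver_device": "dev-B", "passenger_device": "dev-C", "promo_applied": True},
    {"driver_device": "dev-A", "passenger_device": "dev-A", "promo_applied": True},
    {"driver_device": "dev-D", "passenger_device": "dev-D", "promo_applied": False},
]
flagged = flag_self_rides(trips)
print(flagged)  # {'dev-A'}
```

A production system would keep this state in a stream processor and combine many more signals, typically with a trained model instead of a fixed threshold.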

Delivery and pickup at Instacart

Instacart is a grocery delivery and pickup service in the United States and Canada. The service orders groceries from participating retailers, with the shopping being done by a personal shopper.

Instacart requires a platform that enables elastic scale and fast, agile internal adoption of real-time data processing. Hence, Confluent Cloud was chosen to focus on business logic and roll out new features with fast time-to-market. As Instacart said, during the Covid pandemic, they had to “handle ten years’ worth of growth in six weeks”. Very impressive and only possible with cloud-native and serverless data streaming.

Learn more in the recent Kafka Summit interview between Confluent co-founder Jun Rao and Instacart.

Real-time data streaming with Kafka beats slow data everywhere in the supply chain

This post showed various real-world deployments where real-time information increases operational efficiency, reduces the cost, or improves the customer experience.

Whether your business domain cares about manufacturing, logistics, stores, delivery, or customer experience and loyalty, data streaming with the Apache Kafka ecosystem provides the capability to be innovative at any scale. And in the cloud, serverless Kafka makes it even easier to focus on time to market and business value.

How do you optimize your supply chain? Do you leverage data streaming? Is Apache Kafka the tool of choice for real-time data processing? Or what data streaming technologies do you use? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.