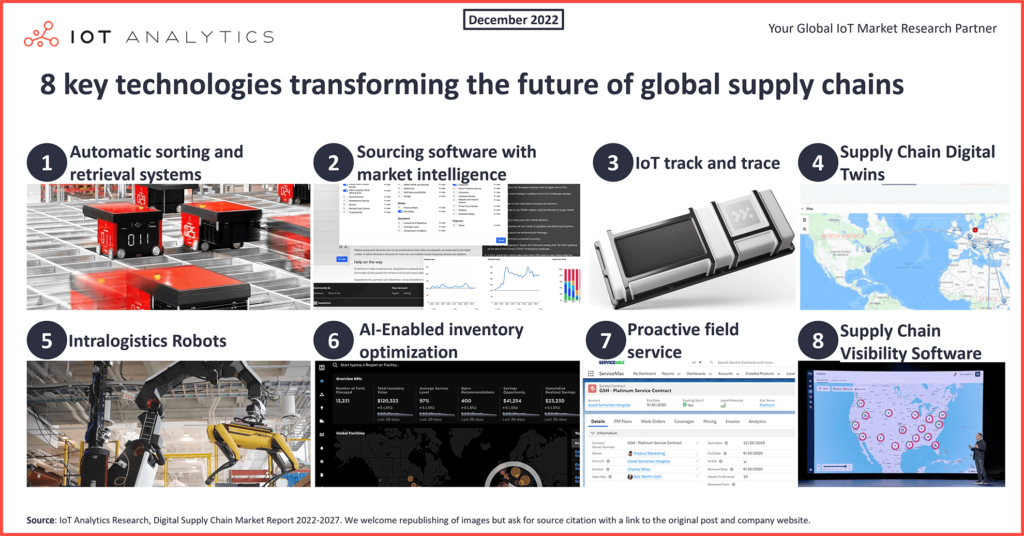

A supply chain is a complex logistics system that converts raw materials into finished products distributed to end-consumers. The research company IoT Analytics found eight key technologies transforming the future of global supply chains. This article explores how data streaming helps to innovate in this area. Real-world case studies from global players such as BMW, Bosch, and Walmart show the value of real-time data streaming to improve the supply chain by building use cases such as automated intralogistics, track and trace of vehicles, and proactive and context-specific decision-making with MES and ERP integration.

8 key technologies transforming the future of global supply chains

“The Digital Supply Chain market is starting to accelerate, according to new research by IoT Analytics. Eight supply chain technology innovations are helping to make global supply chains more robust, including AS/RS technology, intralogistics robots, IoT track and trace, AI-enabled software, and supply chain digital twins.”

“26 weeks (or half a year)—that is how long companies have to wait for their semiconductor orders, on average. In some cases, it takes much longer. Before the current supply shortage, the average had been approximately 14 weeks—significantly shorter. This is just one example of supply chain issues stressing the economy worldwide.”

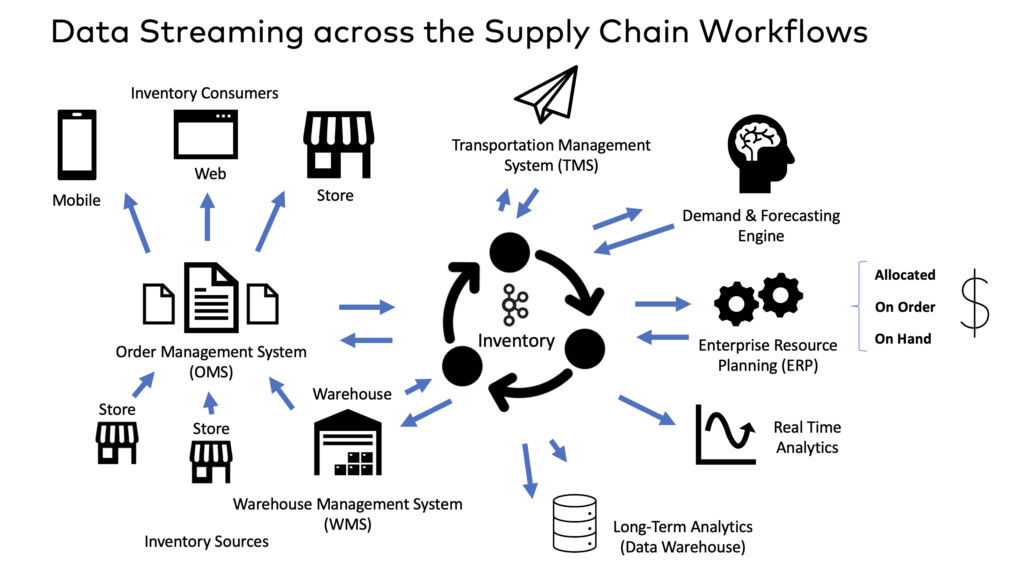

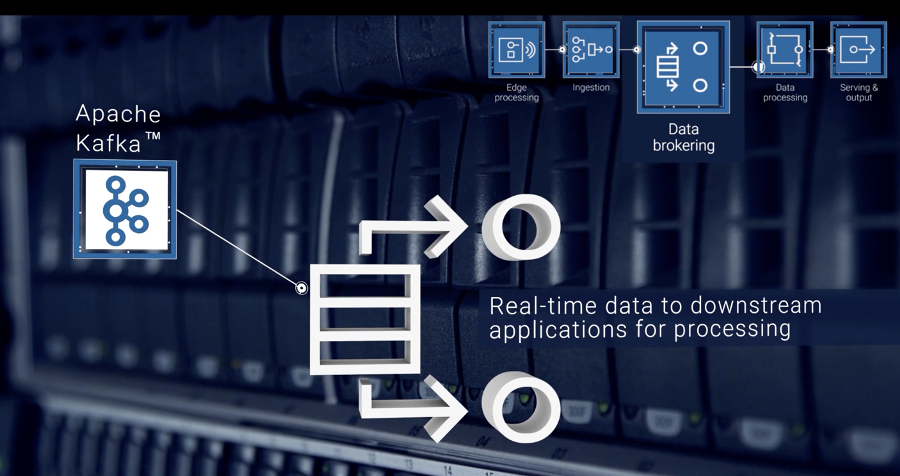

Data Streaming with Apache Kafka across the global supply chain

Real-time data beats slow data across global supply chains. That is true in any industry. Most modern supply chains rely on Apache Kafka, the de facto standard for data streaming, to improve the information flow across internal and external systems.

I have written a lot about data streaming with Apache Kafka and its broader ecosystem in the past:

- Efficiency: Apache Kafka for Supply Chain Management (SCM) Optimization

- Case Studies: Real-Time Supply Chain with Apache Kafka in the Food and Retail Industry

- Real-Time: Supply Chain Control Tower powered by Kafka

- Innovation: Building a Postmodern ERP with Data Streaming

To be clear: Data Streaming is a concept, and Apache Kafka is a technology to integrate and correlate incoming and outgoing information. Data streaming is not competing with other supply chain products or technologies. Apache Kafka is either part of the solution (e.g., within an ERP or MES cloud service) or connects the different interfaces (e.g., sensors, robots, IT applications, analytics platforms).

With this background, let’s look at IoT Analytics’ 8 key technologies transforming the future of global supply chains and how data streaming helps here. The following real-world examples show that the data streaming part I mention in each section is not the key technology IoT Analytics mentioned, but part of that overall solution or implementation.

1. Automated storage and retrieval systems

The VDMA defines intralogistics as the entire process in a company that has to do with the connection and interaction of internal systems for material flow, automated guided vehicles, logistics, production, and goods transport on the company premises.

Automated storage and retrieval systems (AS/RS) replace conveyors, forklifts, and racks. These systems are purpose-built machines with embedded software. However, data synchronization between these machines and the overall logistics process (which includes many other applications) is critical to automate and improve intralogistics as much as possible.

Intralogistics combines AS/RS with standard software, such as the warehouse management system (WMS), enterprise resource planning (ERP), and manufacturing execution system (MES). Most of these systems connect in real time to various sensors and applications. Data streaming is a perfect fit for such standard software. Hence, most modern, cloud-native MES and ERP systems leverage data streaming powered by Apache Kafka.

Critical Manufacturing is a leading MES powered by Apache Kafka. It combines MES transaction workloads and big data IoT analytics. Data from AS/RS and other IoT systems is ingested, stored, processed, transformed, and analyzed in real time. Data Streaming provides a durable, distributed, highly scalable unified analytics platform for large-scale online or offline data processing embedded into the MES.

2. Sourcing software with market intelligence

IoT Analytics defines sourcing and supplier management software as a tool that helps companies find suppliers and ensure they have the right components available in the right quantity to maintain their activities. The latest innovation in this segment is the addition of market intelligence that allows the procurement team to act more strategically.

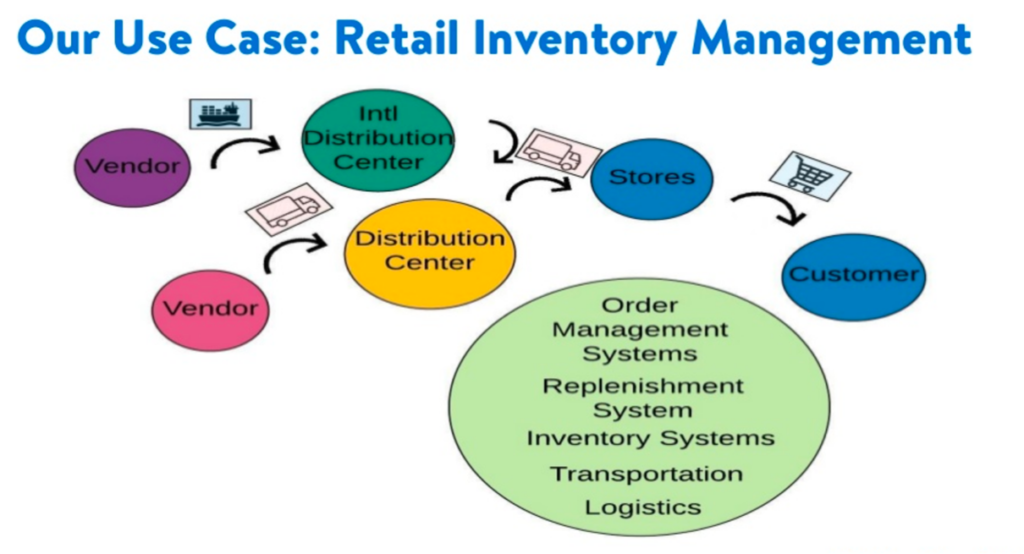

Walmart leverages data streaming across the entire supply chain, including sourcing and procurement, to enable real-time inventory management, cost-efficient procurement, and a better customer experience.

Here is a quote from the VP of Walmart Cloud: “Walmart is a $500 billion in revenue company, so every second is worth millions of dollars. Having Confluent as our partner has been invaluable. Kafka and Confluent are the backbone of our digital omnichannel transformation and success at Walmart.”

3. IoT track and trace devices

In the distribution and logistics of many types of products, track and trace determines the current and past locations (and other information) of a unique item or property. IoT devices provide sensors and connectivity modules (e.g., via a cellular network).
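To make the idea concrete, here is a minimal Python sketch of track and trace as an event log per item. It is an illustration only: the `LocationEvent` fields, the `TrackAndTrace` class, and the item ID are hypothetical and not taken from any vendor implementation. In a real deployment, the events would arrive through a data streaming platform rather than from in-memory calls.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LocationEvent:
    item_id: str    # unique item, e.g. a serial number (hypothetical format)
    timestamp: int  # event time as epoch seconds
    location: str   # e.g. "warehouse" or "job-site"


class TrackAndTrace:
    """Keeps the current and past locations of each unique item."""

    def __init__(self):
        self._history = defaultdict(list)  # item_id -> events sorted by time

    def apply(self, event):
        # Events may arrive out of order, so re-sort the history by event time.
        history = self._history[event.item_id]
        history.append(event)
        history.sort(key=lambda e: e.timestamp)

    def current_location(self, item_id):
        history = self._history.get(item_id)
        return history[-1].location if history else None

    def trace(self, item_id):
        return [e.location for e in self._history.get(item_id, [])]


tracker = TrackAndTrace()
tracker.apply(LocationEvent("drill-42", 100, "warehouse"))
tracker.apply(LocationEvent("drill-42", 300, "job-site"))
tracker.apply(LocationEvent("drill-42", 200, "truck"))  # late arrival
```

Sorting by event time rather than arrival time is the design choice that matters here: devices on cellular networks can deliver readings late, and the trace should still reflect the physical order of events.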

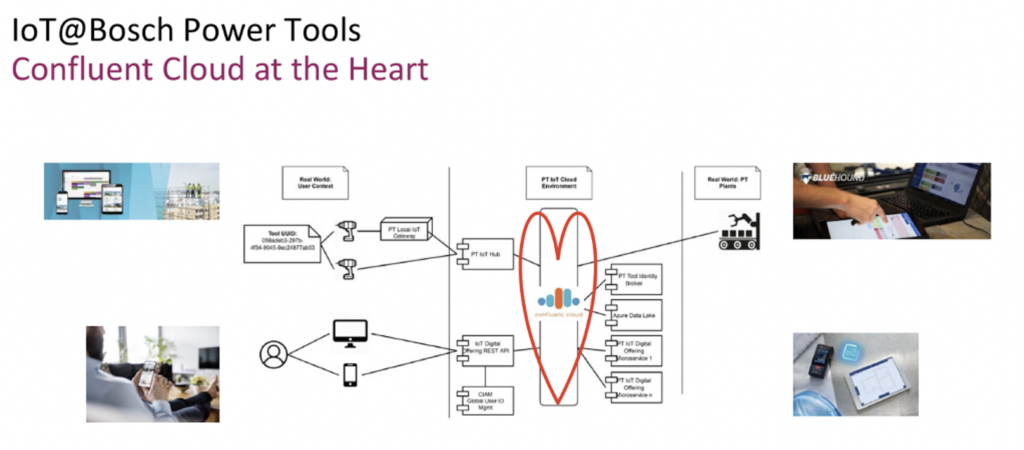

Data Streaming with Apache Kafka is a key enabler for IoT at Bosch Power Tools:

Bosch tracks, manages, and locates tools and other equipment anytime and anywhere from the warehouse to the job site with Confluent Cloud.

4. Supply chain digital twins

Digital twin refers to a digital replica of potential and actual physical assets (physical twin), processes, people, places, systems, and devices.

The term ‘Digital Twin’ usually means the copy of a single asset. In the real world, many digital twins exist. The term ‘Digital Thread’ spans the entire life cycle of one or more digital twins.

In a supply chain context, the digital thread is a digital model of the supply chain process to enable monitoring, simulation, and predictions.

Various IoT architectures exist for building a digital twin or digital thread with data streaming.

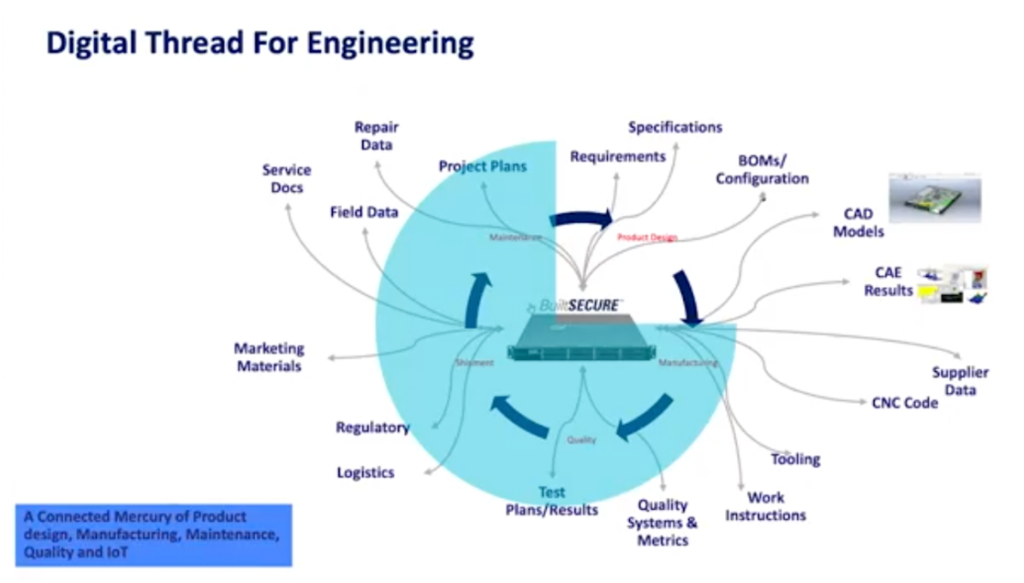

Mercury Systems is a global technology company serving the aerospace and defense industry. Mercury built a Digital Thread powered by data streaming to bring together design and product information across the product life cycle.

The following technologies are included:

- A Mendix-based portal with combined data from PLM/ERP/MES

- Confluent for cloud-native and reliable real-time event streaming across applications

- AI and ML technologies

The digital thread lets Mercury Systems view all data in one location using common tools. Further benefits of data streaming are faster time to market, the ability to scale, an improved innovation pipeline, and reduced cost of failure.

5. Intralogistics robots

Smart factories include various robots to automate shop floor processes and produce tangible goods. For instance, autonomous mobile robots (AMRs) are vehicles that use onboard sensors to move materials around a facility autonomously. Many car makers use these robots in their factories already. I visited the Mercedes “Factory 56” in 2022. This factory is a lighthouse project of Mercedes. It no longer uses paper, only digital services. AMRs drive around you while you look at the production line and workers.

Robots cannot work alone. They need to communicate with other systems and applications to bring the right pieces to equipment and workers along the production line.

BMW Group leverages data streaming to connect all data from its smart factories to the cloud. Robots ingest the data into the fully managed Kafka cluster in the cloud. BMW makes all data generated by its 30+ production facilities and worldwide sales network available in real-time to anyone across the global business.

BMW’s use cases include:

- Logistics and supply chain in global plants

- The right stock in place (physically and in ERP systems like SAP)

- OT / IoT integration with open standards like OPC-UA

- Just in time, just in sequence manufacturing

Here is why BMW chose data streaming for these (and many other) use cases:

- Why Kafka? Decoupling. Transparency. Innovation.

- Why Confluent? Stability is key in manufacturing.

- Why Confluent Cloud? Focus on business logic.

- Decoupling between logistics and production systems

6. AI-enabled inventory optimization

IoT Analytics describes it as follows: “Modern inventory planning is a very data-heavy task with companies compiling millions of data points. AI-enabled inventory optimization software is helping companies crunch these numbers much faster than before. It automates, streamlines, and controls the in- and outbound inventory flows and improves the process with AI capabilities.”

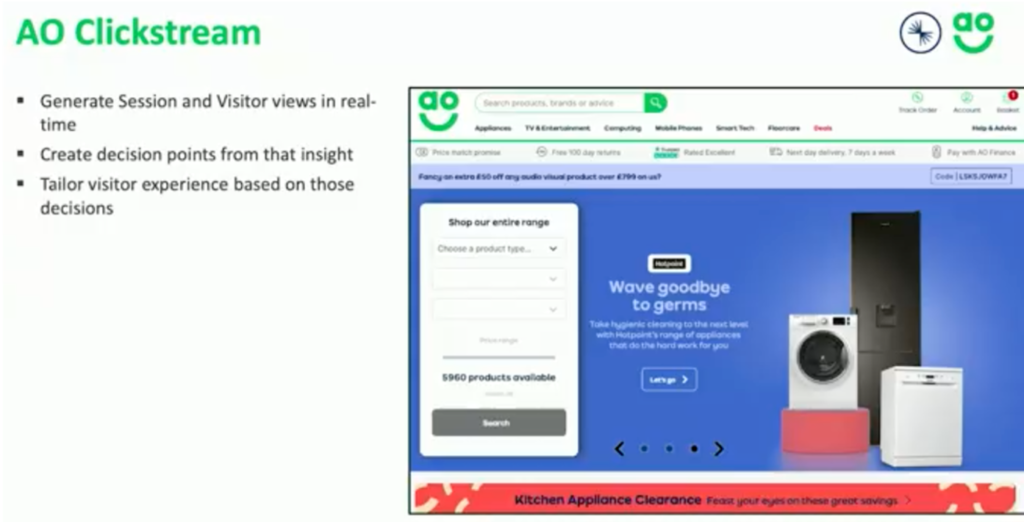

AO.com is one of many retailers that leverage data streaming for real-time inventory optimization across the supply chain. The electrical retailer provides a hyper-personalized online retail experience, turning each customer visit into a one-on-one marketing opportunity. This is only possible because AO.com correlates inventory data with historical customer data and real-time digital signals like a click in the mobile app.

Context-specific pricing, discounts, upselling, and other techniques are only possible because of AI-powered real-time decision-making based on inventory information across the supply chain. Information from warehouses, department stores, suppliers, shipping, and similar inventory-related data sources must be correlated to maximize customer satisfaction and revenue growth and increase customer conversions.
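The correlation of inventory data with real-time click events can be sketched as a join between a stream and a continuously updated table. The following Python example is a simplified, in-memory illustration under stated assumptions: the `InventoryJoin` class, the `Offer` type, the low-stock threshold, and the messages are all hypothetical and do not describe AO.com's actual logic. In a Kafka-based deployment, a stream-table join in Kafka Streams or ksqlDB would play a similar role.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    customer_id: str
    sku: str
    message: str


class InventoryJoin:
    """Joins click events against the latest known stock level per SKU."""

    def __init__(self, low_stock_threshold=5):
        self.low_stock_threshold = low_stock_threshold  # hypothetical rule
        self._stock = {}  # sku -> latest stock level (the "table" side)

    def on_inventory_update(self, sku, quantity):
        # Each update overwrites the previous value, like a changelog table.
        self._stock[sku] = quantity

    def on_click(self, customer_id, sku):
        # The "stream" side: look up the current stock at the time of the click.
        quantity = self._stock.get(sku)
        if quantity is None or quantity <= 0:
            return None  # unknown or out of stock: do not promote the item
        if quantity <= self.low_stock_threshold:
            return Offer(customer_id, sku, f"Only {quantity} left in stock")
        return Offer(customer_id, sku, "In stock, ready to ship")


join = InventoryJoin(low_stock_threshold=5)
join.on_inventory_update("washer-1", 3)
offer = join.on_click("customer-7", "washer-1")
```

The point of the sketch is the timing: the decision uses the stock level that is current when the click happens, not a value from a nightly batch.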

7. Proactive field service

IoT Analytics describes a pain point we have all experienced in telco and many other industries: “IoT-based proactive field service software provides a step up from traditional field service of assets running in the field. While traditional field service software mostly works reactively by allocating field service technicians to a site after a failure has been reported, proactive field service uses IoT and predictive maintenance to send field service technicians to a remote site before the asset fails.”

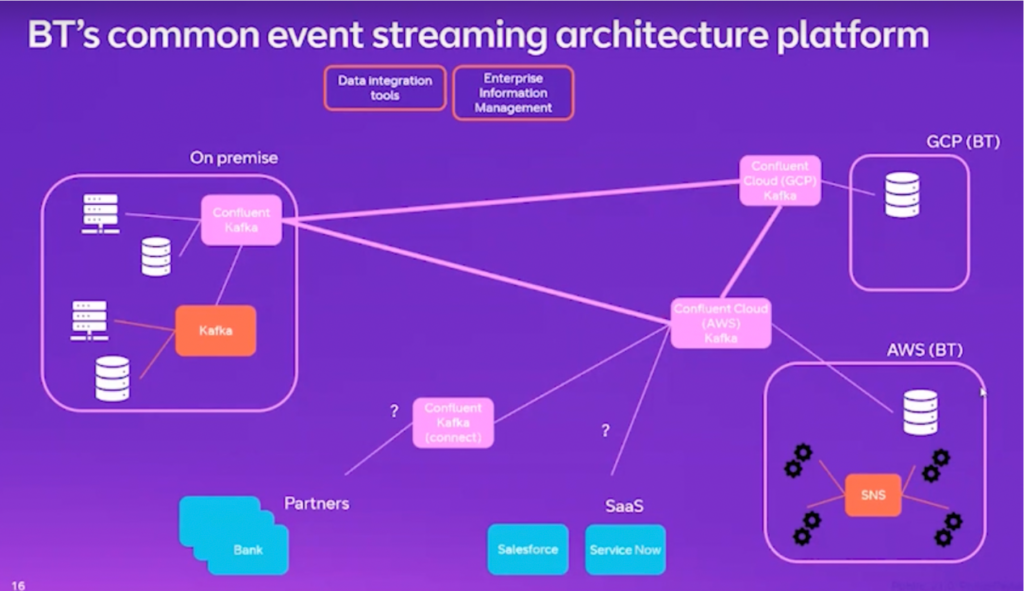

British Telecom (BT) is a telco that operates in ~180 countries. It is the largest telecommunications enterprise in the UK. BT leverages data streaming to expose real-time data events externally to improve the field service. Consequently, this provides a better customer experience and creates additional revenue streams.

For instance, a broadband outage can be recognized when it happens or even before it happens because of real-time data. This enables proactive field service. Customers receive notifications in real time. Premium customers even receive extra data on their phone to tether a connection while the outage persists.
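One simple way to recognize a developing outage from real-time events is to count error events per line within a sliding time window. The Python sketch below illustrates that idea only. The `OutageDetector` class, the window size, and the error threshold are hypothetical, and it assumes events for a given line arrive in timestamp order. It does not describe BT's actual detection logic.

```python
from collections import defaultdict, deque


class OutageDetector:
    """Flags a line once it reports too many errors within a sliding window."""

    def __init__(self, window_seconds=60, max_errors=3):
        self.window_seconds = window_seconds
        self.max_errors = max_errors
        self._errors = defaultdict(deque)  # line_id -> recent error timestamps

    def on_error(self, line_id, timestamp):
        """Record an error event; return True if the line should be flagged."""
        recent = self._errors[line_id]
        recent.append(timestamp)
        # Drop errors that have fallen out of the window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_errors


detector = OutageDetector(window_seconds=60, max_errors=3)
flags = [detector.on_error("line-1", t) for t in (0, 10, 20)]
```

A flagged line could then trigger a customer notification or a technician dispatch before the customer reports a failure.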

British Telecom’s enterprise architecture comprises a hybrid and multi-cloud data streaming deployment.

8. Supply chain visibility software

Supply chain visibility is crucial in creating supply chain networks that will survive disruptions like the global COVID-19 pandemic or the Ukraine war. Questions like “when will my supplies arrive?” or “which alternative supplier has stock to ship?” are only answerable with real-time information across the supply chain.

BAADER is a worldwide manufacturer of innovative machinery for the food processing industry. They run an IoT-based and data-driven food value chain on Confluent Cloud.

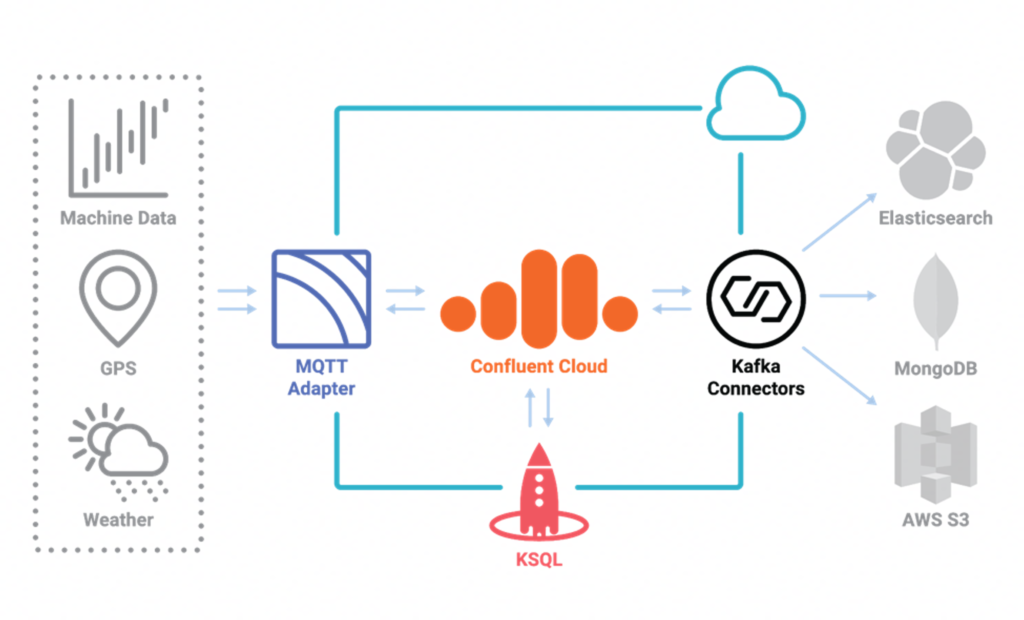

The Kafka-based infrastructure runs as a fully managed service in the cloud to provide end-to-end supply chain visibility. A single source of truth shows the information flow across the factories and regions of the food value chain.

Business-critical operations are available 24/7 for tracking, calculations, alerts, etc.

From a technical perspective, MQTT provides connectivity to machines and GPS data from vehicles. ksqlDB processes the data in motion continuously directly after the ingestion from the edge. Kafka Connect connectors integrate applications and IT systems, such as Elasticsearch, MongoDB, and AWS S3.
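The first step of such a pipeline, turning an MQTT message from the edge into a keyed record for the streaming platform, can be sketched in a few lines of Python. This is a sketch under stated assumptions, not BAADER's implementation: the MQTT topic layout `<source_type>/<source_id>/<measurement>`, the `-events` topic naming, and the function `mqtt_to_record` are hypothetical, the payload is assumed to be a JSON object, and no MQTT or Kafka client library is involved.

```python
import json


def mqtt_to_record(mqtt_topic, payload):
    """Map an MQTT message to a (topic, key, value) triple for a keyed log."""
    # Hypothetical MQTT topic layout: <source_type>/<source_id>/<measurement>
    parts = mqtt_topic.split("/")
    if len(parts) != 3:
        raise ValueError(f"unexpected MQTT topic: {mqtt_topic!r}")
    source_type, source_id, measurement = parts

    value = json.loads(payload)  # payload is assumed to be a JSON object
    value["measurement"] = measurement

    # Using the source id as the key groups all events of one machine or
    # vehicle together, so they can be processed in order downstream.
    return f"{source_type}-events", source_id, value


record = mqtt_to_record("vehicles/truck-7/gps", b'{"lat": 53.9, "lon": 10.7}')
```

Keying by machine or vehicle ID is a common choice because Kafka preserves ordering among records that land in the same partition, and records with the same key are routed to the same partition by the default partitioner.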

Data streaming optimizes the global supply chain

Innovative IoT technologies transform the global supply chain. End-to-end visibility in real time, cost reduction, and better customer experiences are the consequence.

Case studies from companies across different industries showed how innovative IoT technologies and data streaming with the de facto standard Apache Kafka enable innovation while keeping the different business units and technologies decoupled from each other. Only a scalable and distributed real-time data streaming platform empowers such innovation.

How do you leverage data streaming to improve the supply chain? What are your use cases and architectures? Which IoT technologies do you integrate with Apache Kafka? Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.