Enterprise resource planning (ERP) has existed for many years. It is often monolithic, complex, proprietary, batch-oriented, and not scalable. Postmodern ERP represents the next generation of ERP architectures. It is real-time, scalable, and open. A Postmodern ERP uses a combination of open source technologies and proprietary standard software. This blog post explores why and how companies, both software vendors and end-users, leverage event streaming with Apache Kafka to implement a Postmodern ERP.

What is ERP (Enterprise Resource Planning)?

Let’s define the term “ERP” first. This is not an easy task, as the term is used both for a concept and for various standard software products.

Enterprise resource planning (ERP) is the integrated management of main business processes, often in real-time and mediated by software and technology.

ERP is usually referred to as a category of business management software, typically a suite of integrated applications, that an organization can use to collect, store, manage, and interpret data from many business activities.

ERP provides an integrated and continuously updated view of core business processes using common databases. These systems track business resources – cash, raw materials, production capacity – and the status of business commitments: orders, purchase orders, and payroll. The applications that make up the system share data across the various departments (manufacturing, purchasing, sales, accounting, etc.) that provide the data. ERP facilitates information flow between all business functions and manages connections to outside stakeholders.

It is important to understand that ERP is not just for manufacturing but is relevant across various business domains. Hence, Supply Chain Management (SCM) is orthogonal to ERP.

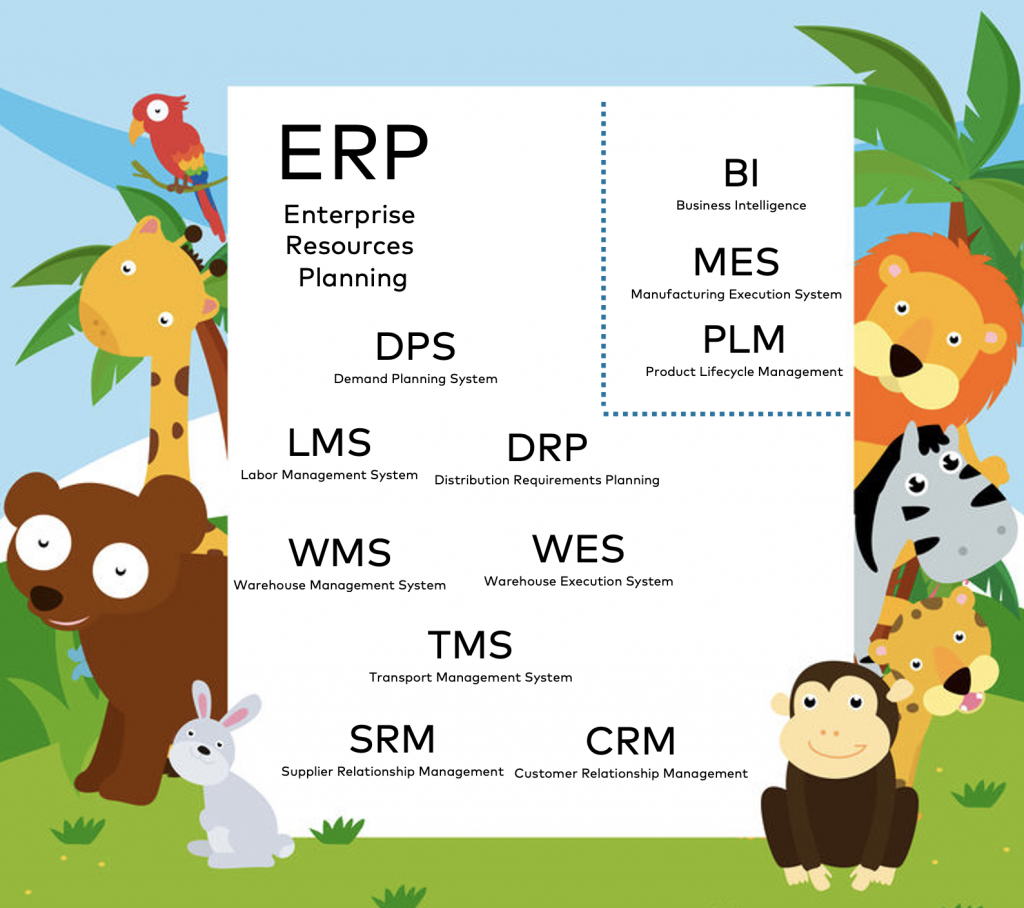

ERP is a Zoo of Concepts, Technologies, and Products

An ERP is a key part of every supply chain where tangible goods are produced, and it typically spans various products. For that reason, an ERP is very complex in most cases. It is usually not just one product, but a zoo of different components and technologies:

Example: SAP ERP – More than a Single Product…

SAP is the leading ERP vendor. I explored SAP, its product portfolio, and integration options for Kafka in a separate blog post: “Kafka SAP Integration – APIs, Tools, Connector, ERP et al.”

Check that out if you want to get deeper into the complexity of a “single product and vendor”. You will be surprised how many technologies and integration options exist to integrate with SAP. SAP’s stack includes plenty of homegrown products like SAP ERP and acquisitions with their own codebase, including Ariba for supplier network, hybris for e-commerce solutions, Concur for travel & expense management, and Qualtrics for experience management. The article “The ERP is Dead. Long live the Distributed Planning System” from the SAP blog goes in a similar direction.

ERP Requirements are Changing…

This is not different for other big vendors. For instance, if you explore the Oracle website, you will also find a confusing product matrix. 🙂

That’s the status quo of most ERP vendors. However, things change due to shifting requirements: Digital Transformation, Cloud, Internet of Things (IoT), Microservices, Big Data, etc. You know what I mean… Requirements for standard software are changing massively.

Every ERP vendor (that wants to survive) is working on a Postmodern ERP these days by upgrading its existing software products or writing a completely new product – that’s often easier. Let’s explore what a Postmodern ERP is in the next section.

Introducing the Postmodern ERP

The term “Postmodern ERP” was coined by Gartner several years ago.

From the Gartner Glossary:

“Postmodern ERP is a technology strategy that automates and links administrative and operational business capabilities (such as finance, HR, purchasing, manufacturing, and distribution) with appropriate levels of integration that balance the benefits of vendor-delivered integration against business flexibility and agility.”

This definition shows the tight relation to other non-Core-ERP systems, the company’s whole supply chain, and partner systems.

The Architecture of a Postmodern ERP

According to Gartner’s definition of the postmodern ERP strategy, legacy, monolithic and highly customized ERP suites, in which all parts are heavily reliant on each other, should sooner or later be replaced by a mixture of both cloud-based and on-premises applications, which are more loosely coupled and can be easily exchanged if needed. Hint: This sounds a lot like Kafka, doesn’t it?

The basic idea is that there should still be a core ERP solution that covers the most important business functions, while other functions are covered by specialist software solutions that merely extend the core ERP.

There is, however, no golden rule as to what business functions should be part of the core ERP and what should be covered by supplementary solutions. According to Gartner, every company must define its own postmodern ERP strategy, based on its internal and external needs, operations, and processes. For example, one company may decide that the core ERP solution should cover those business processes that must stay behind the firewall and choose to leave its core ERP on-premises. Another company may decide to host the core ERP solution in the cloud and keep only a few ERP modules on-premises as supplementary solutions.

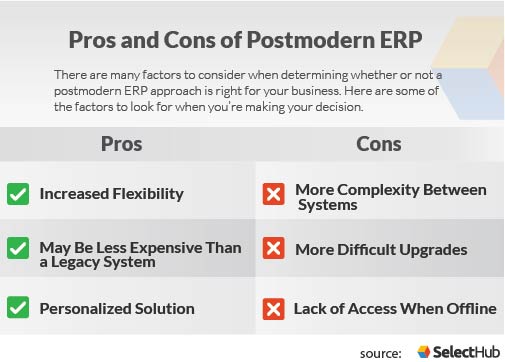

Pros and Cons of a Postmodern ERP

SelectHub explores the pros and cons of a Postmodern ERP compared to legacy ERPs:

The pros are pretty obvious and are the main motivation for companies that want or need to move away from their legacy ERP system. Software is eating the world. Companies (need to) become more flexible, elastic, and scalable. Applications (need to) become more personalized and context-specific, and all of that (needs to) happen in real-time. There is no way around a Postmodern ERP and all the related supply chain processes to meet these requirements.

The main benefits that companies will gain from implementing a Postmodern ERP strategy are speed and flexibility when reacting to unexpected changes in business processes or on the organizational level. With most applications having a relatively loose connection, it is fairly easy to replace or upgrade them whenever necessary. Companies can also select and combine cloud-based and on-premises solutions that are most suited for their ERP needs.

The cons are more interesting because they need to be solved to deploy a Postmodern ERP successfully. The key downside of a postmodern ERP is that it will most likely lead to an increased number of software vendors that companies will have to manage and pose additional integration challenges for central IT.

Coincidentally, I have regularly had similar discussions with customers over the past quarters. More and more companies adopt Apache Kafka to solve these challenges and build a Postmodern ERP with flexible, scalable supply chain processes.

Kafka as the Foundation of a Postmodern ERP

If you follow my blog and presentations, you know that Kafka is used in all areas where an ERP is relevant, for instance, Industrial IoT (IIoT), Supply Chain Management, Edge Analytics, and many other scenarios. Check out “Kafka in Industry 4.0 and Manufacturing” to learn more details about various use cases.

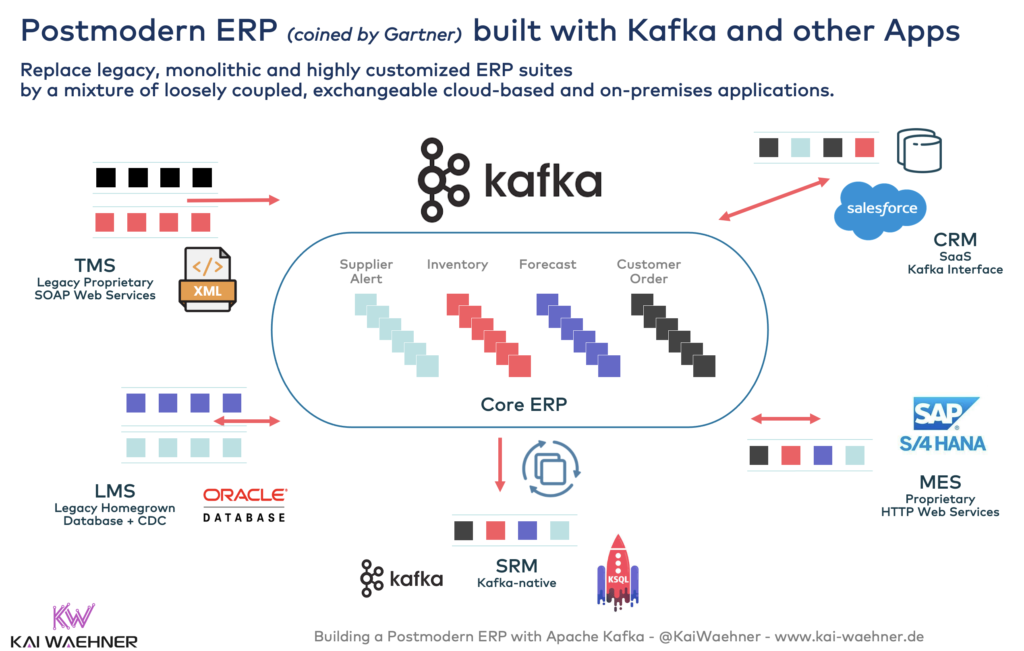

Example: A Postmodern ERP built on top of Kafka

A Postmodern ERP built on top of Apache Kafka is part of this story:

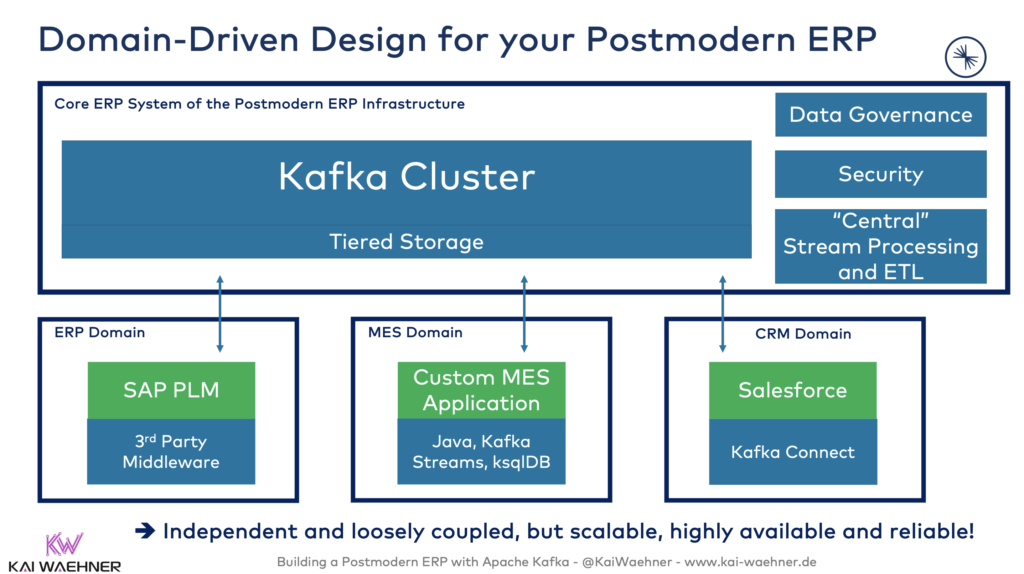

This architecture shows a Postmodern ERP with various components. Note that the Core ERP is built on Apache Kafka. Many other systems and applications are integrated.

Each component of the Postmodern ERP has a different integration paradigm:

- The TMS (Transportation Management System) is a legacy COTS application providing only a legacy XML-based SOAP Web Service interface. The integration is synchronous and not scalable but works for small transactional data sets.

- The LMS (Labor Management System) is a legacy homegrown application. The integration is implemented via Kafka Connect and a CDC (Change-Data-Capture) connector to push changes from the relational Oracle database in real-time into Kafka.

- The SRM (Supplier Relationship Management) is a modern application built on top of Kafka itself. Integration with the Core ERP is implemented with Kafka-native replication technologies like MirrorMaker 2, Confluent Replicator, or Confluent Cluster Linking to provide a scalable real-time integration.

- The MES (Manufacturing Execution System) is an SAP COTS product and part of the SAP S/4HANA product portfolio. The integration options include REST APIs, the Eventing API, and Java APIs. The right choice depends on the use case. Again, read “Kafka SAP Integration – APIs, Tools, Connector, ERP et al.” for the longer explanation of how complex this is.

- The CRM (Customer Relationship Management) is Salesforce, a SaaS cloud service, integrated via Kafka Connect and the Confluent connector.

- Many more integrations to additional internal and external applications are needed in a real-world architecture.
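To make one of these paradigms more concrete, here is a minimal sketch of how the LMS integration from the list above could be registered with Kafka Connect. It only builds and prints the JSON body for Kafka Connect's `POST /connectors` REST endpoint; it does not send anything. The choice of Debezium's Oracle connector (with Debezium 2.x property names) is an assumption, as the architecture above does not name a specific CDC connector. All host names, credentials, table names, and topic names are hypothetical placeholders.

```python
import json


def oracle_cdc_connector(name: str, db_host: str, tables: list) -> dict:
    """Build the JSON body for Kafka Connect's `POST /connectors` endpoint."""
    return {
        "name": name,
        "config": {
            # Assumption: Debezium's Oracle connector, Debezium 2.x property names.
            "connector.class": "io.debezium.connector.oracle.OracleConnector",
            "tasks.max": "1",
            "database.hostname": db_host,
            "database.port": "1521",
            "database.user": "cdc_user",        # placeholder
            "database.password": "change-me",   # placeholder
            "database.dbname": "LMSDB",         # placeholder
            # Prefix for the Kafka topics that receive the change events.
            "topic.prefix": "lms",
            "table.include.list": ",".join(tables),
            "schema.history.internal.kafka.bootstrap.servers": "kafka:9092",
            "schema.history.internal.kafka.topic": "schema-history.lms",
        },
    }


payload = oracle_cdc_connector(
    "lms-oracle-cdc", "lms-db.internal", ["LMS.SHIFTS", "LMS.EMPLOYEES"]
)
print(json.dumps(payload, indent=2))
```

A different CDC connector would use different property names, so treat the keys above as an illustration of the shape of such a configuration rather than a template to copy.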

This is a hypothetical implementation of a Postmodern ERP. However, more and more companies implement this architecture for all the discussed benefits. Unfortunately, such a modern architecture also comes with some challenges. Let’s explore them and discuss how to solve them with Apache Kafka and its ecosystem.

Solving the Challenges of a Postmodern ERP with Kafka

This section covers three main challenges of implementing a Postmodern ERP and how Kafka and its ecosystem help implement this architecture.

I quote the three main challenges from the blog post “Postmodern ERP: Just Another Buzzword?” and then explain how the Kafka ecosystem solves them more or less out-of-the-box.

Issue 1: More Complexity Between Systems!

“Because ERP modules and tools are built to work together, legacy systems can be a lot easier to configure than a postmodern solution composed entirely of best-of-breed solutions. Because postmodern ERP may involve different programs from different vendors, it may be a lot more challenging to integrate. For example, during the buying process, you would need to ask about compatibility with other systems to ensure that the solution that you have in mind would be sufficient.”

First of all, is your existing ERP system easy to integrate? Any ERP system older than five years uses proprietary interfaces (such as BAPI and IDoc in the case of SAP) or ugly/complex SOAP web services to integrate with other systems. Even if all the software components come from one single vendor, they were built by different business units or even acquired. The codebases and interfaces speak very different languages and technologies.

So, while a Postmodern ERP requires complex integration between systems, so does any legacy ERP system! Nevertheless:

How Kafka Helps…

Kafka provides an open, scalable, elastic real-time infrastructure for implementing the middleware between your ERP and other systems. More details in the comparison between Kafka and traditional middleware such as ETL and ESB products.

Kafka Connect is a key piece of this architecture. It provides a Kafka-native integration framework.

Additionally, another key reason why Kafka makes these complex integrations successful is that Kafka really decouples systems (in contrast to traditional message queues or synchronous SOAP/REST web services):

The heart of Kafka is real-time and event-based. Additionally, Kafka decouples producers and consumers with its storage capabilities and handles the backpressure and optionally the long-term storage of events and data. This way, batch analytics platforms, REST interfaces (e.g., mobile apps) with request-response, and databases can access the data, too. Learn more about “Domain-driven Design (DDD) for decoupling applications and microservices with Kafka”.
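The decoupling through storage can be illustrated with a toy model. The sketch below is not Kafka's API: `EventLog`, `Consumer`, and their methods are hypothetical names for an in-memory append-only log in which every consumer tracks its own offset. It shows that a producer never waits for consumers and that a consumer that starts late still sees the full history.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log standing in for a single topic partition."""
    events: list = field(default_factory=list)

    def append(self, event: str) -> int:
        """Store the event and return its offset. The producer never waits for consumers."""
        self.events.append(event)
        return len(self.events) - 1

    def read_from(self, offset: int, max_events: int) -> list:
        """Return up to max_events stored events, starting at the given offset."""
        return self.events[offset:offset + max_events]


@dataclass
class Consumer:
    """Each consumer keeps its own position, so consumers do not affect each other."""
    name: str
    offset: int = 0

    def poll(self, log: EventLog, max_events: int) -> list:
        batch = log.read_from(self.offset, max_events)
        self.offset += len(batch)
        return batch


log = EventLog()
realtime = Consumer("mobile-api")        # hypothetical consumer that keeps up
analytics = Consumer("batch-analytics")  # hypothetical consumer that starts later

for i in range(5):
    log.append(f"order-{i}")
    realtime.poll(log, max_events=10)

# The batch consumer was offline the whole time and still reads every event.
backlog = analytics.poll(log, max_events=100)
print(realtime.offset, analytics.offset, backlog)
```

In a traditional message queue, a message is typically gone once it has been consumed. Here the log keeps the events, which is what lets the slow consumer catch up at its own pace.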

Understanding the relation between event streaming with Kafka and non-streaming APIs (usually HTTP/REST) is also very important in this discussion. Check out “Apache Kafka and API Management / API Gateway – Friends, Enemies or Frenemies?” for more details.

The integration capabilities and real decoupling that Kafka provides make the complexity between systems manageable.

Issue 2: More Difficult Upgrades!

“This con goes hand in hand with the increased complexity between systems. Because of this increased complexity and the fact that the solution isn’t an all-in-one program, making system upgrades can be difficult. When updates occur, your IT team will need to make sure that the relationship between the disparate systems isn’t negatively affected.”

How Kafka Helps…

The issue with upgrades is solved with Kafka out-of-the-box. Remember: Kafka really decouples systems from each other due to its storage capabilities. You can upgrade one system without even informing the other systems and without downtime! Two reasons why this works so well and out-of-the-box:

- Kafka is backward compatible. Even if you upgrade the server-side (Kafka brokers, ZooKeeper, Schema Registry, etc.), the other applications and interfaces continue to work without breaking changes. Server-side and client-side can be updated independently. Sometimes an older application is not updated anymore at all because it will be replaced soon. That’s totally fine. An old Kafka client can speak to a newer Kafka broker.

- Kafka uses rolling upgrades. The system continues to work without any downtime, 24/7, even for mission-critical workloads like ERP or MES transactions. From the outside, the upgrade is not even noticed.
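The idea behind a rolling upgrade can be sketched in a few lines. This is a toy simulation, not an operations script: `rolling_upgrade` and its parameters are hypothetical, the version numbers are only examples, and the availability rule is loosely modelled on a topic with replication factor 3 and `min.insync.replicas=2`. Only one broker is restarted at a time, so enough replicas stay online at every step.

```python
def rolling_upgrade(brokers: dict, new_version: str, min_in_sync: int) -> list:
    """Upgrade one broker at a time and record how many brokers stay online per step.

    brokers maps broker id -> version. Raises if restarting a single broker
    would leave fewer than min_in_sync brokers online (the toy availability rule).
    """
    online_during_steps = []
    for broker_id in sorted(brokers):
        online = len(brokers) - 1          # exactly one broker is restarting
        if online < min_in_sync:
            raise RuntimeError(f"restarting broker {broker_id} would cause downtime")
        online_during_steps.append(online)
        brokers[broker_id] = new_version   # the broker rejoins with the new version
    return online_during_steps


cluster = {1: "3.6", 2: "3.6", 3: "3.6"}   # example version numbers
steps = rolling_upgrade(cluster, new_version="3.7", min_in_sync=2)
print(steps, cluster)
```

With three brokers and a minimum of two in sync, every step leaves two brokers online, so producers and consumers keep working while the cluster moves to the new version.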

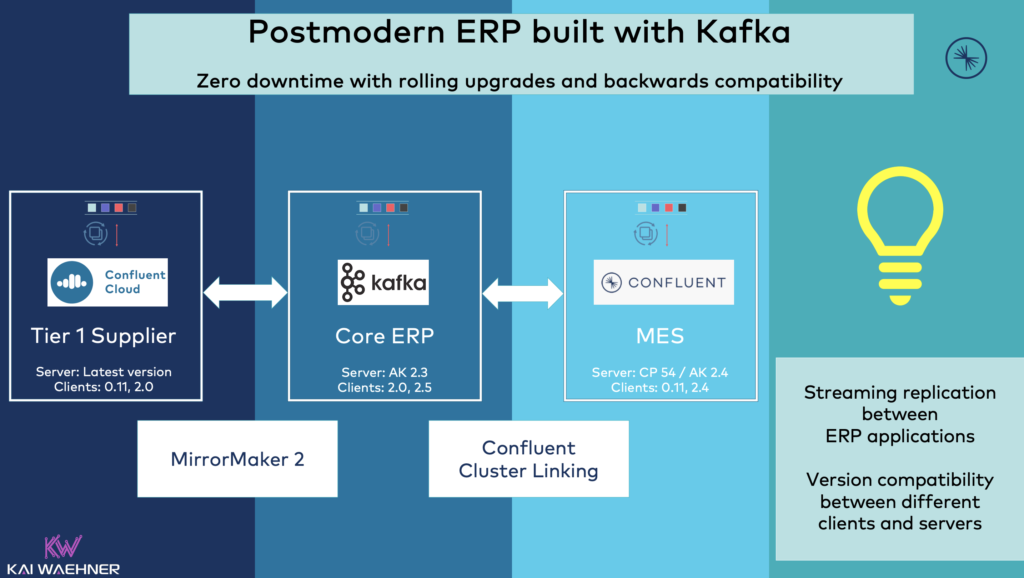

Let’s take a look at an example with different components of the Postmodern ERP:

In this case, we see different versions and distributions of Kafka being used:

- The Tier 1 Supplier uses the fully-managed and serverless Confluent Cloud solution. It automatically upgrades to the latest Kafka release under the hood (this is never a problem due to backward compatibility). The client applications use pretty old versions of Kafka.

- The Core ERP uses open-source Kafka as it is a homegrown solution, not standard software. The operations and support are handled by the company itself (pretty risky for such a critical system, but totally valid). The Kafka version is relatively new. One client application even uses a Kafka version that is newer than the server side to leverage a new feature in Kafka Streams (Kafka is compatible in both directions, so this is not a problem).

- The MES vendor uses Confluent Platform, which embeds Apache Kafka. The version is up-to-date as the vendor does regular releases and supports rolling upgrades.

- Integration between the different ERP applications is implemented with Kafka-native replication tools such as MirrorMaker 2 or Confluent Cluster Linking. As discussed in a former section, various other integration options are available, including REST, Kafka Connect, native Kafka clients in any programming language, or any ETL or ESB tool.

Backward compatibility and rolling upgrades make updating systems easy and invisible for integrated systems. Business continuity is guaranteed out-of-the-box.
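Why can old clients talk to new brokers, and new clients to older brokers? Kafka clients and brokers each support a range of versions per request type, and the client uses the highest version that both sides support. The sketch below is a simplified model of that idea under stated assumptions: `negotiate` is a hypothetical helper and the version ranges are made up for illustration.

```python
def negotiate(client_range: tuple, broker_range: tuple):
    """Return the highest API version both sides support, or None if there is no overlap."""
    low = max(client_range[0], broker_range[0])
    high = min(client_range[1], broker_range[1])
    return high if low <= high else None


# (min_version, max_version) per side; the numbers are made up for illustration.
old_client, new_client = (0, 5), (2, 11)
old_broker, new_broker = (0, 8), (0, 12)

old_on_new = negotiate(old_client, new_broker)   # old client, newer broker
new_on_old = negotiate(new_client, old_broker)   # newer client, older broker
no_overlap = negotiate((9, 11), old_broker)      # client needs versions the broker lacks
print(old_on_new, new_on_old, no_overlap)
```

The last case shows the limit of this flexibility: a newer client can only rely on features that the older broker actually supports.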

Issue 3: Lack of Access When Offline

“When you implement a cloud-based software, you need to account for the fact that you won’t be able to access it when you are offline. Many legacy ERP systems offer on-premise solutions, albeit with a high installation cost. However, this software is available offline. For cloud ERP solutions, you are reliant on the internet to access all of your data. Depending on your specific business needs, this may be a dealbreaker.”

How Kafka Helps…

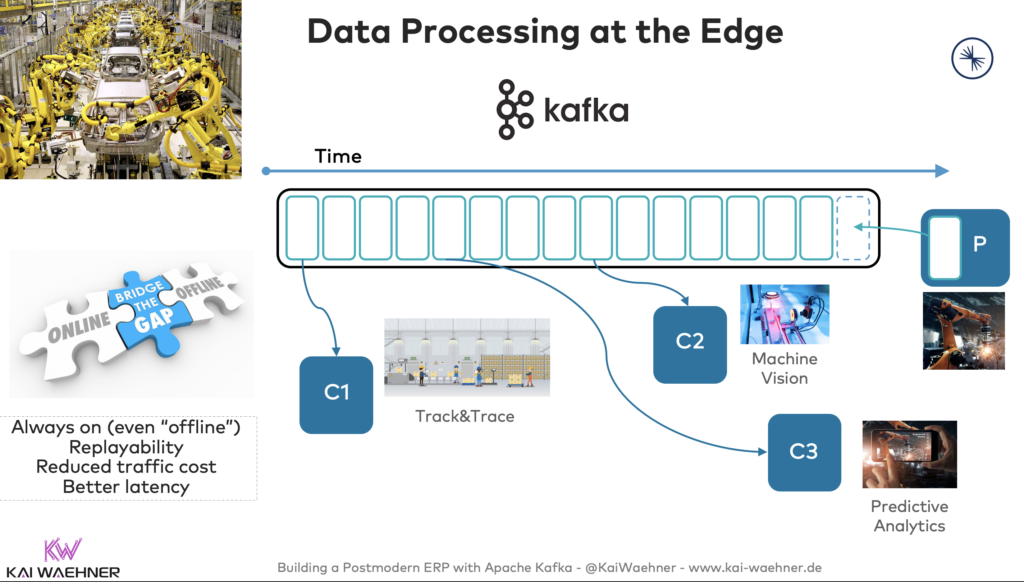

Hybrid architectures are the new black. Local processing on-premise is required in most use cases. It is okay to build the next generation ERP in the cloud. But the integration between cloud and on-premise/edge is key for success. A great example is Mojix, a Kafka-native cloud platform for real-time retail & supply chain IoT processing with Confluent Cloud.

When tangible goods are produced and sold, some processing needs to happen on-premise (e.g., in a factory) or even closer to the edge (e.g., in a restaurant or retail store). No access to your data is a dealbreaker. No capability of local processing is a dealbreaker. Latency and cost for cloud-only can be another dealbreaker.

Kafka works well on-premise and at the edge. Plenty of examples exist, including Kafka-native bi-directional real-time replication between on-premise / edge and the cloud.
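The offline scenario boils down to store-and-forward: the edge keeps writing to its local log, and replication resumes from the last replicated offset once the link is back. The following toy sketch models only one direction (edge to cloud) with plain Python lists. `EdgeToCloudReplicator` and its methods are hypothetical names and do not represent MirrorMaker 2, Confluent Replicator, or Cluster Linking.

```python
class EdgeToCloudReplicator:
    """Toy one-way replication from an edge log to a cloud log that resumes after outages."""

    def __init__(self):
        self.edge_log = []     # written locally, always available
        self.cloud_log = []    # only reachable while the link is up
        self.replicated = 0    # offset of the next edge event to copy

    def produce_at_edge(self, event: str) -> None:
        """Local writes never depend on the cloud connection."""
        self.edge_log.append(event)

    def sync(self, link_up: bool) -> int:
        """Copy all not-yet-replicated events if the link is up. Return how many were copied."""
        if not link_up:
            return 0
        pending = self.edge_log[self.replicated:]
        self.cloud_log.extend(pending)
        self.replicated += len(pending)
        return len(pending)


factory = EdgeToCloudReplicator()
factory.produce_at_edge("machine-1 started")
factory.sync(link_up=True)

# The internet connection drops, but the factory keeps producing events.
factory.produce_at_edge("machine-1 temperature high")
factory.produce_at_edge("machine-1 stopped")
copied_offline = factory.sync(link_up=False)

# The connection is back, and replication catches up from the stored offset.
copied_online = factory.sync(link_up=True)
print(copied_offline, copied_online, factory.cloud_log == factory.edge_log)
```

The important design point is that the edge log is the source of truth while offline. The cloud side simply lags behind and catches up, so no event is lost and the order of events is preserved.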

I have covered these topics often already, so I will just share a few links to read:

- Architecture patterns for distributed, hybrid, edge, and global Apache Kafka deployments

- Apache Kafka is the New Black at the Edge in Industrial IoT, Logistics and Retailing

- IoT Live Demo – 100.000 Connected Cars with Kubernetes, Kafka, MQTT, TensorFlow

I specifically recommend the last link. It covers hybrid architectures where processing at the edge (i.e., outside the data center) is key and required even offline, like in the following example running Kafka in a factory (including the server side):

The hybrid integration capabilities of Kafka and its ecosystem solve the issue of lacking access when offline.

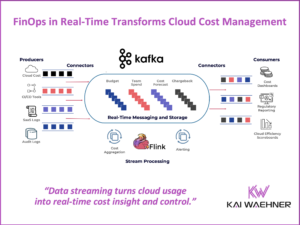

Kafka and Event Streaming as Foundation for a Postmodern ERP Infrastructure

Postmodern ERP represents the next generation of ERP architectures. It is real-time, scalable, and open by using a combination of open source technologies and proprietary standard software. This blog post explored how software vendors and end-users leverage event streaming with Apache Kafka to implement a Postmodern ERP.

What are your experiences with ERP systems? Did you already implement a Postmodern ERP architecture? Which approach works best for you? What is your strategy? Let’s connect on LinkedIn and discuss it!