Supply Chain Management (SCM) involves planning and coordinating all the people, processes, and technology involved in creating value for a company. This includes cross-cutting processes such as purchasing/procurement, logistics, operations/manufacturing, and others. Automation, robustness, flexibility, real-time processing, and hybrid deployment (edge + cloud) are key for future success, no matter what industry. This blog post explores how Apache Kafka helps optimize a supply chain by providing decoupled microservices, data integration, real-time analytics, and more…

The following topics are covered:

- Definition of supply chain management

- Challenges of current supply chains

- Event streaming to optimize the supply chain

- Use cases and real-world enterprise examples for Apache Kafka deployments

Supply Chain Management (SCM)

Supply chain management (SCM) covers the management of the flow of goods and services. It involves the movement and storage of raw materials, work-in-process inventory, and finished goods, as well as end-to-end order fulfillment from the point of origin to the point of consumption. Interconnected, interrelated, or interlinked networks, channels, and node businesses combine to provide products and services required by end customers in a supply chain.

SCM is often defined as the design, planning, execution, control, and monitoring of supply chain activities to create net value, build a competitive infrastructure, leverage worldwide logistics, synchronize supply with demand, and measure performance globally.

Supply chain management is the broad range of activities required to plan, control, and execute a product’s flow from materials to production to distribution in the most economical way possible. SCM encompasses the integrated planning and execution of processes required to optimize the flow of materials, information, and capital in functions that broadly include demand planning, sourcing, production, inventory management, and logistics.

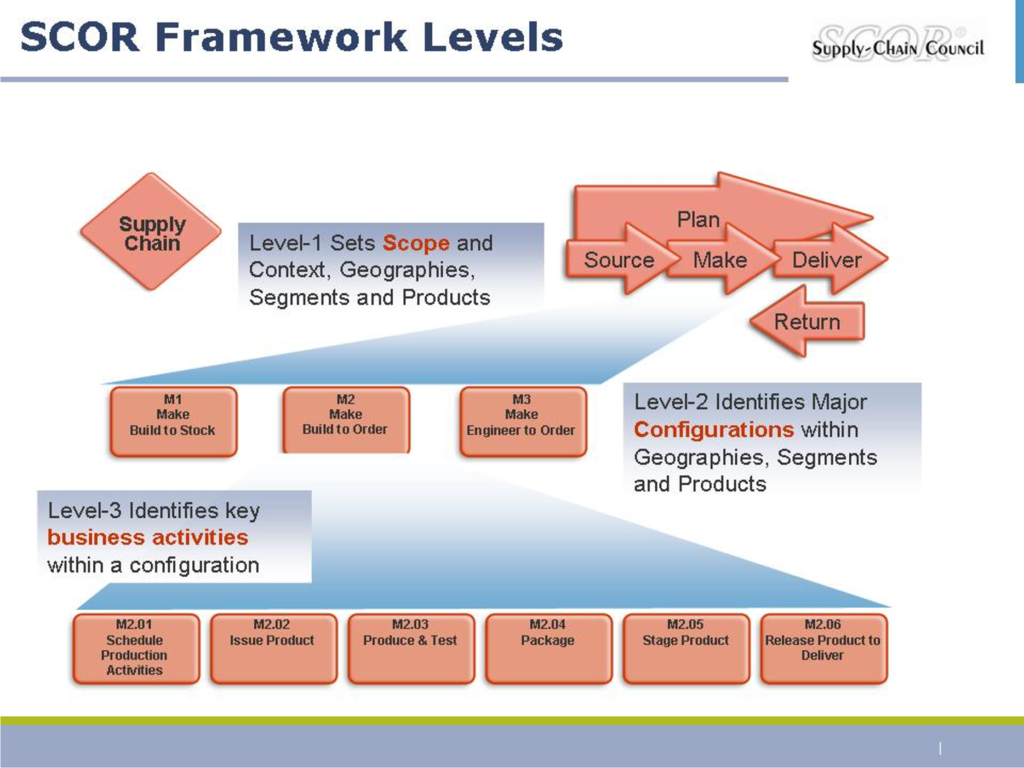

SCOR (Supply-Chain Operations Reference Model)

There are a variety of supply-chain models, which address both the upstream and downstream elements of SCM. The SCOR (Supply-Chain Operations Reference) model was developed by a consortium of industry and the non-profit Supply Chain Council (now part of APICS). SCOR became the cross-industry de facto standard defining the scope of supply-chain management.

SCOR measures total supply-chain performance. It is a process reference model for supply-chain management, spanning from the supplier’s supplier to the customer’s customer. It includes delivery and order fulfillment performance, production flexibility, warranty, returns processing costs, inventory and asset turns, and other factors in evaluating a supply chain’s overall effective performance.

Here is an example of the SCOR framework levels:

Challenges within the Evolving Supply Chain Processes in a Digital Era

The above definition of SCM and the related SCOR model show how complex supply chain processes and solutions are. Here are the top five challenges of supply chains:

- Time Frames are Shorter

- Rapid Change

- Historical Models are No Longer Viable

- Lack of visibility

- Zoo of Technologies and Products

Let’s explore the challenges in more detail…

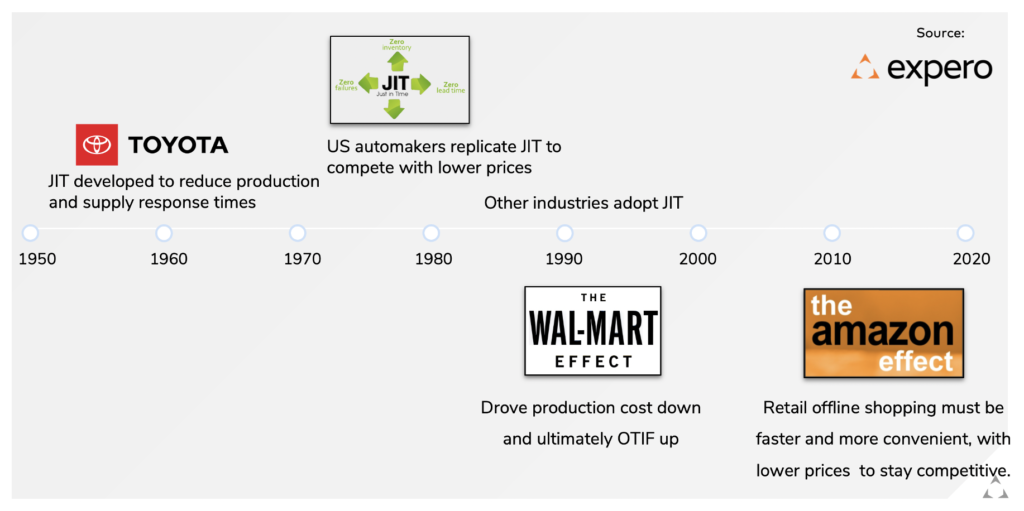

Challenge 1: Time Frames are Shorter

Challenge 2: Rapid Change

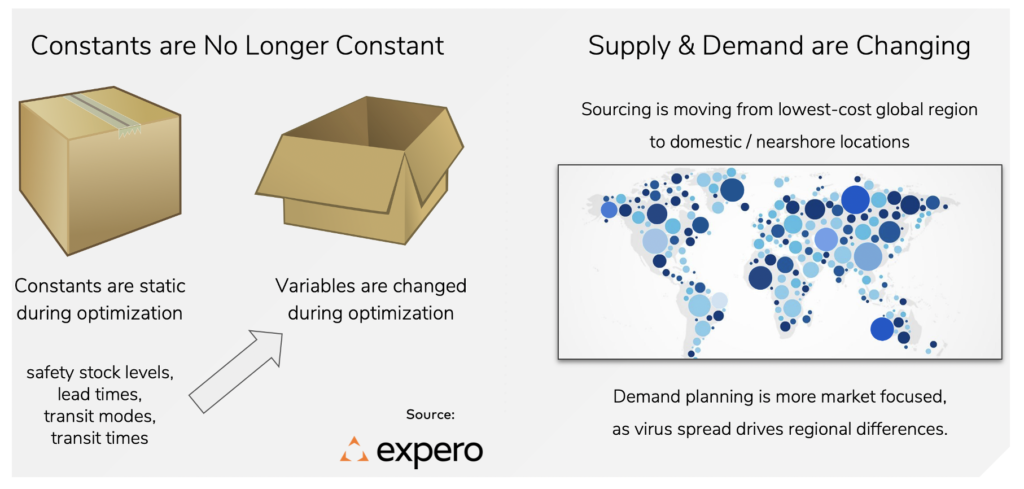

Challenge 3: Historical Models are No Longer Viable

Challenge 4: Lack of visibility

Lack of plan visibility leads to inventory and resource utilization imbalances. Imbalances mean waste (overproduction) and uncaptured revenue (underproduction). Here are some stats:

- 69% of companies lack complete visibility of their supply chains (BCI Supply Chain Resilience Report)

- 78% of companies lack a proactive response supply chain network (Logistics Bureau)

- 85% of companies report inadequate visibility into production operations (GEODIS Supply Chain Worldwide Survey)

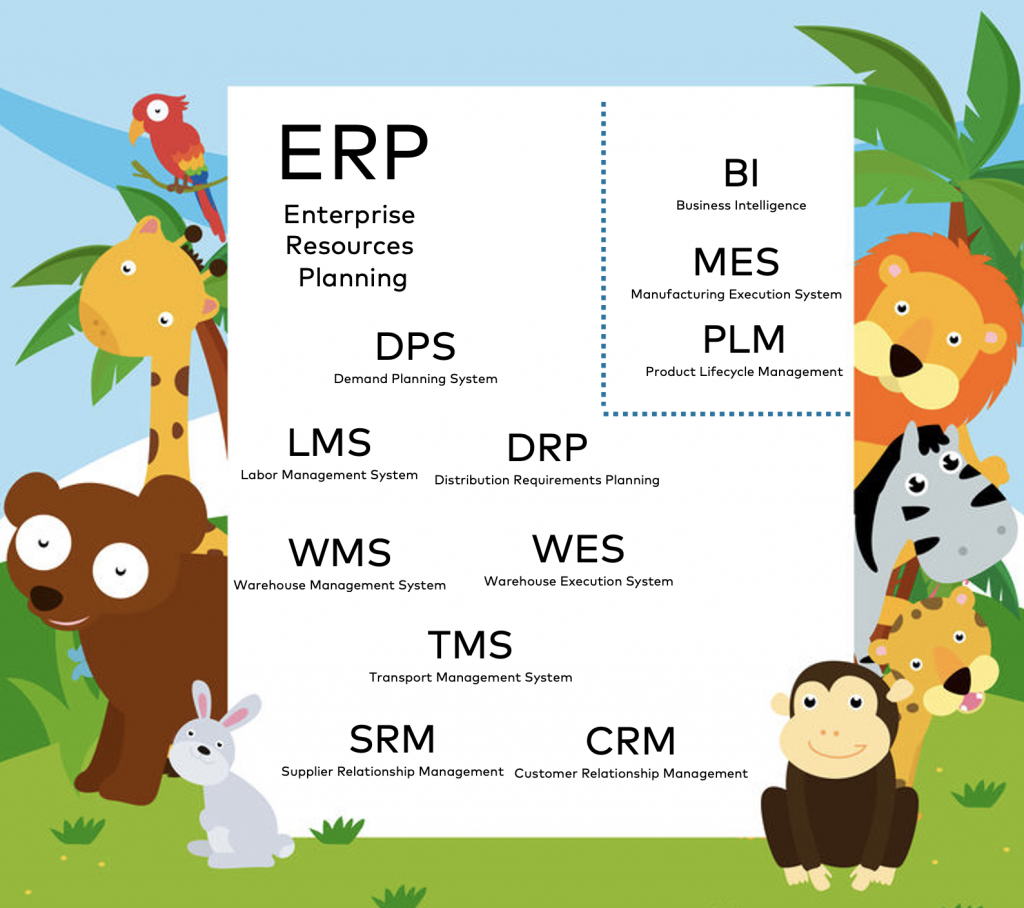

Challenge 5: Zoo of Technologies and Products

A zoo of supply chain technologies and products needs to be integrated and modernized. Here are a few examples:

Check out my blog post about “integration alternatives and connectors for Apache Kafka and SAP standard software” to explore how complex such an integration environment typically looks.

A more detailed explanation of these supply chain challenges (and the related solutions) is available in the Confluent webinar recording I did with experts from Expero: Supply Chain Optimization with Event Streaming and the Apache Kafka Ecosystem.

Consequences of the Supply Chain Challenges

The consequences of all these challenges are horrible for an enterprise supply chain:

- Missed orders

- Lost revenue

- Expediting fees

- Contract penalties

- Frustrated customers

- …

So let’s talk about how event streaming with Apache Kafka can help to fix these problems.

Why Apache Kafka for Supply Chain Optimization?

Solving the challenges described above usually requires several of the characteristics and features that Kafka and its ecosystem provide within one single technology and infrastructure:

- Real-time messaging (at scale, mission-critical)

- Global Kafka (edge, data center, multi-cloud)

- Cloud-native (open, flexible, elastic)

- Data integration (legacy + modern protocols, applications, communication paradigms)

- Data correlation (real-time + historical data, omni-channel)

- Real decoupling (not just messaging, but also infinite storage + replayability of events)

- Real-time monitoring

- Transactional data and analytics data (MES, ERP, CRM, SCM, …)

- Applied machine learning (model training and scoring)

- Cybersecurity

- Complementary to legacy and cutting-edge technology (Mainframe, PLCs, 3D printing, augmented reality, …)
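The “real decoupling” point in the list above (storage plus replayability, not just messaging) can be illustrated with a small sketch. This is not Kafka's API. `EventLog` and `Consumer` are hypothetical names for an in-memory toy that only mimics the idea of an append-only log in which every consumer keeps its own read position:

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class EventLog:
    """Append-only, in-memory log; a toy stand-in for one topic partition."""
    events: list = field(default_factory=list)

    def append(self, event: Any) -> int:
        """Store the event and return its offset (its position in the log)."""
        self.events.append(event)
        return len(self.events) - 1

    def read(self, offset: int, max_events: int = 100) -> list:
        """Return up to max_events events from offset on; reading deletes nothing."""
        return self.events[offset:offset + max_events]


@dataclass
class Consumer:
    """Each consumer tracks its own offset, independent of all other consumers."""
    log: EventLog
    offset: int = 0

    def poll(self, max_events: int = 100) -> list:
        batch = self.log.read(self.offset, max_events)
        self.offset += len(batch)
        return batch

    def seek(self, offset: int) -> None:
        """Move the read position, e.g. back to 0 to replay historical events."""
        self.offset = offset


log = EventLog()
for order_id in ("o-1", "o-2", "o-3"):
    log.append({"type": "OrderCreated", "order_id": order_id})

realtime = Consumer(log)
first_batch = realtime.poll()   # consumes events as they are available

late = Consumer(log)            # a new application added later
late_batch = late.poll()        # still sees the full history

realtime.seek(0)                # rewind and reprocess
replayed = realtime.poll()
```

Because reading does not remove events, a consumer that joins later or rewinds sees the same history as the first one. That is the property that lets producers and consumers evolve independently.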

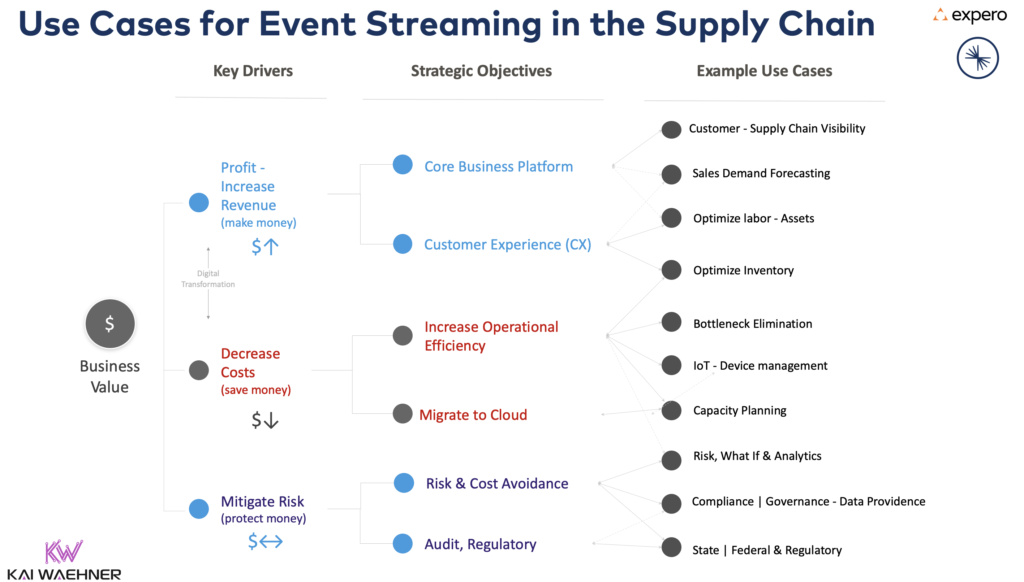

Use Cases for Apache Kafka in the Supply Chain

The supply chain is obviously a huge topic. Plenty of different use cases leverage Apache Kafka. Here are just a few examples to give a sense of the breadth of possibilities:

Examples for Supply Chain Optimization with Apache Kafka Across Industries

This section explores very different use cases at enterprises across industries, from carmakers (Audi, BMW, Porsche) to retailers (Walmart) and food manufacturing (Baader). All content comes directly from the public talks and blog posts that their employees created and published. It is exciting to see how many different problems event streaming can solve!

Manufacturing of Food Machinery @ Baader

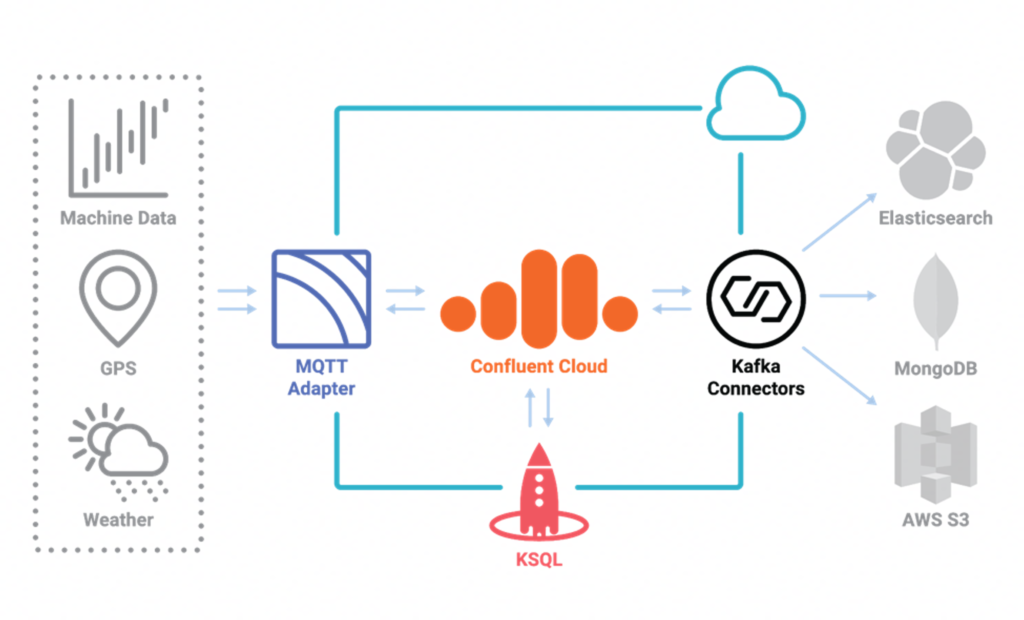

BAADER is a worldwide manufacturer of innovative machinery for the food processing industry. They run an IoT-based and data-driven food value chain on Confluent Cloud.

The Kafka-based infrastructure provides a single source of truth across the factories and regions across the food value chain. Business-critical operations are available 24/7 for tracking, calculations, alerts, etc.

Integrated Sales, Manufacturing, Connected Vehicles and Charging Stations @ Porsche

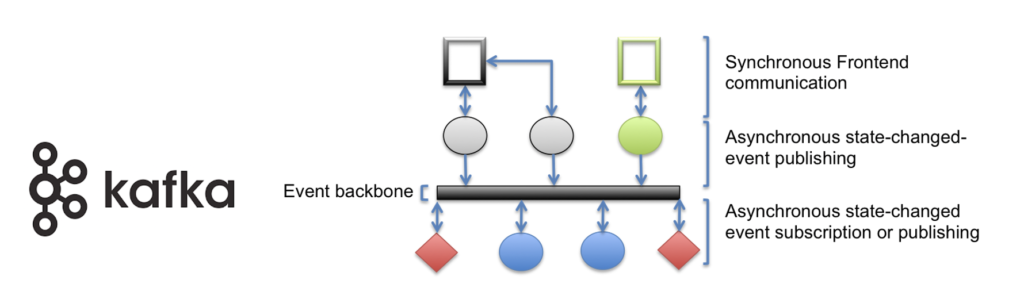

Kafka provides real decoupling between applications. Hence, Kafka became the de facto standard for microservices and Domain-driven Design (DDD). It allows building independent and loosely coupled, but scalable, highly available, and reliable applications.

That’s exactly what Porsche describes for their usage of Apache Kafka throughout its supply chain:

“The recent rise of data streaming has opened new possibilities for real-time analytics. At Porsche, data streaming technologies are increasingly applied across a range of contexts, including warranty and sales, manufacturing and supply chain, connected vehicles, and charging stations” writes Sridhar Mamella (Platform Manager Data Streaming at Porsche).

The following picture shows the event hub which Heiko Scholtes from PorscheDev explored in one of their blog posts:

This architecture was already published in mid-2017. Hence, Porsche has been using Kafka in their projects for a long time. That’s a pretty common pattern for Kafka: Build one pipeline. Then let more and more consumers use the data. Some consume in real time or near real time, others via batch processes or request-response interfaces.

The Confluent Podcast also features the story around Porsche’s event streaming platform Streamzilla, built on top of Kafka. Check out this podcast from December 2020 to hear directly from Porsche.

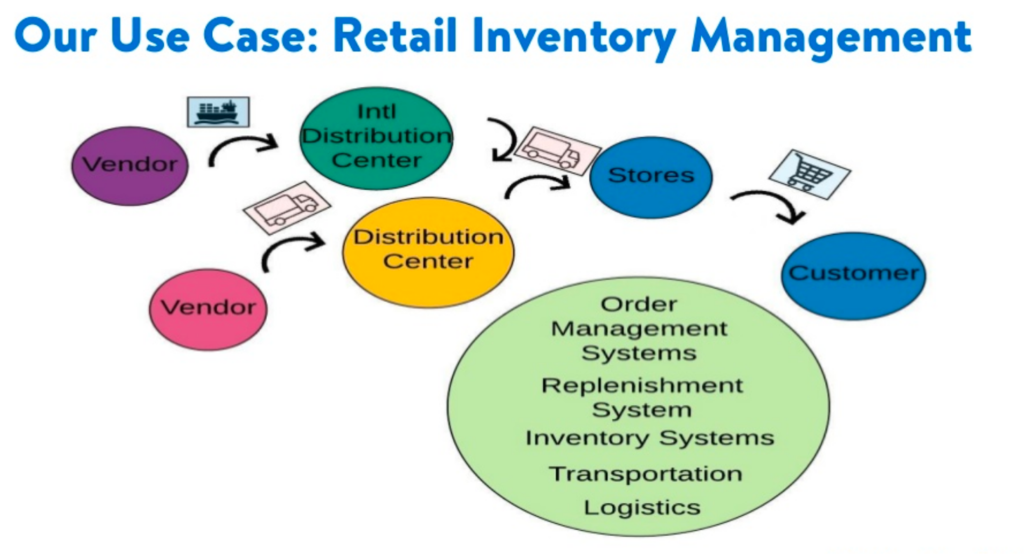

Real-Time Inventory System @ Walmart

A real-time inventory is a key piece of a modern supply chain. Many companies even require it to stay competitive and to provide a good customer experience. Business models such as “order online, pick up in the store” are impossible without real-time inventory and supply chain.

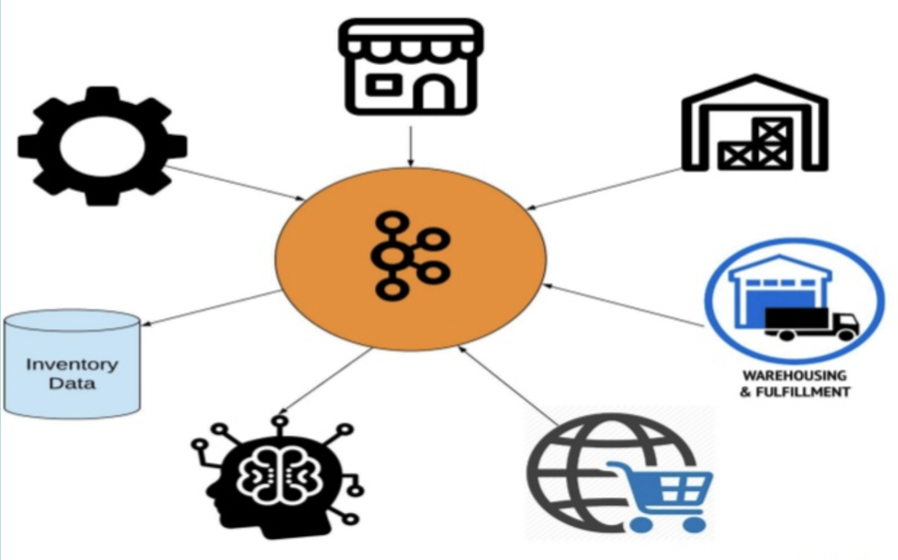

Walmart is a great example. They leverage Apache Kafka as the heart of their supply chain:

Let’s quote Suman Pattnaik, Big Data Architect @ Walmart:

“Retail shopping experiences have evolved to include multiple channels, both online and offline, and have added to a unique set of challenges in this digital era. Having an up to date snapshot of inventory position on every item is an essential aspect to deal with these challenges. We at Walmart have solved this at scale by designing an event-streaming-based, real-time inventory system leveraging Apache Kafka… Like any supply chain network, our infrastructure involved a plethora of event sources with all different types of data”.

The real-time infrastructure around Apache Kafka includes the whole supply chain, including distribution centers, stores, vendors, and customers:

Please find out more details about Walmart’s Kafka usage in their fantastic Kafka Summit talk.
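The idea behind an up-to-date inventory snapshot derived from a stream of events can be sketched in a few lines. This is a minimal illustration only, not Walmart's implementation. The event fields (`store`, `item`, `delta`), the sample values, and the function name `apply_events` are assumptions made up for this example:

```python
from collections import defaultdict


def apply_events(events, snapshot=None):
    """Fold inventory events into a position per (store, item).

    Each event is a dict with the hypothetical keys 'store', 'item', and
    'delta' (positive for goods received, negative for sales or reservations).
    An optional earlier snapshot lets processing continue incrementally.
    """
    position = defaultdict(int)
    if snapshot:
        position.update(snapshot)
    for event in events:
        position[(event["store"], event["item"])] += event["delta"]
    return dict(position)


# Events from different sources (distribution center, store, online shop)
events = [
    {"store": "store-1", "item": "sku-42", "delta": 10},  # delivery received
    {"store": "store-1", "item": "sku-42", "delta": -3},  # sold in store
    {"store": "store-2", "item": "sku-42", "delta": 5},   # delivery received
    {"store": "store-1", "item": "sku-42", "delta": -1},  # reserved for pickup
]

position = apply_events(events)

# New events arriving later are applied on top of the existing snapshot
position = apply_events(
    [{"store": "store-2", "item": "sku-42", "delta": -2}], snapshot=position
)
```

In a real deployment, this kind of aggregation would typically run continuously in a stream processing application instead of over a Python list. The principle stays the same: the current inventory position is the result of applying all events in order.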

Supply Chain Purchasing using Deep Learning @ BMW

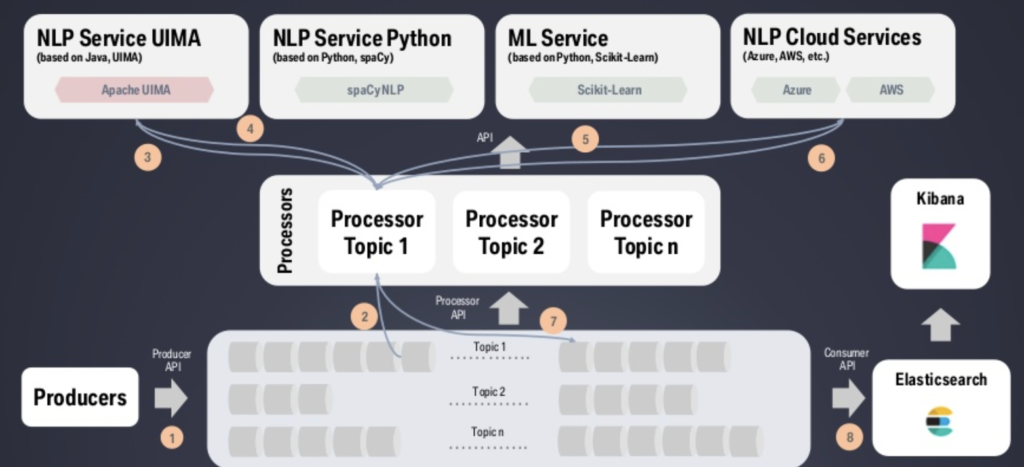

BMW leverages real-time Natural Language Processing (NLP) in various use cases. For example, the implementation of digital contract intelligence enables the automation of the processing and analysis of legal documents.

BMW built an industry-ready NLP service framework based on Kafka for smart information extraction and search, automated risk assessment, plausibility checks and negotiation support:

Check out the details in BMW’s Kafka Summit talk about their use cases for Kafka and Deep Learning / NLP.

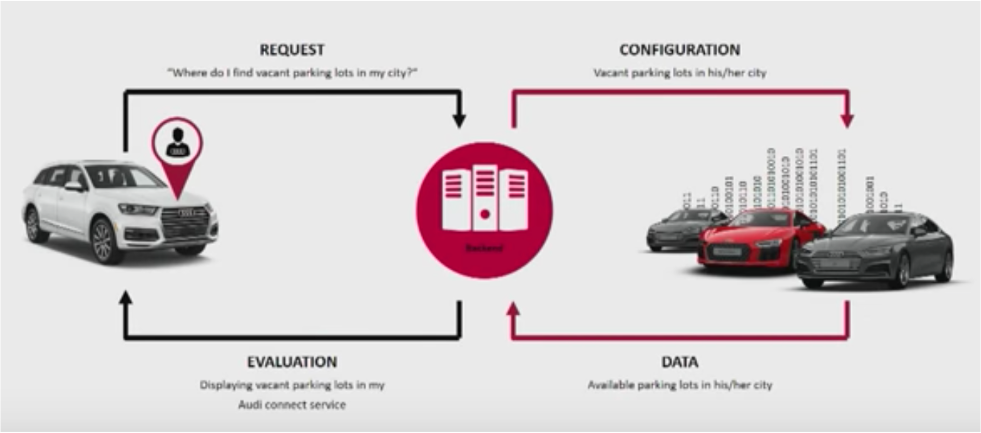

Connected Cars for Aftersales and Customer 360 @ Audi

Audi has built a connected car infrastructure with Apache Kafka. Their Kafka Summit keynote explored the use cases and architecture:

Use cases include:

- Real-Time Data Analysis

- Swarm Intelligence

- Collaboration with Partners

- Predictive AI

- …

Depending on how you define the buzzword “Digital Twin”, this is a perfect example: All sensor data from the connected cars is processed in real time and stored for historical analysis and reporting.

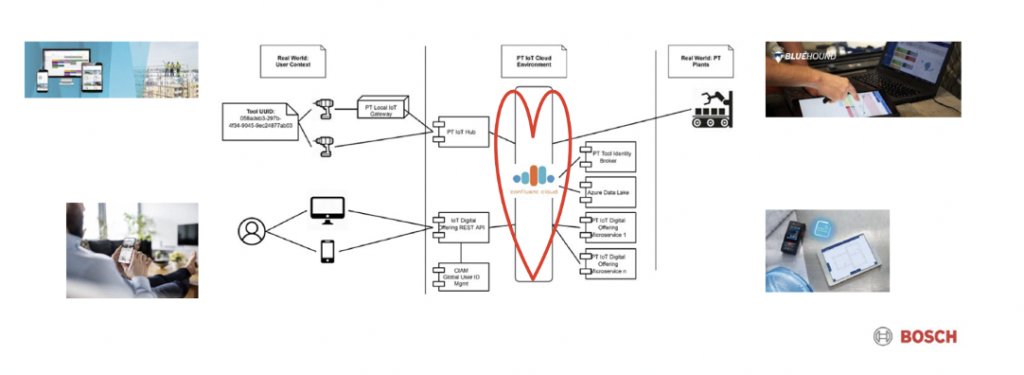

Track&Trace for Construction Management @ Bosch

The global supplier Bosch has another impressive use case for a “Digital Twin” leveraging Apache Kafka and Confluent Cloud: Construction site management analyzing sensors, machines, and workers. Use cases include collaborative planning, inventory and asset management, and tracking, managing, and locating tools and equipment anytime and anywhere:

If you are interested in more details about building a digital twin with the Apache Kafka ecosystem, check out more material here: “Apache Kafka for Building a Digital Twin IoT Infrastructure“.

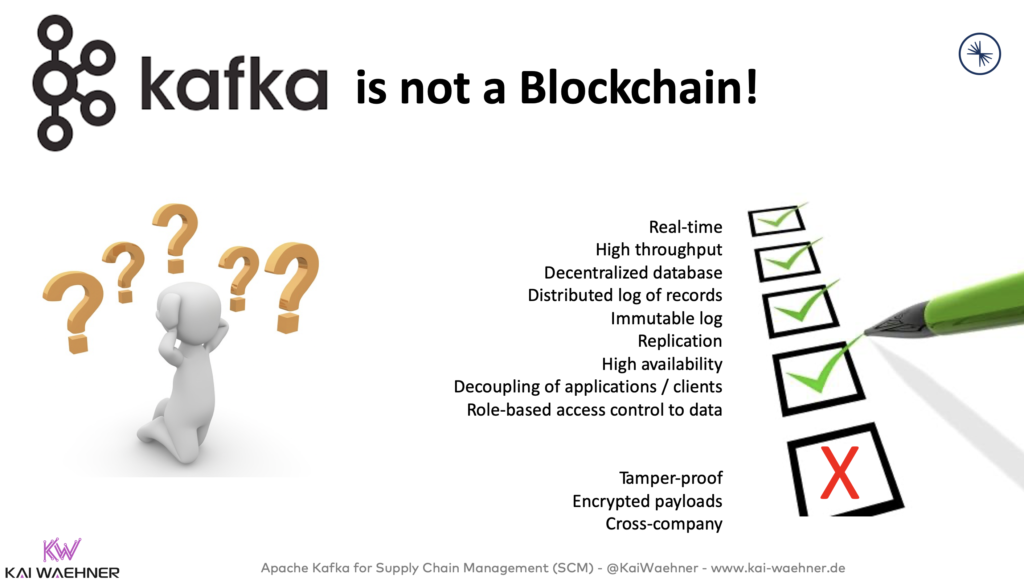

Kafka and Blockchain for Supply Chain Management

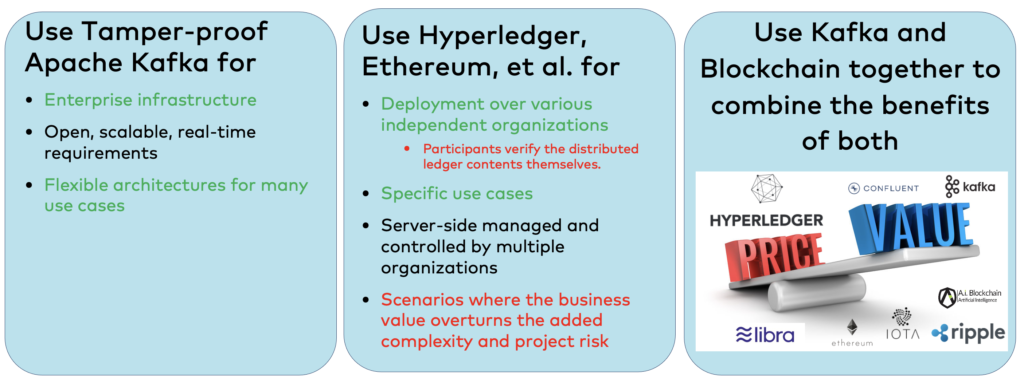

If there is one use case where blockchain really makes sense, then it is supply chain management. Blockchain provides features to support cross-company interaction securely. However, blockchain is also very complex and immature. I have not seen many projects where the added value is bigger than the added cost and risk. Often, Kafka is “good enough”. But let’s be clear: Kafka is NOT a Blockchain:

Having said this, please be aware:

- Many blockchain products are not really a blockchain, but just a distributed ledger.

- Many projects don’t require all the features of a blockchain.

- Tamper-proof storage on disk and end-to-end payload encryption (often applied on field/attribute level) are not part of Kafka but can be added with some nice add-ons.

- Cross-company integration with non-trusted parties is the only real case where a blockchain is needed and adds value.

Hence, make sure to define all requirements and then evaluate if you need Kafka, a blockchain, or a combination of both:

If you want to learn more about the relation between Apache Kafka and blockchain projects, check out this material: “Apache Kafka as Part of a Blockchain Project and its Relation to Frameworks like Hyperledger and Ethereum“.
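To make the tamper-proof storage point from the list above more concrete, here is a minimal sketch of a hash chain, the basic building block behind tamper-evident ledgers. It is an assumption-laden illustration, not a Kafka feature and not how any particular add-on works. The function names `chain_records` and `verify` and the sample payloads are made up for this example:

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, payload):
    """SHA-256 over the previous hash plus the canonical JSON of the payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def chain_records(payloads):
    """Link each payload to its predecessor via the predecessor's hash."""
    records = []
    prev_hash = GENESIS
    for payload in payloads:
        record_hash = _digest(prev_hash, payload)
        records.append(
            {"payload": payload, "prev_hash": prev_hash, "hash": record_hash}
        )
        prev_hash = record_hash
    return records


def verify(records):
    """Recompute every hash; any modified payload or broken link fails."""
    prev_hash = GENESIS
    for record in records:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


records = chain_records([
    {"shipment": "s-1", "qty": 100},
    {"shipment": "s-2", "qty": 250},
    {"shipment": "s-3", "qty": 75},
])

ok_before = verify(records)          # untouched chain verifies

records[1]["payload"]["qty"] = 999   # simulate tampering with stored data
ok_after = verify(records)           # the recomputed hash no longer matches
```

Note that this only makes changes detectable (tamper-evident). It does not prevent them, and it says nothing about trust between companies, which is where a real blockchain or distributed ledger comes in.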

Slides and Video Recording

Here are the slides and video recording exploring the optimization of supply chains with the Apache Kafka ecosystem in more detail:

Slide Deck


Video Recording

What are your experiences with Supply Chain Management architectures, applications, and optimization? Did you already implement a more automated, scalable, real-time supply chain? Which approach works best for you? What is your strategy? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.