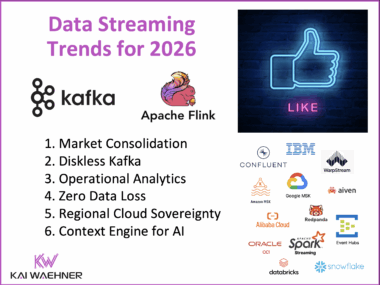

Top Trends for Data Streaming with Apache Kafka and Flink in 2026

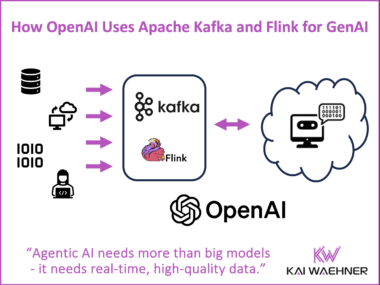

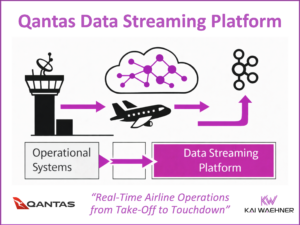

Each year brings new momentum to the data streaming space. In 2026, six key trends stand out. Platforms and vendors are consolidating. Diskless Kafka and Apache Iceberg are reshaping storage. Real-time analytics is moving into the stream. Enterprises demand zero data loss and regional compliance. Streaming is now powering operational AI with real-time context. Data streaming has evolved into strategic infrastructure at the heart of modern enterprise systems.