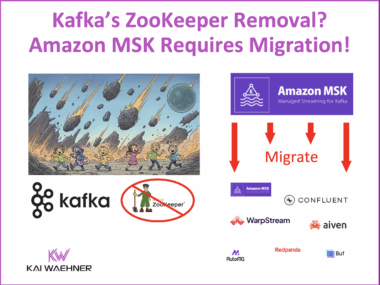

In-Place Kafka Cluster Upgrades from ZooKeeper to KRaft are Not Possible with Amazon MSK

The Apache Kafka community introduced KIP-500 to remove ZooKeeper and replace it with KRaft, a new built-in consensus layer. This was a major milestone: it simplified operations, improved scalability, and reduced complexity. Importantly, Kafka supports smooth, zero-downtime migrations from ZooKeeper to KRaft, even for large, business-critical clusters. But NOT with Amazon MSK…