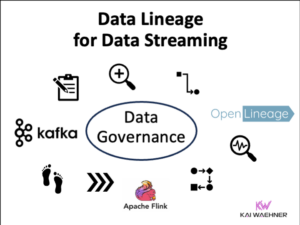

Learn the differences between an event-driven streaming platform like Apache Kafka and middleware like Message Queues (MQ), Extract-Transform-Load (ETL) and Enterprise Service Bus (ESB). This includes best practices and anti-patterns, and also how these concepts and tools complement each other in an enterprise architecture.

This blog post shares my slide deck and video recording. I discuss the differences between Apache Kafka as an event streaming platform and integration middleware. Learn whether they are friends, enemies or frenemies.

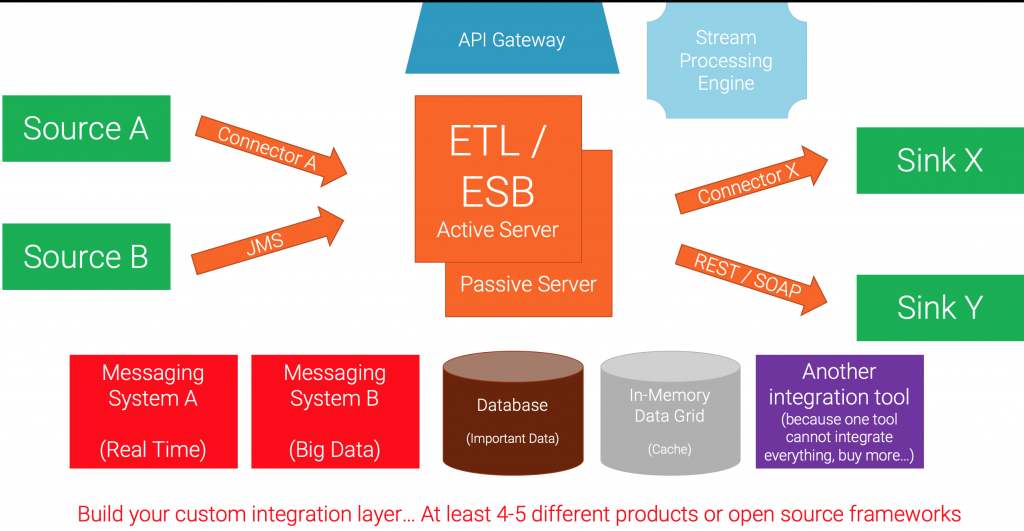

Problems of Legacy Middleware

Extract-Transform-Load (ETL) is still a widely used pattern for moving data between systems via batch processing. Because batch processing struggles in today’s world, where real time is the new standard, many enterprises use an Enterprise Service Bus (ESB) as the integration backbone between microservices, legacy applications and cloud services, moving data via SOAP/REST web services or other technologies. Stream processing is often added as a separate component in the enterprise architecture to correlate different events, implementing contextual rules and stateful analytics. Combining all these components introduces challenges and complexity in development and operations.

Apache Kafka – An Open Source Event Streaming Platform

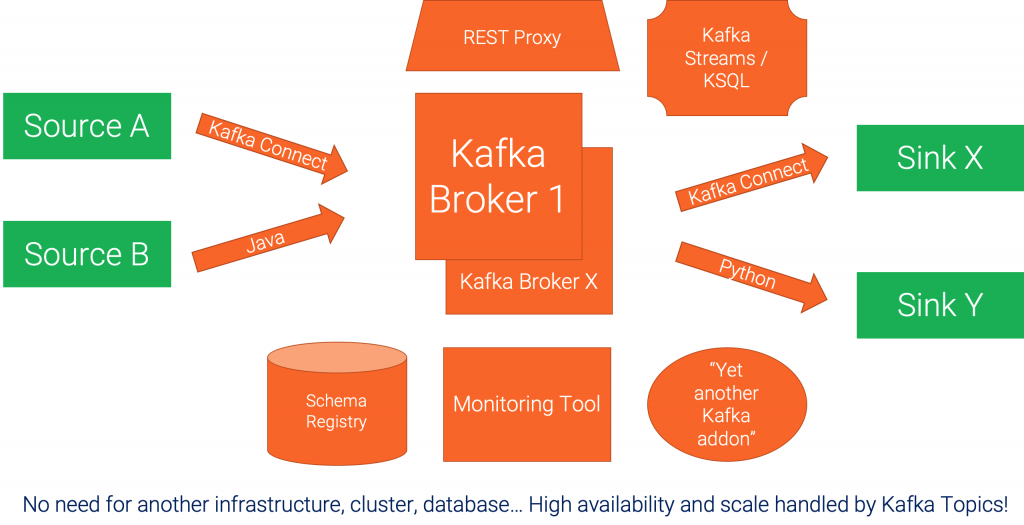

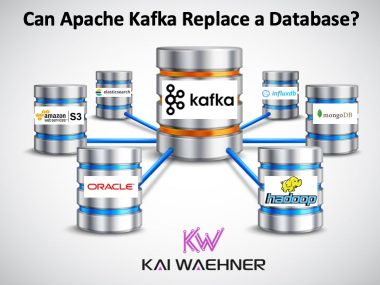

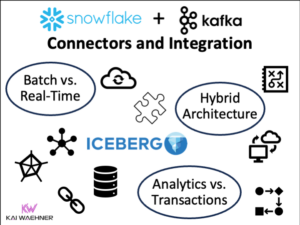

This session discusses how teams in different industries solve these challenges by building a native event streaming platform from the ground up instead of using ETL and ESB tools in their architecture. This allows them to build and deploy independent, mission-critical, real-time streaming applications and microservices. The architecture leverages distributed processing and fault tolerance with fast failover, zero-downtime rolling deployments, and the ability to reprocess events, so you can recalculate output when your code changes. Integration and stream processing remain key capabilities, but they can be realized natively and in real time instead of with additional ETL, ESB or stream processing tools.
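Reprocessing works because Kafka stores events in an append-only log that consumers read by offset, rather than deleting each message once it is consumed. The following Python sketch illustrates the idea with a toy in-memory log. `EventLog` and `process` are hypothetical names, not the Kafka client API; in a real deployment a consumer would achieve the same effect by seeking back to an earlier offset (for example with `seekToBeginning` in the Java consumer) within the topic’s retention period.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class EventLog:
    """Toy, in-memory stand-in for one topic partition (hypothetical, not Kafka's API)."""
    events: List[dict] = field(default_factory=list)

    def append(self, event: dict) -> int:
        # Events are only ever appended; the returned offset is the event's position.
        self.events.append(event)
        return len(self.events) - 1

    def read_from(self, offset: int) -> List[dict]:
        # Reading never removes events, so any consumer can re-read from any offset.
        return self.events[offset:]


def process(log: EventLog, from_offset: int, logic: Callable[[dict], int]) -> Dict[str, int]:
    """Rebuild per-customer totals by replaying the log from `from_offset` with `logic`."""
    totals: Dict[str, int] = {}
    for event in log.read_from(from_offset):
        customer = event["customer"]
        totals[customer] = totals.get(customer, 0) + logic(event)
    return totals


log = EventLog()
log.append({"customer": "alice", "amount": 100})
log.append({"customer": "bob", "amount": 50})
log.append({"customer": "alice", "amount": 20})

# Version 1 of the application sums the raw amounts.
v1 = process(log, 0, lambda e: e["amount"])

# Version 2 changes the business logic (a hypothetical flat fee of 2 per event).
# Replaying from offset 0 recalculates the output over the full history
# without asking the source systems to send the data again.
v2 = process(log, 0, lambda e: e["amount"] - 2)

print(v1)  # {'alice': 120, 'bob': 50}
print(v2)  # {'alice': 116, 'bob': 48}
```

The design choice that matters here is that consumption is decoupled from storage: a new version of the code simply starts reading from an older offset, whereas a classic message queue would typically have removed the acknowledged messages already.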

A concrete example architecture shows how to use the widely adopted open source framework Apache Kafka to build a mission-critical, scalable, high-performance streaming platform. Messaging, integration and stream processing are all built on the same strong foundation of Kafka, deployed on premises, in the cloud or in hybrid environments. In addition, the open source Confluent projects built on top of Apache Kafka add features such as a Schema Registry, clients for additional programming languages like Go or C, and many pre-built connectors for various technologies.

Slides: Apache Kafka vs. Integration Middleware

Here is the slide deck:


Video Recording: Kafka vs. MQ / ETL / ESB – Friends, Enemies or Frenemies?

Here is the video recording where I walk you through the above slide deck:

Article: Apache Kafka vs. Enterprise Service Bus (ESB)

I also published a detailed blog post about this topic on the Confluent blog in 2018:

Apache Kafka vs. Enterprise Service Bus (ESB)

Talk and Slides from Kafka Summit London 2019

The slides and video recording from Kafka Summit London 2019 (similar to the ones above) are also available for free.

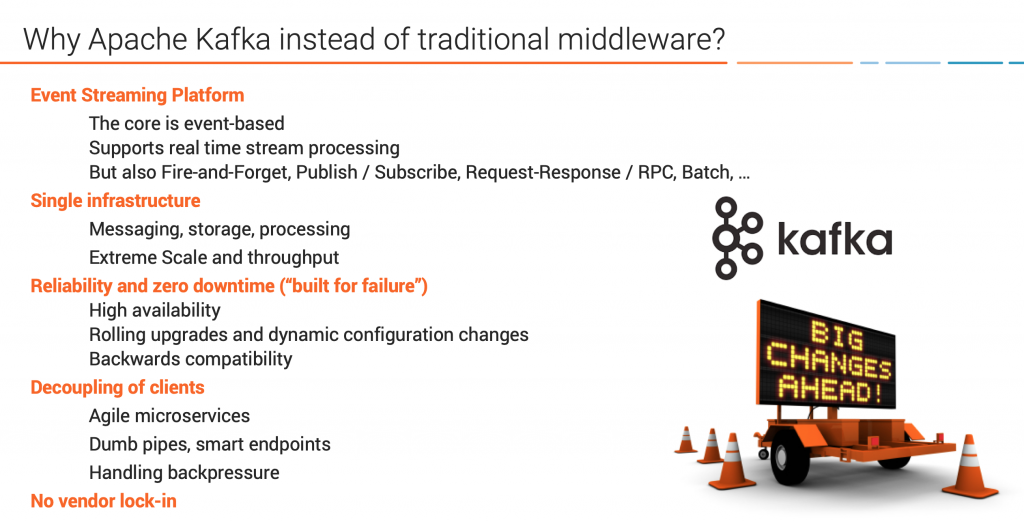

Why Apache Kafka instead of Traditional Middleware?

If you don’t want to spend a lot of time on the slides and recording, here is a short summary of how Apache Kafka differs from traditional middleware:

Questions and Discussion…

Please share your thoughts, too!

Do you see similar architectures in your infrastructure? Do you face similar challenges? Do you like the concepts behind an Event Streaming Platform (aka Apache Kafka)? How do you combine legacy middleware with Kafka? What’s your strategy for integrating the modern and the old (technology) world? Is Kafka part of that architecture?

Please let me know via a comment, LinkedIn, Twitter, email, etc. I am curious about other opinions and experiences (including from people who disagree with my presentation).

2 comments

I completely agree with you on the benefits of moving to an event streaming architecture. However, the case for replacing existing messaging and ESB technology is vastly oversimplified. There are a number of reasons why Kafka isn’t immediately replacing these well-established technologies.

1. Kafka doesn’t have a mainframe-native (CICS/COBOL) option. MQ is still the ‘simplest’ way to integrate with the mainframe, and there are 30 years of integration experience and development in that space.

2. Orchestration and choreography are concerns that live outside most messaging systems, so they require investment. If an organisation is going to invest in this area, it is looking at ESB-style solutions, and Kafka becomes a transport mechanism only. We need KSQL, query and Connect to be supported as first-class citizens in these tools; otherwise they become competing rather than coordinating choices.

3. ETL tools have many well-developed patterns and UI-based interfaces to facilitate their role in ETL flows. Kafka requires significant development effort (coding) to perform the same functionality. Kafka needs a low-code/no-code option to compete in this space.

If we can’t replace these older technologies with an event streaming tool, we’re just adding another tool to our already complex stack. It may provide new capabilities, but without enabling simplification, it will ultimately become just another piece of the puzzle. Here’s hoping we can solve all of these, as event streaming is the best option for future integration requirements.

I mostly agree with your comments, Ron. I want to point out that all my talks explain that, for the reasons you brought up, this is not an immediate replacement but a complementary technology and concept. Some use cases can only be built with event streaming; others need event streaming plus existing middleware.

I would also like to see Kafka-native low-code/no-code tools 🙂 They will come and solve some problems. On the other hand, writing source code is not a negative point; it simply has trade-offs (–> pros and cons).