Databricks and Confluent Leading Data and AI Architectures – What About Snowflake, BigQuery, and Friends?

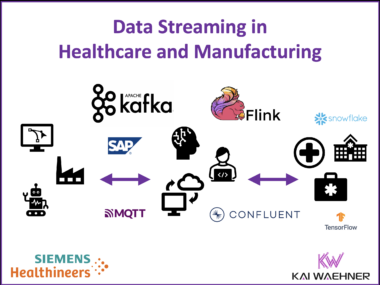

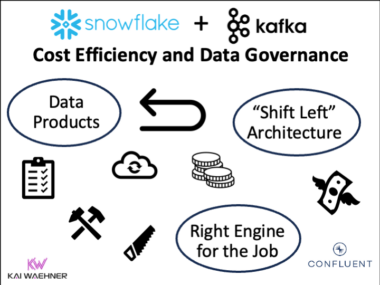

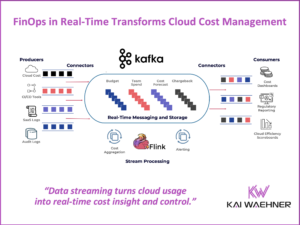

Confluent, Databricks, and Snowflake are trusted by thousands of enterprises to power critical workloads, each with a distinct focus: real-time streaming, large-scale analytics, and governed data sharing, respectively. Many customers use them in combination to build flexible, intelligent data architectures. This blog post highlights how Erste Bank uses Confluent and Databricks to enable generative AI in customer service, while Siemens combines Confluent and Snowflake to optimize manufacturing and healthcare with a shift-left approach. Together, these examples show how a streaming-first foundation drives speed, scalability, and innovation across industries.