IT modernization and innovative new technologies are changing the healthcare industry significantly. This blog series explores how data streaming with Apache Kafka enables real-time data processing and business process automation. Real-world examples show how traditional enterprises and startups increase efficiency, reduce cost, and improve the human experience across the healthcare value chain, including pharma, insurance, providers, retail, and manufacturing. This is part two: Legacy modernization and hybrid multi-cloud. Examples include Optum / UnitedHealth Group, Centene, and Bayer.

Blog Series – Kafka in Healthcare

Many healthcare companies leverage Kafka today. Use cases exist in every domain across the healthcare value chain. Most companies deploy data streaming in several business domains, so use cases often overlap. I grouped a few real-world deployments into technical scenarios, each with examples:

- Overview – Data Streaming Use Cases and Architectures for Healthcare (including Slide Deck)

- THIS POST: Legacy Modernization and Hybrid Cloud (Optum / UnitedHealth Group, Centene, Bayer)

- Streaming ETL (Bayer, Babylon Health)

- Real-time Analytics (Cerner, Celmatix, CDC/Centers for Disease Control and Prevention)

- Machine Learning and Data Science (Recursion, Humana)

- Open API and Omnichannel (Care.com, Invitae)

Stay tuned for a dedicated blog post for each of these topics as part of this blog series. I will link the blogs here as soon as they are available (in the next few weeks). Subscribe to my newsletter to get an email after each publication (no spam or ads).

Legacy Modernization and Hybrid Multi-Cloud with Kafka

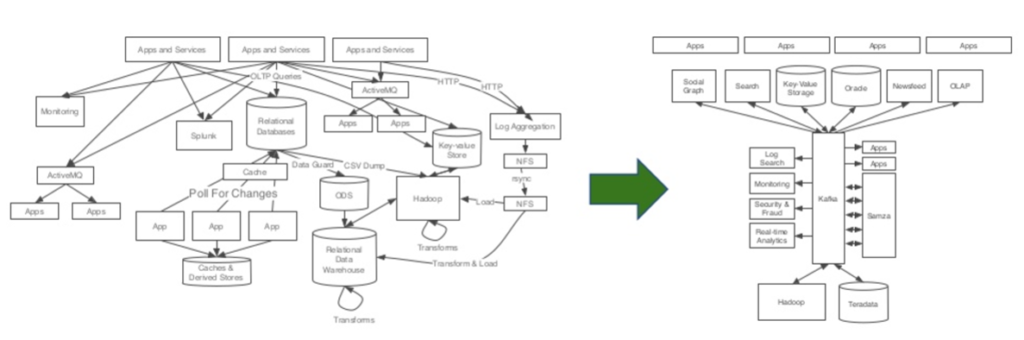

Application modernization benefits from the Apache Kafka ecosystem for hybrid integration scenarios.

Most enterprises require a reliable and scalable integration between legacy systems such as IBM Mainframe, Oracle, SAP ERP, and modern cloud-native applications like Snowflake, MongoDB Atlas, or AWS Lambda.

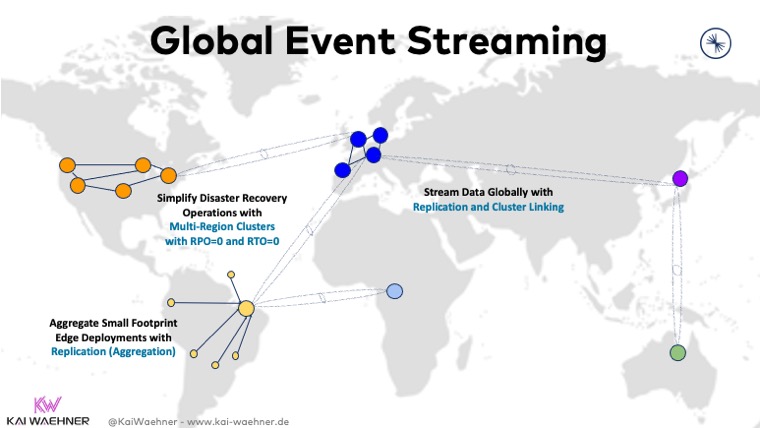

I already explored “architecture patterns for distributed, hybrid, edge and global Apache Kafka deployments” some time ago:

TL;DR: Various alternatives exist to deploy Apache Kafka across data centers, regions, and continents. There is no single best architecture. It always depends on characteristics such as RPO / RTO, SLAs, latency, throughput, etc.
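To make the RPO trade-off concrete, here is a minimal sketch, assuming asynchronous replication between two clusters. With asynchronous replication, records that have not yet been copied when the source cluster fails can be lost, so the replication lag bounds the achievable RPO. The function names and all numbers are hypothetical and only illustrate the arithmetic.

```python
def records_at_risk(replication_lag_s: float, ingest_rate_per_s: float) -> float:
    """Rough upper bound on records not yet copied to the remote cluster."""
    return replication_lag_s * ingest_rate_per_s


def meets_rpo(replication_lag_s: float, rpo_s: float) -> bool:
    """An asynchronous link can only meet an RPO that is at least as large as its lag."""
    return replication_lag_s <= rpo_s


# Hypothetical numbers: 5 seconds of replication lag at 2,000 records per second.
LAG_S = 5.0
RATE_PER_S = 2000.0

print(records_at_risk(LAG_S, RATE_PER_S))  # records that could be lost in a disaster
print(meets_rpo(LAG_S, rpo_s=60.0))        # a one-minute RPO is within reach
print(meets_rpo(LAG_S, rpo_s=0.0))         # an RPO of zero is not, with this lag
```

An RPO of zero rules out a link with any lag, which is one reason why there is no single best architecture.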

Some deployments focus on on-prem to cloud integration. Others link Kafka clusters on multiple cloud providers. Technologies such as Apache Kafka’s MirrorMaker 2, Confluent Replicator, Confluent Multi-Region-Clusters, and Confluent Cluster Linking help build such an infrastructure.

Let’s look at a few real-world deployments in the healthcare sector.

Optum (UnitedHealth Group) – Cloud-native Kafka-as-a-Service

Optum is an American pharmacy benefit manager and health care provider. It is a subsidiary of UnitedHealth Group. The Apache Kafka infrastructure is provided as an internal service, centrally managed, and used by over 200 internal application teams.

Optum built a repeatable, scalable, cost-efficient way to standardize data. They leverage the whole Kafka ecosystem:

- Data ingestion from multiple sources (Kafka Connect)

- Data enrichment (Table Joins & Streaming API)

- Aggregation and metrics calculation (Kafka Streams API)

- Sinking data to databases (Kafka Connect)

- Near real-time APIs to serve the data
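The steps in this list can be sketched in a few lines of plain Python. This is only an illustration of the flow (ingest, enrich via a table join, aggregate, sink), not Optum's implementation and not the Kafka Streams API. The claim events, the member table, and all function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical reference table: member id -> plan.
# In Kafka Streams, this role would be played by a table built from a topic.
MEMBER_PLANS = {"m1": "gold", "m2": "silver"}

# Hypothetical claim events, as they might arrive from a source connector.
CLAIMS = [
    {"member_id": "m1", "amount": 120.0},
    {"member_id": "m2", "amount": 80.0},
    {"member_id": "m1", "amount": 30.0},
    {"member_id": "m3", "amount": 55.0},  # no matching row in the table
]


def enrich(events, table):
    """Stream-table join: attach the plan to each claim, dropping events without a match."""
    for event in events:
        plan = table.get(event["member_id"])
        if plan is not None:
            yield {**event, "plan": plan}


def aggregate(events):
    """Running total of claim amounts per plan, emitting the updated state after each event."""
    totals = defaultdict(float)
    for event in events:
        totals[event["plan"]] += event["amount"]
        yield dict(totals)


def run_pipeline(events, table):
    """Return the latest aggregate, standing in for the row a sink connector would write."""
    final = {}
    for snapshot in aggregate(enrich(events, table)):
        final = snapshot
    return final


RESULT = run_pipeline(CLAIMS, MEMBER_PLANS)
print(RESULT)
```

Each stage only consumes the output of the previous one, which mirrors how the Kafka components in the list are chained through topics.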

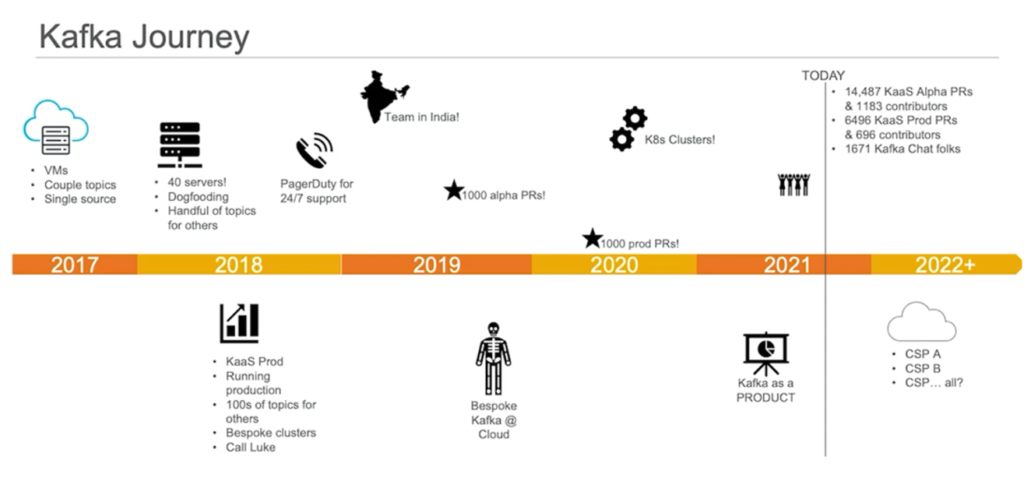

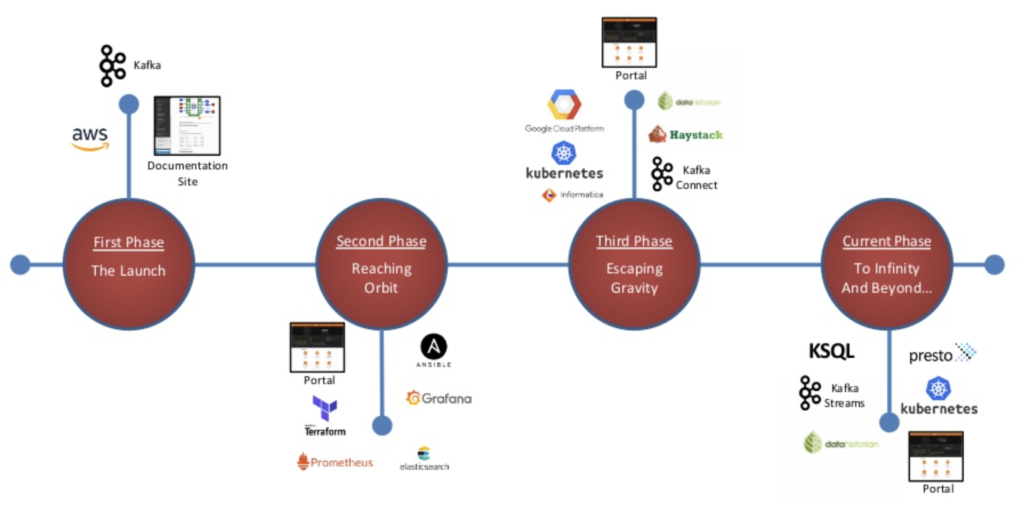

Optum’s Kafka Summit talk explored the journey and maturity curve of their data streaming evolution.

The journey started with a self-managed Kafka cluster on-premises. Over time, they migrated to a cloud-native Kubernetes environment and built an internal Kafka-as-a-Service offering. Optum is now working on a multi-cloud enterprise architecture to deploy across multiple cloud service providers.

Centene – Data Integration for M&A across Infrastructures

Centene is the largest Medicaid and Medicare Managed Care Provider in the US. The healthcare insurer acts as an intermediary for government-sponsored and privately insured healthcare programs. Centene’s mission is to “help people live healthier lives and to help make the health system work better for everyone”.

Centene’s critical challenge is an interesting one: growth. The company went through many mergers and acquisitions in the last decade, including Envolve, HealthNet, Fidelis, and Wellcare.

Integrating and processing data at scale and in real time across various systems, infrastructures, and cloud environments is a considerable challenge. Kafka provides Centene with valuable capabilities, as they explained in an online talk:

- Highly scalable

- High autonomy/decoupling

- High availability & data resiliency

- Real-time data transfer

- Complex stream processing
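The decoupling in this list comes from the log-based design: producers append records, and each consumer group tracks its own read position. The following is a minimal in-memory sketch of that idea. It is not a Kafka client, and the class, group names, and records are hypothetical.

```python
class TopicLog:
    """In-memory stand-in for a single-partition topic (not a Kafka client)."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer group -> next offset to read

    def append(self, record):
        """Append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next batch for a group and advance only that group's offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for i in range(5):
    log.append(f"claim-{i}")

fast = log.poll("billing")        # a fast consumer reads everything available
slow = log.poll("analytics", 2)   # a slow consumer reads only two records
log.append("claim-5")             # the producer is not blocked by the slow consumer
fast_next = log.poll("billing")
slow_next = log.poll("analytics")

print(fast, slow, fast_next, slow_next)
```

Because the log keeps the records, the slower group catches up later without affecting the producer or the other group.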

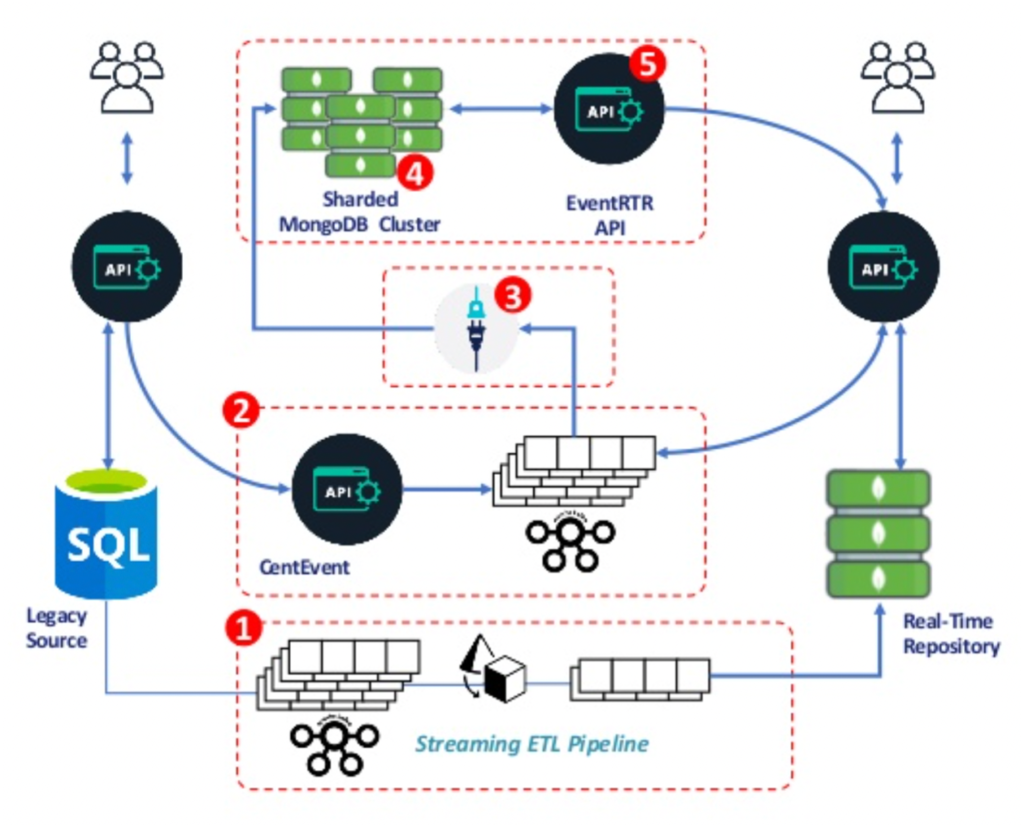

The event-driven integration architecture leverages Apache Kafka with MongoDB.

Bayer – Hybrid Multi-Cloud Data Streaming

Bayer AG is a German multinational pharmaceutical and life sciences company and one of the largest pharmaceutical companies in the world. They leverage Kafka in various use cases and business domains. The following scenario is from Monsanto, which Bayer acquired in 2018.

Bayer adopted a cloud-first strategy and started a multi-year transition to the cloud to provide real-time data flows across hybrid and multi-cloud infrastructures.

The Kafka-based cross-data center DataHub facilitated the migration and the shift to real-time stream processing. It has seen strong enterprise adoption and supports a myriad of use cases. The Apache Kafka ecosystem is the “middleware” to build a bi-directional streaming replication and integration architecture between on-premises data centers and multiple cloud providers.
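One practical question in bi-directional replication is how to keep records from bouncing back and forth between clusters. The sketch below simulates one common answer in plain Python: replicated topics are prefixed with the alias of the source cluster, which is the naming convention MirrorMaker 2 uses by default, and topics that originated on the target are skipped. This is an illustration, not Bayer's setup. The cluster aliases, topic names, and the `replicate` function are hypothetical.

```python
def replicate(source_alias, source, target_alias, target):
    """Copy topics from source to target, prefixing each with the source alias.

    Topics that originated on the target (prefixed with its alias) are skipped,
    so repeated passes in both directions do not create a replication loop.
    """
    for topic, records in source.items():
        if topic.startswith(target_alias + "."):
            continue
        target[f"{source_alias}.{topic}"] = list(records)


# Hypothetical clusters, modeled as topic name -> list of records.
onprem = {"lab-results": ["r1", "r2"]}
cloud = {"trial-data": ["t1"]}

for _ in range(3):  # several passes should not create additional topics
    replicate("onprem", onprem, "cloud", cloud)
    replicate("cloud", cloud, "onprem", onprem)

print(sorted(onprem))
print(sorted(cloud))
```

Each cluster ends up with its own topics plus a clearly named copy of the other side's topics, so consumers can tell local data from replicated data.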

The Kafka journey of Bayer started on AWS. Afterward, some project teams worked on GCP. In parallel, DevOps and cloud-native technologies modernized the underlying infrastructure. Today, Bayer operates a multi-cloud infrastructure with mature, reliable, and scalable stream processing use cases.

Learn about Bayer’s journey and how they built their hybrid and multi-cloud Enterprise DataHub with Apache Kafka and its ecosystem: Bayer’s Kafka Summit talk.

Data Streaming with Kafka across Hybrid and Multi-cloud Infrastructures

Think about IoT sensor analytics, cybersecurity, patient communication, insurance, research, and many other domains. Real-time data beats slow data in the healthcare supply chain almost everywhere.

This blog post explored the value of data streaming with Apache Kafka to modernize IT infrastructure and build hybrid multi-cloud architectures. Real-world deployments from Optum, Centene, and Bayer showed how enterprises deploy Kafka successfully for different use cases in the enterprise architecture.

How do you leverage data streaming with Apache Kafka in the healthcare industry? What architecture does your platform use? Which products do you combine with data streaming? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.