Aftermarket sales and customer service require the right information at the right time to make context-specific decisions. This post explains how to modernize supply chain business process choreography, based on the real-life use case of Michelin, a French tire manufacturer. Data streaming with Apache Kafka enables true decoupling, domain-driven design, and data consistency across real-time and batch systems. Common business goals drove the project: increase customer retention, increase revenue, reduce costs, and improve time to market for innovation.

The State of Data Streaming for Manufacturing in 2023

The evolution of industrial IoT, manufacturing 4.0, and digitalized B2B and customer relations require modern, open, and scalable information sharing. Data streaming allows integrating and correlating data in real-time at any scale. Trends like software-defined manufacturing and data streaming help modernize and innovate the entire engineering and sales lifecycle.

I have recently presented an overview of trending enterprise architectures in the manufacturing industry and data streaming customer stories from BMW, Mercedes, Michelin, and Siemens. A complete slide deck and on-demand video recording are included:

This blog post explores one of the enterprise architectures and case studies in more detail: Context-specific aftersales and service management in real-time with data streaming.

What is Aftermarket Sales and Service? And how does Data Streaming help?

The aftermarket is the secondary market of the manufacturing industry, concerned with the production, distribution, retailing, and installation of all parts, chemicals, equipment, and accessories after the product’s sale by the original equipment manufacturer (OEM) to the consumer. The term ‘aftermarket’ is mainly used in the automotive industry but is just as relevant in other manufacturing industries.

Enterprises leverage data streaming to collect data from cars, dealerships, customers, and many other backend systems and to automate context-specific decisions in real-time, at the moment when they are relevant (predictive maintenance) or valuable (cross-/upselling).

Challenges with Aftermarket Customer Communication

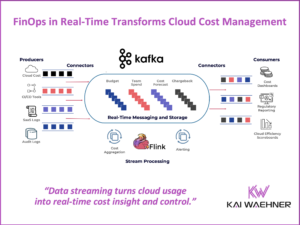

Manufacturers face many challenges when seeking to implement digital tools for aftermarket services. McKinsey research points to five central priorities, all grounded in data, for improving aftermarket services: people, operations, offers, a network of external partners, and digital tools.

While these priorities are related, digitalization is relevant across all business processes in aftermarket services:

Disclaimer: The McKinsey research focuses on aerospace and defense, but the challenges look very similar in other industries, in my experience from customer conversations.

Data Streaming to make Context-specific Decisions in Real-Time

“The newest aftermarket frontier features the robust use of modern technological developments such as advanced sensors, big data, and artificial intelligence,” says McKinsey.

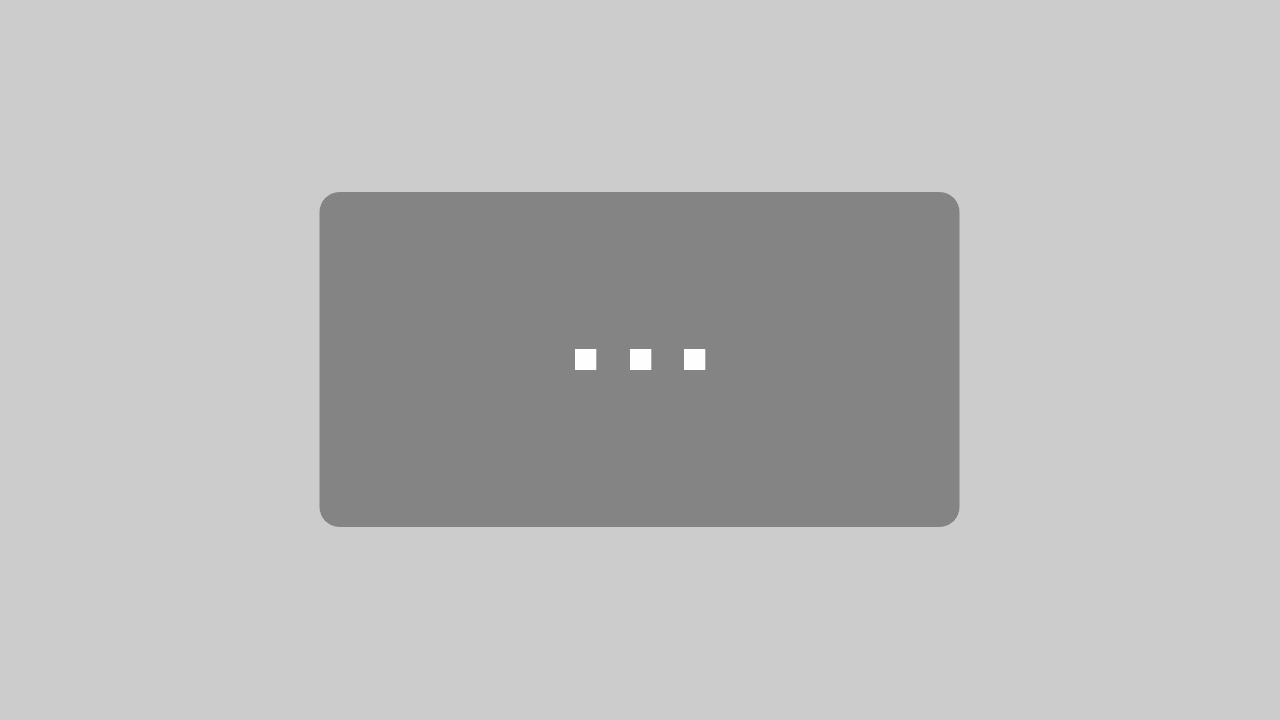

Data streaming helps transform the global supply chain, including aftermarket business processes, with real-time data integration and correlation to make context-specific decisions.

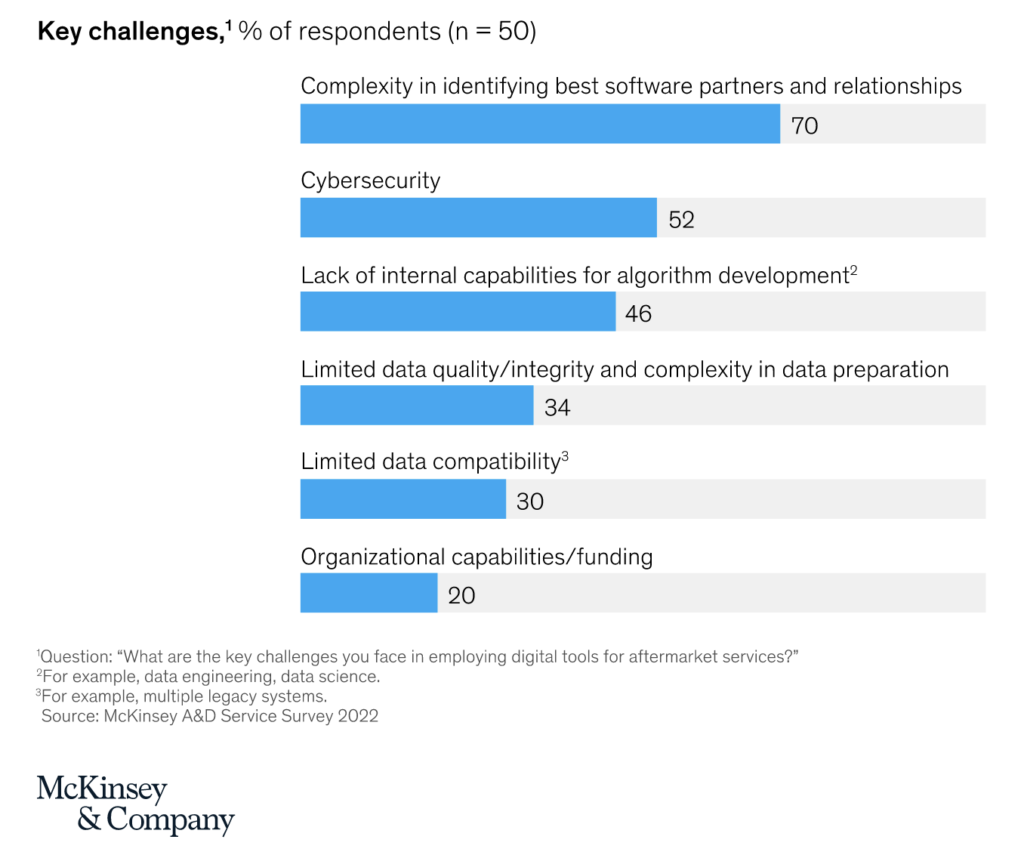

McKinsey mentioned various digital tools that are valuable for aftermarket services:

Interestingly, this coincides with what I see in applications built with data streaming. One key reason is that data streaming with Apache Kafka enables data consistency across real-time and non-real-time applications.
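To make the consistency argument more concrete, here is a minimal sketch in plain Python. It does not use a Kafka client; the `EventLog` class, the consumer group names, and the tire events are hypothetical stand-ins for a single topic partition with per-consumer offsets. The point it illustrates is that a real-time consumer and a batch consumer read the same append-only log at their own pace and still see the same events in the same order.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EventLog:
    """In-memory stand-in for one append-only topic partition (assumption, not Kafka itself)."""
    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=dict)  # consumer group -> next offset to read

    def append(self, event: dict) -> int:
        """Store an event and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group: str, max_events: Optional[int] = None) -> list:
        """Return unread events for a consumer group and advance only that group's offset."""
        start = self.offsets.get(group, 0)
        end = len(self.events) if max_events is None else min(len(self.events), start + max_events)
        self.offsets[group] = end
        return self.events[start:end]


log = EventLog()

log.append({"tire_id": "T-1", "event": "sold"})
realtime_first = log.poll("realtime-alerts")   # real-time consumer reads immediately

log.append({"tire_id": "T-1", "event": "mounted"})
log.append({"tire_id": "T-2", "event": "sold"})
realtime_second = log.poll("realtime-alerts")  # picks up only the two new events

batch_all = log.poll("nightly-report")         # batch consumer reads everything later, in one go

# Both consumers saw identical data in identical order, just at different times.
assert realtime_first + realtime_second == batch_all
```

Because each consumer group tracks its own offset, adding the batch consumer requires no change to the producer or to the real-time consumer.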

Omnichannel retail and aftersales are very challenging for most enterprises. That’s why many enterprise architectures rely on data streaming for their context-specific customer 360 infrastructure and real-time applications.

Michelin: Context-specific Aftermarket Sales and Customer Service

Michelin is a French multinational tire manufacturing company for almost every type of vehicle. The company sells a broad spectrum of tires. They manufacture products for automobiles, motorcycles, bicycles, aircraft, space shuttles, and heavy equipment.

Michelin’s many inventions include the removable tire, the ‘pneurail’ (a tire for rubber-tired metros), and the radial tire.

Michelin presented at Kafka Summit how they moved from a monolithic orchestrator to data streaming with microservices. This project was all about replacing a huge and complex Business Process Management tool (Oracle BPM) that orchestrated their internal logistics flows.

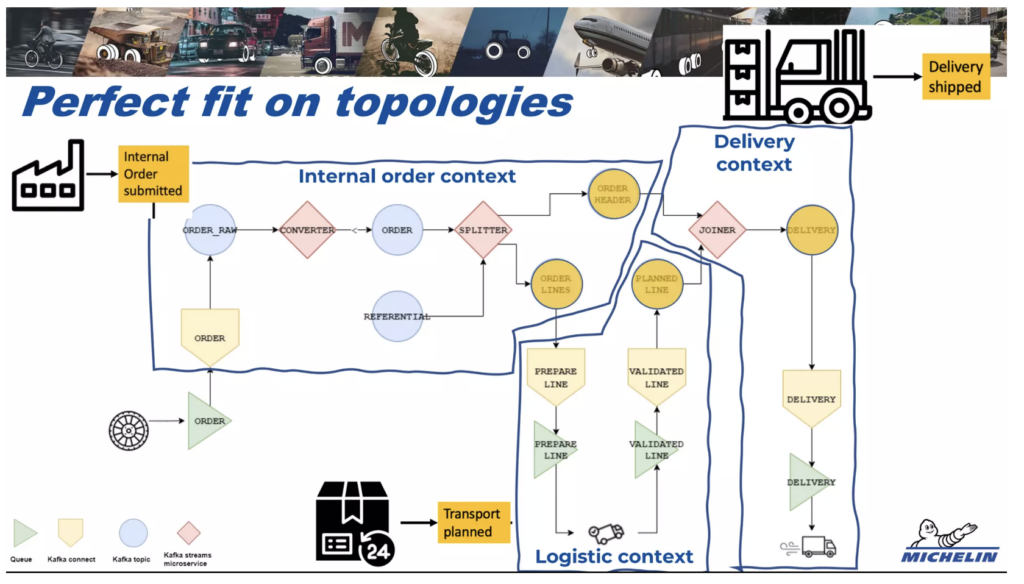

And when Michelin says huge, they really mean it: over 24 processes and 150 million tires moved, representing €10 billion of Michelin turnover. So why replace such a critical component of their information system? Mainly because “it was built like a monolithic ERP and became difficult to maintain, not to say a potential single point of failure”. Michelin replaced it with a choreography of microservices around its Kafka cluster.
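The difference between orchestration and choreography is easier to see in code. The following is a minimal sketch under stated assumptions: the `EventBus` class is a hypothetical in-memory substitute for Kafka topics, and the three services and topic names are invented for illustration rather than taken from Michelin's system. No component calls another one directly; each service only reacts to an event and publishes a follow-up event.

```python
from collections import defaultdict, deque


class EventBus:
    """Hypothetical in-memory event bus standing in for Kafka topics."""

    def __init__(self):
        self.handlers = defaultdict(list)  # topic -> subscribed handlers
        self.queue = deque()               # pending (topic, payload) pairs
        self.history = []                  # topics in the order they were processed

    def subscribe(self, topic, handler):
        self.handlers[topic].append(handler)

    def publish(self, topic, payload):
        self.queue.append((topic, payload))

    def run(self):
        """Deliver queued events until no service emits anything new."""
        while self.queue:
            topic, payload = self.queue.popleft()
            self.history.append(topic)
            for handler in self.handlers[topic]:
                handler(payload)


bus = EventBus()
notifications = []


def warehouse_service(order):
    # Reacts to new orders; knows nothing about transport or notifications.
    bus.publish("stock.reserved", {**order, "warehouse": "WH-1"})


def transport_service(reservation):
    # Reacts to reserved stock; knows nothing about who created the order.
    bus.publish("shipment.planned", {**reservation, "carrier": "C-1"})


def notification_service(shipment):
    notifications.append(f"Order {shipment['order_id']} ships via {shipment['carrier']}")


bus.subscribe("order.created", warehouse_service)
bus.subscribe("stock.reserved", transport_service)
bus.subscribe("shipment.planned", notification_service)

bus.publish("order.created", {"order_id": "O-42", "tires": 4})
bus.run()
```

The process flow emerges from the subscriptions rather than from a central process definition, so a new service can subscribe to `stock.reserved` without any existing service being modified.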

From spaghetti integration to decoupled microservices

Michelin faced the same challenges as most manufacturers: Slow data processing, conflicting information, and complex supply chains. Hence, Michelin moved from a spaghetti integration architecture and batch processing to decoupled microservices and real-time event-driven architecture.

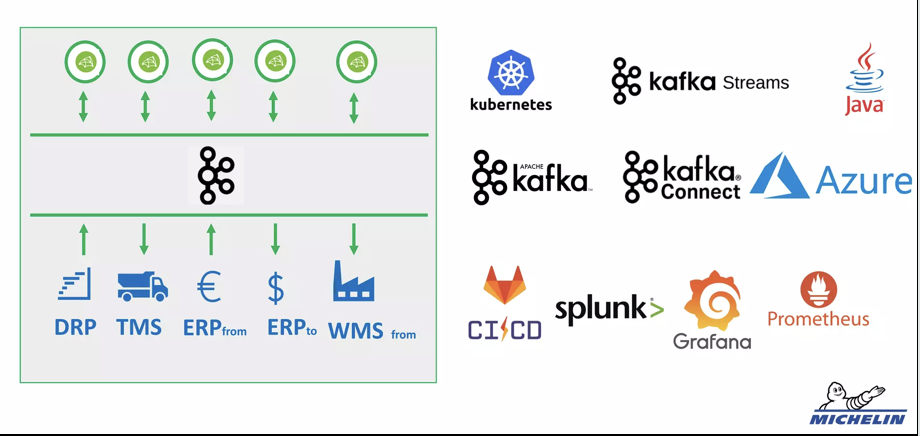

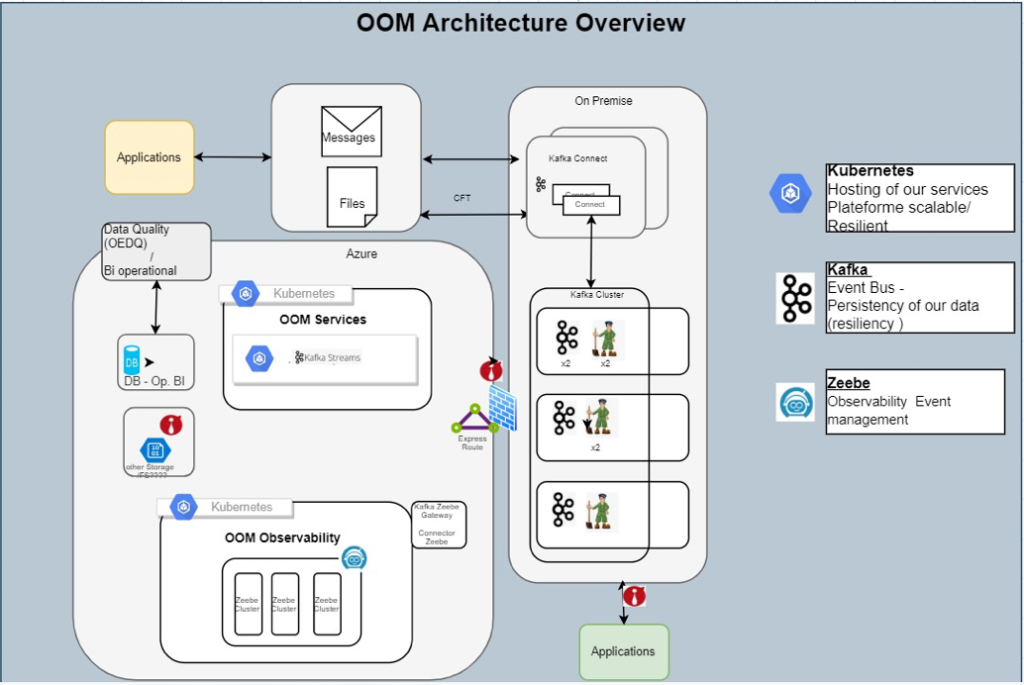

They replaced unreliable and outdated inventory reporting, especially for raw and semi-finished materials, by connecting various systems across the supply chain, including DRP, TMS, ERP, WMS, and more. Apache Kafka provides the data fabric that integrates these systems and keeps the microservices truly decoupled and independent.
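As an illustration of how an up-to-date inventory view can be derived from events of several source systems, here is a small sketch. The event shapes, field names, material, and site names are assumptions made for this example and do not reflect Michelin's actual data model. Each event is folded into a running stock level per site and material, similar in spirit to a stateful aggregation in a stream processor.

```python
from collections import defaultdict

# Hypothetical events as they might arrive from different backend systems.
events = [
    {"source": "ERP", "type": "goods_received", "material": "rubber-compound",
     "site": "plant-A", "qty": 500},
    {"source": "WMS", "type": "goods_issued", "material": "rubber-compound",
     "site": "plant-A", "qty": 120},
    {"source": "TMS", "type": "transfer", "material": "rubber-compound",
     "from": "plant-A", "to": "plant-B", "qty": 200},
]


def apply_event(stock, event):
    """Fold one event into the stock view, keyed by (site, material)."""
    material = event["material"]
    if event["type"] == "goods_received":
        stock[(event["site"], material)] += event["qty"]
    elif event["type"] == "goods_issued":
        stock[(event["site"], material)] -= event["qty"]
    elif event["type"] == "transfer":
        stock[(event["from"], material)] -= event["qty"]
        stock[(event["to"], material)] += event["qty"]
    else:
        raise ValueError(f"unknown event type: {event['type']}")
    return stock


stock = defaultdict(int)
for event in events:
    apply_event(stock, event)

# plant-A: 500 received - 120 issued - 200 transferred out = 180
# plant-B: 200 transferred in
```

Since the view is updated per event, the reported stock is as current as the latest event instead of the latest batch run.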

From human processes to predictive mobility services

However, the supply chain does not end with manufacturing the best tires. Michelin aims to provide the best services and customer experience via data-driven analytics. As part of this initiative, Michelin migrated from orchestration and a single point of failure with a legacy BPM engine to a flexible choreography and true decoupling with an event-driven architecture leveraging Apache Kafka:

Michelin implemented mobility solutions to provide mobility assistance and fleet services to its diverse customer base. For instance, predictive insights notify customers to replace tires or show the best routes to optimize fuel. The new business process choreography enables proactive marketing and aftersales. Context-specific customer service is possible as the event-driven architecture gives access to the right data at the right time (e.g. when the customer calls the service hotline).
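A rough sketch of what "the right data at the right time" can mean in practice: telemetry events continuously update a per-customer context, a rule triggers a proactive notification, and the same context is available the moment the customer calls the hotline. The threshold, the field names, and the functions `on_telemetry` and `on_hotline_call` are hypothetical and only serve to illustrate the pattern.

```python
from dataclasses import dataclass
from typing import Optional

TREAD_LIMIT_MM = 3.0  # hypothetical threshold for a replacement recommendation


@dataclass
class CustomerContext:
    customer_id: str
    last_tread_mm: Optional[float] = None
    open_recommendation: Optional[str] = None


contexts = {}  # customer_id -> CustomerContext, kept current by incoming events


def on_telemetry(event):
    """Update the customer context and return a notification text if one is due."""
    ctx = contexts.setdefault(event["customer_id"], CustomerContext(event["customer_id"]))
    ctx.last_tread_mm = event["tread_mm"]
    if event["tread_mm"] < TREAD_LIMIT_MM:
        ctx.open_recommendation = "replace tires"
        return (f"Notify {ctx.customer_id}: tread at {event['tread_mm']} mm, "
                "replacement recommended")
    ctx.open_recommendation = None
    return None


def on_hotline_call(customer_id):
    """Return the current context so the agent sees it when the call comes in."""
    return contexts.get(customer_id)


alerts = [
    on_telemetry({"customer_id": "C-7", "tread_mm": 4.1}),
    on_telemetry({"customer_id": "C-7", "tread_mm": 2.4}),
]
agent_view = on_hotline_call("C-7")
```

The hotline lookup does not query the source systems at call time; it reads a view that the event stream has already kept current.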

The technical infrastructure is based on cloud-native technologies such as Kubernetes (elastic infrastructure), Apache Kafka (data streaming with components like Kafka Connect and Kafka Streams), and Zeebe (a modern BPM and workflow engine).

From self-managed operations to fully managed cloud

Michelin’s commercial supply chain spans 170 countries. Michelin relies on a real-time inventory system to efficiently manage the flow of products and materials within their massive network.

A strategic decision was the move to a fully managed data streaming service to focus on business logic and innovation in manufacturing, after-sales, and service management. The migration of self-managed Kafka to Confluent Cloud cut operations costs by 35%.

Many companies replace existing legacy BPM engines with workflow orchestration powered by Apache Kafka.

Lightboard Video: How Data Streaming improves Aftermarket Sales and Customer Service

Here is a five-minute lightboard video that describes how data streaming helps with modernizing non-scalable and inflexible data infrastructure for improving the end-to-end supply chain, including aftermarket sales and customer service:

If you liked this video, make sure to follow the Confluent YouTube channel for many more lightboard videos across all industries.

Apache Kafka for automated business processes and improved aftermarket

The Michelin case study explored how a manufacturer improved the end-to-end supply chain from production to aftermarket sales and customer service. For more case studies, check out the free “The State of Data Streaming in Manufacturing” on-demand recording or read the related blog post.

Critical challenges in aftermarket sales and customer service are missing information, rising costs, customer churn, and decreasing revenue. Real-time monitoring and context-specific decision-making improve the customer journey and retention. Learn more by reading how data streaming enables building a control tower for real-time supply chain operations.

How do you leverage data streaming in your aftermarket use cases for sales and service management? Did you already build a real-time infrastructure across your supply chain? Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.