Live commerce combines instant purchasing of a featured product and audience participation. The COVID-19 pandemic accelerated this trend. Live commerce emerged in China but has since arrived in the West across industries, whether you sell fashion, toys, cars, digital features, or anything else. This blog post explores the need for real-time data streaming with Apache Kafka between applications to enable live commerce across online and brick-and-mortar stores in different regions, countries, and continents.

The discussion covers several building blocks of a live commerce enterprise architecture. Retail topics include omnichannel retail, hyper-personalized customer communication, transactional data processing, and innovative entertainment with Augmented Reality. Other technical aspects include the replayability of historical data and its correlation with real-time events, AI and Machine Learning applied to real-time data, and edge analytics in the retail store.

Live commerce transforms the retail experience

“The arrival of Alibaba’s Taobao Live in May 2016 marked the opening of a new chapter in sales. The Chinese retail giant had pioneered a powerful new approach: linking up an online live stream broadcast with an e-commerce store to allow viewers to watch and shop at the same time,” reports McKinsey in a great article about the shopping revolution. They explain: “Live commerce combines instant purchasing of a featured product and audience participation through a chat function or reaction buttons. In China, live commerce has transformed the retail industry and established itself as a major sales channel in less than five years.”

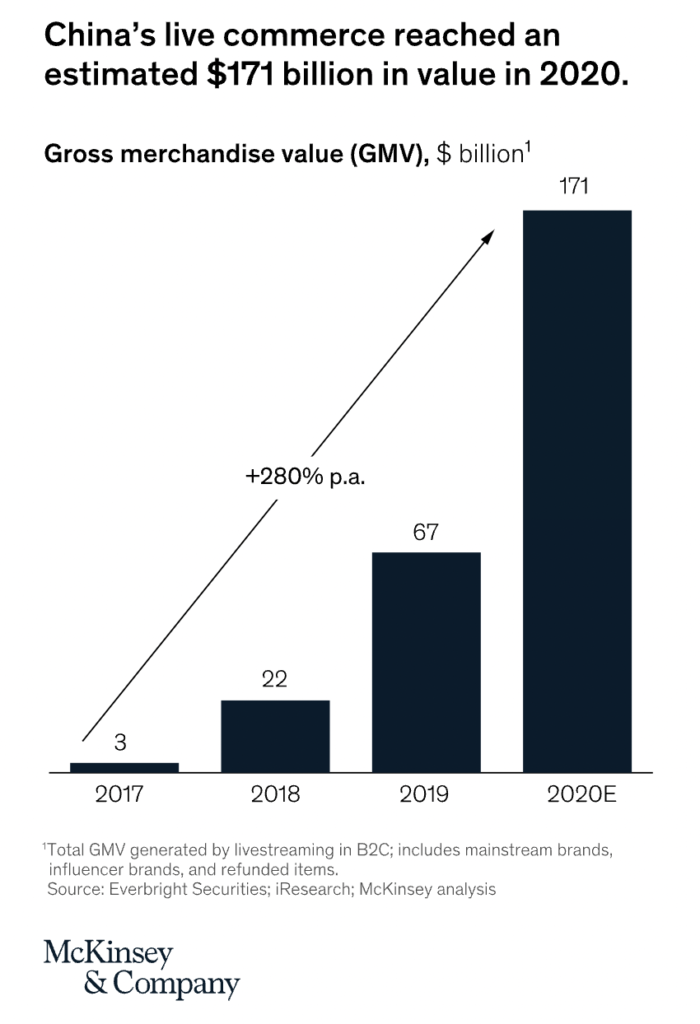

McKinsey shows the impressive growth of live commerce in China in the following diagram:

In the meantime, live commerce has arrived in the Western world. The earliest adopters outside of China include the German beauty retailer Douglas, the fashion retailer Tommy Hilfiger in Europe and the US, and the US retail giant Walmart. The global COVID-19 pandemic was a huge driver, too.

Live commerce via social apps everywhere

Live commerce helps brands and retailers to create value and increase online revenue. Online marketplaces, live auctions, influencer streaming, and live events such as a product launch drive sales in various ways:

- More web traffic and an increased audience

- Increased conversion rates via interactive discussions combined with time-limited tactics such as one-off coupons

- Context-specific upsell strategies in real-time to increase the average basket size

- Improved brand appeal and differentiation by providing an innovative and entertaining shopping experience

For example, AliExpress, an Alibaba subsidiary, launched a live commerce service called “AliExpress Live”, which saw as many as 320,000 goods added to the cart per one million views during a single live streaming session. These growth numbers and conversion rates are insane compared to traditional retail. It is no surprise that many retailers, auction houses, and social platforms want a piece of this enormous cake.

Buy now, pay later (BNPL) as an accelerator for live commerce

“Point-of-sale (POS) financing services in the United States have grown significantly over the past 24 months, especially since the onset of COVID-19. Trends fueling growth include digitization, rising merchant adoption, increasing repeat usage among younger consumers, and an expanding set of players targeting lending at point of sale, a service also known as ‘buy now, pay later,’” reports McKinsey.

We can see this trend across the globe. Companies like Klarna, Afterpay, and PayPal have added BNPL to their primary products and apps. It is just one click away and often even set as the default payment option.

The following diagram shows the “Buy Now, Pay Later Adoption by Generation, 2019-2021” from Cornerstone Advisors:

BNPL is an excellent combination with live commerce. People can buy cool stuff even if they cannot afford it right now. A scary trend for consumers, but a massive opportunity for retailers (moral point of view excluded).

Let’s now look at data streaming, and why this is so relevant for live commerce.

Real-time data streaming with Apache Kafka for live commerce

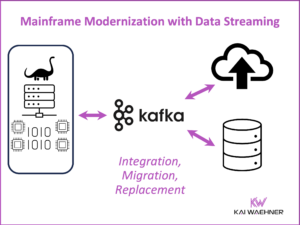

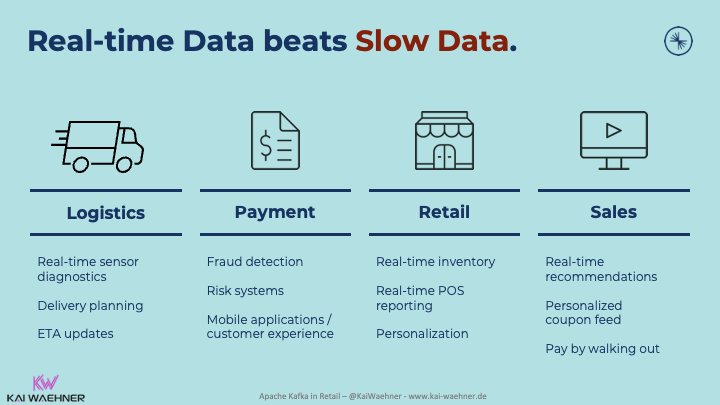

Real-time data beats slow data. That’s true for almost every business scenario:

Live commerce includes not just the live sales activity itself but the whole end-to-end sales process, including payment, order fulfillment, shipping, and much more. Hence, don’t expect that buying a commercial off-the-shelf (COTS) live commerce platform will solve all your challenges!

The live commerce retail experience is a stream of events

Live commerce requires a great end-to-end customer experience. Most actions and data correlations should, or even must, happen in real time. Data correlation requires connectivity to the social platforms, the live commerce sales platform, and many other backend processes and applications:

Several concepts play a role in live commerce to provide a good customer experience and increased conversion rate compared to traditional retail techniques:

- Integration with backend systems such as real-time inventory, CRM, ERP, third-party payment providers, loyalty platforms, and so on to provide the correct contextual information to any consumer application

- Real-time data correlation for intelligent communication and pricing

- Omnichannel user interfaces for cross-device experiences

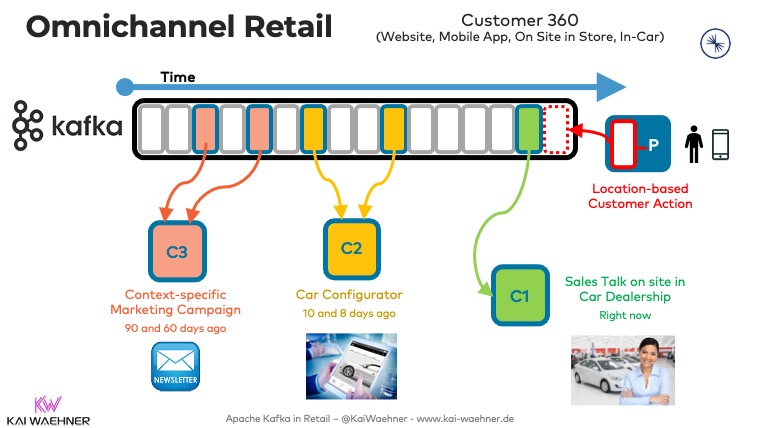

- The replayability of historical events for context-specific next best actions and recommendations

- Automation of (some) communication with chatbots and other natural language processing (NLP) techniques for faster response times and cost reduction

- Enhanced and entertaining customer experiences with groundbreaking technologies such as Augmented Reality (AR) and Virtual Reality (VR)

- Edge analytics for location-based services and deeper integration into brick-and-mortar stores while the customer is attending live events

Live commerce in motion with event streaming and Kafka

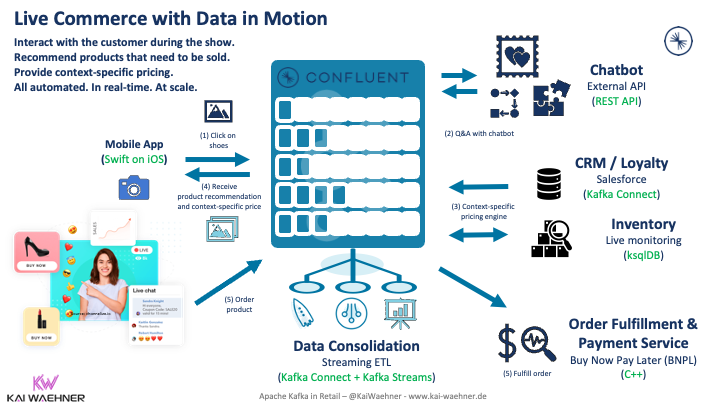

Live commerce requires the right action at the right time. Requirements include:

- Interact with the customer during the show.

- Recommend products that need to be sold.

- Provide context-specific pricing.

- All automated. In real-time. At scale.
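To make "the right action at the right time" a bit more concrete, here is a minimal Python sketch of a decision function that a stream processor could apply to each event during a show. The event fields (`viewers`, `add_to_cart`), the thresholds, and the `ShowEvent` and `Offer` types are hypothetical illustrations, not part of any product. In a real deployment, this logic would run inside a Kafka consumer or Kafka Streams application and read stock levels from a real-time inventory source.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShowEvent:
    # Hypothetical snapshot emitted periodically during a live show
    product_id: str
    viewers: int      # current audience size
    add_to_cart: int  # add-to-cart actions in the last window


@dataclass
class Offer:
    # Hypothetical time-limited coupon pushed to the audience
    product_id: str
    discount_pct: int
    valid_seconds: int


def next_best_action(event: ShowEvent, stock: dict[str, int]) -> Optional[Offer]:
    """Return a time-limited offer, or None if no action is needed."""
    in_stock = stock.get(event.product_id, 0)
    if in_stock == 0:
        return None  # never promote what cannot be shipped
    conversion = event.add_to_cart / event.viewers if event.viewers else 0.0
    # Low conversion but plenty of stock: push a short coupon
    if conversion < 0.02 and in_stock > 100:
        return Offer(event.product_id, discount_pct=10, valid_seconds=300)
    # Otherwise (healthy conversion or scarce stock): no discount
    return None
```

For example, `next_best_action(ShowEvent("sneaker-42", 10_000, 50), {"sneaker-42": 500})` returns a 10% coupon valid for five minutes, because only 0.5% of viewers added the product to their cart while plenty of stock is available.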

Some businesses buy a live commerce platform. Others differentiate by building their own. Live commerce only works well if all the other applications are integrated in real time. Hence, event streaming with Kafka plays a pivotal role in many next-generation retail architectures, whether you build your own live commerce platform or buy (and integrate) a third-party product or cloud service.

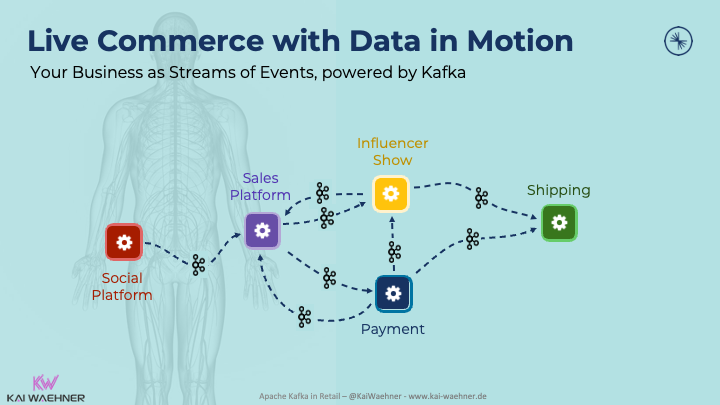

Here is an example architecture for a decentralized, scalable, real-time live commerce infrastructure powered by Kafka and its ecosystem:

Building blocks for a live commerce architecture powered by Kafka

From an event streaming perspective, here are some potential building blocks for a live commerce architecture (you don’t need all, and there can be others, too):

- Omnichannel retail

- The replayability of historical data

- AI and Machine Learning applied to real-time data

- Hyper-personalized customer communication

- Transactional and analytical data processing

- Groundbreaking entertainment with augmented reality / virtual reality

- Edge analytics in the retail store

Let’s explore each building block in more detail in the following subsections.

Omnichannel real-time customer experience with true decoupling

One of Kafka’s key strengths is the true decoupling between producers and consumers, which enables omnichannel retail architectures. As Kafka stores events as long as you want (from minutes to years), each consumer can process the data at its own pace, whether in real time, near real time, in batch, or via a request-response call:

Domain-driven Design (DDD) and truly decoupled microservices are much easier to build with Kafka than using traditional message queues or ETL/ESB tools. Kafka enables a truly decentralized Data Mesh architecture with any combination of technologies, products, and cloud services.
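The following minimal Python sketch illustrates this decoupling with an in-memory, append-only log. It is a simplified model of a single Kafka topic partition, not the Kafka client API: each consumer group tracks its own offset, so a real-time consumer and a slower batch consumer read the same events independently, and adding a consumer does not affect the producer. The class, method, and group names are hypothetical.

```python
from collections import defaultdict


class EventLog:
    """Toy model of a single Kafka partition: an append-only list of events."""

    def __init__(self):
        self._events = []
        self._offsets = defaultdict(int)  # committed offset per consumer group

    def append(self, event) -> int:
        """Producer side: append an event and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, group: str, max_records: int = 100) -> list:
        """Consumer side: read from the group's own offset, then commit."""
        start = self._offsets[group]
        batch = self._events[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = EventLog()
for order in ["order-1", "order-2", "order-3"]:
    log.append(order)

# The mobile app consumes events one at a time as they arrive ...
realtime = log.poll("mobile-app", max_records=1)
# ... while the data warehouse reads everything later, at its own pace.
batch = log.poll("data-warehouse")
```

Both groups see the full event history; neither consumer slows down the other or the producer.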

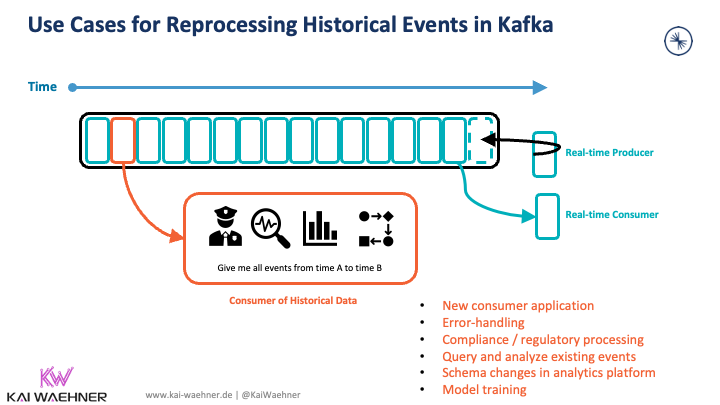

Replayability to reuse and correlate historical data with real-time events

The storage capability of Kafka is helpful for many use cases. From a technical perspective, the replayability of historical events allows scenarios like:

- New consumer application

- Error-handling

- Compliance / regulatory processing

- Query and analyze existing events

- Schema changes in an analytics platform

- Model training

From a business perspective, the replay of historical events helps to

- improve the next live event by analyzing past events (including customer reactions, Q&A, order history, etc.)

- estimate the demand for live events and correlate it with real-time inventory

- enable data science teams to build new algorithms for marketing and sales strategies

- support many other use cases that a retail business expert might come up with after seeing the possibility of accessing historical information in guaranteed order (per partition), with timestamps, and correlated to customer IDs.
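A new consumer application can bootstrap its state by replaying a topic from the earliest offset and then keep processing live events with the same code. The sketch below is a simplified, stdlib-only illustration rather than Kafka client code: it rebuilds per-customer order history from retained events, and the same function then applies new real-time events. The event fields are hypothetical. Because Kafka guarantees ordering per partition, keying events by customer ID keeps each customer's history in order.

```python
from collections import defaultdict

# Hypothetical retained events, in log order (offset = list index)
history = [
    {"customer": "c1", "ts": 1, "type": "order", "sku": "lipstick"},
    {"customer": "c2", "ts": 2, "type": "question", "text": "Vegan?"},
    {"customer": "c1", "ts": 3, "type": "order", "sku": "mascara"},
]


def apply(state: dict, event: dict) -> None:
    """Fold one event into per-customer state (same logic for replay and live)."""
    if event["type"] == "order":
        state[event["customer"]].append(event["sku"])


state = defaultdict(list)
for event in history:  # 1) replay from the earliest offset
    apply(state, event)

live_event = {"customer": "c1", "ts": 4, "type": "order", "sku": "perfume"}
apply(state, live_event)  # 2) continue with real-time events
```

Using one `apply` function for both phases avoids a separate batch job for the initial load.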

A long retention time and Tiered Storage for an initial bootstrap

The retention time in Kafka can be configured to be months, years, or even forever. The replay capability solves the challenge of building an initial bootstrap. Don’t underestimate this feature. Most proprietary streaming services (such as AWS Kinesis) and eventing interfaces from cloud services (such as Salesforce) retain only a limited window of historical data, often just hours or a few days by default. Limited retention kills many replay use cases, as it does not offer the option to perform a one-time snapshot before starting the real-time CDC.

Tiered Storage for Kafka makes long-term storage in Kafka cost-efficient and scalable, even for terabytes or petabytes of data. The post “Can Apache Kafka replace a database, data lake, or lakehouse?” goes into more detail on this discussion.
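Retention is configured per topic. As a hedged example, the helper below builds Apache Kafka topic-level settings as strings: `retention.ms=-1` keeps events forever, while `remote.storage.enable` and `local.retention.ms` belong to Tiered Storage (KIP-405), introduced in Apache Kafka 3.6 and requiring a remote storage plugin configured on the brokers. The helper function and the chosen values are assumptions for illustration; managed Kafka services expose Tiered Storage differently, so check your platform's documentation.

```python
from typing import Optional

MS_PER_DAY = 24 * 60 * 60 * 1000


def live_commerce_topic_config(
    retention_days: Optional[int] = None, local_days: int = 1, tiered: bool = True
) -> dict[str, str]:
    """Build Kafka topic-level configs (hypothetical helper).

    retention_days=None means 'keep forever' (retention.ms = -1).
    With tiered storage, only the most recent `local_days` stay on broker disks;
    older segments are served from remote object storage.
    """
    config = {
        "retention.ms": "-1" if retention_days is None
        else str(retention_days * MS_PER_DAY),
    }
    if tiered:
        config["remote.storage.enable"] = "true"
        config["local.retention.ms"] = str(local_days * MS_PER_DAY)
    return config


# Keep order events forever, but only one day on local broker disks
orders_config = live_commerce_topic_config()
# Keep chat events for 365 days without tiered storage
chat_config = live_commerce_topic_config(retention_days=365, tiered=False)
```

With the confluent-kafka Python client, such a dictionary can be passed as the `config` argument of `NewTopic` when creating a topic via the admin client.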

Conversational AI for cost reduction with chatbots and speech translation

Natural Language Processing (NLP) helps many real-world projects with service desk automation, customer conversations with a chatbot, content moderation in social networks, and many other use cases. Kafka serves as the scalable real-time orchestration layer but is often used for additional tasks, such as embedding an analytic model into a Kafka streaming microservice:

NLP within the streaming architecture enables massive cost reductions and shortens the response time in a live commerce infrastructure. NLP adds immense business value even if just 50% of the most fundamental questions in the chat and comments are answered automatically.
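As a deliberately simple stand-in for a real NLP model, the sketch below shows where such a model sits in a streaming microservice: each chat message is classified, frequent questions are answered automatically, and everything else is routed to a human host. The keyword-matching `classify` function and the FAQ entries are hypothetical placeholders; a production system would call a trained intent-classification model instead.

```python
import re
from typing import Optional

# Hypothetical FAQ: intent -> (trigger keywords, canned answer)
FAQ = {
    "shipping": ({"shipping", "delivery", "deliver"}, "Free delivery within 3 business days."),
    "sizes": ({"size", "sizes", "fit"}, "Sizes XS to XXL are available."),
    "returns": ({"return", "refund"}, "Returns are free for 30 days."),
}


def classify(message: str) -> Optional[str]:
    """Placeholder for an NLP intent model: naive keyword matching."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, (keywords, _) in FAQ.items():
        if words & keywords:
            return intent
    return None


def handle_chat(message: str) -> tuple[str, str]:
    """Return (route, text): auto-answer known intents, escalate the rest."""
    intent = classify(message)
    if intent is not None:
        return "bot", FAQ[intent][1]
    return "human", message
```

Even with such a crude classifier, every message routed to `"bot"` is one the host does not have to answer live.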

I wrote a detailed article that explores how Apache Kafka is used with Machine Learning platforms at the carmaker BMW, the online travel and booking portal Expedia, and the dating app Tinder for reliable real-time conversational AI, NLP, and chatbots.

Real-time sentiment analysis to improve live shows

Related to the above topic, NLP is also helpful to analyze the chat, comments, live surveys, and other feedback in real-time to act proactively during the live event.

Sentiment analysis uses NLP to systematically identify, extract, quantify, and study affective states and subjective information. You can make (manual or automated) real-time decisions on questions such as:

- Do people like the product?

- Should we present it differently?

- Do the structure of the show and the camera view work?

- Should we focus on other features of the product?

- Is any immediate emergency action needed, like focusing on different parts or stopping the product presentation?
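To illustrate the kind of real-time signal the show team could act on, here is a minimal sketch that keeps a rolling sentiment score over the last N chat messages. The tiny word lexicon is a hypothetical placeholder for a trained sentiment model, and the window size and alert threshold are arbitrary assumptions.

```python
import re
from collections import deque

# Hypothetical lexicon; a real system would use a trained sentiment model
POSITIVE = {"love", "great", "nice", "want", "beautiful"}
NEGATIVE = {"boring", "expensive", "ugly", "bad", "hate"}


def score(message: str) -> int:
    """+1 per positive word, -1 per negative word."""
    words = re.findall(r"[a-z]+", message.lower())
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


class RollingSentiment:
    """Average sentiment over the last `window` messages of a live show."""

    def __init__(self, window: int = 50, alert_below: float = -0.5):
        self.scores = deque(maxlen=window)  # old scores drop out automatically
        self.alert_below = alert_below

    def update(self, message: str) -> float:
        """Score a new chat message and return the current rolling average."""
        self.scores.append(score(message))
        return sum(self.scores) / len(self.scores)

    def needs_attention(self) -> bool:
        """True if the audience mood has turned negative."""
        if not self.scores:
            return False
        return sum(self.scores) / len(self.scores) < self.alert_below
```

A host dashboard could call `update` for every chat event and raise a visual alert whenever `needs_attention()` flips to `True`.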

Sentiment analysis is a prevalent hello world example for AI and Machine Learning. If you search for Kafka-powered examples with any ML framework, most show you how to implement sentiment analysis on Twitter data. Adapting the model training to your own data set is pretty straightforward, even though the devil lies in the details, of course. However, model training is only a fraction of the real-world challenges in an ML architecture.

Data integration at scale, ML infrastructure monitoring, reliable real-time model predictions, and similar challenges are where Kafka’s characteristics often make the ML project successful.

Sony PlayStation understands gamers’ sentiment in real-time

Sony PlayStation is a great real-world example of sentiment analysis with Kafka. In a Kafka Summit talk, Sony described their journey from daily batch jobs to real-time data processing and analytics with Apache Kafka. This enables them to understand gamers’ sentiment by streaming data from social feeds and performing language processing in real time.

I wrote a detailed article if you want to learn more about deploying any Machine Learning model in Kafka applications.

Hyper-personalized context-specific customer experience

A hyper-personalized online retail experience turns each customer visit into a one-on-one marketing opportunity. This communication technique is crucial for online stores and can significantly change live commerce, too.

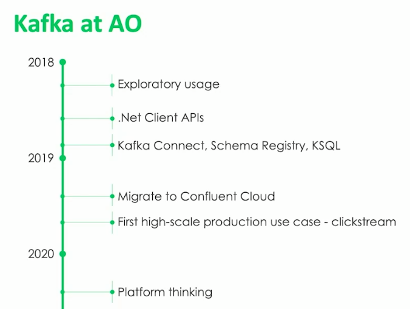

AO.com is an electrical retailer in the UK that implemented a hyper-personalized real-time experience. Event streaming applications correlate historical customer data with real-time digital signals. This capability maximizes customer satisfaction and revenue growth and increases customer conversions.
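Correlating historical customer data with real-time digital signals is often implemented as a stream-table join: each incoming event is enriched with the latest customer profile, which is itself materialized from a (typically compacted) topic. The sketch below models this with plain Python dictionaries; in Kafka Streams or ksqlDB, this would be a KStream-KTable join. All field names and values are hypothetical and do not describe AO.com's actual implementation.

```python
from typing import Optional

# Table side: latest profile per customer, e.g. materialized from a compacted topic
profiles: dict[str, dict] = {}


def on_profile_update(event: dict) -> None:
    """Upsert semantics: the newest profile per key wins."""
    profiles[event["customer_id"]] = event


def on_click(click: dict) -> Optional[dict]:
    """Stream side: enrich a click with the current profile (inner join)."""
    profile = profiles.get(click["customer_id"])
    if profile is None:
        return None  # inner join: drop clicks from unknown customers
    return {
        **click,
        "segment": profile["segment"],
        "lifetime_orders": profile["lifetime_orders"],
    }


on_profile_update({"customer_id": "c42", "segment": "bargain-hunter", "lifetime_orders": 3})
on_profile_update({"customer_id": "c42", "segment": "loyal", "lifetime_orders": 12})
enriched = on_click({"customer_id": "c42", "product": "washing-machine"})
```

The enriched event carries the customer's current segment, so downstream personalization logic does not need to query the CRM synchronously.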

Building a hyper-personalized experience requires real-time data integration and correlation at scale. The realization is a journey that takes some time. AO presented their maturity curve of the last few years:

Similar to AO.com, imagine how you could improve your live commerce use cases with hyper-personalized real-time customer communication.

Let’s talk about one example: Embedding a Lead Scoring Model (LSM) into your real-time conversations with customers can speed up sales engagement and increase conversions. Speed to contact leads with the correct contextual information is critical in live commerce. Insights into the lead score, e.g., the signals behind it, are essential as well. Recommendations, product discounts, and up- and cross-selling go beyond simple business rules and are applied in real time when it makes the most sense.
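A lead scoring model can be embedded in the stream processor and evaluated on every new signal. The sketch below uses a hand-written logistic function with made-up weights purely for illustration; the signal names, weights, bias, and the 0.7 threshold are hypothetical. A real model would be trained on historical data (for example, replayed from Kafka).

```python
import math

# Hypothetical weights of a pre-trained logistic regression model
WEIGHTS = {
    "watched_minutes": 0.08,
    "chat_messages": 0.3,
    "product_page_views": 0.5,
    "is_returning_customer": 1.2,
}
BIAS = -4.0


def lead_score(signals: dict[str, float]) -> float:
    """Probability-like score in (0, 1) from real-time engagement signals."""
    z = BIAS + sum(WEIGHTS[name] * signals.get(name, 0.0) for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def next_action(signals: dict[str, float], threshold: float = 0.7) -> str:
    """Route hot leads to a sales agent; nurture the rest with a recommendation."""
    return "contact_now" if lead_score(signals) >= threshold else "recommend_product"
```

A returning customer who watched 20 minutes, wrote 3 chat messages, and viewed 4 product pages scores about 0.85 and is routed to `"contact_now"`; a viewer who only watched 2 minutes scores about 0.02.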

Transactional and analytical data processing in motion

Many people still think about Kafka as a system for big data workloads. That’s indeed what it was built for over a decade ago. However, in the meantime, over 50% of use cases I see at our customers are about processing transactions in real-time with the need for zero data loss. Transactional data includes integration with the point of sale (POS), payment processing, fraud detection, CRM and ERP communication, and much more in the retail industry.
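Zero data loss in Kafka relies on producer and broker settings such as `acks=all`, `enable.idempotence=true`, and an appropriate replication factor combined with `min.insync.replicas`, plus consumers that process each event effectively once. The sketch below focuses on the consumer side: a hypothetical payment handler that deduplicates by event ID, so redelivered events (for example, after a consumer restart) do not charge a customer twice. The in-memory set stands in for a durable store; Kafka's own transactions (exactly-once semantics) are an alternative when both input and output live in Kafka.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentProcessor:
    """Idempotent consumer: applies each payment event once, even if redelivered."""

    # Stand-in for a durable store; in production, record the ID and the
    # balance change in the same database transaction.
    processed_ids: set = field(default_factory=set)
    balances: dict = field(default_factory=dict)

    def handle(self, event: dict) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event["event_id"] in self.processed_ids:
            return False  # redelivery after a retry or rebalance: skip it
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["amount_cents"]
        self.processed_ids.add(event["event_id"])
        return True
```

Combining at-least-once delivery with this kind of deduplication is a common way to get effectively-once business outcomes.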

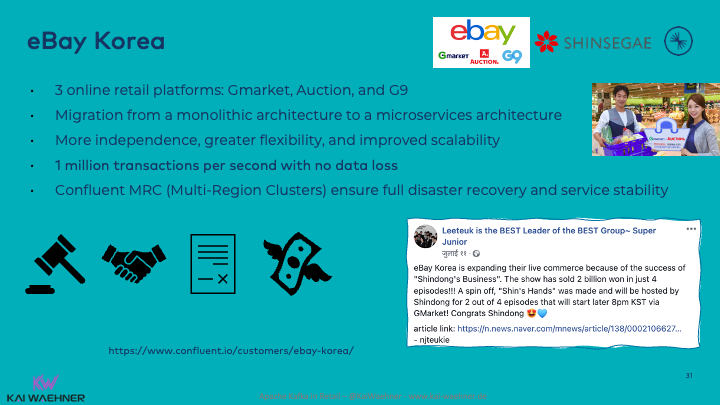

eBay Korea – Multi-region Kafka for processing transactional data

Here is a brilliant case study for transactional workloads across multiple regions to ensure full disaster recovery and service stability without any data loss. eBay Korea (acquired by Shinsegae) uses Apache Kafka for live commerce and transactional event streaming:

More details about eBay Korea’s Kafka deployments are available in the case study.

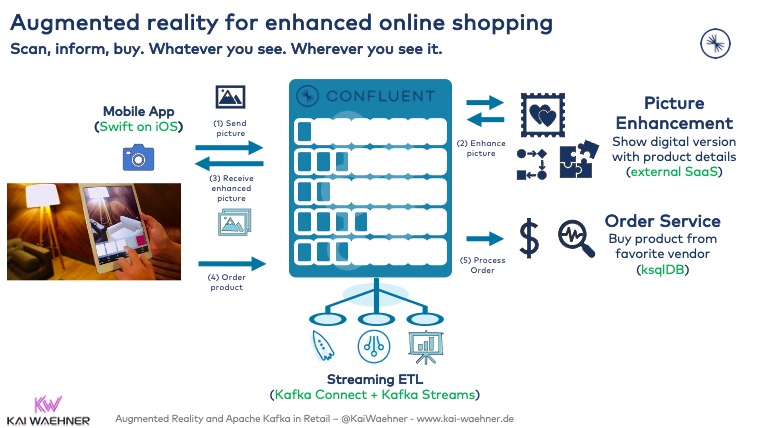

Augmented Reality to build an entertaining live commerce metaverse

Augmented Reality (AR) and Virtual Reality (VR) are gaining traction across industries beyond gaming, including retail, manufacturing, transportation, and healthcare. Event streaming plays a key role as a scalable real-time integration and orchestration layer for AR and VR applications:

Today, most live commerce offerings “just” use standard mobile apps. However, AR and VR make the customer experience much more fun. They allow closer interaction with the salesperson (a beloved celebrity or influencer).

We built a demo that integrates an innovative AR mobile shopping experience with the backend systems via the event streaming platform Apache Kafka.

The beauty of an event-driven architecture combined with patterns like Data Mesh is that you can onboard new features or technologies step by step. There is no need for a big bang or the integration of a monolithic proprietary product to provide such a solution.

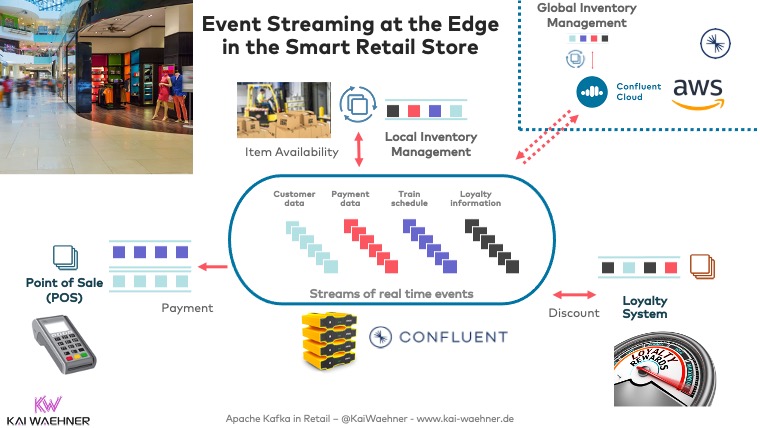

Edge analytics in the retail store

Most retail companies have a cloud-first strategy to focus on business problems using an agile, elastic, serverless infrastructure.

However, low-latency use cases, cost-efficiency in a connected world, or lousy internet connectivity (e.g., stores in malls) require edge computing outside a data center or cloud. Hence, many retailers deploy application logic, including event streaming, at the edge:

“A Hybrid Streaming Architecture for Smart Retail Stores with Apache Kafka” explores this use case in more detail. A key benefit is that retailers can deploy the same architecture, technologies, APIs, and software they use in the cloud on small computers in the retail store to enable edge computing. Use cases include location-based services, up-selling and discounting, and integration with on-site devices (point of sale, vending machines, fun devices, whatever).
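One reason to run event streaming in the store is lousy connectivity: events must not be lost while the uplink is down. The sketch below models a store-and-forward buffer at the edge that keeps events locally and forwards them to the cloud in order once the connection returns. It is a conceptual, stdlib-only illustration; in a real deployment, a local Kafka cluster plus a replication tool (such as MirrorMaker 2 or Cluster Linking) would take over this role, and the `send` callback here is hypothetical.

```python
from collections import deque
from typing import Callable


class EdgeBuffer:
    """Store-and-forward queue for a retail store with an unreliable uplink."""

    def __init__(self, send: Callable[[dict], bool]):
        self.send = send        # hypothetical: returns True if the cloud acked
        self.pending = deque()  # events not yet acknowledged by the cloud

    def publish(self, event: dict) -> None:
        """Local producers (POS, sensors, apps) always succeed locally."""
        self.pending.append(event)
        self.flush()

    def flush(self) -> int:
        """Forward pending events in order; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break  # uplink down: keep the event and retry later
            self.pending.popleft()
            sent += 1
        return sent
```

Stopping at the first failed send preserves the original event order in the cloud, which matters for transactional data such as POS sales.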

I have written plenty of articles about this already, such as use cases for event streaming at the edge and an infrastructure checklist for Apache Kafka at the edge.

Live commerce requires real-time data streaming

The building blocks in this blog post covered various concepts used in a live commerce enterprise architecture. One thing is clear: You can buy a live commerce product or build your own. But the retail innovation only works if data is moved between different applications in real-time and used for data correlation at the right time and context.

Event streaming plays a crucial role in modern retail architectures. Therefore, it is no surprise that Apache Kafka can help to build your next-generation live commerce infrastructure. eBay Korea is a great success story for deploying transactional data flows across multiple regions for zero data loss, even in a disaster.

Do you already sell your products via live commerce? What technologies and architectures do you use? Are event streaming and Kafka part of the architecture? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.