This blog post explores the state of data streaming for the insurance industry. The evolution of claim processing, customer service, telematics, and new business models requires real-time end-to-end visibility, reliable and intuitive B2B and B2C communication, and integration with pioneering technologies like AI/machine learning for image recognition. Data streaming makes it possible to integrate and correlate data in real time at any scale, improving most business processes in the insurance sector much more cost-efficiently.

I look at trends in the insurance sector to explore how data streaming helps as a business enabler, including customer stories from Allianz, Generali, Policygenius, and more. A complete slide deck and on-demand video recording are included.

General trends in the insurance industry

The insurance industry is fundamental to many aspects of modern life and society, including financial protection, healthcare, life insurance, property protection, business continuity, and transportation and travel.

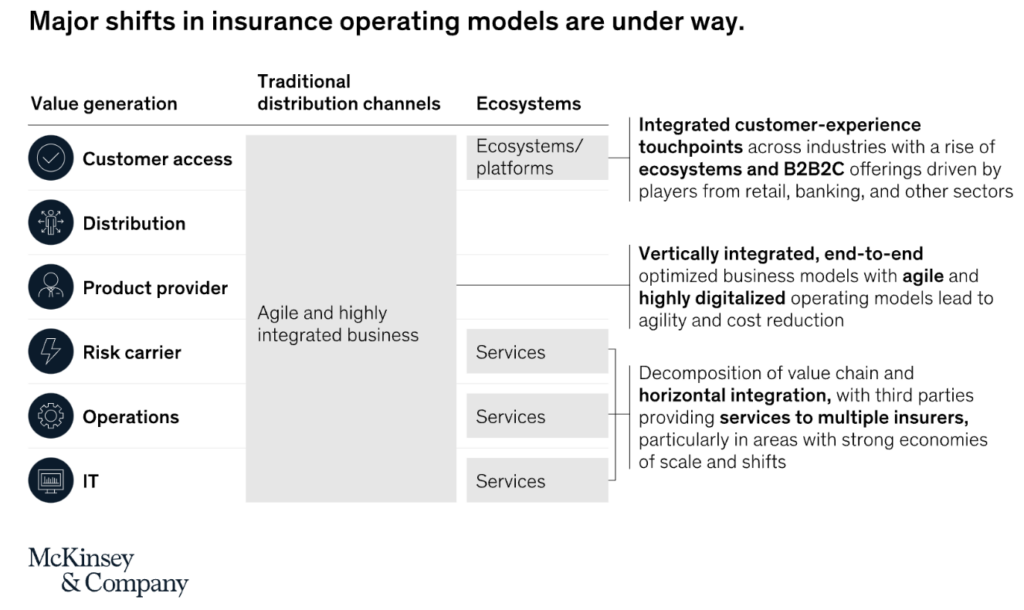

McKinsey & Company expects that “major shifts in insurance operating models are under way” in the coming years through tech-driven innovation.

Data streaming in the insurance industry

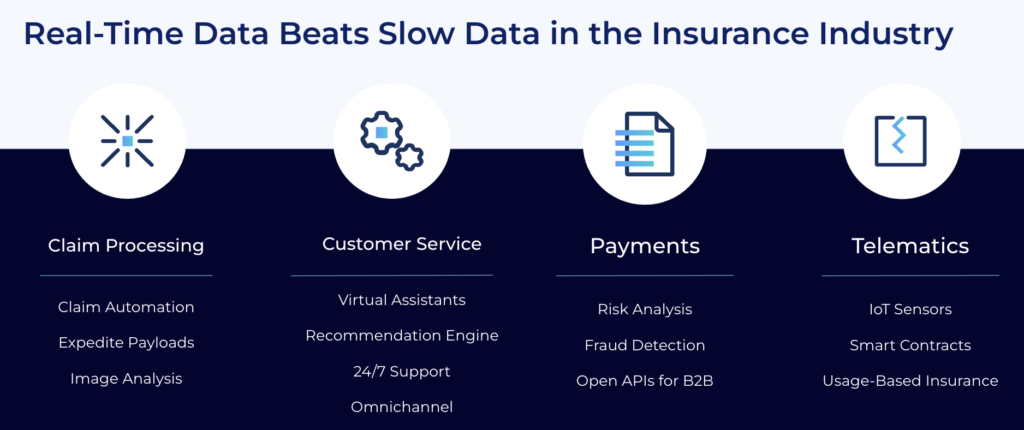

Adopting trends like claim automation, expediting payouts, virtual assistants, or usage-based insurance is only possible if enterprises in the insurance sector can provide and correlate information at the right time in the proper context. Real-time, which means using the information within milliseconds, seconds, or minutes, is almost always better than processing data later (whatever later means).

Data streaming combines the power of real-time messaging at any scale with storage for true decoupling, data integration, and data correlation capabilities. Apache Kafka is the de facto standard for data streaming.
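To make the combination of messaging and storage more concrete, here is a minimal Python sketch of an append-only log in which each consumer group tracks its own read position (offset). The `EventLog` class, its methods, and the group names are hypothetical and only mimic the idea of a Kafka topic partition; a real deployment would use a Kafka client library against Kafka brokers rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy, in-memory stand-in for one Kafka topic partition (hypothetical, not the Kafka API)."""

    events: list = field(default_factory=list)
    # Next offset to read, tracked separately for each consumer group.
    offsets: dict = field(default_factory=dict)

    def append(self, event: dict) -> int:
        """Producer side: retain the event in the log and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group: str, max_records: int = 100) -> list:
        """Consumer side: read from the group's offset onward, then commit the new offset.

        Reading does not delete anything, so other groups still see every event.
        """
        start = self.offsets.get(group, 0)
        batch = self.events[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


log = EventLog()
log.append({"type": "claim_submitted", "claim_id": "C-1"})
log.append({"type": "claim_submitted", "claim_id": "C-2"})

claims_seen = log.poll("claims-service")   # consumes both events right away
fraud_seen = log.poll("fraud-analytics")   # a consumer that starts later still gets both

log.append({"type": "claim_submitted", "claim_id": "C-3"})
claims_next = log.poll("claims-service")   # only the new event C-3
```

Because consuming an event only advances that group's offset and never removes the event, the fraud-analytics service can start later, or fall behind, without affecting the claims service. This is the decoupling that a queue which deletes messages on consumption cannot offer.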

“Apache Kafka in the Insurance Industry” is a great starting point to learn more about data streaming in the industry, including a few Kafka-powered case studies not covered in this blog post, such as:

- Centene: Integration and data processing at scale in real-time

- Swiss Mobiliar: Decoupling and orchestration

- Humana: Real-time data integration and analytics

- freeyou: Stateful streaming analytics

- Tesla: Carmaker and utility company, now also a car insurer

Architecture trends for data streaming

Insurers adopt various enterprise architecture trends for cost, flexibility, security, and latency reasons. The three major topics I see at customers these days are:

- Focus on business logic and faster time-to-market by leveraging fully managed SaaS

- Event-driven architectures (in combination with request-response communication) to enable domain-driven design and independent innovation

- Omnichannel customer services across the customer lifecycle

Let’s look deeper into some enterprise architectures that leverage data streaming for insurance use cases.

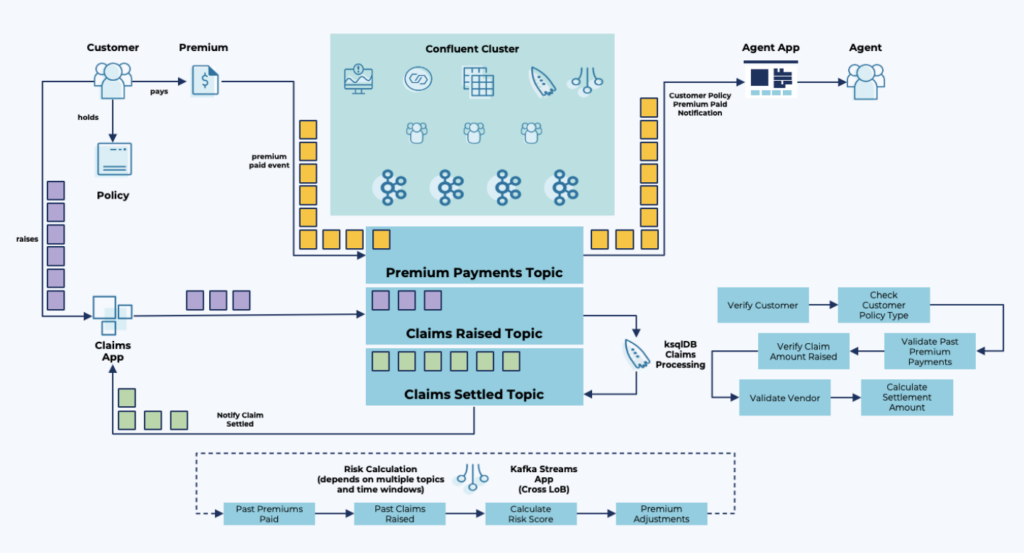

Event-driven patterns for insurance

Domain-driven design with decoupled applications and microservices is vital to enabling change and innovation in the enterprise architecture of insurers. Apache Kafka’s unique combination of real-time messaging at scale and storing events for as long as needed enables true decoupling and creates independence for business units.

Business processes are not orchestrated by a monolithic application or middleware. Instead, teams build decentralized data products with their own technologies, APIs, SaaS, and communication paradigms like streaming, batch, or request-response.

As the heart of the enterprise architecture, data streaming is real-time, scalable, and reliable. The streaming platform collects, stores, processes, and shares data. The underlying event store also makes it possible to integrate with non-real-time systems, such as a legacy file-based application on the mainframe or a cloud-native data lake, data warehouse, or lakehouse.
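The following Python sketch illustrates how one stored event stream can serve a real-time consumer and a batch consumer side by side. Everything in it is a hypothetical simplification: an in-memory list stands in for the event store, the 10,000 threshold for a "large claim" is invented, and the CSV string stands in for the file that a legacy mainframe job or a data lake load would pick up.

```python
import csv
import io

# Hypothetical in-memory stand-in for the event store (not a Kafka API).
events: list[dict] = []
committed: dict[str, int] = {}  # consumer group -> next offset to read

def read_new(group: str) -> list[dict]:
    """Return every stored event this group has not processed yet, then commit."""
    start = committed.get(group, 0)
    batch = events[start:]
    committed[group] = len(events)
    return batch

large_claim_alerts: list[str] = []
LARGE_CLAIM_THRESHOLD = 10_000  # hypothetical business rule

def handle_realtime(event: dict) -> None:
    """Real-time consumer: react to each claim right after it is stored."""
    if event["amount"] > LARGE_CLAIM_THRESHOLD:
        large_claim_alerts.append(event["claim_id"])

def nightly_file_export() -> str:
    """Batch consumer: write all claims since the last run as one CSV file body."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["claim_id", "amount"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(read_new("nightly-export"))
    return buffer.getvalue()

# Claims arrive during the day; the real-time consumer processes each one immediately.
for claim in [{"claim_id": "C-1", "amount": 500}, {"claim_id": "C-2", "amount": 25_000}]:
    events.append(claim)
    for new_event in read_new("realtime-alerts"):
        handle_realtime(new_event)

# Later, the batch consumer picks up the same claims from the store in one go.
csv_body = nightly_file_export()
```

Neither consumer knows about the other: the batch export can run hours later, or be re-pointed at a new target system, without touching the real-time path.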

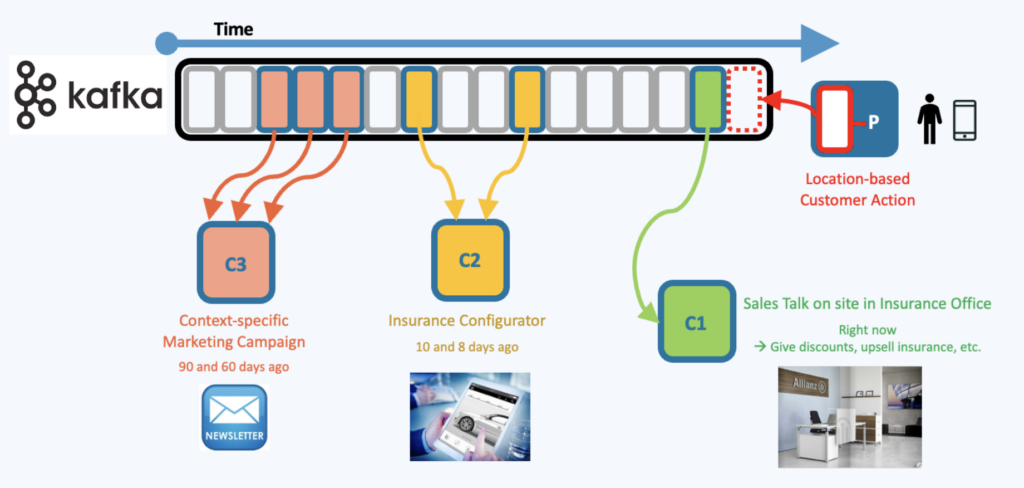

Omnichannel customer journey

Customers expect consistent information across all channels, including mobile apps, web browsers, brick-and-mortar agencies, and third-party partner services. The Kafka event log of the streaming platform ensures that historical and current events can be correlated to make the right automated decision, alert, recommendation, etc.

The following diagram shows how historical marketing data from a SaaS platform is combined with other historical data from the product configurator to feed the real-time decisions of the salesperson in the brick-and-mortar agency when the customer is entering the store:
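The scenario above is essentially a stream-table join (Kafka Streams offers this as a KStream-KTable join). The Python sketch below hand-rolls the idea without any Kafka library: historical events are folded into a per-customer table, and each incoming store-visit event is enriched from that table. All event fields, IDs, and the suggestion rule are hypothetical.

```python
from collections import defaultdict

# Hypothetical historical events, e.g. replayed from the streaming platform's log.
marketing_history = [
    {"customer_id": "cust-42", "campaign": "home-insurance-spring"},
]
configurator_history = [
    {"customer_id": "cust-42", "product": "home", "coverage": "premium"},
    {"customer_id": "cust-7", "product": "auto", "coverage": "basic"},
]

def build_profiles(*histories: list[dict]) -> dict[str, dict]:
    """Fold historical events into one profile per customer (a materialized table)."""
    profiles: dict[str, dict] = defaultdict(dict)
    for history in histories:
        for event in history:
            attributes = {k: v for k, v in event.items() if k != "customer_id"}
            profiles[event["customer_id"]].update(attributes)
    return profiles

def on_store_visit(visit: dict, profiles: dict[str, dict]) -> dict:
    """Enrich a real-time 'customer entered the agency' event with historical context."""
    profile = profiles.get(visit["customer_id"], {})
    if "product" in profile:
        suggestion = f"Finalize the saved {profile['product']} quote"
    else:
        suggestion = "Start a new needs assessment"
    return {**visit, "profile": dict(profile), "suggestion": suggestion}

profiles = build_profiles(marketing_history, configurator_history)
hint = on_store_visit({"customer_id": "cust-42", "agency": "agency-001"}, profiles)
```

The table is built once from history and then kept up to date as new events arrive, so the lookup at visit time is a cheap local read rather than a query against the marketing SaaS and the product configurator.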

Data consistency is one of the most challenging problems in the IT and software world. Apache Kafka helps ensure data consistency across all applications and databases, whether these systems operate in real time, near real time, or batch, because each system consumes the same durable, ordered (per partition) stream of events at its own pace.
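One way to see why a shared event log supports consistency: if every system applies the same ordered events with the same deterministic logic, it reaches the same state no matter when, or in what batch sizes, it reads them. The Python sketch below is a hypothetical illustration with invented policy events; it relies on per-key ordering, which Kafka provides within a partition, and it does not model failures or exactly-once delivery.

```python
from functools import reduce

# Hypothetical policy events for one partition, in log order.
policy_events = [
    {"policy_id": "P-1", "status": "quoted"},
    {"policy_id": "P-2", "status": "quoted"},
    {"policy_id": "P-1", "status": "active"},
    {"policy_id": "P-2", "status": "cancelled"},
]

def apply_event(state: dict, event: dict) -> dict:
    """Upsert keyed by policy ID: the latest event per key wins."""
    state[event["policy_id"]] = event["status"]
    return state

# Real-time system: applies each event the moment it arrives.
realtime_db: dict = {}
for event in policy_events:
    apply_event(realtime_db, event)

# Near-real-time system: applies the same events in micro-batches of two.
near_realtime_db: dict = {}
for i in range(0, len(policy_events), 2):
    for event in policy_events[i:i + 2]:
        apply_event(near_realtime_db, event)

# Batch system: replays the whole log in one go, for example overnight.
warehouse = reduce(apply_event, policy_events, {})
```

All three systems end up with the same view of every policy. The keyed upsert also tolerates replaying events from an earlier offset after a failure, as long as they are re-applied in order.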

New customer stories for data streaming in the insurance sector

So much innovation is happening in the insurance sector. Automation and digitalization change how insurers process claims, build customer relationships, and create new business models with enterprises of other verticals.

Most insurance providers use a cloud-first approach to improve time-to-market, increase flexibility, and focus on business logic instead of operating IT infrastructure. Elastic scalability becomes even more critical as real-time expectations and mobile app capabilities grow.

Here are a few customer stories from worldwide insurance organizations:

- Generali built a critical integration platform between legacy databases on-premises and cloud-native workloads running on Google Cloud.

- Allianz runs separate projects for brownfield integration and IT modernization, and for greenfield innovation with real-time analytics, implementing use cases like pricing for insurance products, analytics for customer 360 views, and fraud management.

- Ladder leverages machine learning in real-time for an online, direct-to-consumer, full-stack approach to life insurance.

- Roosevelt built an agnostic claim processing and B2B platform to automatically process the vast majority of claims for over 20 million members, saving hundreds of millions of dollars in treatment costs…

- Policygenius is an online insurance marketplace with 30+ million customers for life, disability, home, and auto insurance; the data streaming platform checks with real agents to deliver quotes from leading insurance companies in real-time.

Resources to learn more

This blog post is just the starting point. Learn more about data streaming in the insurance industry in the following on-demand webinar recording, the related slide deck, and further resources, including pretty cool lightboard videos about use cases.

On-demand video recording

The video recording explores the insurance industry’s trends and architectures for data streaming. The primary focus is the data streaming case studies.

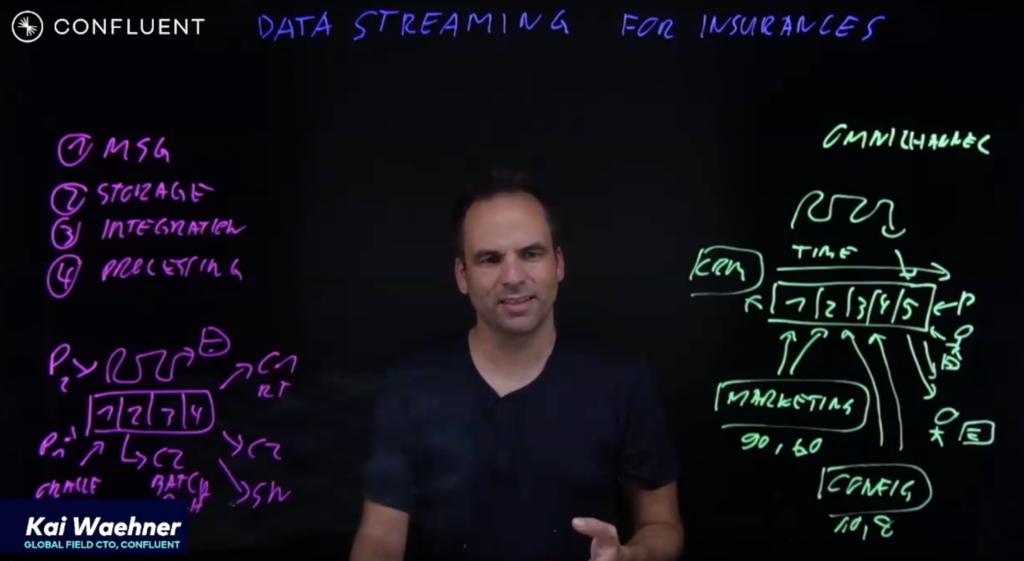

I am excited to have presented this webinar in my interactive lightboard studio.

This creates a much better experience, especially after the pandemic, when many people suffer from “Zoom fatigue”.

Check out our on-demand recording:

Slides

If you prefer learning from slides, check out the deck used for the above recording:

Case studies and lightboard videos for data streaming in the insurance industry

The state of data streaming for insurance is fascinating. New use cases and case studies come up every month. These include better data governance across the entire organization, real-time data collection and processing across legacy applications in the data center and modern cloud infrastructures, data sharing and B2B partnerships for new business models, and many more scenarios.

We recorded lightboard videos showing the value of data streaming simply and effectively. These five-minute videos explore the business value of data streaming, related architectures, and customer stories. Stay tuned; I will update the links in the next few weeks.

And this is just the beginning. Every month, we will talk about the status of data streaming in a different industry. Manufacturing was first, financial services second, then retail, telcos, gaming, and so on… Check out my other blog posts.

Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.