The lightweight and open IoT messaging protocol MQTT gets adopted more widely across industries. This blog post explores relevant market trends for MQTT: cloud deployments and fully managed services, data governance with unified namespace and Sparkplug B, MQTT vs. OPC-UA debates, and the integration with Apache Kafka for OT/IT data processing in real-time.

MQTT Summit in Munich

In December 2023, I attended the MQTT Summit Connack. HiveMQ sponsored the event. The agenda included various industry experts. The talks covered industrial IoT deployments, unified namespace, Sparkplug B, security and fleet management, and use cases for Kafka combined with MQTT like connected vehicles or smart city (my talk).

It was a pleasure to meet many industry peers of the MQTT community, independent consultants, and software vendors. I learned a lot about the adoption of MQTT in the real world, best practices, and a few trade-offs of Sparkplug B. The following sections summarize my trends for MQTT of this event combined with experiences I had this year in customer meetings around the world.

Special thanks to Kudzai Manditereza of HiveMQ to organize this great event with many international attendees across industries:

What is MQTT?

MQTT stands for Message Queuing Telemetry Transport. MQTT is a lightweight and open-source messaging protocol designed for small sensors and mobile devices with high-latency or unreliable networks. IBM originally developed MQTT in the late 1990s and later became an open standard.

MQTT follows a publish/subscribe model, where devices (or clients) communicate through a central message broker. The key components in MQTT are:

- Client: The device or application that connects to the MQTT broker to send or receive messages.

- Broker: The central hub that manages the communication between clients. It receives messages from publishing clients and routes them to subscribing clients based on topics.

- Topic: A hierarchical string that acts as a label for a message. Clients subscribe to topics to receive messages and publish messages to specific topics.

When to use MQTT?

The publish/subscribe model allows for efficient communication between devices. When a client publishes a message to a specific topic, all other clients subscribed to that topic receive the message. This decouples the sender and receiver, enabling a scalable and flexible communication system.

The MQTT standard is known for its simplicity, low bandwidth usage, and support for unreliable networks. These characteristics make it well-suited for Internet of Things (IoT) applications, where devices often have limited resources and may operate under challenging network conditions. Good MQTT implementations provide a scalable and reliable platform for IoT projects.

MQTT has gained widespread adoption in various industries for IoT deployments, home automation, and other scenarios requiring lightweight and efficient communication.

I discuss the following four market trends for MQTT in the following sections. These have huge impact on the adoption and making a decision to choose MQTT:

- MQTT in the Public Cloud

- Data Governance for MQTT

- MQTT vs. OPC-UA Debates

- MQTT and Apache Kafka for OT/IT Data Processing

Trend 1: MQTT in the Public Cloud

Most companies have a cloud first strategy. Go serverless if you can! Focus on business problems, faster time-to-market, and an elastic infrastructure are the consequence.

Mature MQTT cloud services exist. At Confluent, we work a lot with HiveMQ. The combination even provides a fully managed integration between both cloud offerings.

Having said that, not everything can or should go to the (public) cloud. Security, latency and cost often make a deployment in the data center or at the edge (e.g., in a smart factory) the preferred or mandatory option. Hybrid architectures allow the combination of both options for building the most cost-efficient but also reliable and secure IoT infrastructure. I talked about zero-trust and air-gapped environments leveraging unidirectional hardware for the most critical use cases in another blog..

Automation and Security are the Typical Blockers for Public Cloud

Key for success, especially in hybrid architectures, is automation and fleet management with CI/CD and GitOps for multi-cluster management. Many projects leverage Kubernetes as a cloud-native infrastructure for the edge and private cloud. However, in the public cloud, the first option should always be a fully managed service (if security and other requirements allow it).

Be careful when adopting fully-managed MQTT cloud services: Support for MQTT is not always equal across the cloud vendors. Many vendors do not implement the entire protocol, miss features, and require usage limitations. HiveMQ wrote a great article showing this. The article is a bit outdated (and opinionated, of course, as a competing MQTT vendor). But it shows very well how some vendors provide offerings that are far away from a good MQTT cloud solution.

The hardest problem for public cloud adoption of MQTT is security! Double check the requirements early. Latency, availability or specific features are usually not the problem. The deployment and integration need to be compliant and follow the cloud strategy. As Industrial IoT projects always have to include some kind of edge story, it is a tougher discussion than sales or marketing projects.

Trend 2: Data Governance for MQTT

Data governance is crucial across the enterprise. From an IoT and MQTT perspective, the two main topics are unified namespace as the concept and Sparkplug B as the technology.

Unified Namespace for Industrial IoT

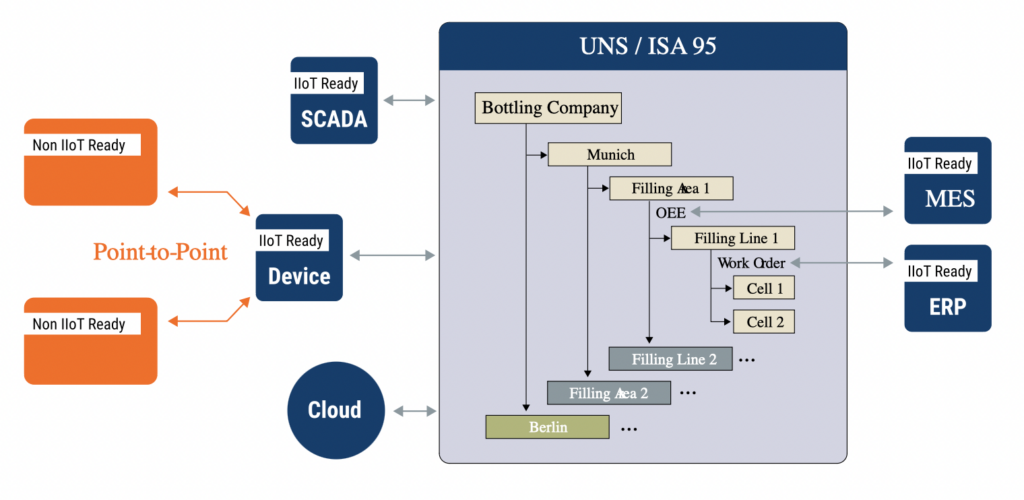

In the context of Industrial Internet of Things (IIoT), a unified namespace (UNS) typically refers to a standardized and cohesive way of naming and organizing devices, data, and resources within an industrial network or ecosystem. The goal is to provide a consistent naming structure that facilitates interoperability, data sharing, and management of IIoT devices and systems.

The term Unified Namespace (in Industrial IoT) was coined and popularized by Walker Reynolds, an expert and content creator for Industrial IoT.

Concepts of Unified Namespace

Here are some key aspects of a unified namespace in Industrial IoT:

- Device Naming: Devices in an IIoT environment may come from various manufacturers and have different functionalities. A unified namespace ensures that devices are named consistently, making it easier for administrators, applications, and other devices to identify and interact with them.

- Data Naming and Tagging: IIoT involves the generation and exchange of vast amounts of data. A unified namespace includes standardized naming conventions and tagging mechanisms for data points, variables, or attributes associated with devices. This consistency is crucial for applications that need to access and interpret data across different devices.

- Interoperability: A unified namespace promotes interoperability by providing a common framework for devices and systems to communicate. When devices and applications follow the same naming conventions, it becomes easier to integrate new devices into existing systems or replace components without causing disruptions.

- Security and Access Control: A well-defined namespace contributes to security by enabling effective access control mechanisms. Security policies can be implemented based on the standardized names and hierarchies, ensuring that only authorized entities can access specific devices or data.

- Management and Scalability: In large-scale industrial environments, having a unified namespace simplifies device and resource management. It allows for scalable solutions where new devices can be added or replaced without requiring extensive reconfiguration.

- Semantic Interoperability: Beyond just naming, a unified namespace may include semantic definitions and standards. This helps in achieving semantic interoperability, ensuring that devices and systems understand the meaning and context of the data they exchange.

Overall, a unified namespace in Industrial IoT is about establishing a common and standardized structure for naming devices, data, and resources, providing a foundation for efficient, secure, and scalable IIoT deployments. Standards organizations and industry consortia often play a role in developing and promoting these standards to ensure widespread adoption and compatibility across diverse industrial ecosystems.

Sparkplug B: Interoperability and Standardized Communication for MQTT Topics and Payloads

Unified Namespace is the theoretical concept for interoperability. The standardized implementation for payload structure enforcement is Sparkplug B. This is a specification created at the Eclipse foundation and turned into an ISO standard later.

Sparkplug B provides a set of conventions for organizing data and defining a common language for devices to exchange information. Here is an example of HiveMQ depicting how a unified namespace makes communication between devices, systems, and sites easier:

Key features of Sparkplug B include:

- Payload Structure: Sparkplug B defines a specific format for the payload of MQTT messages. This format includes fields for information such as timestamp, data type, and value. This standardized payload structure ensures that devices can consistently understand and interpret the data being exchanged.

- Topic Namespace: The specification defines a standardized topic namespace for MQTT messages. This helps in organizing and categorizing messages, making it easier for devices to discover and subscribe to relevant information.

- Birth and Death Certificates: Sparkplug B introduces the concept of “Birth” and “Death” certificates for devices. When a device comes online, it sends a Birth certificate with information about itself. Conversely, when a device goes offline, it sends a Death certificate. This mechanism aids in monitoring the status of devices within the IIoT network.

- State Management: The specification includes features for managing the state of devices. Devices can publish their current state, and other devices can subscribe to receive updates. This helps in maintaining a synchronized view of device states across the network.

Sparkplug B is intended to enhance the interoperability, scalability, and efficiency of IIoT deployments by providing a standardized framework for MQTT communication in industrial environments. Its adoption can simplify the integration of diverse devices and systems within an industrial ecosystem, promoting seamless communication and data exchange.

Limitations of Sparkplug B

Sparkplug B has a few limitations, such as:

- Only supports Quality of Service (QoS) 0 providing at most once message delivery guarantees.

-

Limits in the structure of topic namespaces.

-

Very device centric (but MQTT is for many “things”)

Understand the pros and cons of Sparkplug B. It is perfect for some use cases. But the above limitations are blockers for some others. Especially, only supporting QoS 0 is a huge limitation for mission-critical use cases.

Trend 3: MQTT vs. OPC-UA Debates

MQTT has many benefits compared to other industrial protocols. However, OPC-UA is another standard in the IoT space that gets at least as much traction in the market as MQTT. The debate about choosing the right IoT standard is controversial, often led by emotions and opinions, and still absolutely valid to discuss.

OPC-UA (Open Platform Communications Unified Architecture) is a machine-to-machine communication protocol for industrial automation. It enables seamless and secure communication and data exchange between devices and systems in various industrial settings.

OPC UA has become a widely adopted standard in the industrial automation and control domain, providing a foundation for secure and interoperable communication between devices, machines, and systems. Its open nature and support from industry organizations contribute to its widespread use in applications ranging from manufacturing and process control to energy management and more.

If you look at the promises of MQTT and OPC-UA, a lot of overlapping exists:

- Scalable

- Reliable

- Real-time

- Open

- Standardized

All of them are true for both standards. Still, trade-offs exist. I won’t start a flame war here. Just search for “MQTT vs. OPC-UA”. You will find many blog posts, articles and videos. Most are very opinionated (and often driven by a vendor). Reality is that the industry adopted both MQTT and OPC-UA widely.

And while the above characteristics might all be true for both standards in general, the details make the difference for specific implementations. For instance, if you try to connect plenty of Siemens S3 PLCs via OPC-UA, then you quickly realize that the number of parallel connections is not as scalable as the OPC-UA standard specification tells you.

When to Choose MQTT vs. OPC-UA?

The clear recommendation is starting with the business problem, not the technology. Evaluate both standards and their implementations, supported interfaces, vendors cloud services, etc. Then choose the right technology.

Here is what I use as a simplified rule of thumb if you have to start a technical discussion:

- MQTT: Use cases for connected IoT devices, vehicles, and other interfaces with support for lightweight infrastructure, large number of connections, and/or bad networks.

- OPC-UA: Use cases for industrial automation to connect heavy equipment, PLCs, SCADA systems, data historians, etc.

This is just a rule of thumb. And the situation changes. Modern PLCs and other equipment add support for multiple protocols to be more flexible. But, nowadays, you rarely have an option anyway because specific equipment, devices, or vehicles only support one or the other. And you can still be happy: Otherwise, you need to use another IIoT platform to connect to proprietary legacy protocols like S3, Modbus, et al.

MQTT and OPC-UA Gotchas

A few additional gotchas I realized from various customer conversations around the world in the past quarters:

- In theory, MQTT and OPC-UA work well together, i.e., MQTT is the underlying transportation protocol for OPC-UA. I did not see this yet in the real world (no statistical evidence, just my personal experience). But what I see is the combination of OPC-UA for the last mile integration to the PLC and then forwarding the data to other consumers via MQTT. All in a single gateway, usually a proprietary IoT platform.

- OPC-UA defines many sub-standards for different industries or use cases. In theory, this is great. In practice, I see this more like the WS-* hell in the SOAP/WSDL web service world where most projects moved to a much simpler HTTP/REST architectures. Similarly, most integrations I see to OPC-UA use simple, custom-coded clients in Java or other programming languages – because the tools don’t support the complex standards.

- IoT vendors pitch any possible integration scenario in marketing. I am amazed that MQTT and OPC-UA platforms directly integrate with MES and ERP system like SAP, and any data warehouse and data lake, like Google Big Query, Snowflake, or Databricks. But that’s only the theory. Should you really do this? And did you ever try to connect SAP ECC to MQTT or OPC-UA? Good luck from a technical, and even harder, from an organizational perspective. And do you want tight coupling and point-to-point communication in between the OT world and the ERP? In most cases, it is a good thing to have a clear separation of concerns between different business units, domains, and use cases. Choose the right tool and enterprise architecture; not just for the POC and first pipeline, but for the entire long-term strategy and vision.

The last point brings me to another growing trend: The combination of MQTT for IoT / OT workloads and data streaming with Apache Kafka for the integration with the IT world.

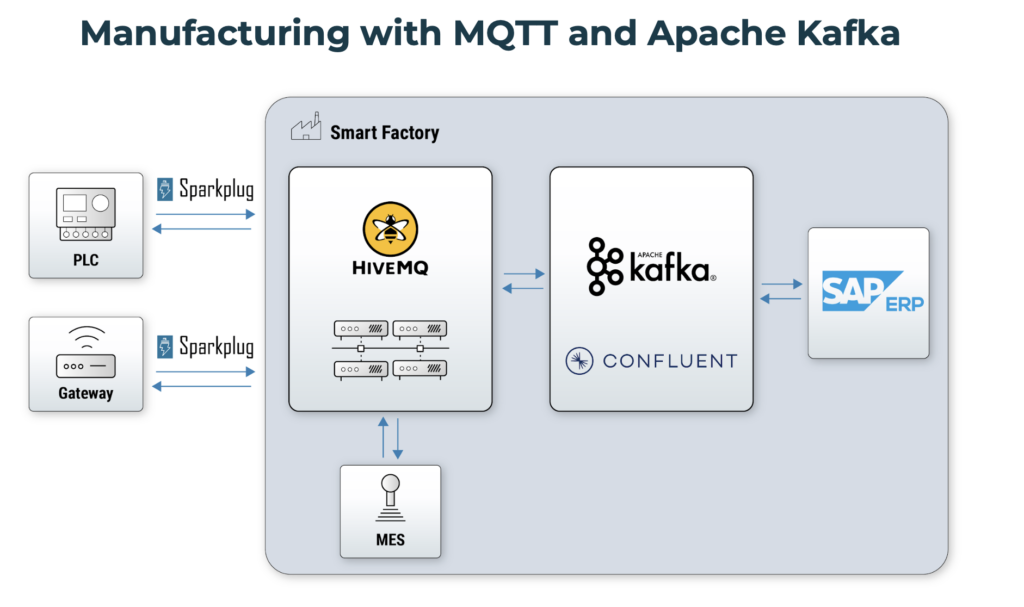

Trend 4: MQTT and Apache Kafka for OT/IT Data Processing

Contrary to MQTT, Apache Kafka is NOT an IoT platform. Instead, Kafka is an event streaming platform and used the underpinning of an event-driven architecture for various use cases across industries. It provides a scalable, reliable, and elastic real-time platform for messaging, storage, data integration, and stream processing. Apache Kafka and MQTT are a perfect combination for many IoT use cases.

Let’s explore the pros and cons of both technologies from the IoT perspective.

Trade-offs of MQTT

MQTT’s pros:

- Lightweight

- Built for thousands of connections

- All programming languages supported

- Built for poor connectivity / high latency scenarios

- High scalability and availability (depending on broker implementation)•ISO Standard

- Most popular IoT protocol (competing with OPC UA)

MQTT’s cons:

- Adoption mainly in IoT use cases

- Only pub/sub, not stream processing

- No reprocessing of events

Trade-offs of Apache Kafka

Kafka’s pros:

- Stream processing, not just pub/sub

- High throughput

- Large scale

- High availability

- Long-term storage and buffering

- Reprocessing of events

- Good integration to rest of the enterprise

Kafka’s cons:

- Not built for tens of thousands of connections

- Requires stable network and good infrastructure

- No IoT-specific features like keep alive, last will, or testament

Use Cases, Architectures and Case Studies for MQTT and Kafka

I wrote a blog series about MQTT in conjunction with Apache Kafka with many more technical details and real-world case studies across industries.

The first blog post explores the relation between MQTT and Apache Kafka. Afterward, the other four blog posts discuss various use cases, architectures, and reference deployments.

- Part 1 – Overview: Relation between Kafka and MQTT, pros and cons, architectures

- Part 2 – Connected Vehicles: MQTT and Kafka in a private cloud on Kubernetes; use case: remote control and command of a car

- Part 3 – Manufacturing: MQTT and Kafka at the edge in a smart factory; use case: Bidirectional OT-IT integration with Sparkplug B between PLCs, IoT Gateways, Data Historian, MES, ERP, Data Lake, etc.

- Part 4 – Mobility Services: MQTT and Kafka leveraging serverless cloud infrastructure; use case: Traffic jam prediction service using machine learning

- Part 5 – Smart City: MQTT at the edge connected to fully-managed Kafka in the public cloud; use case: Intelligent traffic routing by combining and correlating different 1st and 3rd party services

The following presentation is from my talk at the MQTT Summit. It explores various use cases and reference architectures for MQTT and Apache Kafka:

Fullscreen ModeIf you have a bad network, tens of thousands of clients, or the need for a lightweight push-based messaging solution, then MQTT is the right choice. Elsewhere, Kafka, a powerful event streaming platform, is probably the right choice for real-time messaging, data integration, and data processing. In many IoT use cases, the architecture combines both technologies. And even in the industrial space, various projects use Kafka for use cases like building a cloud-native data historian or real-time condition monitoring and predictive maintenance.

Data Governance for MQTT with Sparkplug and Kafka (and Beyond)

Unified Namespace and the concrete implementation with Sparkplug B is excellent for data governance in IoT workloads with MQTT. In a similar way, the Schema Registry defines the data contracts for Apache Kafka data pipelines.

Schema Registry should be the foundation of any Kafka project! Data contracts (aka Schemas, similar to Swagger in REST/HTTP APIs) enforce good data quality and interoperability between independent microservices in the Kafka ecosystem. Each business unit and its data products can choose any technology or API. But data sharing with others works only with good (enforced) data quality.

You can see the issue: Each technology uses its own data governance technology. If you add your favorite data lake, you will add another concept, like Apache Iceberg, to define the data tables for analytics storage systems. And that’s okay! Each data governance suite is optimized for its workloads and requirements. A company-wide master data management failed in the last two decades because each software category has different requirements.

Hence, one clear trend I see is an enterprise-wide data governance strategy across the different systems (with technologies like Collibra or Azure Purview). It has open interfaces and integrates with specific data contracts like Sparkplug B for MQTT, Schema Registry for Kafka, Swagger for HTTP/REST applications, or Iceberg for data lakes. Don’t try to solve the entire enterprise-wide data governance strategy with a single technology. It will fail! We have seen this before…

Legacy PLC (S7, Modbus, BACnet, etc.) with MQTT or Kafka?

MQTT and Kafka enable reliable and scalable end-to-end data pipelines between IoT and IT systems. At least, if you can use modern APIs and standards. Most IoT projects today are still brownfield. A lot of legacy PLCs, SCADA systems, and data historians only support proprietary protocols like Siemens S7, Modbus, BACnet, and so on.

MQTT or Kafka don’t support these legacy protocols out-of-the-box. Another middleware is required. Usually, enterprises choose a dedicated IoT platform for this. That means more cost and complexity, and slower projects.

In the Kafka world, Apache PLC4X is a great open source option if you want to build a modern, cloud-native data historian with Kafka. The framework provides integration with many legacy protocols. And it offers a Kafka Connect connector. The main issue is no official vendor support behind. Companies cannot buy support with a 24/7 business model for mission-critical applications. And that’s typically a blocker for any industrial deployment.

As MQTT is only a pub/sub message broker, it cannot help with legacy protocol integration. HiveMQ tries to solve this challenge with a new framework called HiveMQ Edge: A software-based industrial edge protocol converter. It is a young project and just kicking off. The core is open source. The first supported legacy protocol is Modbus. I think this is an excellent product strategy. I hope the project gets traction and evolves to support many other legacy IIoT technologies to modernize the brownfield shop floor. The project actually also supports OPC-UA. We will see how much demand that feature creates, too.

MQTT and Sparkplug Adoption Grows Year-By-Year for IoT Use Cases

In the IoT world, MQTT and OPC UA have established themselves as open and platform-independent standards for data exchange in Industrial IoT and Industry 4.0 use cases. Data Streaming with Apache Kafka is the data hub for integrating and processing massive volumes of data at any scale in real-time. The “Trinity of Data Streaming in IoT explores the combination of MQTT, OPC-UA and Apache Kafka” in more detail.

MQTT adoption grows year by year with the need for more scalable, reliable and open IoT communication between devices, equipment, vehicles, and the IT backend. The sweet spots of MQTT are unreliable networks, lightweight (but reliable and scalable) communication and infrastructure, and connectivity to thousands of things.

Maturing trends like the Unified Namespace with Sparkplug B, fully managed cloud services, and combined usage with Apache Kafka make MQTT one of the most relevant IoT standards across verticals like manufacturing, automotive, aviation, logistics, and smart city.

But don’t get fooled by architecture pictures and theory. For example, most diagrams for MQTT and Sparkplug show integrations with the ERP (e.g., SAP) and Data Lake (e.g., Snowflake). Should you really integrate directly from the OT world into the analytics platform? Most times, the answer is no because of cost, decoupling of business units, legal issues, and other reasons. This is where the combination of MQTT and Kafka (or another integration platform) shines.

How do you use MQTT and Sparkplug today? What are the use cases? Do you combine it with other technologies, like Apache Kafka, for end-to-end integration across the OT/IT pipeline? Let’s connect on LinkedIn and discuss it! Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter.