Embarking on a journey towards Environmental, Social, and Governance (ESG) excellence demands more than just good intentions; it requires innovative tools that can turn sustainability aspirations into tangible actions. In the realm of data streaming, Apache Kafka and Flink have emerged as game-changers, offering a scalable and open platform to propel ESG initiatives forward. This blog post explores the synergy between ESG principles and Kafka and Flink’s real-time data processing capabilities, unveiling a powerful alliance that transforms intentions into impactful change. Beyond the buzzwords, real-world deployment architectures across industries show the value of data streaming for better ESG ratings.

A Green Future with Improved ESG

ESG stands for Environmental, Social, and Governance. It’s a set of criteria that investors use to evaluate a company’s impact on the world beyond just financial performance. Environmental factors assess how a company manages its impact on nature, social criteria examine how it treats people (both internally and externally), and governance evaluates a company’s leadership, ethics, and internal controls. Essentially, it’s a way for investors to consider the broader impact and sustainability of their investments.

ESG Buzzword Bingo with Scores and Ratings

ESG seems to be an excellent marketing hit. In the ESG world, phrases like “climate risk”, “social responsibility”, and “impact investing” are all the rage. Everyone’s throwing around terms like “carbon footprint”, “diversity and inclusion”, and “ethical governance” like confetti at a party. It’s all about showing off your commitment to a better, more sustainable future.

To be more practical, ESG scores or ratings are quantitative assessments that measure a company’s performance in terms of environmental, social, and governance factors.

High scores mean you’re the A-lister of the sustainability world, while low scores might land you in the “needs improvement” category.

Various rating agencies and organizations assign these scores by evaluating how well a company aligns with sustainable and responsible business practices. Investors and stakeholders use the scores to make informed decisions, considering not only financial performance but also a company’s impact on the environment, its treatment of employees, and the effectiveness of its governance structure. It’s a way to encourage businesses to prioritize sustainability and social responsibility.

Whether you use these scores or your own (maybe more reasonable) KPIs, let’s find out how data streaming helps improve ESG performance in your company.

Data Streaming to Improve ESG and Sustainability

Data streaming with Kafka and Flink is a dynamic synergy. Apache Kafka efficiently handles the high-throughput, fault-tolerant ingestion of real-time data. Apache Flink processes and analyzes this streaming data with low latency. The combination enables complex event processing and timely insights for applications ranging from ESG monitoring to financial analytics. Together, Kafka and Flink form a powerful duo, orchestrating the seamless flow of information and transforming how organizations harness the value of real-time data in diverse use cases.
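To make this division of labor more tangible, here is a minimal sketch in plain Python of the kind of windowed aggregation a stream processor typically runs over an ingested event stream. It does not use any Kafka or Flink client library; the event layout, the meter names, and the 60-second window size are assumptions chosen purely for illustration.

```python
from collections import defaultdict


def tumbling_window_avg(events, window_seconds=60):
    """Group (timestamp, key, value) events into fixed, non-overlapping
    windows and return the average value per (window_start, key)."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, key, value in events:
        # Align each event to the start of the window it falls into.
        window_start = (ts // window_seconds) * window_seconds
        sums[(window_start, key)] += value
        counts[(window_start, key)] += 1
    return {k: sums[k] / counts[k] for k in sums}


# Hypothetical energy readings: (epoch seconds, meter id, kWh)
readings = [
    (0, "meter-1", 2.0),
    (30, "meter-1", 4.0),
    (61, "meter-1", 10.0),
    (45, "meter-2", 1.0),
]
averages = tumbling_window_avg(readings)
```

In a real deployment, the list of readings would be an unbounded Kafka topic and the aggregation would run continuously, but the windowing idea stays the same.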

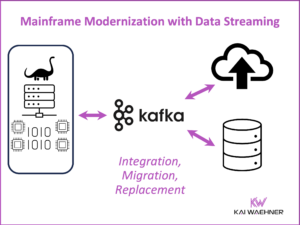

Apache Kafka: The De Facto Standard for Data Streaming

The Kafka API became the de facto standard for event-driven architectures and event streaming, similar to Amazon S3 for object storage. Two proof points:

- Use cases across all industries and infrastructure, including various kinds of transactional and analytic workloads at the edge and in hybrid and multi-cloud environments. I collected a few examples across verticals that use Apache Kafka to show the prevalence across markets.

- Adoption by various open-source frameworks and many software/cloud vendors. Check out my blog post if you are interested in a comparison of Kafka vendors such as Confluent, Cloudera, Red Hat or Amazon MSK and related technologies like Azure Event Hubs, AWS Kinesis, Redpanda, or Apache Pulsar.

Apache Flink is gaining similar adoption right now. Early adopters in Silicon Valley have used it in combination with Kafka for years. Software and cloud vendors are adopting it just as they adopted Kafka. Both together will have a grand future across all industries.

A New Software Category with Apache Kafka and Flink

In December 2023, the research company Forrester published “The Forrester Wave™: Streaming Data Platforms, Q4 2023”. Get free access to the report here. The leaders are Microsoft, Google and Confluent, followed by Oracle, Amazon, Cloudera, and a few others.

You might agree or disagree with the positioning of a specific vendor regarding the strength of its offering or strategy. But the emergence of this new wave is proof that data streaming is a new software category, not just another hype or a next-generation ETL / ESB / iPaaS tool.

ESG and Sustainability Use Cases for Kafka and Flink

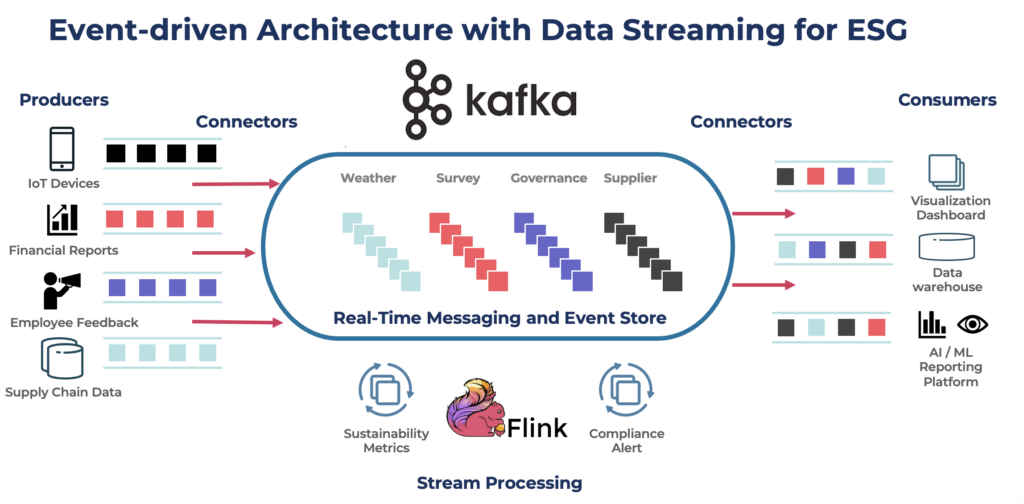

Using Kafka and Flink for data streaming in the context of ESG brings serious positive impact. Imagine a continuous flow of real-time data on environmental metrics, social impact indicators, and governance practices. Here’s how data streaming with Kafka and Flink helps:

- Real-time Monitoring: With Kafka and Flink, you can monitor and analyze ESG-related data in real time. This means instant insights into a company’s environmental practices, social initiatives, and governance structures. This immediate feedback loop allows for quicker response to any deviations from sustainability goals.

- Proactive Risk Management: Streaming data enables the identification of potential ESG risks as they emerge. Whether it’s an environmental issue, a social controversy, or a governance concern, early detection allows companies to address these issues promptly, reducing the overall impact on their ESG performance.

- Transparent Reporting: ESG reporting requires transparency. By streaming data, companies can provide stakeholders with real-time updates on their sustainability efforts across the entire data pipeline with data governance and lineage. This transparency builds trust among investors, customers, and the public.

- Automated Compliance Monitoring: ESG regulations are evolving, and compliance is crucial. With Kafka and Flink, you can automate the monitoring of compliance with ESG standards. This reduces the risk of overlooking any regulatory changes and ensures that a company stays on the right side of environmental, social, and governance requirements.

- Data Integration: Data streaming facilitates the integration of diverse ESG-related data sources. Whether it’s data from IoT devices, social media sentiment analysis, or governance-related documents, these tools enable seamless integration, providing a comprehensive view of a company’s ESG performance. Good data quality ensures correct reporting, no matter if real-time with stream processing or batch in a data lake or data warehouse.

Leveraging Kafka and Flink for data streaming in the ESG space enables companies to move from retrospective analysis to proactive management, fostering a culture of continuous improvement in environmental impact, social responsibility, and governance practices.
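The monitoring and risk management points above boil down to checking every incoming event against a rule the moment it arrives, instead of waiting for a periodic report. The following is a minimal sketch of that idea in plain Python, not a Kafka or Flink API; the metric names and limits are hypothetical values invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    metric: str
    value: float
    limit: float


def detect_breaches(stream, limits):
    """Yield an Alert as soon as a metric exceeds its configured limit."""
    for metric, value in stream:
        limit = limits.get(metric)
        if limit is not None and value > limit:
            yield Alert(metric, value, limit)


# Hypothetical limits and readings, for illustration only.
limits = {"co2_kg_per_hour": 50.0, "water_m3_per_hour": 12.0}
stream = [
    ("co2_kg_per_hour", 42.0),
    ("water_m3_per_hour", 13.5),
    ("co2_kg_per_hour", 55.0),
    ("noise_db", 70.0),  # no limit configured, so it is ignored
]
alerts = list(detect_breaches(stream, limits))
```

Because the check is a generator over the stream, each breach is reported as soon as the offending event is seen, which is the difference between proactive management and retrospective analysis.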

ESG Data Sources for the Data Streaming Platform

In the ESG context, data sources for Kafka and Flink are diverse, reflecting the multidimensional nature of environmental, social, and governance factors. Here are some key data sources:

- Environmental Data:

  - IoT Devices: Sensors measuring air and water quality, energy consumption, and emissions.

  - Satellite Imagery: Monitoring changes in land use, deforestation, and other environmental indicators.

  - Weather Stations: Real-time weather data influencing environmental conditions.

- Social Data:

  - Social Media: Analyzing sentiments and public perceptions related to a company’s social initiatives.

  - Employee Feedback: Gathering insights from internal surveys or employee feedback platforms.

  - Community Engagement Platforms: Monitoring community interactions and feedback.

- Governance Data:

  - Financial Reports: Analyzing financial transparency, disclosure practices, and governance structures.

  - Legal and Regulatory Documents: Tracking compliance with governance regulations and changes.

  - Board Meeting Minutes: Extracting insights into decision-making and governance discussions.

- Supply Chain Data:

  - Supplier Information: Assessing the ESG practices of suppliers and partners.

  - Logistics Data: Monitoring the environmental impact of transportation and logistics.

- ESG Ratings and Reports:

  - Third-Party ESG Ratings: Integrating data from ESG rating agencies to gauge a company’s overall performance.

  - Sustainability Reports: Extracting information from company-issued sustainability reports.

- Internal Operational Data:

  - Employee Diversity Metrics: Tracking diversity and inclusion metrics within the organization.

  - Internal Governance Documents: Analyzing internal policies and governance structures.

By leveraging Kafka to ingest and process data from these sources in real-time, organizations can create a comprehensive and up-to-date view of their ESG performance, enabling them to make informed decisions and drive positive change.
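Bringing such different sources together usually means mapping each one onto a shared event shape before any downstream processing. Here is a minimal sketch of that normalization step in plain Python. The `EsgEvent` fields and both input formats are assumptions made up for this example, not a schema used by any of the companies mentioned in this post.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class EsgEvent:
    category: str  # "environmental", "social", or "governance"
    source: str
    metric: str
    value: float


def from_iot(payload: str) -> EsgEvent:
    """Map a JSON sensor message (assumed format) to the common event."""
    data = json.loads(payload)
    return EsgEvent(
        "environmental", data["sensor_id"], data["type"], float(data["reading"])
    )


def from_survey(row: dict) -> EsgEvent:
    """Map an employee survey record (assumed format) to the common event."""
    return EsgEvent("social", "employee-survey", row["question"], float(row["score"]))


events = [
    from_iot('{"sensor_id": "air-7", "type": "pm25", "reading": "12.5"}'),
    from_survey({"question": "inclusion", "score": 4}),
]
```

Once every source produces the same event type, aggregation, alerting, and reporting logic can be written once instead of per source.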

ESG Data Sinks from Kafka and Flink

In the ESG context with Kafka and Flink, data sinks play a crucial role in storing and further using the processed information. Here are some common data sinks:

- Databases:

  - Time-Series Databases: Storing time-stamped ESG metrics for historical analysis.

  - Data Warehouses: Aggregating and storing ESG data for business intelligence and reporting.

- Analytics Platforms (aka Data Lake):

  - Big Data Platforms (e.g., Hadoop, Spark): Performing complex analytics on ESG data for insights and trend analysis.

  - Machine Learning Models: Using ESG data to train models for predictive analytics and pattern recognition.

- Visualization Tools:

  - Dashboarding Platforms (e.g., Tableau, Power BI): Creating visual representations of ESG metrics for stakeholders and decision-makers.

  - Custom Visualization Apps: Building specialized applications for interactive exploration of ESG data.

- Alerting Systems:

  - Monitoring and Alerting Tools: Generating alerts based on predefined thresholds for immediate action on critical ESG events.

  - Notification Systems: Sending notifications to relevant stakeholders based on specific ESG triggers.

- Compliance and Reporting Systems:

  - ESG Reporting Platforms: Integrating with systems that automate ESG reporting to regulatory bodies.

  - Internal Compliance Systems: Ensuring adherence to internal ESG policies and regulatory requirements.

- Integration with ESG Management Platforms:

  - ESG Management Software: Feeding data into platforms designed for comprehensive ESG performance management.

  - ESG Rating Agencies: Providing necessary data for third-party ESG assessments and ratings.

By directing Kafka-streamed ESG data to these sinks, organizations can effectively manage, analyze, and report on their environmental, social, and governance performance, fostering continuous improvement and transparency.

Real World Deployments with Kafka and Flink for a Better ESG Rating

The following case studies mainly focus on green energy, decarbonization, and other relevant techniques to achieve a sustainable world. Social and governance aspects are just as relevant and can benefit from data streaming in similar ways, because it provides accurate, curated, and consistent data in real time.

Fun fact: Almost all the case studies come out of Europe. Would be great to see similar success stories about improved ESG in other regions.

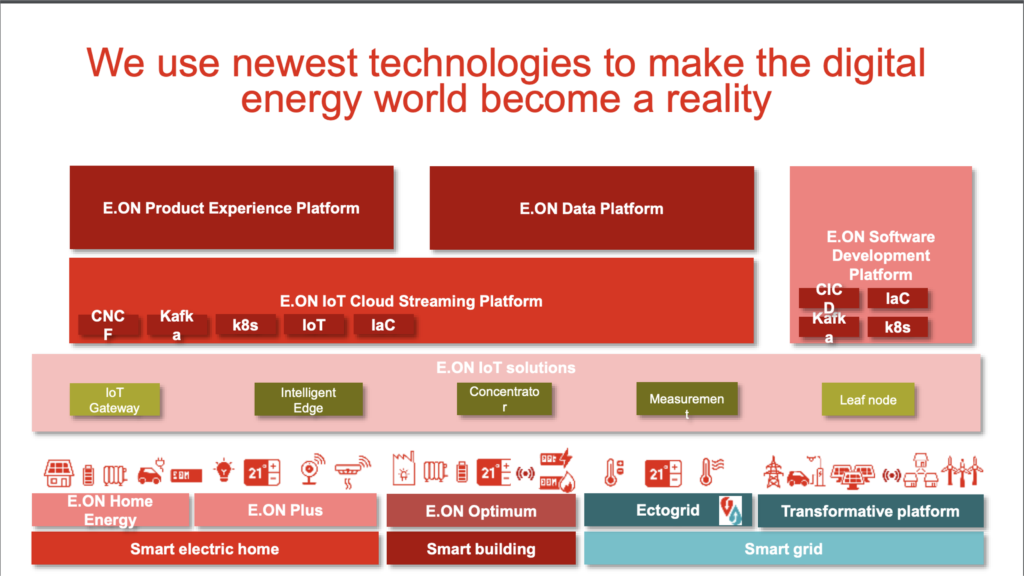

EON: Smart Grid for Energy Production and Distribution with Cloud-Native Apache Kafka

Back in 2018, EON explained at a Confluent event in Munich how the energy world is transforming:

From a linear pipe model…

- System-centric

- Fossil-fuel and nuclear

- Large-scale and central

… to a connected energy world…

- Green and clean technologies

- Smaller-scale, distributed

- Micro Grids, user centric

… with the EON Streaming Platform Smart Grid Infrastructure

The EON Streaming Platform is built on the following paradigms to provide a cloud-native smart grid infrastructure:

- IoT scale capabilities of public cloud providers

- EON microservices that are independent of cloud providers

- Real-time data integration and processing powered by Apache Kafka

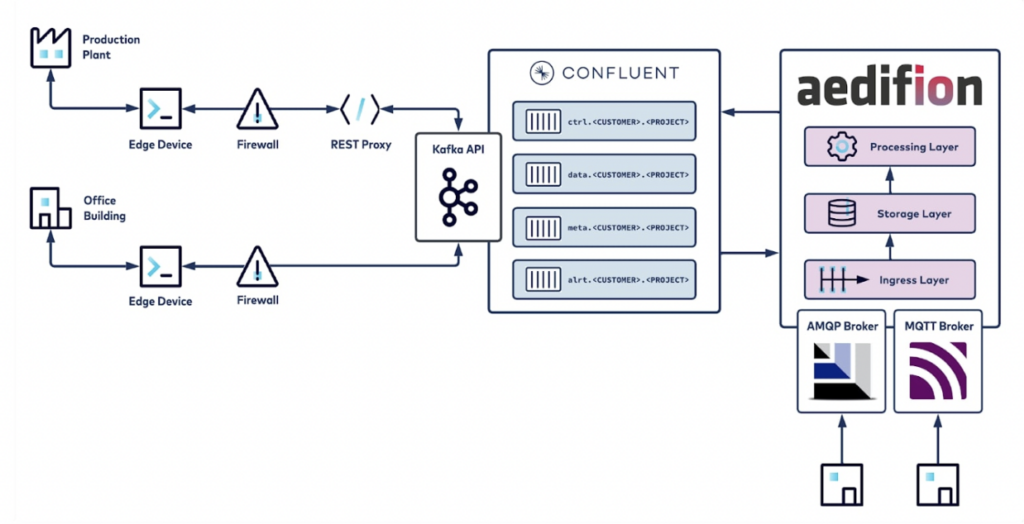

aedifion: Efficient Management of Real Estate with MQTT and Kafka

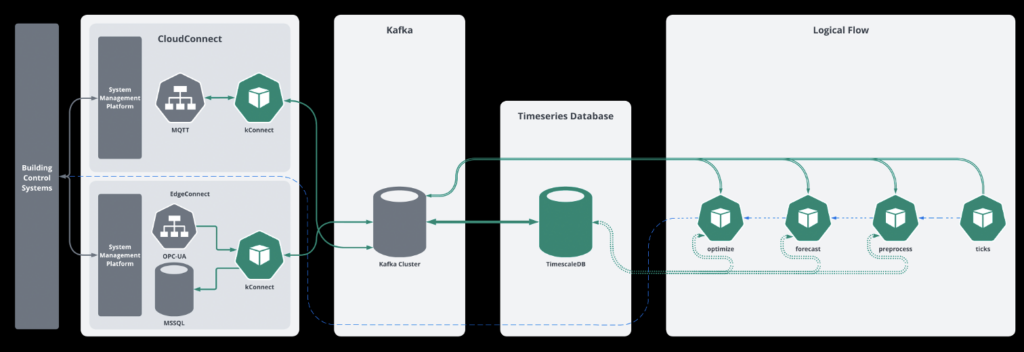

The aedifion cloud platform is a solution for optimizing building portfolios. A simple digital software upgrade enables you to get an optimally managed portfolio and sustainably reduce your operating costs and CO2 emissions. Connectivity includes Industrial IoT, smart sensors, MQTT, AMQP, HTTP, and others.

A few notes about the architecture of aedifion’s cloud platform:

- Digital, data-driven monitoring, analytics and controls products to operate buildings better and meet Environmental, Social, and Corporate Governance (ESG) goals

- Secure connectivity and reliable data collection with fully managed Confluent Cloud

- Deprecated MQTT-based pipeline – Kafka serves as a reliable buffer between producers (Edge Devices) and consumers (backend microservices) smoothing over bursts and temporary application downtimes

The latter point is very interesting. While Kafka and MQTT are a match made in heaven for many use cases, MQTT is not needed if you have a stable internet connection in a building and only hundreds of connections to devices. For bad networks or thousands of connections, MQTT is still the best choice for the last mile integration.
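The buffering role is easiest to see in a toy model. The sketch below is plain Python and only imitates the idea of a single Kafka partition: an append-only log plus a read position per consumer. The class and method names are invented for this example. Real Kafka tracks committed offsets per consumer group and partition, and adds replication and retention, all of which are left out here.

```python
class TopicLog:
    """Toy stand-in for a single partition: an append-only list
    plus one read offset per consumer."""

    def __init__(self):
        self._records = []
        self._offsets = {}

    def append(self, record):
        self._records.append(record)

    def poll(self, consumer, max_records=10):
        """Return the next unread records for this consumer and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch

    def lag(self, consumer):
        """Number of records the consumer has not read yet."""
        return len(self._records) - self._offsets.get(consumer, 0)


log = TopicLog()

# Burst from edge devices while the backend service is down.
for i in range(5):
    log.append({"device": "hvac-1", "seq": i})
lag_during_outage = log.lag("backend")  # nothing consumed yet

# The backend comes back and catches up in small batches.
first = log.poll("backend", max_records=3)
second = log.poll("backend", max_records=3)
lag_after_catch_up = log.lag("backend")
```

The producers never notice the outage: they keep appending, and the consumer resumes from its last position at its own pace once it is back.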

Ampeers Energy: Decarbonization for the Real Estate with OPC-UA and Confluent Cloud

The building sector is one of the major sources of climate-damaging CO₂ emissions. AMPEERS ENERGY achieves a significant CO₂ reduction of up to 90% – through intelligent software and established partners for the necessary hardware and services. The technology determines the optimal energy concept and automates processes to market locally generated energy, for example, to tenants.

The service provides district management with IoT-based forecasts and optimization, and local energy usage accounting. The real-time analytics of time-series data is implemented with OPC-UA, Confluent Cloud and TimescaleDB.

The solution is either deployed as fully managed services or with hybrid edge nodes if security or compliance requires this.

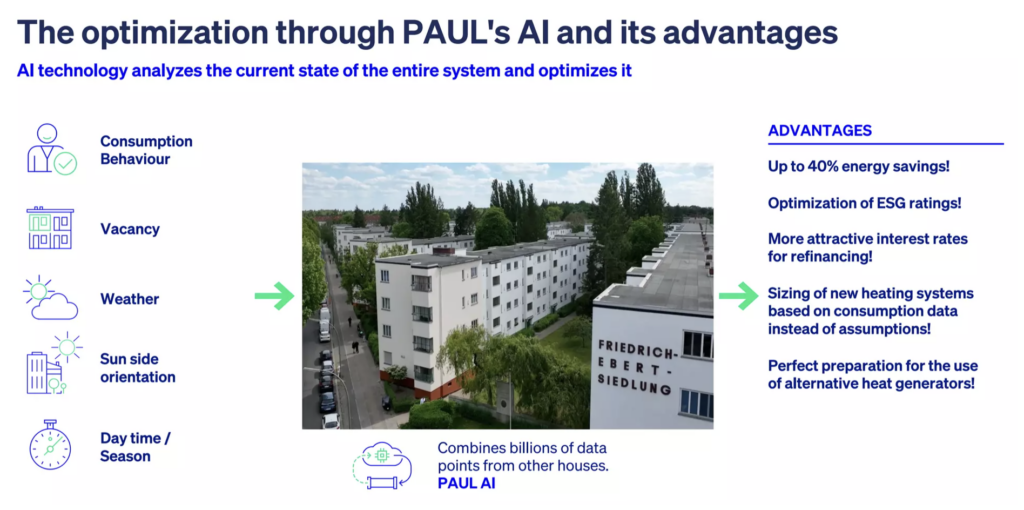

PAUL Tech AG: Energy Savings and Optimized ESG Ratings without PLC / SCADA

The PAUL Tech AG digitalizes central building systems by installing intelligent hardware in water-bearing systems. PAUL optimizes and automates volume flows by using artificial intelligence, resulting in significant energy and CO₂ savings.

The platform helps to optimize ESG ratings and enables energy savings of up to 40 percent.

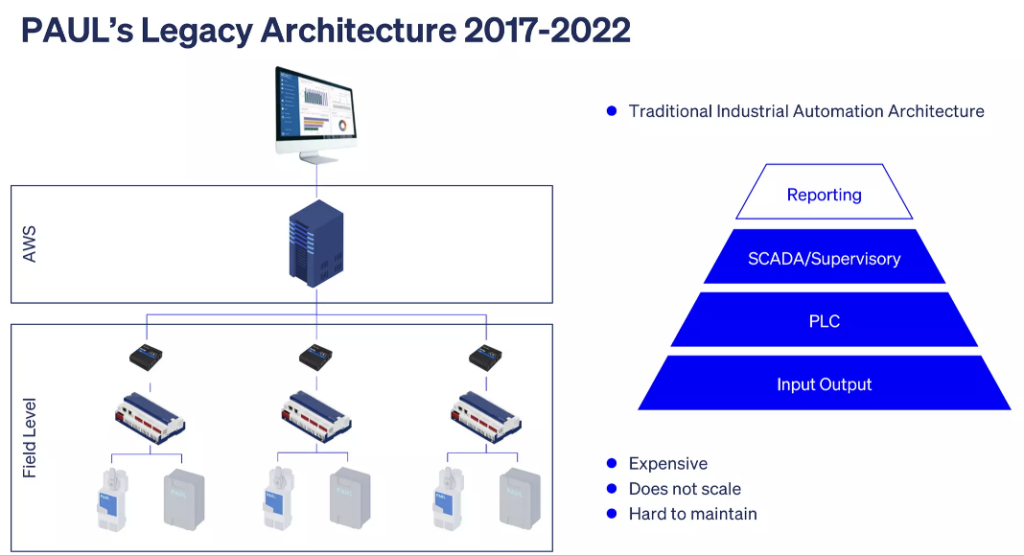

PAUL’s legacy architecture shows the traditional deployment patterns of the past using complex, expensive, proprietary PLCs and SCADA systems. The architecture is expensive, does not scale, and is hard to maintain:

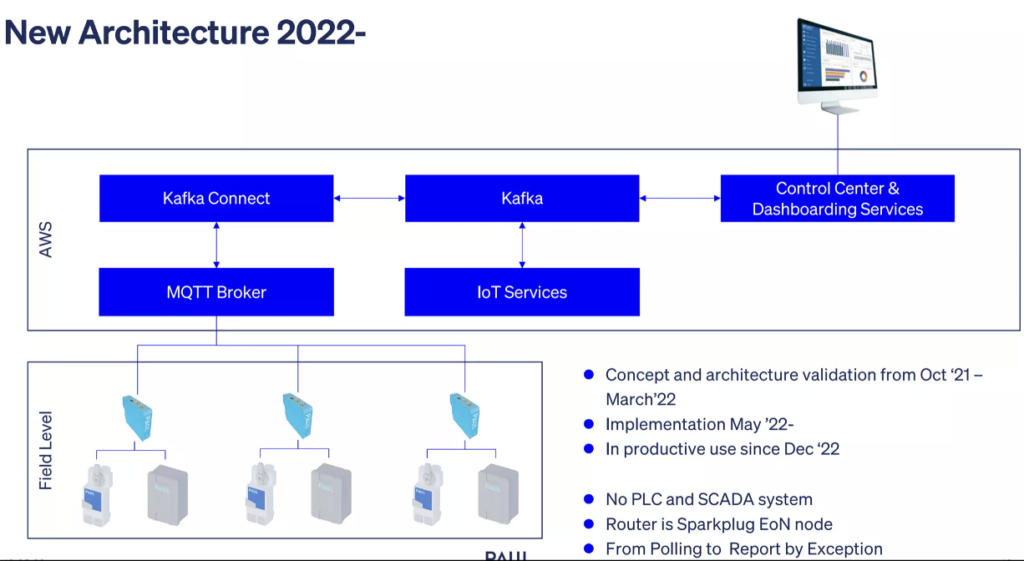

PAUL’s cloud-native architecture is open and elastic. It does not require PLCs and SCADA systems. Instead, open standards like MQTT and Sparkplug connect the Industrial IoT with the IT environments. Apache Kafka in the AWS cloud connects, streams and processes the IoT data and shares information with dashboards and other data management systems:

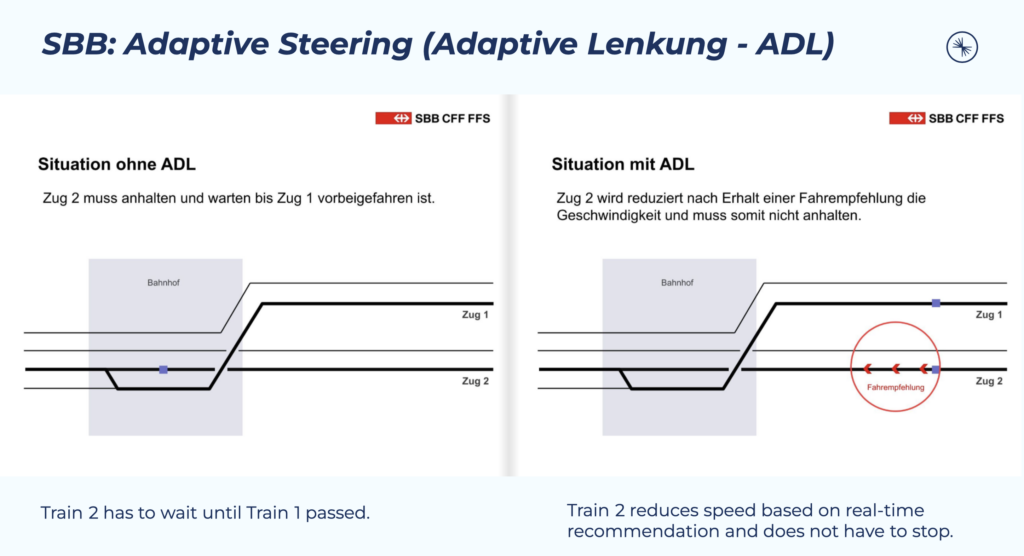

SBB: Trains use Adaptive Steering with Sustainability Effect

SBB (Swiss Federal Railways / Schweizerische Bundesbahnen in German) is the national railway company of Switzerland. It’s known for its extensive network of trains connecting various cities and regions within Switzerland, as well as international routes to neighboring countries.

The company uses adaptive steering to save electricity and to improve punctuality and comfort.

The so-called RCS module Adaptive Direction Control (ADL) aims to reduce power consumption by preventing unnecessary train stops and thus energy-intensive restarts. RCS-ADL calculates the energy-optimized speed and sends this to the train driver’s tablet.

Based on these calculations, RCS-ADL provides the corresponding speed recommendations. These are purely driving recommendations and not driving regulations. The external signals still have priority.
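The intuition behind such a recommendation can be shown with simple arithmetic: if a conflict point ahead will only clear after a certain time, a train that slows down early can arrive just as the track becomes free instead of stopping and restarting. The following is a deliberately simplified sketch in Python, assuming constant speed and ignoring acceleration and braking profiles. It is not SBB’s RCS-ADL algorithm; the function name and all numbers are hypothetical.

```python
def recommended_speed_kmh(distance_m, seconds_until_clear, max_speed_kmh):
    """Speed at which the train reaches the conflict point just as it clears,
    capped at the permitted maximum speed (constant-speed assumption)."""
    if seconds_until_clear <= 0:
        return max_speed_kmh  # already clear: no reason to slow down
    speed_kmh = (distance_m / seconds_until_clear) * 3.6  # m/s -> km/h
    return min(speed_kmh, max_speed_kmh)


# Hypothetical situation: 6 km to a junction that clears in 5 minutes.
speed = recommended_speed_kmh(6000, 300, max_speed_kmh=120)
```

In this made-up situation, 6,000 m in 300 s is 20 m/s, so the recommendation comes out at roughly 72 km/h instead of driving at 120 km/h and then waiting at a red signal.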

SBB creates the following sustainable benefits:

- Energy is produced by early electric braking.

- Energy is saved by avoiding stopping.

- No loss of time because of the acceleration phase.

- The train leaves the point of conflict at maximum speed.

The event-driven architecture is powered by Apache Kafka and Confluent. It consumes and correlates the following events:

- Speed recommendation to train driver

- Calculations of train speeds

- Recognition of unplanned stops

- Prognosis / statistical analysis on likelihood of stop

- Disposition system (Fleet management + train driver availability)

- Operating situation (Healthy, conflict situation, ..)

- Train steering

- Rolling stock material

- Train timetable

- Infrastructure data

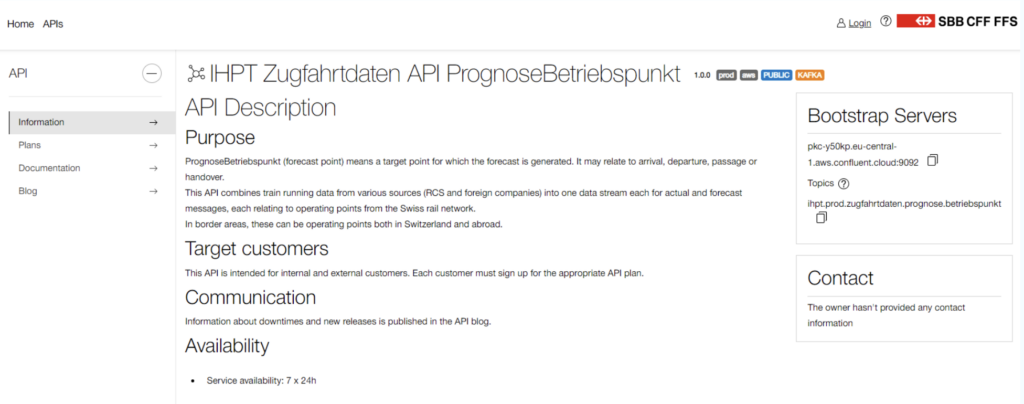

SBB provides Open APIs for relevant train and customer information. Most endpoints are still API-based, i.e. HTTP/REST. Some streaming endpoints provide a direct public Kafka API:

Streaming data sharing is a common trend and complementary to existing API gateways and management tools like Mulesoft, Apigee or Kong. Read my article “Streaming Data Exchange with Kafka and a Data Mesh in Motion” for more details.

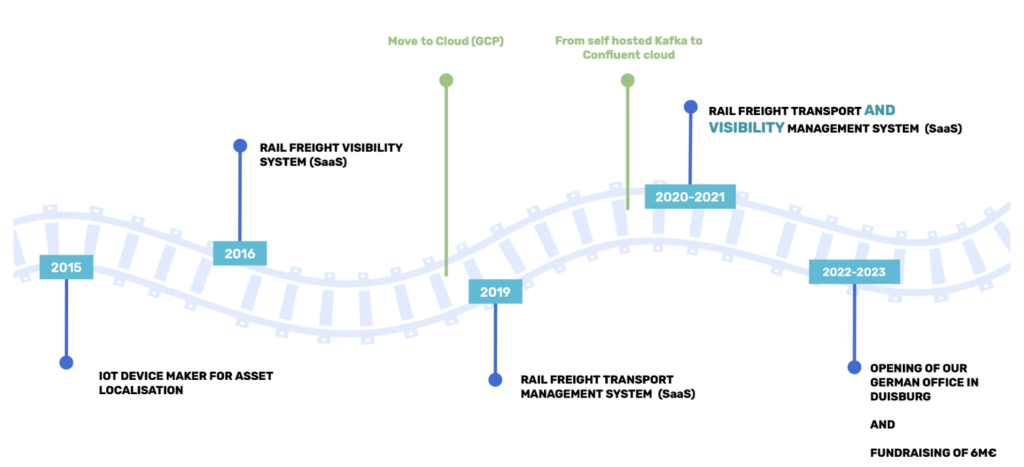

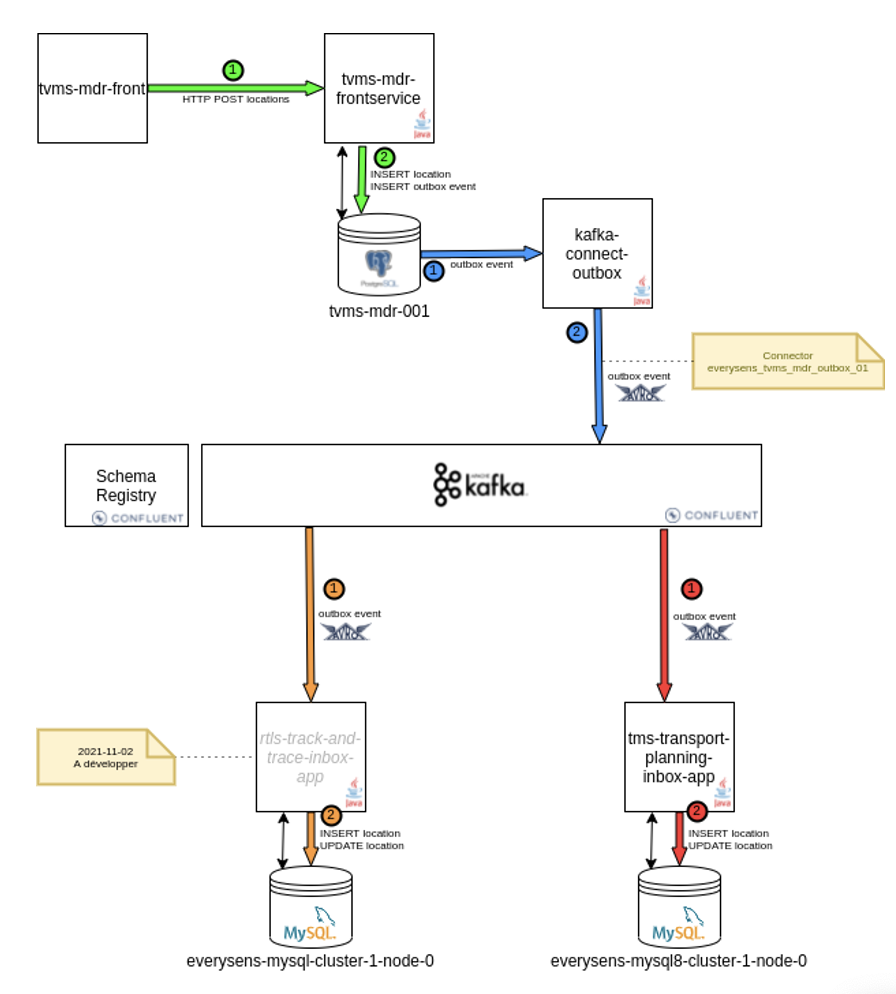

EverySens: Decarbonize Freight Transport with a Cloud-Native Transport Management System (TMS)

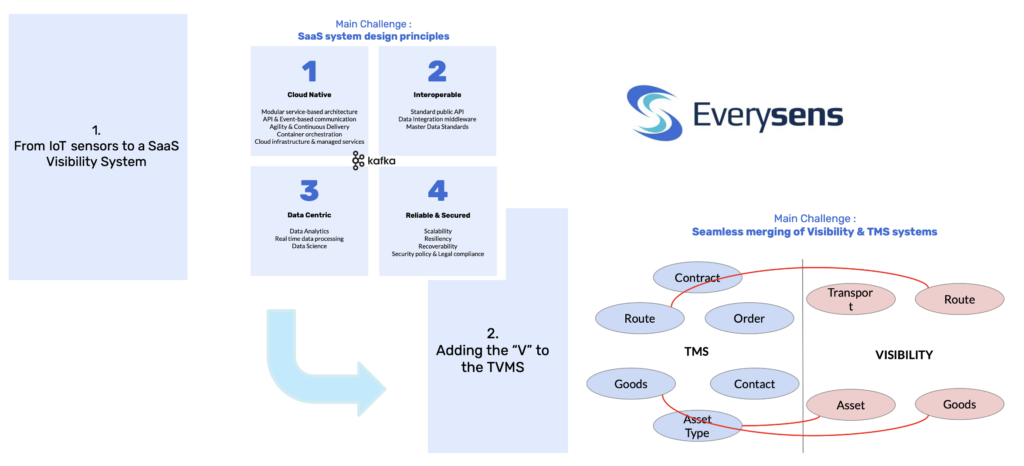

Everysens offers a Transport Visibility & Management System (TVMS): A new cloud-native generation of Transport Management System (TMS) integrating real-time visibility deep into the logistics processes of its customers.

Here are a few statistics from Everysens’ website:

- 18 300 managed trains each year

- 40% reduction in weekly planning time

- 42 countries covered

- 732K trucks avoided each year

The last bullet point impressively shows how real-time data streaming helps reduce unnecessary carbon emissions. The solution avoided hundreds of thousands of truck rides. After building a cloud-native transport management system (TMS), merging visibility and TMS adds tremendous business value.

Everysens presented its data streaming journey at the Data in Motion Tour Paris 2023. Their evolution looks very similar to other companies. Data streaming with Kafka and Flink is a journey, not a race!

The technical architecture is not all that surprising. Kafka is the data hub and connects, streams, and processes events across the data pipeline:

It is noteworthy that many sources and sinks are traditional relational databases like MySQL or PostgreSQL. One of the most underestimated features of Kafka is its event store and the capability to truly decouple systems and guarantee data consistency across real-time and non-real-time systems.

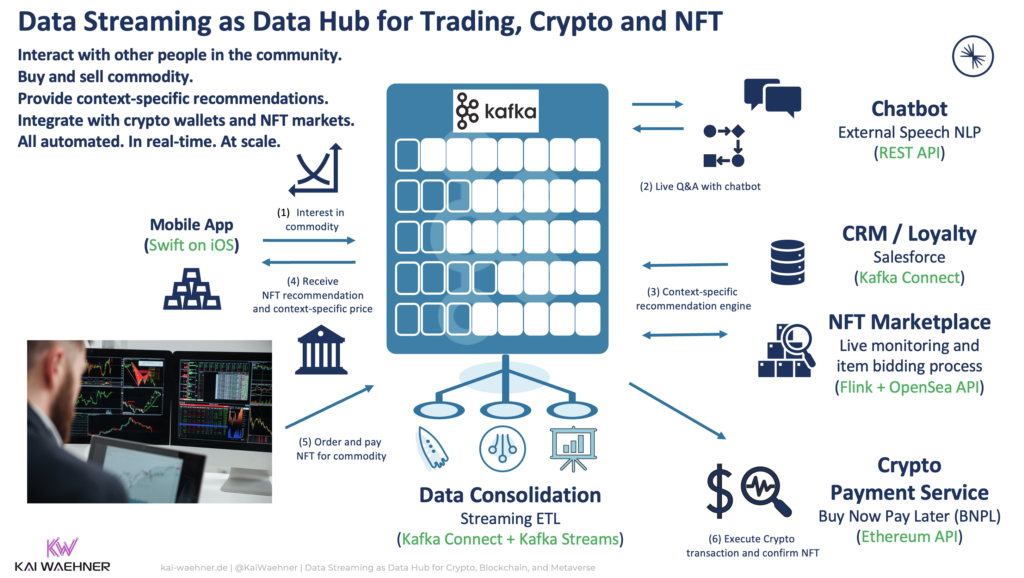

Powerledger: Green Energy Trading with Blockchain, Kafka and MongoDB

Powerledger develops software solutions for the tracking, tracing and trading of renewable energy. The solution is powered by Blockchain, Kafka and MongoDB for green energy trading.

The combination of data streaming and blockchain is very common in FinTech and cryptocurrency projects. Here are a few interesting facts about the platform:

- Blockchain-based energy trading platform

- Facilitating peer-to-peer (P2P) trading of excess electricity from rooftop solar power installations and virtual power plants

- Traceability with non-fungible tokens (NFTs) representing renewable energy certificates (RECs)

- Decentralised rather than the conventional unidirectional market

- Benefits: Reduced customer acquisition costs, increased customer satisfaction, better prices for buyers and sellers (compared with feed-in and supply tariffs), and provision for cross-retailer trading

- Apache Kafka via Confluent Cloud as a core piece of the integration infrastructure, specifically to ingest data from smart electricity meters and feed it into the trading system

Here is an example architecture for the integration between data streaming and trading via NFT tokens:

- Apache Kafka and Blockchain – Comparison and a Kafka-native Implementation

- Apache Kafka as Data Hub for Crypto, DeFi, NFT, Metaverse – Beyond the Buzz

- Apache Kafka in Crypto and FinServ for Cybersecurity and Fraud Detection

Streaming for a Better World: Unleashing ESG Potential with Kafka and Flink

This article explored how organizations can achieve Environmental, Social, and Governance (ESG) excellence through the utilization of innovative tools like Apache Kafka and Flink. By leveraging the real-time data processing capabilities of these platforms, companies can translate their sustainability aspirations into concrete actions, driving meaningful change and enhancing their ESG ratings across diverse industries.

The case studies showed the value of data streaming to improve ESG scores across use cases such as managing real estate, monitoring IoT data streams, or trading renewable energy. In a similar way, project teams can evaluate whether the vendors of the software solutions and cloud services they use are committed to developing a strong ESG program and strategy to conduct business with integrity and build a sustainable future. As an example from the data streaming space, look at Confluent’s ESG Report to understand how ESG matters for many software vendors, too.

How do you build sustainable software projects to improve your ESG ratings? Are data streaming and real-time data correlation part of the story already? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.