How Siemens, SAP, and Confluent Shape the Future of AI Ready Integration – Highlights from the Rojo Event in Amsterdam

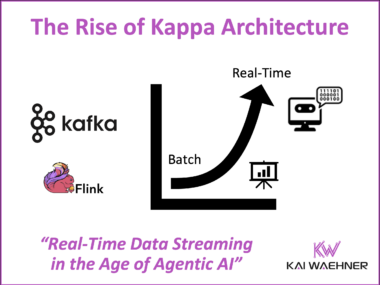

Many enterprises want to become AI-ready but are held back by slow, batch-based integration platforms that prevent real-time insight and automation. The Rojo “Future of Integration” event in Amsterdam addressed this challenge by bringing together Siemens, SAP, Rojo, and Confluent to show how event-driven, intelligent data architectures solve it. The discussions showed how data streaming with Apache Kafka and Apache Flink complements traditional integration tools by enabling continuous data flow and scalability and by providing the foundation for AI and automation. This blog post summarizes the key learnings from the event, including my presentation “AI Ready Integration with Data Streaming,” and insights from Siemens, SAP, and Rojo on how enterprises can build truly connected, AI-ready ecosystems.