The retail industry is changing fundamentally. Traditional players have to rethink their business to stay competitive: new business models, a great customer experience, and automated real-time supply chain processes have become mandatory. Event Streaming with Apache Kafka plays a key role in re-inventing the retail business. This blog post explores use cases, architectures, and real-world deployments of Apache Kafka, including edge, hybrid, and global retail deployments at companies such as Walmart and Target.

Disruptive Trends in Retail

A few general trends are reshaping the retail industry:

- Highly competitive market with thin margins

- Moving from High Street (brick & mortar) to online (omnichannel)

- Personalized Customer Experience – optimal buyer journey

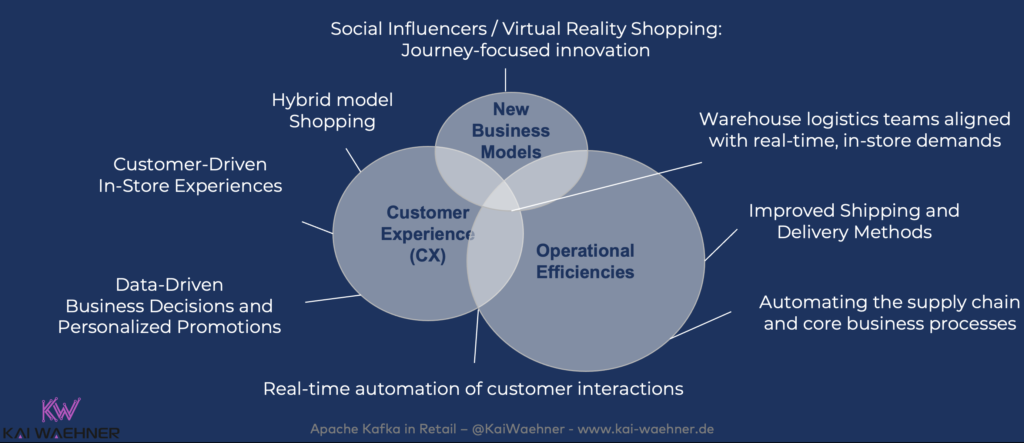

These trends require retail companies to create new business models, provide a great customer experience, and improve operational efficiencies.

Event Streaming with Apache Kafka in Retail

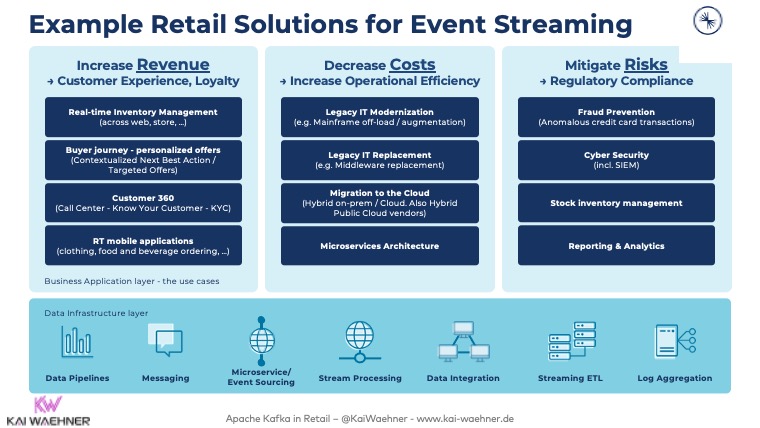

Many use cases for event streaming are not new. What Apache Kafka adds is faster processing at a larger scale, at lower cost and with reduced risk.

Hence, Kafka is not just used for greenfield projects in the retail industry. It very often complements existing applications in a brownfield architecture. Plenty of material explores this topic in more detail. For instance, check out the following:

- Kafka as modern middleware as a replacement or together with other MQ / ETL / ESB infrastructure

- Kafka in conjunction with API Management tools

- Integration with legacy mainframe applications

- Event Streaming as the foundation of postmodern ERP, logistics, and transportation systems

Let’s now take a look at a few public examples that leverage all the above capabilities.

Real World Use Cases for Kafka in Retail

Various deployments across the globe leverage event streaming with Apache Kafka for very different use cases. Kafka is a good fit whether you need to optimize the supply chain, disrupt the market with innovative business models, or build a context-specific customer experience. Here are a few examples:

- Walmart – Real-Time Inventory System: 8,500 nodes processing 11 billion events per day deliver an omnichannel experience so every customer can shop the way they want to

- Target – Omnichannel Distribution and Logistics: Data correlation of events from distribution centers, stores, digital channels, and customer interactions enables real-time omnichannel scale

- Nuuly – Clothing Rental Subscription Service: Very different from a typical e-commerce model with the need for a real-time event-driven architecture

- AO.com – Context-specific Customer 360: Hyper-personalized online retail experience, turning each customer visit into a one-on-one marketing opportunity via a correlation of historical customer data with real-time digital signals

- Mojix – Retail and Supply Chain IoT Platform: Real-time operational intelligence across the edge and the cloud improves inventory accuracy and supports omnichannel sales
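To make the real-time inventory idea more concrete, here is a minimal sketch in plain Python. It does not use a Kafka client and is not how any of the companies above implement their systems. The event fields (`sku`, `location`, `delta`) and the function names are hypothetical. The sketch only shows the core pattern: every stock change is an event, and the current inventory is a running aggregation over that stream.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEvent:
    """One stock change; all field names are hypothetical."""
    sku: str
    location: str  # store, distribution center, or online channel
    delta: int     # positive for a restock, negative for a sale


def apply_events(events):
    """Fold a stream of events into a stock level per (sku, location)."""
    stock = defaultdict(int)
    for event in events:
        stock[(event.sku, event.location)] += event.delta
    return dict(stock)


def available_everywhere(stock, sku):
    """Total units of one SKU across all locations (the omnichannel view)."""
    return sum(qty for (s, _), qty in stock.items() if s == sku)


events = [
    InventoryEvent("shoe-42", "store-berlin", +10),
    InventoryEvent("shoe-42", "dc-hamburg", +50),
    InventoryEvent("shoe-42", "store-berlin", -3),  # in-store sale
    InventoryEvent("shoe-42", "dc-hamburg", -1),    # online order
]
stock = apply_events(events)
print(stock[("shoe-42", "store-berlin")])
print(available_everywhere(stock, "shoe-42"))
```

In a real deployment, the list of events would be a continuously consumed topic, and the aggregation would run in a stream processing application instead of a single loop.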

The architectures of retail deployments often leverage a fully-managed serverless infrastructure with Confluent Cloud or deploy in hybrid architectures across data centers, clouds, and edge sites. Let’s now take a look at one example.

Omnichannel and Customer 360 across the Supply Chain with Kafka

Omnichannel retail requires the combination of many different tasks and applications across the whole supply chain. Some tasks run in real time, while others work on batch or historical data:

- Customer interactions, including website, mobile app, on-site in store

- Reporting and analytics, including business intelligence and machine learning

- R&D and manufacturing

- Marketing, loyalty system, and aftersales

The real business value is generated by correlating all the data from these systems in real time. That’s where Kafka is a perfect fit due to its combination of different capabilities: real-time messaging at scale, storage for decoupling and caching, data integration, and continuous data processing.

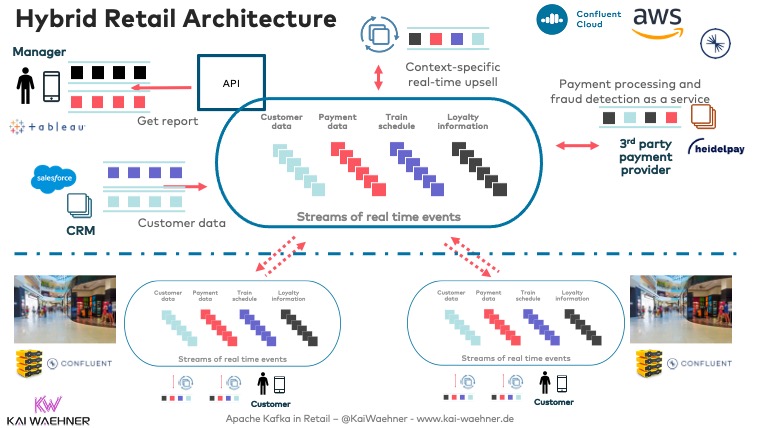
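The correlation of historical customer data with real-time signals can be sketched as a join between a stream and a lookup table. The following plain-Python example is only an illustration under stated assumptions: the `profiles` dictionary stands in for historical customer data, the click dictionaries stand in for real-time events, and all field names (`customer_id`, `segment`, `last_purchase`, `page`) are hypothetical. In Kafka Streams, a comparable pattern would be a stream-table join, but no Kafka API is used here.

```python
def enrich(click_stream, profiles):
    """Yield each click event joined with the customer's stored profile."""
    for click in click_stream:
        # Customers without a stored profile get default values.
        profile = profiles.get(click["customer_id"], {})
        yield {
            **click,
            "segment": profile.get("segment", "unknown"),
            "last_purchase": profile.get("last_purchase"),
        }


# Historical data, for example loaded from a loyalty system (hypothetical).
profiles = {
    "c-1": {"segment": "runner", "last_purchase": "trail shoes"},
}

# Real-time signals from the website or mobile app (hypothetical).
clicks = [
    {"customer_id": "c-1", "page": "/socks"},
    {"customer_id": "c-9", "page": "/home"},
]

enriched = list(enrich(clicks, profiles))
for event in enriched:
    print(event)
```

The enriched events carry both the live context (the page being viewed) and the history (segment, last purchase), which is what a context-specific recommendation needs.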

Hybrid Architecture from Edge to Cloud

The following picture shows a possible retail architecture leveraging event streaming. It runs many mission-critical workloads and integrations in the cloud. However, the context-specific recommendations, point-of-sale payment and loyalty processing, and other relevant use cases are executed at the disconnected edge in each retail store:

I will dig deeper into this architecture and talk about specific requirements and challenges solved with Kafka at the edge and in the cloud for retailers. For now, check out the following posts to learn about global Kafka deployments and Kafka at the edge in the retail stores:

- Kafka is the new black at the edge in retail stores

- Use cases and architectures for Kafka outside the data center

- Architectures for hybrid and global Kafka deployments
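The disconnected edge pattern described above can be illustrated with a small store-and-forward sketch. This is plain Python under simplifying assumptions, not a Kafka deployment: the `EdgeBuffer` class, its `send` callback, and the event fields are hypothetical. In a real store, a local Kafka cluster and a replication tool would typically take this role. The sketch shows the key behavior: the store keeps working while offline, and buffered events reach the cloud in order once the connection returns.

```python
from collections import deque


class EdgeBuffer:
    """Buffers store events locally and forwards them when online."""

    def __init__(self, send):
        self._send = send        # callback that delivers one event upstream
        self._pending = deque()  # events not yet confirmed upstream
        self.online = False

    def record(self, event):
        """Accept an event locally; forward right away if connected."""
        self._pending.append(event)
        if self.online:
            self.flush()

    def flush(self):
        """Forward buffered events in arrival order."""
        while self._pending:
            # Send first, remove afterwards: if send raises, the event
            # stays buffered and is retried (at-least-once delivery).
            self._send(self._pending[0])
            self._pending.popleft()

    @property
    def backlog(self):
        return len(self._pending)


cloud = []  # stands in for the central cluster
store = EdgeBuffer(send=cloud.append)

# The store is offline: events are accepted but not forwarded.
store.record({"type": "payment", "amount": 19.99})
store.record({"type": "loyalty_points", "points": 20})
print(store.backlog, len(cloud))

# The connection is back: the backlog is replicated in order.
store.online = True
store.flush()
print(store.backlog, len(cloud))
```

Removing an event only after a successful send means a failure can lead to duplicates upstream, so the receiving side should tolerate repeated events.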

Slides and Video – Disruption in Retail with Kafka

The following slide deck explores the usage of Kafka in retail in more detail:


Also, here is a link to the on-demand video recording:

Software (including Kafka) is Eating Retail

In conclusion, Event Streaming with Apache Kafka plays a key role in this evolution of re-inventing the retail business. Walmart, Target, and many other retail companies rely on Apache Kafka and its ecosystem to provide a real-time infrastructure to make the customer happy, increase revenue, and stay competitive in this tough industry.

What are your experiences and plans for event streaming in the retail industry? Did you already build applications with Apache Kafka? Let’s connect on LinkedIn and discuss it!