The automotive industry is undergoing its most profound transformation in over a century. What was once defined by mechanical engineering is now led by software, data, and AI. From smart factories and connected vehicles to autonomous systems and personalized mobility, innovation increasingly depends on the ability to process and act on data in real time.

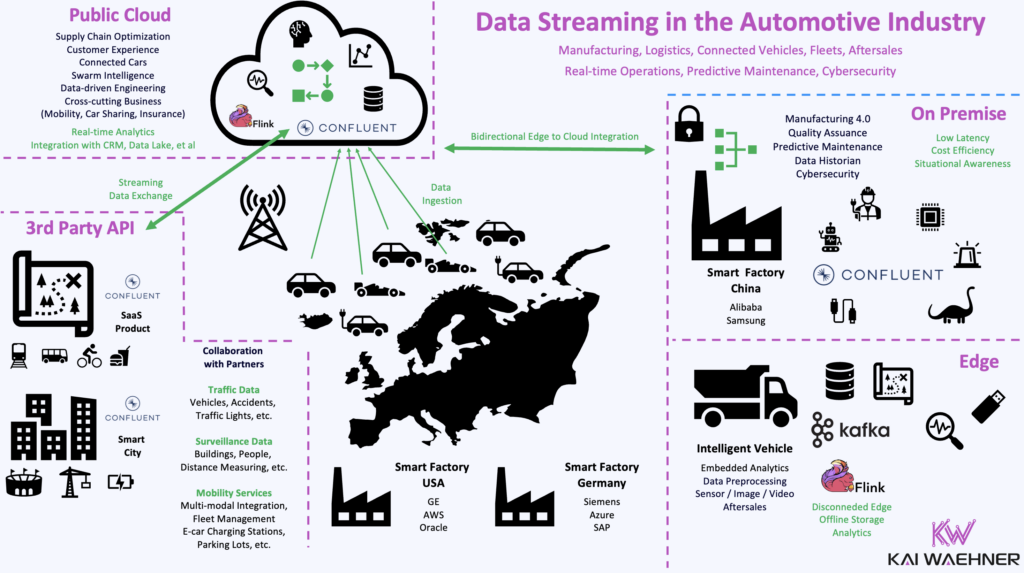

Data Streaming technologies like Apache Kafka and Apache Flink have become foundational in this shift. They enable the real-time data infrastructure that modern automotive use cases demand—bridging edge and cloud, integrating IT and OT, and powering everything from predictive maintenance to customer engagement and AI-driven vehicle functions.

This blog explores how the world’s leading OEMs, suppliers, and mobility platforms are embracing data streaming to modernize their operations, accelerate digital innovation, and redefine what’s possible across the vehicle lifecycle.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. And make sure to download my free book about data streaming use cases.

Digitalization and Innovation in the Automotive Industry

The automotive industry is transforming into a software-centric, data-driven ecosystem. Traditional engineering cycles are being replaced by agile, cloud-native development. Physical assets are becoming software-defined products. AI and real-time data processing are at the core of this shift.

This transformation impacts every function – from R&D and production to aftersales and mobility services. Real-time decision-making, event-based integration, and intelligent automation are essential for maintaining competitiveness, reducing cost, and improving time-to-market.

Data streaming technologies like Apache Kafka and Apache Flink are foundational to this change. They enable real-time data pipelines, decouple producers and consumers, and support complex event processing. When combined with AI/ML, they allow manufacturers to build intelligent, adaptive automotive systems at scale.

Data Streaming in the Automotive Industry

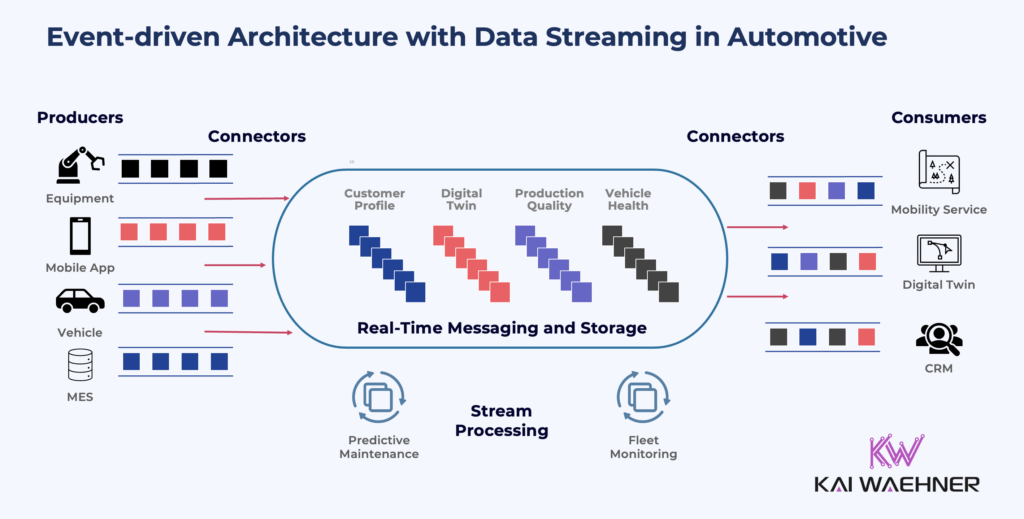

Automotive companies deal with vast and growing volumes of data: from in-vehicle sensors to edge devices in smart factories to backend systems in the cloud. Static, batch-oriented architectures are no longer sufficient when vehicles, machines, and customers expect a reaction within seconds rather than after the next batch run.

An event-driven architecture enables real-time processing and responsiveness by reacting instantly to changes across vehicles, factories, and cloud systems.
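To make the decoupling concrete, here is a minimal Python sketch of an append-only event log with one read position (offset) per consumer group. It is a toy model of the idea behind a Kafka topic, not the Kafka client API; the topic name, service names, and event fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class InMemoryTopic:
    """Toy append-only event log standing in for a Kafka topic (not the Kafka API)."""
    name: str
    events: list[Any] = field(default_factory=list)
    # Each consumer group keeps its own read position, so consumers never block each other.
    offsets: dict[str, int] = field(default_factory=dict)

    def produce(self, event: Any) -> int:
        """Append an event and return its offset; the producer knows nothing about consumers."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group: str) -> list[Any]:
        """Return every event this group has not seen yet and advance its offset."""
        start = self.offsets.get(group, 0)
        batch = self.events[start:]
        self.offsets[group] = len(self.events)
        return batch


topic = InMemoryTopic("vehicle-events")  # hypothetical topic name
topic.produce({"vin": "VIN-001", "type": "door_opened"})
topic.produce({"vin": "VIN-001", "type": "engine_started"})

# Two independent services consume the same events at their own pace.
alerting_batch = topic.poll("alerting-service")
analytics_batch = topic.poll("analytics-service")

topic.produce({"vin": "VIN-002", "type": "crash_detected"})
alerting_next = topic.poll("alerting-service")

# A service added later can still replay the full history from offset 0.
replay = topic.poll("new-reporting-service")
```

Because events are retained instead of being deleted on read, the analytics service in this sketch could fall behind or restart without affecting the alerting service.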

Use Cases and Success Stories for Data Streaming

Apache Kafka is at the heart of digital transformation across the automotive sector. Carmakers and suppliers use it to move from siloed, batch-oriented systems to real-time, event-driven platforms. A few examples:

- BMW uses Kafka on the shop floor and in the cloud to connect machines, systems, and applications in real time. This supports smart manufacturing, reduces downtime, and improves supply chain visibility. They also combine Kafka with natural language processing (NLP) to automate tasks like contract analysis and service desk operations.

- Audi built its connected car architecture using Kafka. Real-time data from vehicles powers features like predictive AI, swarm intelligence, and digital twins. Their “Audi Data Collector” platform ensures scalable and reliable ingestion from millions of connected cars.

- Tesla runs a massive Kafka infrastructure to support its vehicles, energy systems, and Gigafactories. Kafka processes telemetry, charging data, and factory metrics to enable real-time decision-making across the company.

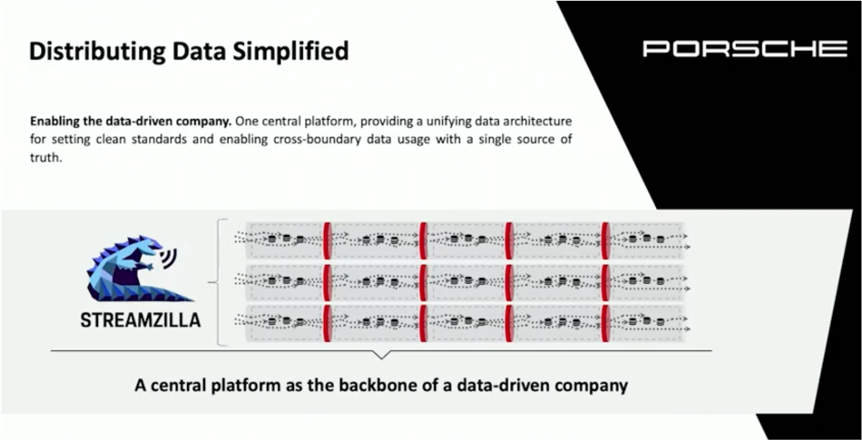

- Porsche built the ‘My Porsche’ platform to deliver a seamless digital experience across web, app, in-car, and in-store channels. Kafka powers customer 360 use cases, OTA updates, and a central streaming platform called Streamzilla that connects systems across clouds and regions.

Across all these examples and many more, data streaming with Apache Kafka enables decoupled, scalable data flows. It connects legacy systems, equipment, vehicles, and IT software applications with cloud-native platforms and supports use cases ranging from predictive maintenance to real-time personalization. Data streaming is no longer optional—it’s a core capability for modern automotive innovation.

Explore more details about the above case studies here: Data Streaming Automotive Use Cases and Case Studies.

Enterprise Architecture for Data Streaming at Scale

Automotive use cases span edge, on-prem, and cloud environments. To support these diverse requirements, most organizations adopt a hybrid architecture powered by Kafka and its ecosystem.

Edge streaming collects and processes sensor data locally – either in the vehicle or on the factory floor. Local processing enables low-latency decision-making, reduces data transfer costs, and ensures compliance with data residency or security requirements. Common use cases include anomaly detection, real-time alerts, and first-stage filtering before sending data to the cloud.
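First-stage filtering at the edge can be as simple as a deadband filter plus a local alert threshold. The following Python sketch assumes hypothetical threshold values and a plain list of coolant temperature readings; a real edge deployment would read from a local broker or Kafka cluster instead.

```python
from typing import Iterable, Iterator, Optional, Tuple


def edge_filter(
    readings: Iterable[float],
    deadband: float = 0.5,
    alert_limit: float = 90.0,
) -> Iterator[Tuple[str, float]]:
    """First-stage processing at the edge before anything is sent to the cloud.

    deadband: minimum change (e.g. in degrees Celsius) before a reading is forwarded (assumed).
    alert_limit: value that triggers an immediate local alert (assumed).
    """
    last_sent: Optional[float] = None
    for value in readings:
        if value >= alert_limit:
            # Low-latency decision made locally, without a round trip to the cloud.
            yield ("alert", value)
        if last_sent is None or abs(value - last_sent) >= deadband:
            # Small fluctuations never leave the edge, which saves bandwidth.
            last_sent = value
            yield ("forward", value)


coolant_temps = [70.0, 70.2, 70.4, 71.0, 71.1, 92.5]  # hypothetical sensor values
output = list(edge_filter(coolant_temps))
forwarded = [v for kind, v in output if kind == "forward"]
alerts = [v for kind, v in output if kind == "alert"]
```

Here six readings shrink to three forwarded values, while the out-of-range reading raises an alert on the spot.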

Kafka Connect moves relevant data into cloud platforms or centralized data lakes. In addition to data integration, Cluster Linking is often used to replicate data from edge or on-prem Kafka clusters to cloud environments. This supports centralized analytics, AI training, or long-term aggregation, without compromising operational performance at the edge.
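Replication from the edge has to cope with intermittent connectivity. The Python sketch below illustrates the general idea of offset-based replication: the replicator remembers the first source offset it has not yet copied and resumes from there after an outage. It is a simplified model, not how Cluster Linking or Kafka Connect are implemented; `Log` and `Mirror` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Log:
    records: List[str] = field(default_factory=list)


@dataclass
class Mirror:
    """Toy edge-to-cloud replicator that resumes from the last replicated offset."""
    source: Log
    target: Log
    next_offset: int = 0  # first source offset not yet copied

    def sync(self, online: bool = True) -> int:
        """Copy pending records if the uplink is available; return how many were copied."""
        if not online:
            return 0  # nothing is lost: records stay buffered in the edge log
        pending = self.source.records[self.next_offset:]
        # Appending in the same order keeps source and target offsets aligned 1:1.
        self.target.records.extend(pending)
        self.next_offset += len(pending)
        return len(pending)


edge, cloud = Log(), Log()
mirror = Mirror(edge, cloud)
edge.records.extend(["r0", "r1"])
first = mirror.sync()                # copies r0, r1
edge.records.append("r2")
during_outage = mirror.sync(online=False)
edge.records.append("r3")
after_reconnect = mirror.sync()      # copies r2, r3
```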

Flink applications process and enrich the data in motion. While commonly used for streaming ETL, Flink also supports full business logic. Use cases include predictive maintenance, real-time scoring, or stateful monitoring—making Flink suitable for both backend analytics and operational applications.
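Stateful monitoring means the processor remembers something per key (for example per machine) between events. In Flink this would typically be a `KeyedProcessFunction` holding `ValueState`; the sketch below mimics that idea in plain Python instead of using Flink's API. The rule (three consecutive temperature increases) and the machine IDs are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class RisingTrendState:
    """Per-key state, comparable to what Flink keeps in keyed ValueState."""
    last_value: Optional[float] = None
    rising_count: int = 0


class RisingTemperatureMonitor:
    """Flags a machine after `limit` consecutive temperature increases (illustrative rule)."""

    def __init__(self, limit: int = 3) -> None:
        self.limit = limit
        # One independent state object per key (machine ID), like a keyed stream.
        self.state = defaultdict(RisingTrendState)

    def process(self, machine_id: str, temperature: float) -> Optional[str]:
        s = self.state[machine_id]
        if s.last_value is not None and temperature > s.last_value:
            s.rising_count += 1
        else:
            s.rising_count = 0
        s.last_value = temperature
        if s.rising_count >= self.limit:
            s.rising_count = 0  # reset so each trend is reported once
            return f"{machine_id}: temperature rising for {self.limit} consecutive readings"
        return None


monitor = RisingTemperatureMonitor(limit=3)
events = [("press-1", 60.0), ("press-2", 55.0), ("press-1", 61.0),
          ("press-1", 62.5), ("press-2", 54.0), ("press-1", 64.0)]
alerts = []
for machine_id, temp in events:
    result = monitor.process(machine_id, temp)
    if result:
        alerts.append(result)
```

Because state is keyed, readings from `press-2` interleaved in the stream do not reset the trend detected for `press-1`.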

Kafka topics act as the integration layer between microservices, AI pipelines, dashboards, and third-party systems. In modern architectures, Kafka combined with a schema registry also enables data products and data contracts. Data contracts enforce schema compatibility, improve data quality, and provide clear ownership for distributed data pipelines.
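A data contract is only useful if schema changes are checked before they reach consumers. Confluent Schema Registry supports several compatibility modes (BACKWARD is the default). The Python sketch below approximates a backward-compatibility check with a deliberately simplified schema model; real checks follow the resolution rules of Avro, Protobuf, or JSON Schema (including type promotions), which this sketch ignores. The field names are hypothetical.

```python
from typing import Any

# A schema is modeled here as {field_name: {"type": ..., optional "default": ...}}.
# This is a hypothetical simplification, not the Avro or Schema Registry format.
Schema = dict[str, dict[str, Any]]


def is_backward_compatible(old: Schema, new: Schema) -> tuple[bool, list[str]]:
    """Can consumers using `new` still read events written with `old`?

    Simplified rules: removed fields are fine (the reader ignores them),
    added fields need a default, and existing fields must keep their type.
    """
    problems: list[str] = []
    for name, spec in new.items():
        if name not in old:
            if "default" not in spec:
                problems.append(f"new field '{name}' has no default")
        elif old[name]["type"] != spec["type"]:
            problems.append(f"field '{name}' changed type {old[name]['type']} -> {spec['type']}")
    return (not problems, problems)


v1 = {"vin": {"type": "string"}, "speed_kmh": {"type": "double"}}
v2_ok = {**v1, "battery_soc": {"type": "double", "default": 0.0}}
v2_bad = {"vin": {"type": "string"}, "speed_kmh": {"type": "string"}, "gear": {"type": "int"}}

ok = is_backward_compatible(v1, v2_ok)
bad = is_backward_compatible(v1, v2_bad)
```

In a CI pipeline, a producer team could fail the build when `bad[0]` is `False`, so incompatible changes never reach shared topics.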

This architecture ensures flexibility, scalability, fault tolerance, and agility—key requirements for automotive use cases such as connected vehicles, smart factories, and customer-facing digital services. It enables event-driven, real-time data flows across all domains of the enterprise.

More details about the architectural patterns here: Apache Kafka Enterprise Architectures in the Automotive Industry.

Top Trends in the Automotive Industry and Their Connection to Data Streaming

The following key trends are shaping the future of the automotive and manufacturing industries:

- Paradigm Shift in Vehicle Design

- Simulation with Digital Twins and Virtualization

- AI-Driven Disruption

- Reimagining the Customer Experience

- New Collaboration Models

- Reducing Operational Complexity

- Data-Driven Software Development

- Self-Driving Cars and Autonomous Systems

Each of these trends is deeply influenced by real-time data streaming. Technologies like Apache Kafka and Flink play a critical role in enabling the flexibility, intelligence, and responsiveness these trends demand. This section explores how data streaming supports and accelerates each of these transformations for OEMs, suppliers, manufacturers, mobility providers, and other key players across the automotive ecosystem.

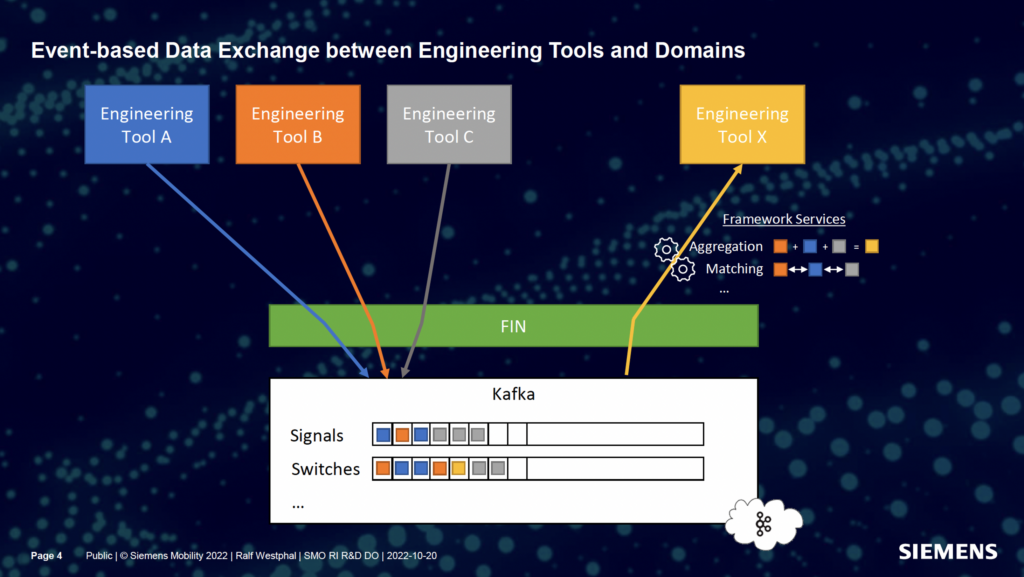

Paradigm Shift in Vehicle Design

Vehicles are no longer designed through isolated CAD workflows. Instead, digital engineering platforms create shared models that span design, simulation, and testing throughout vehicle development and manufacturing.

Data Streaming Integration:

Kafka pipelines connect tools across the lifecycle – from PLM systems to real-time feedback from test benches or prototype fleets. This enables early fault detection and design feedback loops based on real-world usage.

Use Case Example:

A Kafka-based feedback loop integrates design simulations with real-world telemetry from test drives, reducing the time from concept to validation.

Real-World Example:

Siemens Mobility exemplifies this paradigm shift by replacing point-to-point interfaces between engineering tools with a decoupled, event-driven architecture using fully managed Confluent Cloud. This enables consistent integration across engineering domains, real-time data exchange, and seamless collaboration, without requiring changes to existing tools. The result is a flexible, modular development environment that accelerates design cycles and supports continuous innovation in vehicle engineering.

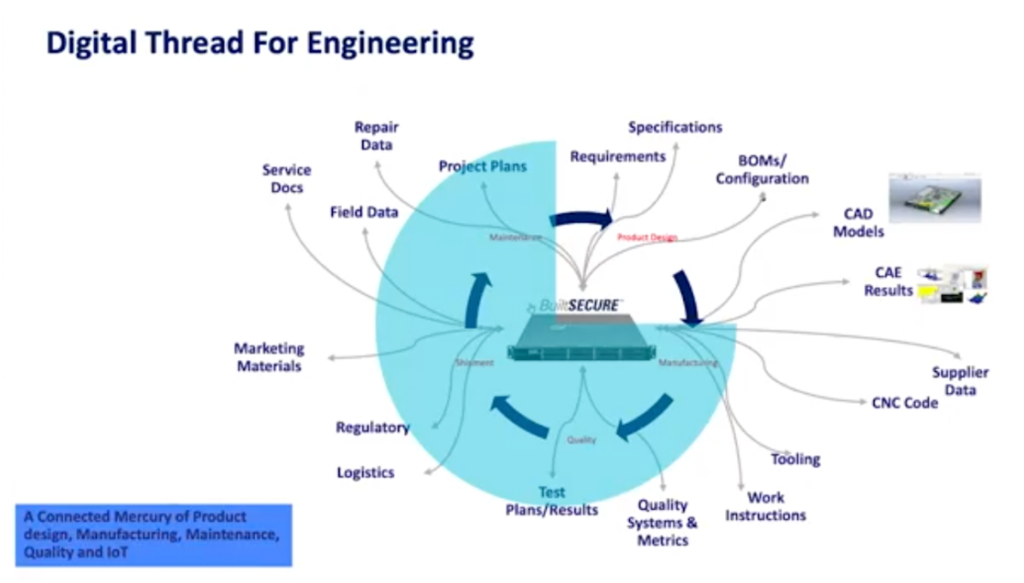

Simulation with Digital Twins and Virtualization

Digital twins replicate physical systems in the virtual world. They rely on real-time data streams to simulate, monitor, and optimize system behavior. This allows automotive and manufacturing companies to test features, predict failures, and accelerate development without relying solely on physical prototypes.

Data Streaming Integration:

Data streaming provides the data backbone between edge devices, simulators, and cloud models. Kafka ensures reliable, high-throughput transport of real-time data. Flink enriches these streams with business logic, context, or machine learning predictions to support dynamic simulation workflows.

Use Case Example:

A manufacturer simulates a powertrain under extreme conditions using live telemetry streamed via Kafka. This reduces the number of physical test cycles needed and allows engineering teams to validate software updates under varying load profiles in virtual environments.
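A digital twin has to stay consistent even when telemetry arrives late or twice. The Python sketch below keeps the latest value per signal and ignores events older than what the twin already knows, similar in spirit to materializing state from a stream in Flink or from a compacted Kafka topic. The class name, signal names, and event shape are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class PowertrainTwin:
    """Virtual replica of one powertrain, kept current from a telemetry stream."""
    asset_id: str
    # signal name -> (event timestamp in ms, value)
    signals: dict[str, tuple[int, Any]] = field(default_factory=dict)

    def apply(self, event: dict[str, Any]) -> bool:
        """Apply one telemetry event; ignore it if an equal or newer value is already known."""
        name, ts, value = event["signal"], event["ts"], event["value"]
        current = self.signals.get(name)
        if current is not None and current[0] >= ts:
            return False  # late or duplicate event: keep the newer state
        self.signals[name] = (ts, value)
        return True

    def snapshot(self) -> dict[str, Any]:
        """Current state that simulation or monitoring code can read."""
        return {name: value for name, (_, value) in self.signals.items()}


twin = PowertrainTwin("powertrain-42")
stream = [
    {"signal": "rpm", "ts": 1000, "value": 2500},
    {"signal": "oil_temp_c", "ts": 1000, "value": 95.0},
    {"signal": "rpm", "ts": 3000, "value": 4200},
    {"signal": "rpm", "ts": 2000, "value": 3100},  # arrives late and is ignored
]
applied = [twin.apply(e) for e in stream]
```

Simulation code can then start virtual test runs from `twin.snapshot()` instead of from a physical test bench.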

Real-World Example:

Mercury Systems, a global aerospace and defense technology company, drives its digital thread program with Apache Kafka and Confluent. By integrating design, manufacturing, and service data across PLM, ERP, and MES systems, Mercury creates a unified view of the product life cycle. Their cloud-native streaming architecture supports real-time simulation, AI/ML processing, and model-based system engineering. This approach enables faster time to market, greater scalability, and a more agile innovation pipeline—key elements of a virtualization strategy that mirrors how digital twins operate in automotive design and testing.

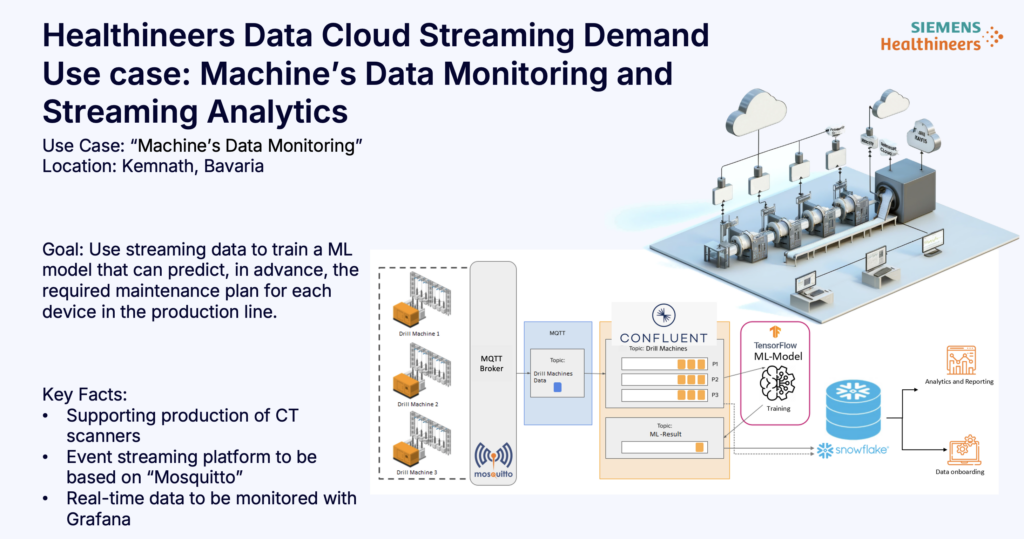

AI-Driven Disruption

Artificial Intelligence is transforming every layer of the automotive value chain—from product development and manufacturing to supply chain optimization and customer experience. AI enables predictive maintenance, dynamic logistics, autonomous driving features, and highly personalized in-car services.

Data Streaming Integration:

Apache Kafka serves as the foundational ingestion layer for high-volume telemetry and sensor data required to train and operate AI models. Apache Flink adds real-time processing, such as feature extraction, anomaly detection, and rule-based alerting, enabling intelligent systems to act instantly on events as they occur.

Use Case Example:

OEMs collect engine and sensor data in real time through Kafka. Machine learning models running on Flink or external ML platforms use this data to predict component wear. Maintenance plans are dynamically adjusted based on actual usage patterns rather than static schedules—reducing downtime and repair costs.
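As a simple stand-in for a trained ML model, a rolling z-score over recent sensor values already shows the pattern: keep a window of readings and flag values that deviate strongly from it. The window size, threshold, and vibration values in this Python sketch are hypothetical; production systems would usually call a trained model instead of this statistical rule.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Optional


class RollingZScoreDetector:
    """Flags readings that deviate strongly from the recent window of values."""

    def __init__(self, window: int = 5, threshold: float = 3.0) -> None:
        self.values = deque(maxlen=window)  # oldest value is dropped automatically
        self.threshold = threshold

    def update(self, value: float) -> Optional[float]:
        """Return the z-score if `value` is anomalous, else None; then add it to the window."""
        z = None
        if len(self.values) == self.values.maxlen:  # only score once the window is full
            mu, sigma = mean(self.values), pstdev(self.values)
            if sigma > 0:
                score = (value - mu) / sigma
                if abs(score) >= self.threshold:
                    z = score
        self.values.append(value)
        return z


detector = RollingZScoreDetector(window=5, threshold=3.0)
vibration_mm_s = [1.0, 1.1, 0.9, 1.0, 1.0, 1.05, 4.0]  # hypothetical bearing vibration
results = [detector.update(v) for v in vibration_mm_s]
```

Only the final spike is flagged; the small change from 1.0 to 1.05 stays well below the threshold.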

Real-World Example:

At Siemens Healthineers, a similar approach is used to support the manufacturing of CT scanners in Germany. Streaming data from machines on the production line is captured and monitored in real time. Using an MQTT-based ingestion layer alongside analytics tools like Grafana, Healthineers builds predictive models that determine when specific components require maintenance. This real-time data-driven approach ensures production continuity, lowers maintenance costs, and improves product reliability. The pattern is directly transferable to the automotive industry, where predictive maintenance powered by Kafka and real-time analytics can significantly boost uptime across fleets and manufacturing systems.

Reimagining the Customer Experience

Today’s car buyers and drivers expect seamless, consistent, and personalized experiences across all digital and physical touchpoints before, during, and after the vehicle purchase. The customer journey extends from the website and mobile app to the dealership and even into the vehicle itself.

Data Streaming Integration:

Apache Kafka aggregates behavioral and contextual data from multiple channels, including mobile apps, websites, in-store interactions, and in-vehicle systems. Apache Flink processes this data in real time to enable dynamic customer segmentation, recommendation engines, and event-based notifications delivered at the right moment and in the right context.

Use Case Example:

OEMs use Kafka to stream driver behavior, vehicle usage, and location data to backend systems. Flink then analyzes and scores this data to trigger hyper-personalized services. Examples include recommending nearby charging stations, sending proactive service reminders, or customizing infotainment content based on user profiles.
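The charging-station recommendation can be sketched as a function that turns a single vehicle event into an optional notification. The event fields, the 20 percent threshold, and the station list below are hypothetical; the distance uses the standard haversine formula with a mean Earth radius of 6371 km.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Station:
    name: str
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two coordinates in kilometers."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def charging_offer(event: dict, stations: list[Station], soc_threshold: float = 20.0) -> Optional[str]:
    """Turn a vehicle event into a notification only when the battery is low."""
    if event["battery_soc"] >= soc_threshold:
        return None
    nearest = min(stations, key=lambda s: haversine_km(event["lat"], event["lon"], s.lat, s.lon))
    km = haversine_km(event["lat"], event["lon"], nearest.lat, nearest.lon)
    return f"Battery at {event['battery_soc']:.0f}%: {nearest.name} is {km:.1f} km away"


stations = [Station("Station A", 48.78, 9.18), Station("Station B", 48.70, 9.00)]
offer = charging_offer({"battery_soc": 15.0, "lat": 48.775, "lon": 9.17}, stations)
no_offer = charging_offer({"battery_soc": 80.0, "lat": 48.775, "lon": 9.17}, stations)
```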

Real-World Example:

Porsche is leading this transformation with its ‘My Porsche’ digital platform, which unifies the customer experience across web, mobile, in-car systems, and physical stores. Underpinned by a streaming infrastructure called Streamzilla, Porsche operates a central data platform that spans data centers, public clouds, and global regions. This architecture enables a Customer 360 view that supports real-time personalization, omnichannel retail, and high-frequency customer engagement. By using event streaming to synchronize customer data across channels, Porsche delivers a premium digital experience that reflects the brand’s identity and enhances long-term loyalty.

New Collaboration Models

Automotive OEMs increasingly shift from vertically integrated development to collaborative ecosystems. Shared platforms, open-source projects, and partner marketplaces enable faster innovation, lower R&D costs, and access to new capabilities. Strategic collaboration across the value chain is essential—especially for non-differentiating components like navigation, connectivity, and diagnostics.

Data Streaming Integration:

Apache Kafka enables secure, real-time data exchange between OEMs, tier-1 suppliers, and software providers. Kafka topics decouple producers from consumers, so each partner can publish or consume data independently and at its own pace. Tools like Confluent Schema Registry enable data contracts that enforce compatibility and governance across teams. This model supports flexible onboarding of partners and streamlines integration in dynamic, evolving ecosystems.

Use Case Example:

EV charging providers like Virta collaborate with OEMs by exchanging real-time telemetry, pricing, and session data over shared Kafka topics. This event-driven approach allows seamless interoperability with vehicle platforms—without the need for tightly coupled integrations or proprietary APIs.

Real-World Example:

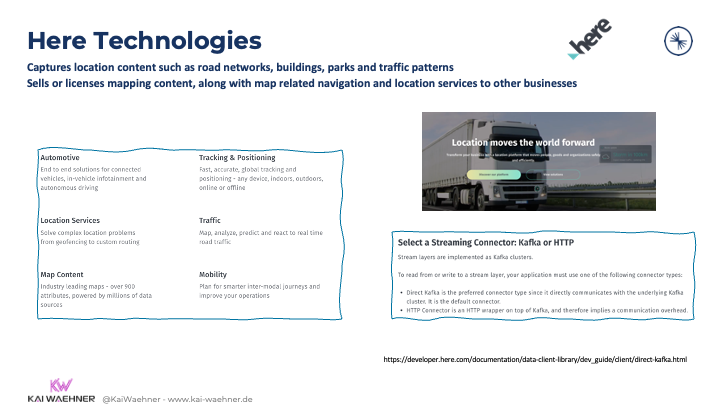

HERE Technologies is a key player in this collaborative model. The company captures detailed location data, such as road networks, building footprints, and traffic patterns, and provides navigation and mapping services to OEMs, mobility providers, and logistics platforms. HERE monetizes its data through licensing and API-based access models, while also supporting Kafka-based data streaming for high-throughput, low-latency use cases. OEMs integrate HERE's location intelligence into their infotainment, ADAS, and fleet systems via standardized APIs or Kafka-native interfaces. By exchanging location and mobility data with transportation partners in real time, HERE enables richer user experiences, more accurate navigation, and faster data-driven service development. This is a clear example of how event streaming powers modern collaboration models across the automotive ecosystem.

Reducing Operational Complexity

Automotive manufacturers are under pressure to simplify their IT environments, streamline supply chains, and optimize aftersales processes. Reducing operational complexity is essential for improving margins, increasing responsiveness, and enabling scalable innovation. Complexity often stems from fragmented data, disconnected systems, and slow information flows.

Data Streaming Integration:

Apache Kafka captures real-time events across production systems, logistics providers, and service platforms. Apache Flink processes these streams to detect SLA violations, identify material shortages, or flag quality issues before they escalate. Streaming data enables proactive coordination, faster root-cause analysis, and improved cross-functional visibility.

Use Case Example:

OEMs use Kafka-based tracking systems to monitor parts deliveries in real time. Alerts are triggered when delays or deviations occur, allowing production teams to coordinate with logistics providers and minimize downtime.
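A minimal Python sketch of such a tracker keeps open shipments from "dispatched" and "arrived" events and reports those that are past their promised arrival plus a tolerance. The event shape, shipment IDs, and 30-minute tolerance are hypothetical. In Flink, the periodic `overdue` check would typically be replaced by timers registered per shipment.

```python
from datetime import datetime, timedelta


class DeliveryTracker:
    """Tracks open part deliveries from a stream of logistics events (hypothetical event shape)."""

    def __init__(self, tolerance: timedelta = timedelta(minutes=30)) -> None:
        self.tolerance = tolerance
        self.open: dict[str, datetime] = {}  # shipment ID -> promised ETA

    def on_event(self, event: dict) -> None:
        if event["type"] == "dispatched":
            self.open[event["shipment_id"]] = event["eta"]
        elif event["type"] == "arrived":
            self.open.pop(event["shipment_id"], None)  # delivered, nothing to watch

    def overdue(self, now: datetime) -> list[str]:
        """Shipments still open more than `tolerance` after their ETA."""
        return sorted(sid for sid, eta in self.open.items() if now > eta + self.tolerance)


t0 = datetime(2025, 1, 1, 6, 0)
tracker = DeliveryTracker()
for e in [
    {"type": "dispatched", "shipment_id": "SH-1", "eta": t0 + timedelta(hours=1)},
    {"type": "dispatched", "shipment_id": "SH-2", "eta": t0 + timedelta(hours=2)},
    {"type": "arrived", "shipment_id": "SH-1"},
    {"type": "dispatched", "shipment_id": "SH-3", "eta": t0 + timedelta(minutes=30)},
]:
    tracker.on_event(e)

alerts = tracker.overdue(now=t0 + timedelta(hours=1, minutes=15))
```

At 07:15, only `SH-3` (promised for 06:30) exceeds the tolerance; `SH-2` is still within its window.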

Real-World Example:

The Twin4Trucks initiative driven by Daimler Truck and supported by the German government demonstrates how digital twins and AI can reduce operational complexity on the shop floor. The project focuses on intra-logistics, quality assurance, and production orchestration using advanced technologies such as 5G and Ultra-Wideband (UWB) for real-time location tracking. Kafka plays a critical role in data sharing across the smart factory and enterprise architecture, enabling high-speed, event-driven communication between machines, systems, and teams. Twin4Trucks is also aligned with Gaia-X compliance to ensure secure, federated data exchange beyond factory boundaries. By combining streaming infrastructure with digital twin technology, Daimler Truck reduces complexity, accelerates decision-making, and builds a foundation for scalable, intelligent manufacturing.

Data-Driven Software Development

Modern vehicle functions such as autonomous driving, infotainment, and energy optimization depend on continuous real-world data. Traditional development cycles are being replaced by data-driven approaches that support real-time feedback, simulation, and over-the-air updates.

Data Streaming Integration:

Apache Kafka collects high-frequency data from ADAS and in-vehicle systems. Apache Flink processes this data in motion to power simulation, ML pipelines, and Over-the-Air (OTA) workflows to enable faster and smarter software updates.

Use Case Example:

OEMs use Kafka to stream driver behavior and road condition data for real-time analysis, continuously improving ADAS features and vehicle performance via OTA updates.

Real-World Example:

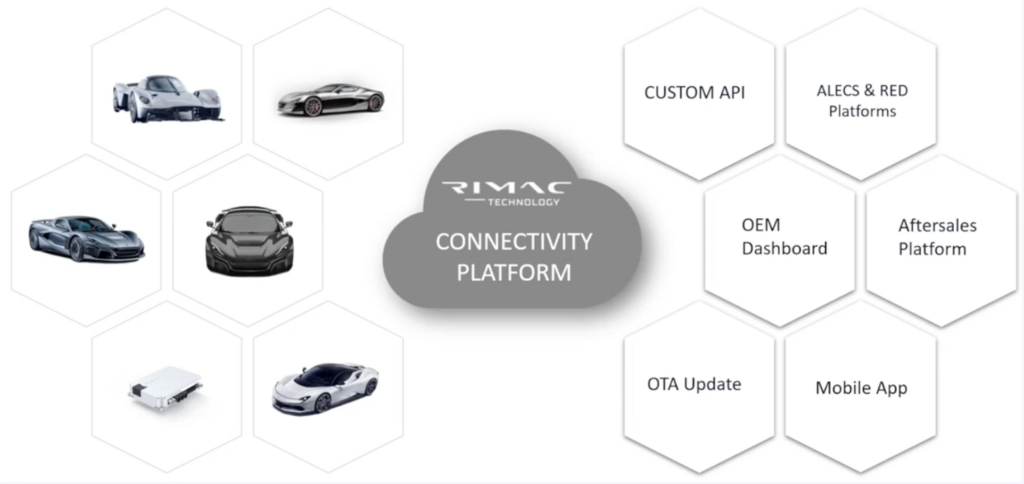

Rimac Technology uses MQTT and Apache Kafka on AWS to build a two-way connectivity platform between connected cars and the cloud. The platform connects vehicle components such as battery packs, electric drive units (EDUs), and electronic control units (ECUs), as well as software platforms, and also supports EV charging. It powers an OEM dashboard for fleet insights and OTA management, and the Nevera app for live data previews, vehicle health monitoring, and remote control. Kafka serves as the central backbone, powering agile development and real-time intelligence across the vehicle lifecycle.

MQTT combined with Kafka enables low-latency, bidirectional communication for operational use cases—such as command and control—not just unidirectional ingestion into analytical layers like data lakes.
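Vehicles usually publish to hierarchical MQTT topics, and a bridge (for example an MQTT connector for Kafka Connect or an MQTT proxy) maps them to Kafka records. The Python sketch below shows one possible mapping in both directions; the topic layout `vehicles/<vin>/<channel>` and the Kafka topic names are hypothetical conventions, not Rimac's or Confluent's. Keying records by VIN means Kafka's default partitioner sends each vehicle's events to the same partition (while the partition count is unchanged), which preserves their order.

```python
from typing import NamedTuple, Tuple


class KafkaRecord(NamedTuple):
    topic: str
    key: str
    value: bytes


# Hypothetical naming convention: MQTT channel -> Kafka topic.
UPLINK_ROUTES = {"telemetry": "vehicle-telemetry", "status": "vehicle-status"}


def mqtt_to_kafka(mqtt_topic: str, payload: bytes) -> KafkaRecord:
    """Uplink: vehicles/<vin>/<channel> becomes a record on the channel's topic, keyed by VIN."""
    parts = mqtt_topic.split("/")
    if len(parts) != 3 or parts[0] != "vehicles" or parts[2] not in UPLINK_ROUTES:
        raise ValueError(f"unroutable MQTT topic: {mqtt_topic}")
    vin, channel = parts[1], parts[2]
    # Same key -> same partition, so each vehicle's events stay in order.
    return KafkaRecord(UPLINK_ROUTES[channel], vin, payload)


def kafka_command_to_mqtt(record: KafkaRecord) -> Tuple[str, bytes]:
    """Downlink: a command record keyed by VIN goes to that vehicle's command topic."""
    return f"vehicles/{record.key}/commands", record.value


rec = mqtt_to_kafka("vehicles/VIN123/telemetry", b'{"soc": 81}')
cmd = kafka_command_to_mqtt(KafkaRecord("vehicle-commands", "VIN123", b'{"action": "precondition"}'))
```

The downlink path is what turns the platform from a one-way ingestion pipeline into a command-and-control channel.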

Self-Driving Cars and Autonomous Systems

Autonomous driving is one of the most ambitious and complex challenges in the automotive world. The core vehicle functions—such as perception, sensor fusion, and motion planning—run locally on embedded systems using deterministic, low-latency technologies like C++ or Rust. These systems operate in hard real time and are safety-critical. They are not built on data streaming platforms like Kafka.

However, everything surrounding the self-driving stack—from fleet operations to analytics, simulation, and customer experience—relies on high-throughput, low-latency data infrastructure. This is where Apache Kafka and Apache Flink play a critical role.

Data Streaming Integration:

Apache Kafka collects and distributes data across connected vehicles, backend systems, and development environments. This includes driving logs, diagnostic information, navigation events, and performance metrics. Apache Flink enables stream processing on this data to power real-time dashboards, simulate driving scenarios, detect anomalies, or trigger business workflows.

Use Case Examples:

- Uploading sensor metadata from vehicles to support simulation and offline model validation

- Analyzing driver interventions and vehicle handovers to improve fleet safety

- Streaming location and usage data to predict maintenance needs

- Sending real-time alerts to operations teams about vehicle health or environmental hazards

- Delivering contextual offers or messages to users during rides or at trip completion

Real-World Example:

Connected car platforms at OEMs like Audi and Rimac use Apache Kafka as the central backbone for streaming telemetry, enabling OTA software updates, and coordinating fleet operations in real time. Similarly, ride-hailing and autonomous mobility companies such as Uber, Lyft, Grab, and FreeNow rely on Kafka to power dispatching, trip tracking, driver-rider coordination, and large-scale telemetry ingestion.

In short, while embedded autonomy logic lives in the vehicle, everything else—mapping, monitoring, operations, customer engagement—is powered by event-driven data streaming.

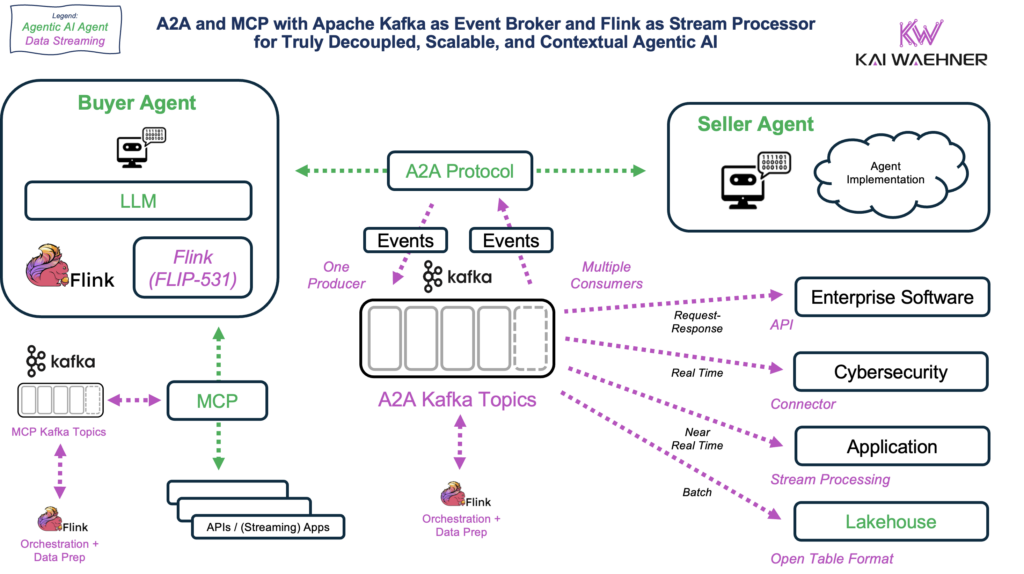

Why Data Streaming Is Essential for Agentic AI in the Automotive Industry

The automotive industry is entering the age of agentic AI: autonomous systems that reason, learn, and adapt in real time. These AI agents will power a range of systems, for example:

- Self-healing production systems use streaming data from sensors, machines, and quality control systems to detect issues early, trigger corrective actions, and optimize manufacturing processes without human intervention.

- Integration between internal ERP systems and external supplier platforms enables real-time B2B collaboration, allowing automotive companies to synchronize orders, inventories, and delivery schedules dynamically across the value chain.

- Self-optimizing EVs use agentic AI to adapt powertrain settings in real time based on driving behavior, road conditions, and energy usage — improving range and performance automatically while reducing battery degradation.

- Dynamic customer engagement platforms stream behavioral and contextual data from vehicles, mobile apps, and digital channels to deliver personalized experiences, proactive service offers, and real-time updates to drivers and fleet managers.

- Real-time fleet coordination and safety systems process continuous vehicle location, telemetry, and traffic data to reroute vehicles, manage load distribution, and respond instantly to safety events or environmental hazards.

But none of this is possible without access to high-quality, real-time data.

Data streaming with Apache Kafka and Flink forms the real-time nervous system for these intelligent automotive systems. It ensures every system, service, and user can react to the world as it changes: not hours or days later, but now.

To remain competitive, automotive leaders must adopt data streaming as a strategic priority. It’s the backbone of every AI initiative, every customer interaction, and every operational process.

Further Reading:

- The Data Streaming Landscape for Automotive and Manufacturing

- Apache Kafka in Manufacturing at Automotive Tier 1 Supplier Brose for Industrial IoT Use Cases

- How Data Streaming with Kafka and Safety-Critical Hard Real-Time System Work Together

- How Apache Kafka and Flink Power Event-Driven Agentic AI in Real Time
