Before the Covid pandemic, I had the pleasure of visiting “Motor City” Detroit in November 2019. I met with several automotive companies, suppliers, startups, and cloud providers to discuss use cases and architectures around Apache Kafka. A lot has happened. Since then, I have also met several OEMs and suppliers in Europe and Asia. As I finally go back to Detroit this January 2022 to meet customers again, I thought it would be a good time to update the status quo of event streaming and Apache Kafka in the automotive and manufacturing industry.

Today, in 2022, Apache Kafka is the central nervous system of many applications in various areas related to the automotive and manufacturing industry for processing analytical and transactional data in motion across edge, hybrid, and multi-cloud deployments. This article explores the automotive event streaming landscape, including connected vehicles, smart manufacturing, supply chain optimization, aftersales, mobility services, and innovative new business models.

The Event Streaming Landscape for Automotive and Manufacturing

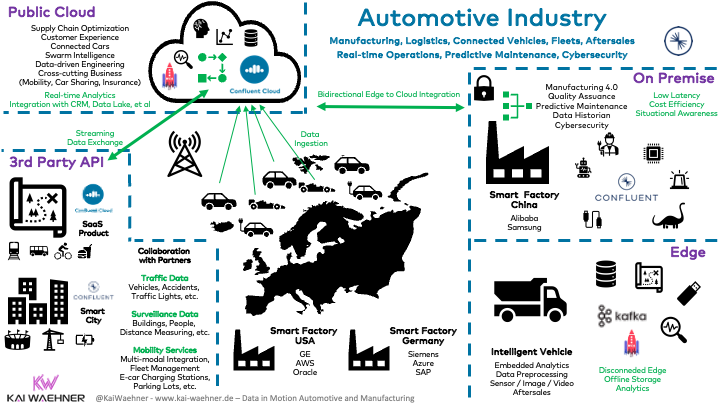

Every business domain leverages Event Streaming with Apache Kafka in the automotive and manufacturing industry. Data in motion helps everywhere. The infrastructure and deployment differ depending on the use case and requirements. I have seen everything at carmakers and manufacturers across the globe:

- Cloud-first strategy with all new business applications in the public cloud deployed and connected across regions and even continents

- Hybrid integration scenarios between legacy applications in the data center and modern cloud-native services in the public cloud

- Edge computing in a smart factory for low latency, cost-efficient data processing, and cybersecurity

- Embedded Kafka brokers in machines and vehicles at the disconnected edge

This spread of use cases is impressive. The following diagram depicts a high-level overview:

The following sections describe the automotive and manufacturing landscape for event streaming in more detail:

- Manufacturing 4.0

- Supply Chain Optimization

- Mobility Services

- New Business Models

If you are mainly interested in real-world Kafka deployments with examples from BMW, Porsche, Audi, Tesla, and other OEMs, check out the article “Real-World Deployments of Kafka in the Automotive Industry”.

If you want to understand why Kafka makes such a difference in automotive and manufacturing, check out the article “Apache Kafka in the Automotive Industry”. This article explores the business motivation for these game-changing concepts of data in motion for the digitalization of the automotive industry.

Before you read the sections below, I want to emphasize that Kafka is not a silver bullet for every problem. “When NOT to use Apache Kafka?” digs deep into this discussion.

I keep the following sections relatively short to give a high-level overview. Each section contains links to more deep-dive articles about the topics.

Manufacturing 4.0

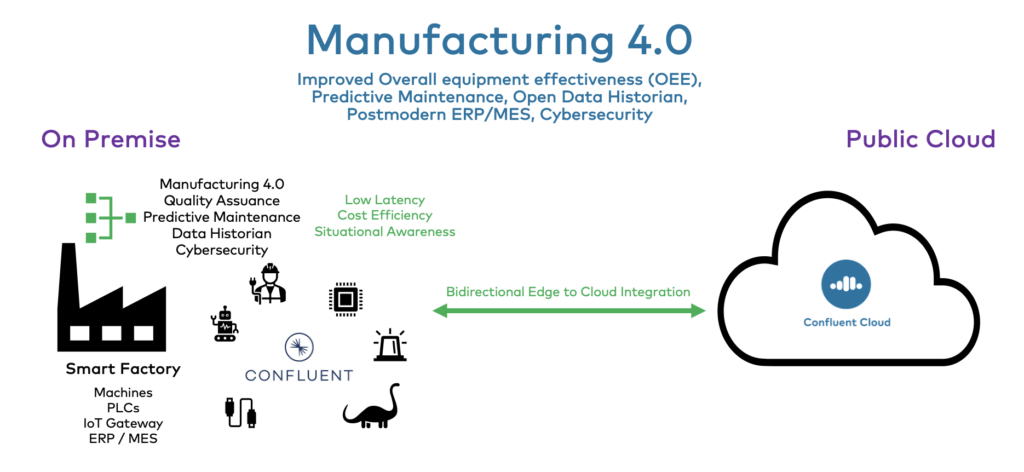

Industrial IoT (IIoT), also known as Industry 4.0, changes how the shop floor and production lines produce goods. Automation, process efficiency, and a much better Overall Equipment Effectiveness (OEE) enable cost reduction and flexibility in the production process:

Smart Factory

A smart factory is not necessarily a newly built building like a Tesla Gigafactory. Many enterprises install smart technology, like networked sensors for temperature or vibration measurements, in old factories. Improving the Overall Equipment Effectiveness (OEE) is the primary goal of most use cases. Many scenarios leverage Kafka for continuously processing sensor and telemetry data in motion:

- Connectivity to machines with modern, open technologies such as MQTT

- Visualization and monitoring of equipment and assets (often called Digital Twin or Digital Thread)

- Quality assurance such as condition monitoring with stateless stream processing

- Predictive maintenance of machines, robots, and production lines with stateful streaming analytics and machine learning

- Surveillance and safety-critical video monitoring by processing images and videos

- Cybersecurity for situational awareness and threat intelligence

- Smart Buildings for maintenance and operations, smarter energy consumption, optimized space usage, and a better employee experience
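To make the difference between stateless and stateful processing from the list above more tangible, here is a minimal sketch in plain Python. It does not use Kafka or any stream processing library; a list of dictionaries stands in for a topic of sensor events. The field names, thresholds, and the simple moving-average rule are assumptions chosen for illustration, not taken from a real deployment.

```python
from collections import defaultdict, deque
from statistics import mean

# Hypothetical thresholds, chosen only for illustration.
MAX_TEMPERATURE_C = 90.0
VIBRATION_WINDOW = 3
MAX_AVG_VIBRATION_MM_S = 7.0


def temperature_alert(event):
    """Stateless check: the decision depends only on the current event."""
    return event["temperature_c"] > MAX_TEMPERATURE_C


class VibrationMonitor:
    """Stateful check: keeps a sliding window of readings per machine."""

    def __init__(self, window=VIBRATION_WINDOW, limit=MAX_AVG_VIBRATION_MM_S):
        self.limit = limit
        self.windows = defaultdict(lambda: deque(maxlen=window))

    def update(self, event):
        window = self.windows[event["machine_id"]]
        window.append(event["vibration_mm_s"])
        # Only judge a full window, so a single spike does not raise an alert.
        return len(window) == window.maxlen and mean(window) > self.limit


def process(events):
    """Yield (machine_id, alert_type) for every rule that fires."""
    monitor = VibrationMonitor()
    for event in events:
        if temperature_alert(event):
            yield event["machine_id"], "temperature"
        if monitor.update(event):
            yield event["machine_id"], "vibration_trend"


if __name__ == "__main__":
    stream = [
        {"machine_id": "press-1", "temperature_c": 71.0, "vibration_mm_s": 6.0},
        {"machine_id": "press-1", "temperature_c": 95.5, "vibration_mm_s": 7.5},
        {"machine_id": "press-1", "temperature_c": 72.0, "vibration_mm_s": 8.5},
    ]
    for alert in process(stream):
        print(alert)
```

In a real pipeline, the stateless rule would map to a simple filter, while the windowed rule needs state that survives restarts, which is where a stream processing framework earns its keep.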

Legacy Modernization with Open APIs and Hybrid Cloud

Factories exist for decades after they are built. Digitalization and the modernization of legacy technologies are some of the biggest challenges in IIoT projects. Such an initiative usually includes several tasks:

- Complex integration with proprietary legacy protocols such as Siemens S7, Allen-Bradley, and Modbus, for instance, with a dedicated open-source framework such as Apache PLC4X running on Kafka Connect

- Simple integration with open standards such as HTTP and REST-based web services and a REST Proxy for Kafka

- Deployment of a modern, open, scalable data historian replacing or complementing monolithic, proprietary data historians

- Postmodern MES and ERP architectures upgrading or replacing legacy proprietary non-scalable MES and ERP systems, for instance, the integration between legacy SAP systems and Kafka

- Lift and shift from on-premise data centers to the public cloud with hybrid synchronization and replication using the Kafka protocol and cluster linking
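As an illustration of the HTTP-based integration mentioned in the list above, the following sketch builds a produce request in the shape expected by the Confluent REST Proxy v2 API (`POST /topics/{topic}` with the `application/vnd.kafka.json.v2+json` content type). The article does not name a specific REST Proxy, so treating it as the Confluent one is an assumption, as are the host, port, topic name, and record fields. The request is only constructed, not sent, so the example runs without a Kafka environment.

```python
import json
from urllib.request import Request

# Assumptions for illustration: a REST Proxy on localhost:8082 and a topic
# named "machine-telemetry". Neither comes from the article.
REST_PROXY_URL = "http://localhost:8082"
TOPIC = "machine-telemetry"


def build_produce_request(machine_id, reading, base_url=REST_PROXY_URL, topic=TOPIC):
    """Build (but do not send) a REST Proxy v2 produce request for one record."""
    payload = {"records": [{"key": machine_id, "value": reading}]}
    return Request(
        url=f"{base_url}/topics/{topic}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/vnd.kafka.json.v2+json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_produce_request("press-1", {"temperature_c": 71.0})
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # Sending it would be urllib.request.urlopen(request), given a reachable proxy.
```

The appeal of this route is that any legacy application able to issue an HTTP call can publish events without a native Kafka client.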

Continuous Data-driven Engineering and Product Development

Last but not least, an opportunity many people underestimate: Continuous data streaming with Kafka enables new possibilities in software engineering and product development for IoT and automotive projects.

For instance, developing and deploying the “big loop” for machine learning of advanced driver-assistance systems (ADAS) or self-driving functions based on sensor data from the fleet is a new way of software engineering. Tesla’s Kafka-based data platform is a fantastic example. A related use case in engineering is the ingestion of sensor data during and after test drives.

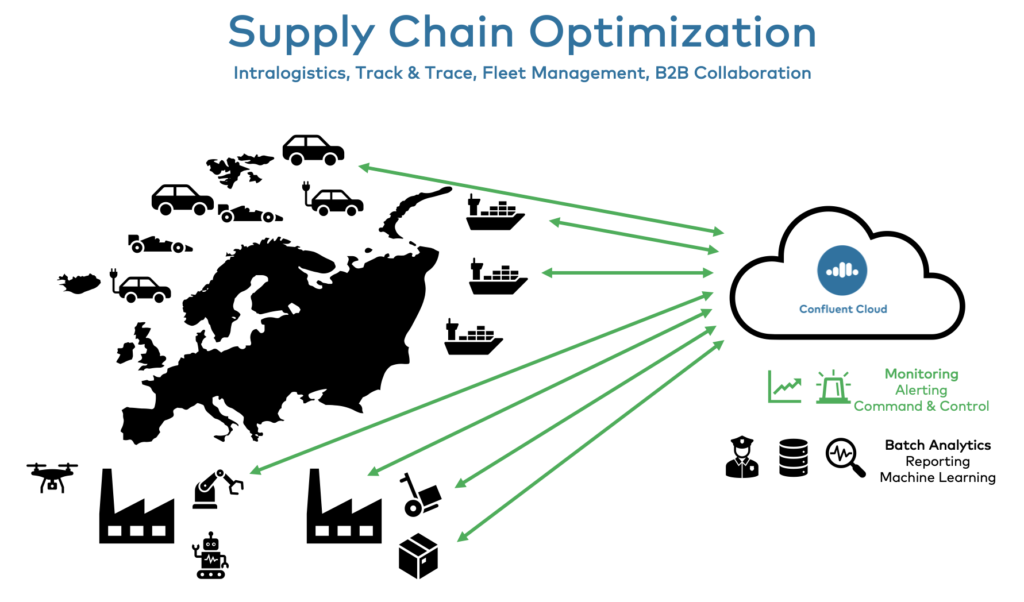

Supply Chain Optimization

Supply chain processes and solutions are very complex. The Covid pandemic showed how only flexible enterprises could survive, stay profitable, and provide a great customer experience, even in disastrous external events.

Here are the top 5 critical challenges of supply chains:

- Time Frames are Shorter

- Rapid Change

- Zoo of Technologies and Products

- Historical Models are No Longer Viable

- Lack of visibility

Only real-time data streaming and correlation solve these supply chain challenges end-to-end across regions and companies:

I covered Supply Chain Management (SCM) optimization with Apache Kafka in a separate, detailed blog post. Check it out to learn about real-world supply chain use cases from Bosch, BMW, Walmart, and other companies.

Intra-logistics and Global Distribution Networks

Logistics and supply chains within a factory, distribution center, or store require real-time data integration and processing to provide efficient processing of goods and a great customer experience. Batch processes or manual interaction by human workers cannot implement these use cases. Examples include:

- Real-Time Locating System (RTLS) for transportation and logistics within a building to monitor robots, driverless transport systems (DTS), and manual processes for improved safety, controlled security, and optimized operations and productivity

- Inventory management for optimized and customized production processes with a better B2B integration in real-time – the same story and benefits as in a modern omnichannel retail architecture

- Globally distributed networks powered by a global Kafka infrastructure

- Augmented reality for the digitalization and automation of worker tasks

Track & Trace and Fleet Management

Real-time logistics is a game-changer for fleet management and track & trace use cases. The assets to manage and track include:

- Commercial motor vehicles such as cars, vans, trucks, specialist vehicles (such as mobile construction machinery), forklifts, and trailers

- Private vehicles used for work (the ‘grey fleet’)

- Aviation machinery such as aircraft (planes and helicopters)

- Ships

- Rail cars

- Non-powered assets such as generators, tanks, gearboxes

None of the following aspects are new. The difference is that event streaming allows these tasks to be executed continuously in real time to act on new information in motion:

- Visualization

- Location-based services

- Routing and navigation

- Estimated time of arrival

- Alerting

- Proactive recalculation

- Monitoring of the assets and mechanical components of a vehicle
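The idea of continuous recalculation can be sketched in a few lines of plain Python: every incoming position event triggers a fresh estimated time of arrival and a delay check. This is a deliberately crude sketch. It assumes straight-line (great-circle) distance instead of real routing, a constant current speed, and hypothetical event fields such as `minutes_since_departure`; a stationary vehicle is simply flagged as delayed.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two coordinates in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def eta_minutes(event, destination):
    """Remaining minutes at the current speed, or None if the vehicle stands still."""
    if event["speed_kmh"] <= 0:
        return None
    distance = haversine_km(event["lat"], event["lon"], *destination)
    return distance / event["speed_kmh"] * 60


def track(events, destination, promised_minutes):
    """Yield (vehicle_id, eta, delayed) for each position event as it arrives."""
    for event in events:
        eta = eta_minutes(event, destination)
        elapsed = event["minutes_since_departure"]
        # Assumption: a stationary vehicle (eta is None) counts as delayed.
        delayed = eta is None or elapsed + eta > promised_minutes
        yield event["vehicle_id"], eta, delayed


if __name__ == "__main__":
    destination = (0.0, 1.0)  # hypothetical (lat, lon)
    positions = [
        {"vehicle_id": "truck-7", "lat": 0.0, "lon": 0.0,
         "speed_kmh": 60.0, "minutes_since_departure": 0},
        {"vehicle_id": "truck-7", "lat": 0.0, "lon": 0.5,
         "speed_kmh": 20.0, "minutes_since_departure": 60},
    ]
    for vehicle_id, eta, delayed in track(positions, destination, 120):
        print(vehicle_id, eta, delayed)
```

The same loop is where alerting or proactive rerouting would hook in: the decision is made per event, not in a nightly batch.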

Most companies have a cloud-first strategy for building such a platform. However, some cases require edge computing, either via a local 5G location for low-latency use cases or via embedded Kafka brokers for disconnected data collection and analytics within the vehicles.

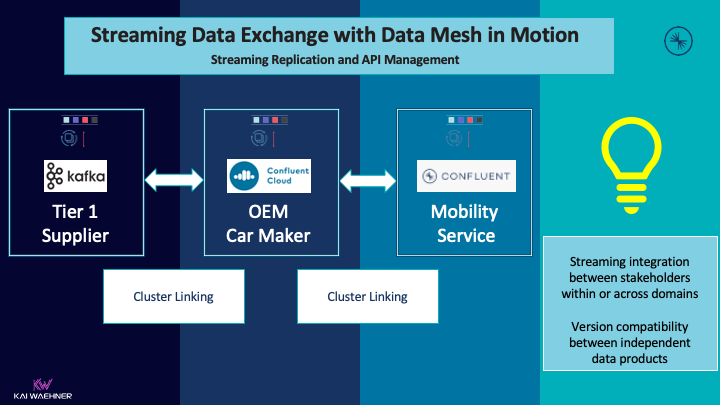

Streaming Data Exchange for B2B Collaboration with Partners

Real-time data is not just relevant within a company. OEMs and Tier 1 and Tier 2 suppliers benefit in the same way from data streams. The same is true for car dealerships, end customers, and any other consumer of the data. Hence, a clear trend in the market is the emergence of a Kafka-based streaming data exchange across companies to build a data mesh.

I have often seen this situation in the past: The OEM leverages event streaming. The Tier 1 supplier leverages event streaming. The ERP solution in use is built on Kafka, too. All leverage the capabilities of scalable real-time data streaming. In that situation, it makes little sense to integrate with partners and software vendors via web service APIs, such as SOAP or HTTP/REST. Instead, a streaming interface is a natural choice to hand streaming data to partners.

The following example from the automotive industry shows how independent stakeholders (= domains in different enterprises) use a cross-company streaming data exchange:

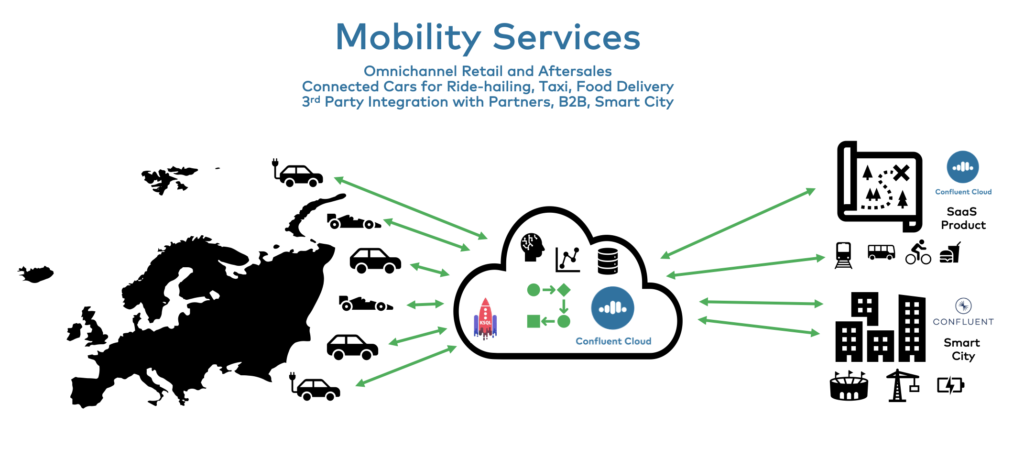

Mobility Services

Every OEM, supplier, or innovative startup in the automotive space thinks about providing a mobility service either on top of the goods they sell or as an independent service.

Most mobility services you use in mobile apps today, for business or privately, are only possible because of a scalable real-time backbone powered by event streaming:

The possibilities for mobility services are endless. A few examples that are mainstream today already:

- Omnichannel retail and aftersales to buy additional car features online, for instance, more power, seat heater, up-to-date navigation, self-driving software (okay, the latter one is not mainstream yet, but Tesla shows where it goes)

- Connected Cars for ride-hailing, scooter rental, taxi services, food delivery

- Third-party integration for adding services that a company does not want to build itself

Today’s most successful and widely adopted mobility services are independent of a specific carmaker or supplier.

Examples of prominent Kafka-powered consumer mobility services are Uber and Lyft in the US, Grab in Asia, and FREENOW in Europe. Here Technologies is an excellent example of a B2B mobility service providing mapping information so that companies can build new or improve existing applications on top of it.

A good starting point to learn more is my blog post about Apache Kafka and MQTT for mobility services and transportation.

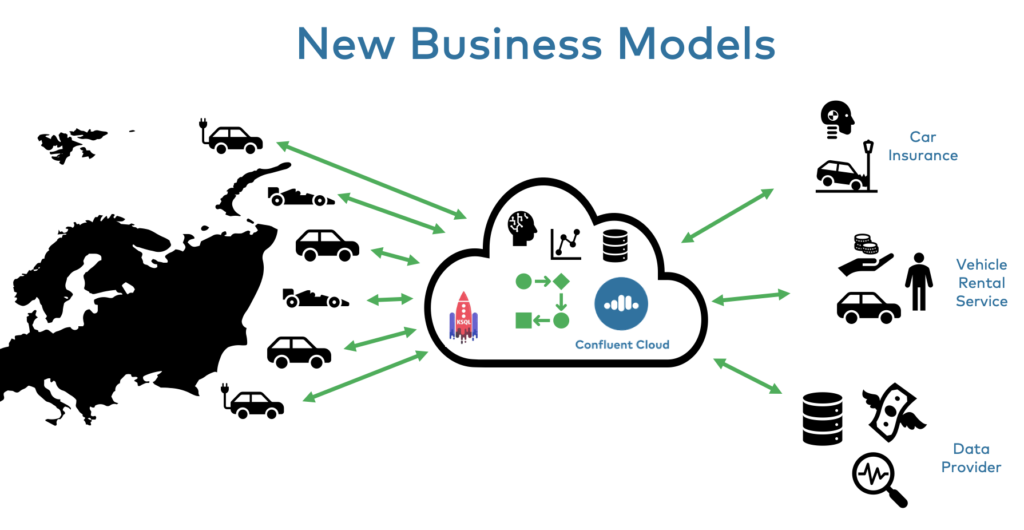

New Business Models

The access to real-time data enables companies to build entirely new business models on top of their existing products:

A few examples:

- Next-generation car rental with excellent customer experience, context-specific coupons, loyalty platform, and car rental fleets with other services from the carmaker.

- Reinventing car insurance with driver-specific pricing based on real-time analysis of each driver’s behavior, instead of legacy approaches that use statistical models with attributes like driver age, number of past accidents, etc.

- Data provider for monetization enables other companies to build new business models with your car data – for instance, working with a government to make a smart city traffic system or a mobility service startup to analyze and correlate car data across OEMs.
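The insurance example above boils down to a per-driver aggregation that is updated with every driving event. The sketch below shows that shape in plain Python. All numbers (base premium, thresholds, the pricing formula) and event fields are invented for illustration and do not reflect how any insurer actually prices policies.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical pricing parameters, invented for this sketch.
BASE_MONTHLY_PREMIUM = 100.0
HARSH_BRAKING_MS2 = -4.0
SPEEDING_TOLERANCE_KMH = 10.0


@dataclass
class DriverStats:
    distance_km: float = 0.0
    risky_events: int = 0


def is_risky(event):
    """Flag harsh braking or driving clearly above the speed limit."""
    harsh_braking = event["acceleration_ms2"] <= HARSH_BRAKING_MS2
    speeding = event["speed_kmh"] > event["speed_limit_kmh"] + SPEEDING_TOLERANCE_KMH
    return harsh_braking or speeding


def update(stats, event):
    """Fold one driving event into the running per-driver statistics."""
    driver = stats[event["driver_id"]]
    driver.distance_km += event["distance_km"]
    if is_risky(event):
        driver.risky_events += 1
    return driver


def monthly_premium(driver):
    """Scale the base premium by risky events per 100 km, within fixed bounds."""
    if driver.distance_km == 0:
        return BASE_MONTHLY_PREMIUM
    risky_per_100km = driver.risky_events / driver.distance_km * 100
    factor = min(1.5, max(0.8, 0.8 + 0.1 * risky_per_100km))
    return round(BASE_MONTHLY_PREMIUM * factor, 2)


if __name__ == "__main__":
    stats = defaultdict(DriverStats)
    events = [
        {"driver_id": "d1", "distance_km": 50.0, "acceleration_ms2": -1.0,
         "speed_kmh": 48.0, "speed_limit_kmh": 50.0},
        {"driver_id": "d1", "distance_km": 50.0, "acceleration_ms2": -5.5,
         "speed_kmh": 80.0, "speed_limit_kmh": 100.0},
    ]
    for event in events:
        driver = update(stats, event)
        print(event["driver_id"], monthly_premium(driver))
```

The point is the update-per-event pattern: the price signal follows actual behavior continuously instead of being derived once from static attributes.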

This evolution is just the beginning of the usage of streaming data. I have seen many customers build a first streaming pipeline for one use case. However, new business divisions will leverage the data for innovations when the platform is there.

The Data is in Motion in Automotive and Manufacturing

The landscape for Apache Kafka in the automotive and manufacturing industry shows that Apache Kafka is the central nervous system of many applications in various areas for processing analytical and transactional data in motion.

This article explored use cases such as connected vehicles, smart manufacturing, supply chain optimization, aftersales, mobility services, and innovative new business models. The possibilities for data in motion are almost endless. The automotive and manufacturing industry is still in the very early stages of leveraging data in motion.

Where do you use Apache Kafka and its ecosystem in the automotive and manufacturing industry? Do you deploy in the public cloud, in your data center, or at the edge outside a data center? What other technologies do you combine with Kafka? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.