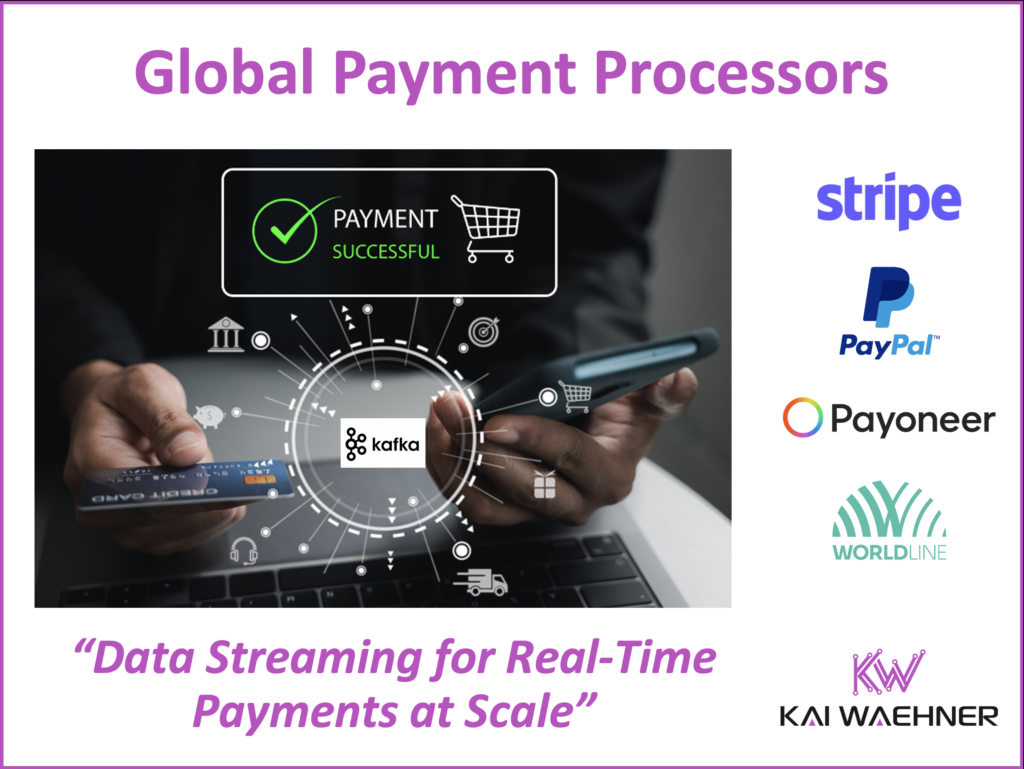

The recent announcement that Global Payments will acquire Worldpay for $22.7 billion has once again put the spotlight on the payment processing space. This move consolidates two giants and signals the growing importance of real-time, global payment infrastructure. But behind this shift is something deeper: data streaming has become the backbone of modern payment systems.

From Stripe’s 99.9999% Kafka availability to PayPal streaming over a trillion events per day, and Payoneer replacing its existing message broker with data streaming, the world’s leading payment processors are redesigning their core systems around streaming technologies. Even companies like Worldline, which developed its own Apache Kafka management platform, have made Kafka central to their financial infrastructure.

Payment processors operate in a world where every transaction must be authorized, routed, and verified in milliseconds, and then settled reliably. These are not just financial operations; they are critical real-time data pipelines. From fraud detection and currency conversion to ledger updates and compliance checks, the entire value chain depends on streaming architectures to function without delay.

This transformation highlights how data streaming is not just an enabler but a core requirement for building fast, secure, and intelligent payment experiences at scale.

Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. And make sure to download my free book about data streaming use cases. It features multiple use cases across financial services.

What Is a Payment Processor?

A payment processor is a financial technology company that moves money between the customer, the merchant, and the bank. It verifies and authorizes transactions, connects with card networks, and ensures settlement between parties.

In other words, it acts as the middleware that powers digital commerce—both online and in-person. Whether swiping a card at a store, paying through a mobile wallet, or making an international wire transfer, a payment processor is often involved.

Key responsibilities include:

- Validating payment credentials

- Performing security and fraud checks

- Routing payment requests to the right financial institution

- Confirming authorization and handling fund settlement
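To make these responsibilities more concrete, here is a minimal Python sketch of an authorization flow. It is an illustration only, not how any specific processor works: the issuer routing table, the single amount-limit fraud rule, and the always-approving issuer are hypothetical stand-ins. Only the Luhn check-digit validation is a real, widely used technique. Settlement happens later and is not shown.

```python
from dataclasses import dataclass

# Hypothetical routing table: first digit of the card number -> issuing institution.
ISSUER_BY_PREFIX = {"4": "visa-issuer-bank", "5": "mastercard-issuer-bank"}


@dataclass
class PaymentRequest:
    payment_id: str
    card_number: str
    amount_cents: int
    currency: str


def luhn_valid(card_number: str) -> bool:
    """Validate the card number's check digit with the Luhn algorithm."""
    digits = [int(ch) for ch in card_number if ch.isdigit()]
    if len(digits) < 12:
        return False
    total = 0
    # Walk from the rightmost digit; double every second digit.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def authorize(req: PaymentRequest, fraud_limit_cents: int = 500_000) -> str:
    # 1. Validate payment credentials.
    if not luhn_valid(req.card_number):
        return "DECLINED: invalid card number"
    # 2. Security and fraud check (placeholder: a single amount limit).
    if req.amount_cents > fraud_limit_cents:
        return "DECLINED: fraud check"
    # 3. Route the request to the right financial institution.
    issuer = ISSUER_BY_PREFIX.get(req.card_number[:1])
    if issuer is None:
        return "DECLINED: no route to issuer"
    # 4. Confirm authorization (simulated: the hypothetical issuer always approves).
    return f"AUTHORIZED by {issuer}"
```

For example, `authorize(PaymentRequest("p-1", "4111111111111111", 2_500, "USD"))` returns `"AUTHORIZED by visa-issuer-bank"`, because 4111111111111111 is a common test card number that passes the Luhn check.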

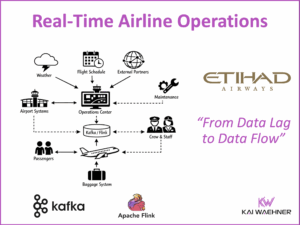

Top Payment Processors

Based on transaction volume, global presence, and market impact, here are some of the largest players today:

- FIS / Worldpay – Enterprise focus, global reach

- Global Payments – Omnichannel, merchant services

- Fiserv / First Data – Legacy integration, banks & retailers

- PayPal – Global digital wallet and checkout

- Stripe – API-first, developer-friendly platform

- Adyen – Unified commerce for large enterprises

- Block (Square) – SME focus, hardware + software

- Payoneer – Cross-border B2B payments

- Worldline – Strong European presence

Each of these companies operates at massive scale, handling millions of transactions per day. Their infrastructure needs to be secure, reliable, and real-time. That’s where data streaming comes in.

Payment Processors in the Context of Financial Services

Payment processors don’t work in isolation. They integrate with a wide range of:

- Banking systems: to access accounts and settle funds

- Card networks: such as Visa, Mastercard, Amex

- B2B applications: such as ERP, POS, and eCommerce platforms

- Fintech services: including lending, insurance, and digital wallets

This means their systems must support real-time messaging, low latency, and fault tolerance. Downtime or errors can mean financial loss, customer churn, or even regulatory fines.

Why Payment Processors Use Data Streaming

Traditional batch processing isn’t good enough in today’s payment ecosystem. There are 20+ problems and challenges with batch processing.

Financial services must react in real time to critical events such as:

- Payment authorizations

- Suspicious transaction alerts

- Currency conversion requests

- API-based microtransactions

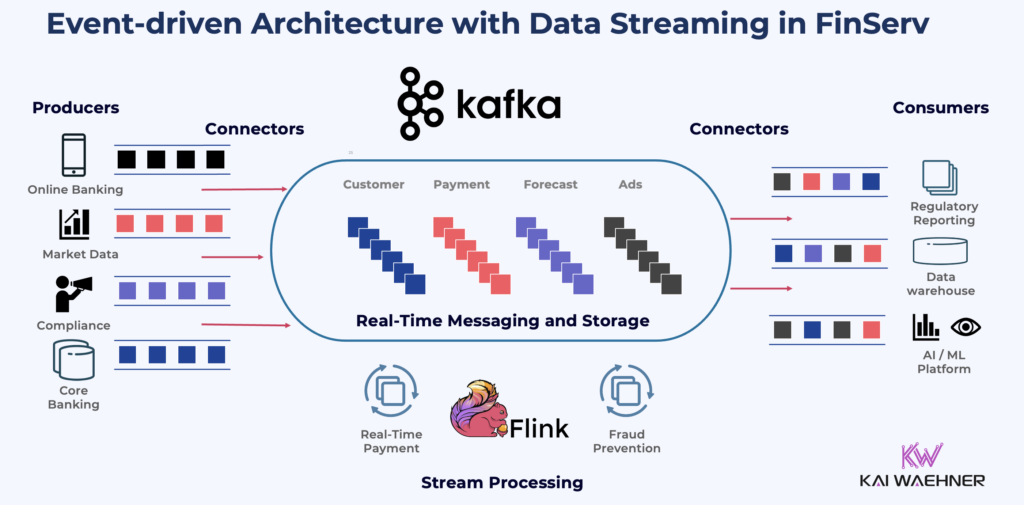

In this context, Apache Kafka and Apache Flink, often deployed via Confluent, are becoming foundational technologies.

A data streaming platform enables an event-driven architecture, where each payment, customer interaction, or system change is treated as a real-time event. This model supports decoupling of services, meaning systems can scale and evolve independently—an essential feature in mission-critical environments.
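The decoupling idea can be illustrated with a toy, in-memory stand-in for a single Kafka topic partition. This is not the Kafka client API: `EventLog`, `publish`, and `poll` are hypothetical names. What it does show is the core property of a log: producers only append, and each consuming service tracks its own read position, so a fraud service, a ledger service, or an analytics job added months later can each process the same payment events independently.

```python
from collections import defaultdict


class EventLog:
    """Toy stand-in for one topic partition: an append-only list plus per-consumer offsets."""

    def __init__(self):
        self.events = []
        self.offsets = defaultdict(int)  # consumer name -> next offset to read

    def publish(self, event: dict) -> int:
        """Append an event and return its offset; the producer knows nothing about consumers."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, consumer: str, max_events: int = 100) -> list:
        """Return the next batch for this consumer and advance only its own offset."""
        start = self.offsets[consumer]
        batch = self.events[start:start + max_events]
        self.offsets[consumer] = start + len(batch)
        return batch


log = EventLog()
log.publish({"type": "PaymentAuthorized", "payment_id": "p-1", "amount_cents": 1_000})
log.publish({"type": "PaymentAuthorized", "payment_id": "p-2", "amount_cents": 2_500})

fraud_batch = log.poll("fraud-service")    # reads both events
ledger_batch = log.poll("ledger-service")  # independently reads the same two events
```

Because offsets are per consumer, adding or removing a consuming service never requires a change on the producing side.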

The Benefits of Apache Kafka and Flink for Payments

Apache Kafka is the de facto backbone for modern payment platforms because it supports the real-time, mission-critical nature of financial transactions. Together with Apache Flink, it enables a data streaming architecture that meets the demands of today’s fintech landscape.

Key benefits include:

- Low latency – Process events in milliseconds

- High throughput – Handle millions of transactions per day

- Scalability – Easily scale across systems and regions

- High availability and failover – Ensure global uptime and disaster recovery

- Exactly-once semantics – Maintain financial integrity and avoid duplication

- Event sourcing – Track every action for audit and compliance

- Decoupled services – Support modular, microservices-based architectures

- Data reusability – Stream once, use across multiple teams and use cases

- Scalable integration – Bridge modern APIs with legacy banking systems
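Event sourcing, listed above, means the current state is derived from the full history of events instead of being stored as the only copy. A minimal Python sketch, with hypothetical event types and account names, shows why this helps audits: replaying a prefix of the history reconstructs the state as of any earlier point.

```python
from dataclasses import dataclass

# Hypothetical event types and their effect on an account balance.
SIGN = {"deposit": 1, "withdrawal": -1, "fee": -1}


@dataclass(frozen=True)
class AccountEvent:
    account_id: str
    kind: str  # one of the keys in SIGN
    amount_cents: int


def rebuild_balances(events) -> dict:
    """Derive balances purely by replaying events in order; unknown event types raise KeyError."""
    balances = {}
    for e in events:
        balances[e.account_id] = balances.get(e.account_id, 0) + SIGN[e.kind] * e.amount_cents
    return balances


history = [
    AccountEvent("acct-1", "deposit", 10_000),
    AccountEvent("acct-1", "withdrawal", 2_500),
    AccountEvent("acct-1", "fee", 100),
    AccountEvent("acct-2", "deposit", 700),
]
```

`rebuild_balances(history)` gives `{"acct-1": 7_400, "acct-2": 700}`, while `rebuild_balances(history[:2])` gives the audit view after the second event, `{"acct-1": 7_500}`.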

In the high-stakes world of payments, real-time processing is no longer a competitive edge. It is a core requirement! Data streaming provides the infrastructure to make that possible.

Apache Kafka is NOT just for big data analytics. It enables transactional processing without compromising performance or consistency. See the full blog post for detailed use cases and architectural insights: Analytics vs. Transactions with Apache Kafka.

Data Streaming with Apache Kafka in the Payment Processor Ecosystem

Let’s look at how top payment processors use Apache Kafka.

Stripe: Apache Kafka for 99.9999% availability

Stripe is one of the world’s leading financial infrastructure platforms for internet businesses. It powers payments, billing, and financial operations for millions of companies—ranging from startups to global enterprises. Every transaction processed through Stripe is mission-critical, not just for Stripe itself but for its customers whose revenue depends on seamless, real-time payments.

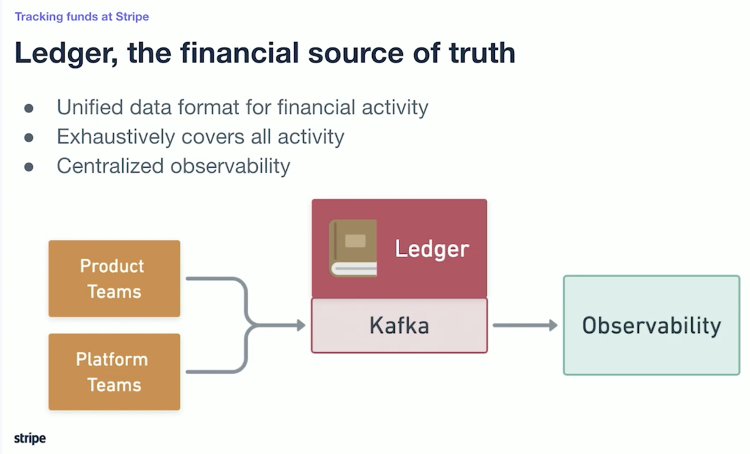

At the heart of Stripe’s architecture lies Apache Kafka, which serves as the financial source of truth. Kafka is used by the vast majority of services at Stripe, acting as the backbone for processing, routing, and tracking all financial data across systems. The core use case is Stripe’s general ledger, which models financial activity using a double-entry bookkeeping system. This ledger covers every fund movement within Stripe’s ecosystem and must be accurate, complete, and observable in real time.
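Double-entry bookkeeping has a simple invariant that makes it a good fit for an event log: every transaction posts entries that sum to zero, so the ledger as a whole always balances. The following sketch illustrates that invariant only. It is not Stripe’s implementation, and the account names and the sign convention (positive amounts are debits, negative amounts are credits) are assumptions for this example.

```python
from collections import defaultdict


class Ledger:
    """Minimal double-entry ledger: every posted transaction must balance to zero."""

    def __init__(self):
        self.balances = defaultdict(int)  # account -> net amount in cents
        self.journal = []                 # append-only record of posted transactions

    def post(self, txn_id: str, entries: list) -> None:
        """entries: (account, amount_cents) pairs; positive = debit, negative = credit."""
        if sum(amount for _, amount in entries) != 0:
            raise ValueError(f"unbalanced transaction {txn_id}")
        for account, amount in entries:
            self.balances[account] += amount
        self.journal.append((txn_id, list(entries)))

    def is_balanced(self) -> bool:
        return sum(self.balances.values()) == 0


ledger = Ledger()
# A hypothetical $100.00 card charge: the processor expects funds from the card network,
# owes $97.00 to the merchant, and books $3.00 as fee revenue.
ledger.post("ch_1", [
    ("card_network_receivable", 10_000),
    ("merchant_payable", -9_700),
    ("fee_revenue", -300),
])
```

Rejecting unbalanced postings up front means a single missing or duplicated entry is caught immediately instead of surfacing later as a reconciliation gap.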

Kafka ensures that all financial events, from payment authorizations to settlements and refunds, are processed with exactly-once semantics. This guarantees that the financial state is never overcounted, undercounted, or duplicated, which is essential in regulated environments.
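Kafka’s own exactly-once semantics are built on idempotent producers and transactions. A related pattern that achieves the same effect inside a consuming application is idempotent processing: remember which event IDs have already been applied and ignore redeliveries. The sketch below shows that swapped-in pattern, not Kafka’s internal mechanism. The event IDs are hypothetical, and in a real system the state update and the record of processed IDs would have to be committed atomically.

```python
class IdempotentTotals:
    """Applies each payment event at most once, even if it is delivered more than once."""

    def __init__(self):
        self.processed_ids = set()
        self.total_cents = 0

    def apply(self, event_id: str, amount_cents: int) -> bool:
        if event_id in self.processed_ids:
            return False  # duplicate delivery (for example, a retry after a timeout): skip
        # Assumption: these two updates are committed together in a real system.
        self.total_cents += amount_cents
        self.processed_ids.add(event_id)
        return True


totals = IdempotentTotals()
deliveries = [("evt-1", 500), ("evt-2", 300), ("evt-1", 500)]  # evt-1 is redelivered
results = [totals.apply(event_id, amount) for event_id, amount in deliveries]
```

Here `results` is `[True, True, False]`, and `totals.total_cents` stays at 800 instead of 1,300.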

Stripe also integrates Apache Pinot as a real-time OLAP engine, consuming events directly from Kafka for instant analytics. This supports everything from operational dashboards to customer-facing reporting, all without pulling from offline batch systems.

One of Stripe’s most advanced Kafka deployments supports 99.9999% availability, referred to internally as “six nines”. This is achieved through a custom-built multi-cluster proxy layer that routes producers and consumers globally. The proxy system ensures high availability even in the event of a cluster outage, allowing maintenance and upgrades with zero impact on live systems. This enables critical services, like Stripe’s “charge path” (the end-to-end flow of a transaction), to remain fully operational under all conditions. Learn more: “6 Nines: How Stripe keeps Kafka highly-available across the globe”.
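For scale, six nines leaves a downtime budget of about 31.5 seconds per year (0.000001 × 31,536,000 seconds). The details of Stripe’s proxy are beyond this post, so the following is only a toy illustration of the routing idea: a client-facing layer that sends each write to the first healthy cluster in a preference list, so that a cluster can fail or be taken down for maintenance without producers noticing. The class name and cluster names are hypothetical.

```python
class ClusterRouter:
    """Toy failover router: writes go to the first healthy cluster in preference order."""

    def __init__(self, clusters: list):
        self.clusters = list(clusters)
        self.healthy = {c: True for c in self.clusters}
        self.written = {c: [] for c in self.clusters}  # stands in for the real clusters

    def set_health(self, cluster: str, healthy: bool) -> None:
        """Called by a (hypothetical) health checker or by operators before maintenance."""
        self.healthy[cluster] = healthy

    def produce(self, topic: str, record: dict) -> str:
        for cluster in self.clusters:
            if self.healthy[cluster]:
                self.written[cluster].append((topic, record))
                return cluster
        raise RuntimeError("no healthy Kafka cluster available")


router = ClusterRouter(["cluster-a", "cluster-b"])
first = router.produce("charges", {"charge_id": "ch_1"})   # routed to cluster-a
router.set_health("cluster-a", False)                       # outage or maintenance
second = router.produce("charges", {"charge_id": "ch_2"})  # transparently routed to cluster-b
```

A production proxy also has to handle consumers, offsets across clusters, and replication between clusters, all of which this sketch ignores.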

Stripe’s use of Kafka illustrates how a data streaming platform can serve not just as a pipeline but as a foundational, highly available, and globally distributed layer of financial truth.

PayPal: Streaming Over a Trillion Events a Day with Apache Kafka

PayPal is one of the most widely used digital payment platforms in the world, serving hundreds of millions of users and businesses. As a global payment processor, its systems must be fast, reliable, and secure, especially when every transaction moves real money across borders, currencies, and regulatory frameworks.

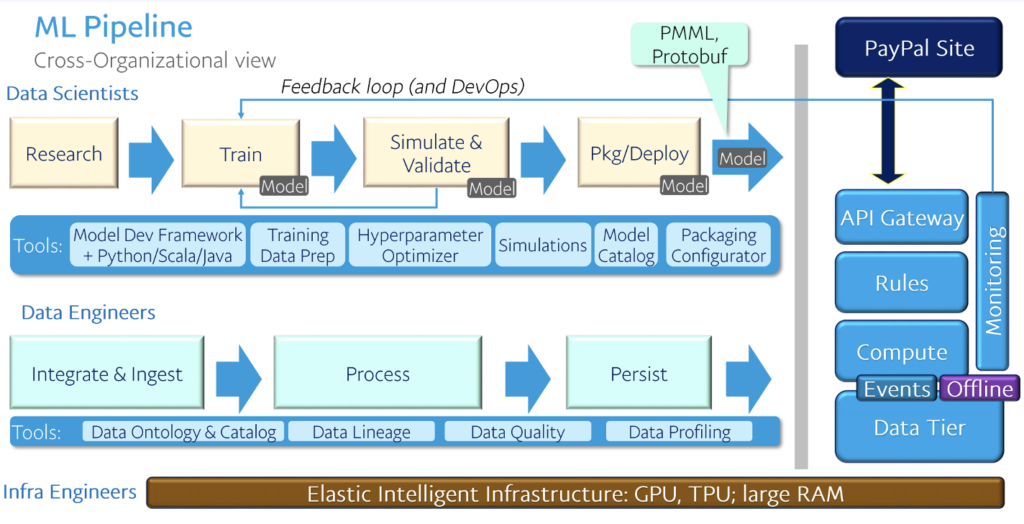

Apache Kafka plays a central role in enabling PayPal’s real-time data streaming infrastructure. Kafka supports some of PayPal’s most critical workloads, including fraud detection using AI/ML models, user activity tracking, risk and compliance, and application health monitoring. These are not optional features. They are fundamental to the integrity and safety of PayPal’s payment ecosystem.

The platform processes over 1 trillion Kafka messages per day during peak periods, such as Black Friday or Cyber Monday. Kafka enables PayPal to detect fraud in real time, respond to risk events instantly, and maintain continuous observability across services.
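PayPal’s fraud detection relies on AI/ML models, which are out of scope here. As a much simpler, swapped-in illustration of detecting fraud on a stream, the sketch below applies a rule-based velocity check: it flags a card that makes more than a given number of payments within a sliding time window. The thresholds and card IDs are hypothetical, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card that makes more than max_events payments within window_seconds."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # card_id -> timestamps still inside the window

    def is_suspicious(self, card_id: str, ts: float) -> bool:
        window = self.recent[card_id]
        # Evict timestamps that have slid out of the window (assumes in-order events).
        while window and ts - window[0] >= self.window_seconds:
            window.popleft()
        window.append(ts)
        return len(window) > self.max_events


check = VelocityCheck(max_events=3, window_seconds=60)
flags = [check.is_suspicious("card-1", t) for t in (0, 10, 20, 30)]
```

Here `flags` is `[False, False, False, True]`: the fourth payment within 60 seconds is flagged. State is kept per card, so other cards are unaffected.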

PayPal also uses Kafka to reduce analytics latency from hours to seconds. Streaming data from Kafka into tools like Google BigQuery allows the company to generate real-time insights that support payment routing, user experience, and operational decisions.

As a payment processor, PayPal cannot afford downtime or delays. Kafka ensures low latency, high throughput, and real-time responsiveness. This makes it a critical backbone for keeping PayPal’s global payments platform secure, compliant, and always available.

Payoneer: Migrating to Kafka for Scalable Event-Driven Payments

Payoneer is a global payment processor focused on cross-border B2B transactions. It enables freelancers, businesses, and online sellers to send and receive payments across more than 200 countries. Operating at this scale requires not only financial compliance and flexibility but also a robust, real-time data infrastructure.

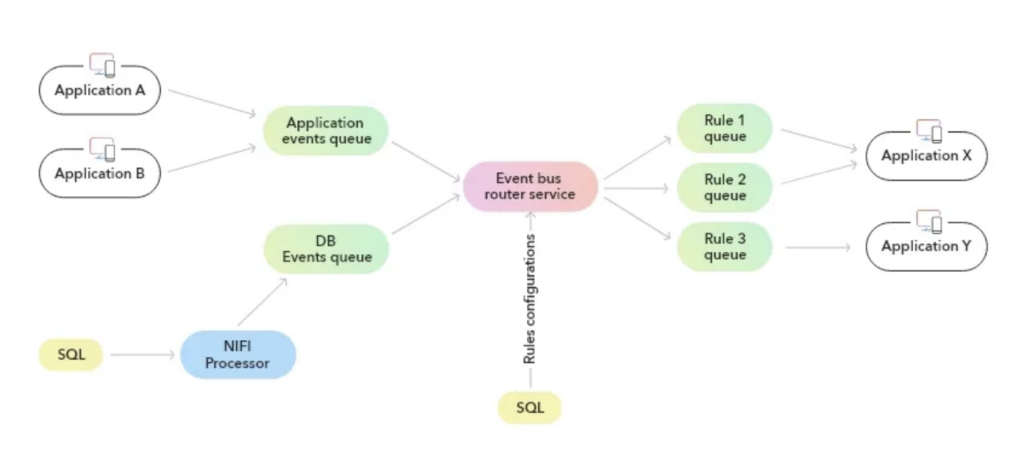

As Payoneer transitioned from monolithic systems to microservices, it faced serious limitations with its legacy event-driven setup, which relied on RabbitMQ, Apache NiFi, and custom-built routing logic. These technologies supported basic asynchronous communication and change data capture (CDC), but they quickly became bottlenecks as the number of services and data volumes grew. NiFi couldn’t keep up with CDC throughput, RabbitMQ wasn’t scalable for multi-consumer setups, and the entire architecture created tight coupling between services.

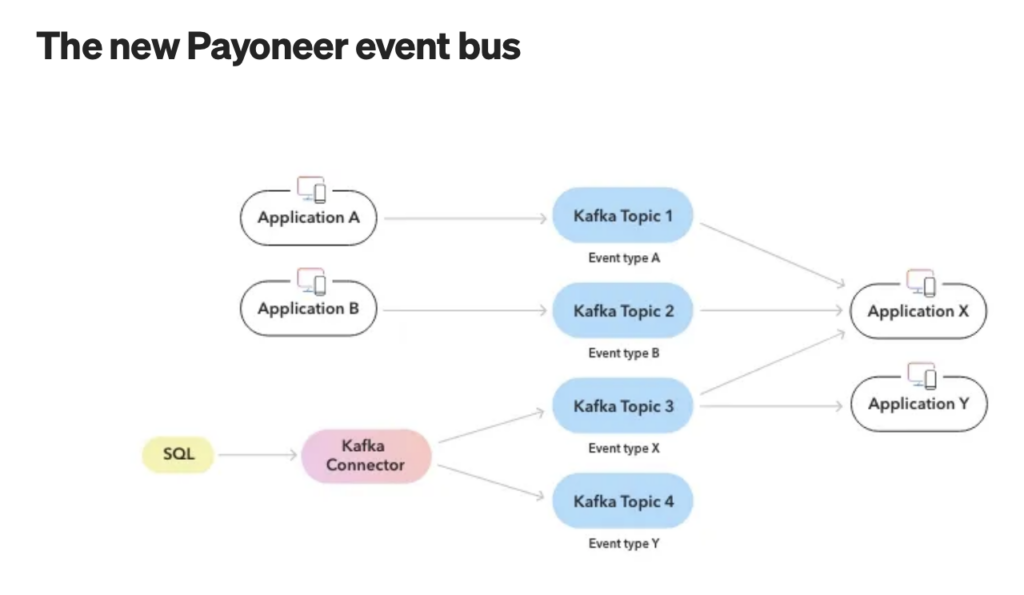

To solve these challenges, Payoneer reengineered its internal event bus using Apache Kafka and Debezium. This shift replaced queues with Kafka topics, unlocking critical benefits like high throughput, multi-consumer support, data replay, and schema enforcement. Application events are now published directly to Kafka topics, while CDC data from SQL databases is streamed using Debezium Kafka connectors.
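Debezium change events carry an operation code (`op`: `c` for create, `u` for update, `d` for delete, `r` for a snapshot read) together with the row state `before` and `after` the change. A consumer can use these fields to maintain its own up-to-date view of a source table. The sketch below assumes the event payload has already been deserialized into a Python dict. The `payouts` rows and their fields are hypothetical, and other Debezium event types (such as truncate) are not handled.

```python
def apply_change_event(view: dict, payload: dict) -> None:
    """Apply one Debezium-style change event to an in-memory view keyed by primary key 'id'."""
    op = payload["op"]
    if op in ("c", "r", "u"):
        # Create, snapshot read, or update: upsert the new row state.
        row = payload["after"]
        view[row["id"]] = row
    elif op == "d":
        # Delete: only the old row state is available, in "before".
        view.pop(payload["before"]["id"], None)
    else:
        raise ValueError(f"unsupported op {op!r}")


payouts = {}
apply_change_event(payouts, {"op": "c", "before": None,
                             "after": {"id": 1, "status": "PENDING"}})
apply_change_event(payouts, {"op": "u", "before": {"id": 1, "status": "PENDING"},
                             "after": {"id": 1, "status": "PAID"}})
```

After these two events, `payouts` holds the latest state of row 1 with status `"PAID"`. Because the events sit in a Kafka topic, any number of services can build such views independently and rebuild them by replaying the topic.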

By decoupling producers from consumers and removing the need for complex rule-based queue routing, Kafka enables a cleaner, more resilient event-driven architecture. It also supports advanced use cases like event filtering, enrichment, and real-time analytics.

For Payoneer, this migration wasn’t just a technical upgrade—it was a foundational shift that enabled the company to scale securely, communicate reliably between services, and process financial events in real time. Kafka is now a critical backbone for Payoneer’s modern payment infrastructure.

Worldline: Building an Enterprise-Grade Kafka Management Platform

Worldline is one of Europe’s leading payment service providers, processing billions of transactions annually across banking, retail, and mobility sectors. In this highly regulated and competitive environment, real-time reliability and observability are critical. These requirements made Apache Kafka a strategic part of Worldline’s infrastructure.

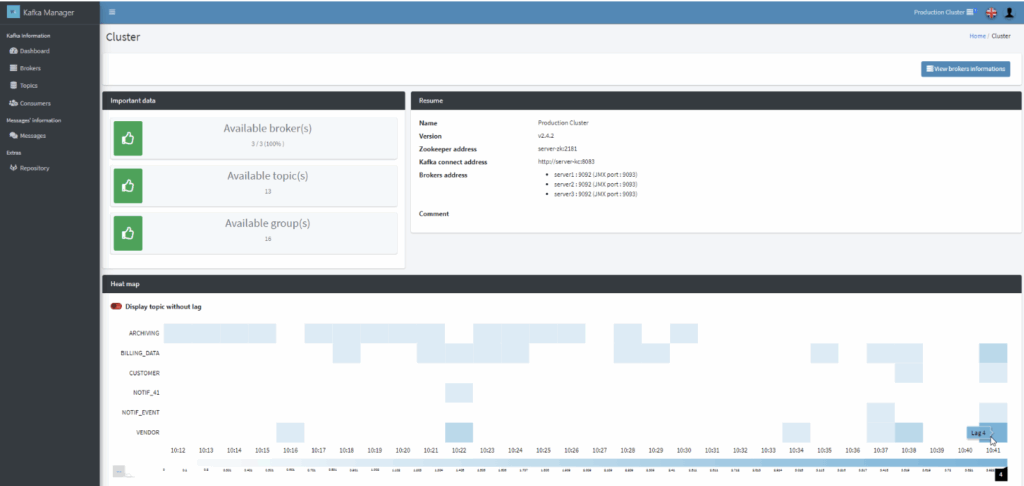

While Worldline has not publicly detailed specific payment-related use cases, the company’s investment in Kafka is clear if you look at its public GitHub projects, technical articles, and job postings. Kafka has been adopted across multiple teams and projects, powering asynchronous processing, data integration, and event-driven architectures that support Worldline’s role as a global payment processor.

One of the strongest indicators of Kafka’s importance at Worldline is the development of its own Kafka Manager tool. Built in-house and tailored to enterprise needs, this tool allows teams to monitor, manage, and troubleshoot Kafka clusters with greater efficiency and security, which is critical in PCI-regulated environments. The tool’s advanced features, such as offset tracking, message inspection, and cluster-wide configuration management, reflect the operational maturity required in the payments industry.
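Offset tracking mostly comes down to consumer lag: for each partition, how far the consumer group’s committed offset trails the latest offset in the log. Kafka monitoring tools typically report this metric. The function and sample offsets below are hypothetical and not taken from Worldline’s tool.

```python
def consumer_lag(end_offsets: dict, committed: dict) -> dict:
    """Lag per partition = log-end offset minus the group's committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Simplifying assumption: a partition without a committed offset counts from 0.
        # In Kafka, where such a consumer actually starts depends on auto.offset.reset
        # and on the partition's earliest retained offset.
        lag[partition] = max(end - committed.get(partition, 0), 0)
    return lag


lag = consumer_lag(end_offsets={0: 1_000, 1: 500, 2: 42}, committed={0: 990, 1: 500})
total_lag = sum(lag.values())
```

Here partition 0 is 10 messages behind, partition 1 is fully caught up, and partition 2 has never been committed, for a total lag of 52.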

Worldline’s blog post from 2021 gives a good overview of the motivation and includes an interesting (but somewhat outdated) comparison explaining why Worldline built its own Kafka UI.

Another interesting Worldline project is wkafka: a wrapper around the Kafka library for initializing and using Kafka in microservices written in Go. Worldline also continues to hire Kafka engineers and developers, showing ongoing investment in scaling and optimizing its streaming infrastructure.

Even without public use-case stories, the message is clear: Kafka is essential to Worldline’s ability to deliver secure, real-time payment services at scale.

Beyond Payments: Value-Added Services for Payment Processors Powered by Data Streaming

Payment processing is just the beginning. Data streaming supports a growing number of high-value services:

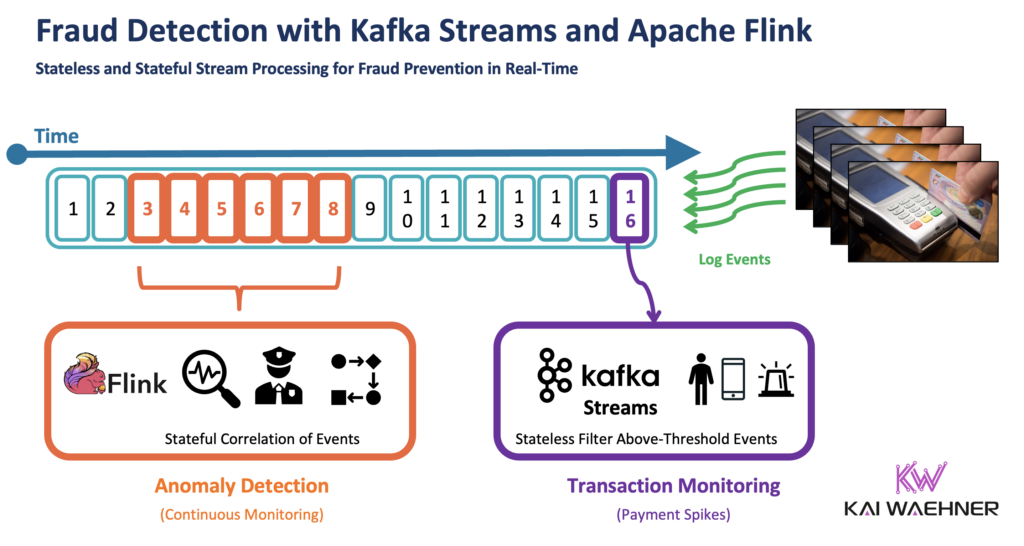

- Fraud prevention: Real-time scoring using historical and streaming features

- Analytics: Instant business insights from live dashboards

- Embedded finance: Integration with third-party platforms like eCommerce, ride-share, or SaaS billing

- Working capital loans: Credit decisions made in-stream based on transaction history
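To illustrate an in-stream credit decision, the sketch below keeps a running total of each merchant’s settled volume and recomputes a working capital offer with every settlement event. The eligibility threshold and the 10% advance rate are purely hypothetical business rules, and a real credit model would use far richer features (and typically a time window) than a lifetime total.

```python
from collections import defaultdict


class WorkingCapitalOffer:
    """Recomputes a merchant's loan offer incrementally as settlement events arrive."""

    def __init__(self, advance_percent: int = 10, min_volume_cents: int = 1_000_000):
        self.advance_percent = advance_percent    # hypothetical: offer 10% of settled volume
        self.min_volume_cents = min_volume_cents  # hypothetical eligibility threshold ($10,000)
        self.volume_cents = defaultdict(int)      # merchant_id -> settled volume so far

    def on_settlement(self, merchant_id: str, amount_cents: int) -> int:
        """Update the merchant's history and return the current offer in cents (0 = not eligible)."""
        self.volume_cents[merchant_id] += amount_cents
        total = self.volume_cents[merchant_id]
        if total < self.min_volume_cents:
            return 0
        return total * self.advance_percent // 100


offers = WorkingCapitalOffer()
first_offer = offers.on_settlement("merchant-1", 600_000)   # $6,000 settled: not yet eligible
second_offer = offers.on_settlement("merchant-1", 600_000)  # $12,000 settled: offer $1,200
```

Integer arithmetic in cents is used on purpose, so the offer never picks up floating-point rounding errors.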

These services not only add revenue but also help payment processors retain customers and build ecosystem lock-in.

For instance, explore various “Fraud Detection Success Stories with Apache Kafka, KSQL and Apache Flink”.

Looking Ahead: IoT, AI, and Agentic Systems

The future of payment processing is being shaped by:

- IoT Payments: Cars, smart devices, and wearables triggering payments automatically

- AI + GenAI: Personal finance agents that manage subscriptions, detect overcharges, and negotiate fees in real time

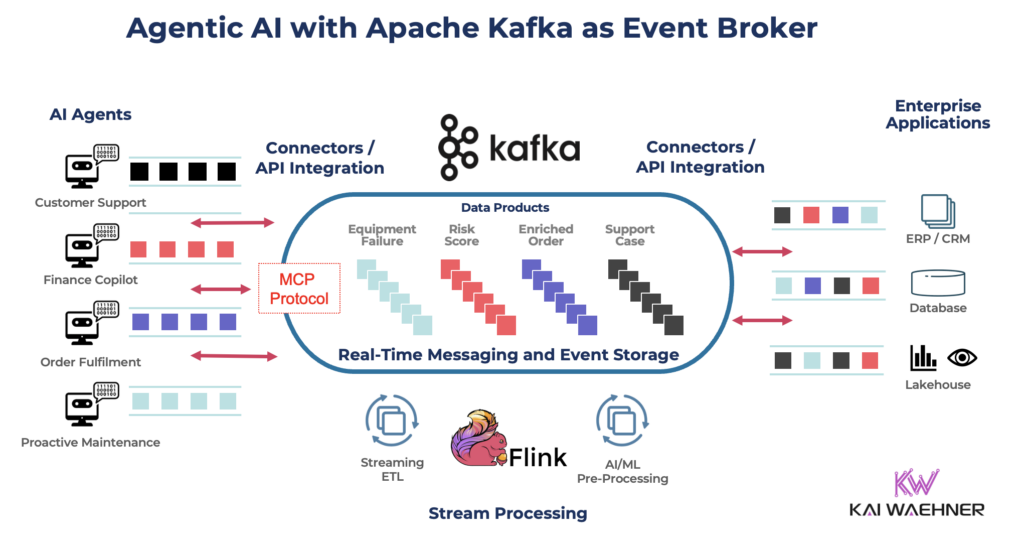

- Agentic AI systems: Acting on behalf of businesses to manage invoicing, reconciliation, and cash flow, streaming events into Kafka and out to various APIs

Data streaming is the foundation for these innovations. Without real-time data, AI can’t make smart decisions, and IoT devices can’t interact with payment systems at the edge.

Read more about data streaming and AI here:

- Real-Time Model Inference with Apache Kafka and Flink for Predictive AI and GenAI

- How Apache Kafka and Flink Power Event-Driven Agentic AI in Real Time

- Agentic AI with the Agent2Agent Protocol (A2A) and MCP using Apache Kafka as Event Broker

Modern payments are not just about money movement. They’re about data movement.

Payment processors are leading examples of how companies create value by processing streams of data, not just rows in a database. A data streaming platform is no longer just a tool; it is a strategic enabler of real-time business.

As the fintech space continues to consolidate and evolve, those who invest in event-driven architectures will have the edge in innovation, customer experience, and operational agility.
