Modern enterprises rely heavily on operational systems like SAP ERP, Oracle, Salesforce, ServiceNow, and mainframes to power critical business processes. But unlocking real-time insights and enabling AI at scale requires bridging these systems with modern analytics platforms like Databricks. This blog post explores how Confluent’s data streaming platform enables seamless integration between SAP, Databricks, and other systems to support real-time decision-making, AI-driven automation, and agentic AI use cases. It shows how Confluent delivers the real-time backbone needed to build event-driven, future-proof enterprise architectures, supporting everything from inventory optimization and supply chain intelligence to embedded copilots and autonomous agents.

About the Confluent and Databricks Blog Series

This article is part of a blog series exploring the growing roles of Confluent and Databricks in modern data and AI architectures:

- Blog 1: The Past, Present and Future of Confluent (The Kafka Company) and Databricks (The Spark Company)

- Blog 2: Confluent Data Streaming Platform vs. Databricks Data Intelligence Platform for Data Integration and Processing

- Blog 3: Shift-Left Architecture for AI and Data Warehousing with Confluent and Databricks

- Blog 4 (THIS ARTICLE): Databricks and Confluent in Enterprise Software Environments (with SAP as Example)

- Blog 5: Databricks and Confluent Leading Data and AI Architectures – and How They Compare to Competitors

Future articles will show how these platforms affect data use in businesses. Join the data streaming community and stay informed about new blog posts by subscribing to my newsletter, and follow me on LinkedIn or X (formerly Twitter) to stay in touch. You can also download my free book about data streaming use cases, including technical architectures and the relation to other operational and analytical platforms like SAP and Databricks.

Most Enterprise Data Is Operational

Enterprise software systems generate a constant stream of operational data across a wide range of domains. This includes orders and inventory from SAP ERP systems, often extended with real-time production data from SAP MES. Oracle databases capture transactional data critical to core business operations, while MongoDB contributes operational data—frequently used as a CDC source or, in some cases, as a sink for analytical queries. Customer interactions are tracked in platforms like Salesforce CRM, and financial or account-related events often originate from IBM mainframes.

Together, these systems form the backbone of enterprise data, requiring seamless integration for real-time intelligence and business agility. This data is often not immediately available for analytics or AI unless it’s integrated into downstream systems.

Confluent is built to ingest and process this kind of operational data in real time. Databricks can then consume it for AI and machine learning, dashboards, or reports. Together, SAP, Confluent and Databricks create a real-time architecture for enterprise decision-making.

SAP Product Landscape for Operational and Analytical Workloads

SAP plays a foundational role in the enterprise data landscape—not just as a source of business data, but as the system of record for core operational processes across finance, supply chain, HR, and manufacturing.

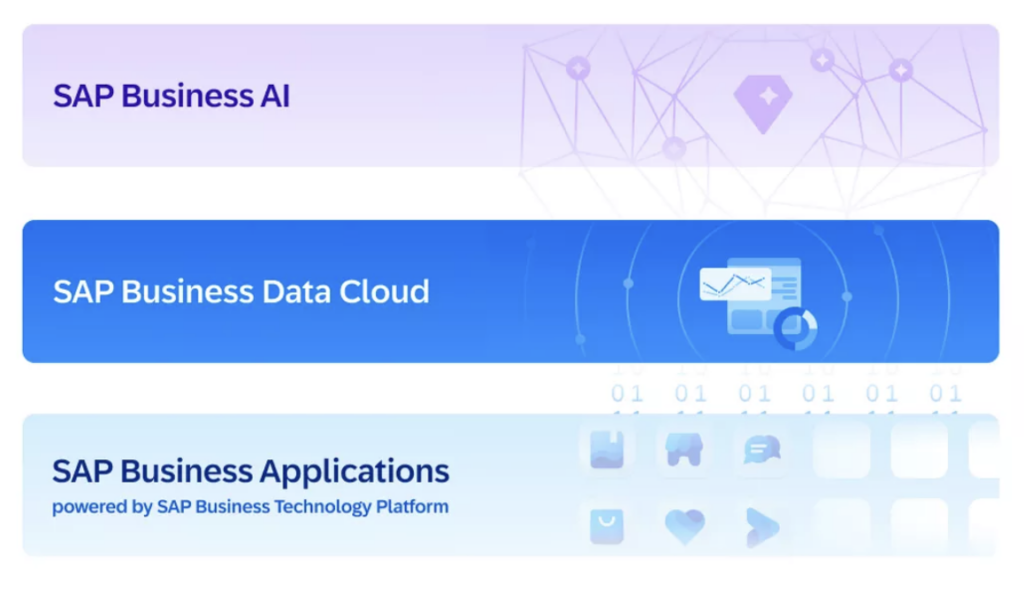

At a high level, the SAP product portfolio currently has three categories: SAP Business AI, SAP Business Data Cloud (BDC), and SAP Business Applications powered by SAP Business Technology Platform (BTP).

To support both operational and analytical needs, SAP offers a portfolio of platforms and tools, while also partnering with best-in-class technologies like Databricks and Confluent.

Operational Workloads (Transactional Systems):

- SAP S/4HANA – Modern ERP for core business operations

- SAP ECC – Legacy ERP platform still widely deployed

- SAP CRM / SCM / SRM – Domain-specific business systems

- SAP Business One / Business ByDesign – ERP solutions for mid-market and subsidiaries

Analytical Workloads (Data & Analytics Platforms):

- SAP Datasphere – Unified data fabric to integrate, catalog, and govern SAP and non-SAP data

- SAP Analytics Cloud (SAC) – Visualization, reporting, and predictive analytics

- SAP BW/4HANA – Data warehousing and modeling for SAP-centric analytics

SAP Business Data Cloud (BDC)

SAP Business Data Cloud (BDC) is a strategic initiative within SAP Business Technology Platform (BTP) that brings together SAP’s data and analytics capabilities into a unified cloud-native experience. It includes:

- SAP Datasphere as the data fabric layer, enabling seamless integration of SAP and third-party data

- SAP Analytics Cloud (SAC) for consuming governed data via dashboards and reports

- SAP’s partnership with Databricks to allow SAP data to be analyzed alongside non-SAP sources in a lakehouse architecture

- Real-time integration scenarios enabled through Confluent and Apache Kafka, bringing operational data in motion directly into SAP and Databricks environments

Together, this ecosystem supports real-time, AI-powered, and governed analytics across operational and analytical workloads—making SAP data more accessible, trustworthy, and actionable within modern cloud data architectures.

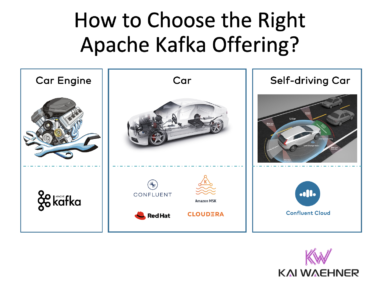

SAP Databricks OEM: Limited Scope, Full Control by SAP

SAP recently announced an OEM partnership with Databricks, embedding parts of Databricks’ serverless infrastructure into the SAP ecosystem. While this move enables tighter integration and simplified access to AI workloads within SAP, it comes with significant trade-offs. The OEM model is narrowly scoped, optimized primarily for ML and GenAI scenarios on SAP data, and lacks the openness and flexibility of native Databricks.

This integration is not intended for full-scale data engineering. Core capabilities such as workflows, streaming, Delta Live Tables, and external data connections (e.g., Snowflake, S3, MS SQL) are missing. The architecture is based on data at rest and does not embrace event-driven patterns. Compute options are limited to serverless only, with no infrastructure control. Pricing is complex and opaque, with customers often needing to license Databricks separately to unlock full capabilities.

Critically, SAP controls the entire data integration layer through its BDC Data Products, reinforcing a vendor lock-in model. While this may benefit SAP-centric organizations focused on embedded AI, it restricts broader interoperability and long-term architectural flexibility. In contrast, native Databricks, i.e., outside of SAP, offers a fully open, scalable platform with rich data engineering features across diverse environments.

Whichever Databricks option you prefer, this is where Confluent adds value—offering a truly event-driven, decoupled architecture that complements both SAP Datasphere and Databricks, whether used within or outside the SAP OEM framework.

Confluent and SAP Integration

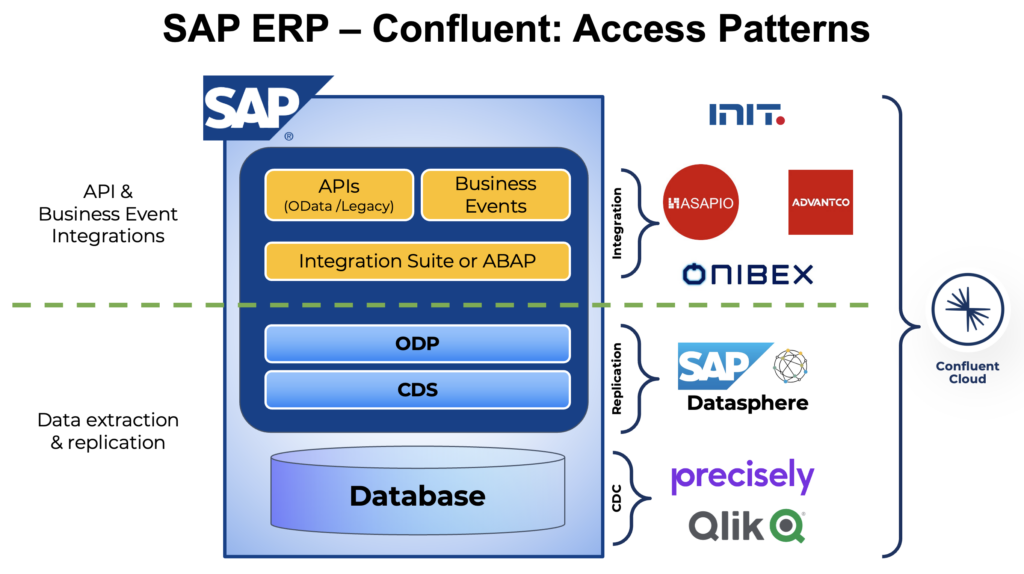

Confluent provides native and third-party connectors to integrate with SAP systems to enable continuous, low-latency data flow across business applications.

This powers modern, event-driven use cases that go beyond traditional batch-based integrations:

- Low-latency access to SAP transactional data

- Integration with other operational source systems like Salesforce, Oracle, IBM Mainframe, MongoDB, or IoT platforms

- Synchronization between SAP Datasphere and other data warehouse and analytics platforms such as Snowflake, Google BigQuery, or Databricks

- Decoupling of applications for modular architecture

- Data consistency across real-time, batch and request-response APIs

- Hybrid integration across any edge, on-premises, or multi-cloud environment

SAP Datasphere and Confluent

To expand its role in the modern data stack, SAP introduced SAP Datasphere—a cloud-native data management solution designed to extend SAP’s reach into analytics and data integration. Datasphere aims to simplify access to SAP and non-SAP data across hybrid environments.

SAP Datasphere simplifies data access within the SAP ecosystem, but it has key drawbacks when compared to open platforms like Databricks, Snowflake, or Google BigQuery:

- Closed Ecosystem: Optimized for SAP, but lacks flexibility for non-SAP integrations.

- No Event Streaming: Focused on data at rest, with limited support for real-time processing or streaming architectures.

- No Native Stream Processing: Relies on batch methods, adding latency and complexity for hybrid or real-time use cases.

Confluent alleviates these drawbacks and supports this strategy through bi-directional integration with SAP Datasphere. This enables real-time streaming of SAP data into Datasphere and back out to operational or analytical consumers via Apache Kafka. It allows organizations to enrich SAP data, apply real-time processing, and ensure it reaches the right systems in the right format—without waiting for overnight batch jobs or rigid ETL pipelines.

Confluent for Agentic AI with SAP Joule and Databricks

SAP is laying the foundation for agentic AI architectures with a vision centered around Joule—its generative AI copilot—and a tightly integrated data stack that includes SAP Databricks (via OEM), SAP Business Data Cloud (BDC), and a unified knowledge graph. On top of this foundation, SAP is building specialized AI agents for use cases such as customer 360, creditworthiness analysis, supply chain intelligence, and more.

The architecture combines:

- SAP Joule as the interface layer for generative insights and decision support

- SAP’s foundational models and domain-specific knowledge graph

- SAP BDC and SAP Databricks as the data and ML/AI backbone

- Data from both SAP systems (ERP, CRM, HR, logistics) and non-SAP systems (e.g. clickstream, IoT, partner data, social media), integrated through SAP’s partnership with Confluent

But here’s the catch: What happens when agents need to communicate with one another to deliver a workflow? Such agentic systems require continuous, contextual, and event-driven data exchange, not just point-to-point API calls and nightly batch jobs.

This is where Confluent’s data streaming platform comes in as critical infrastructure.

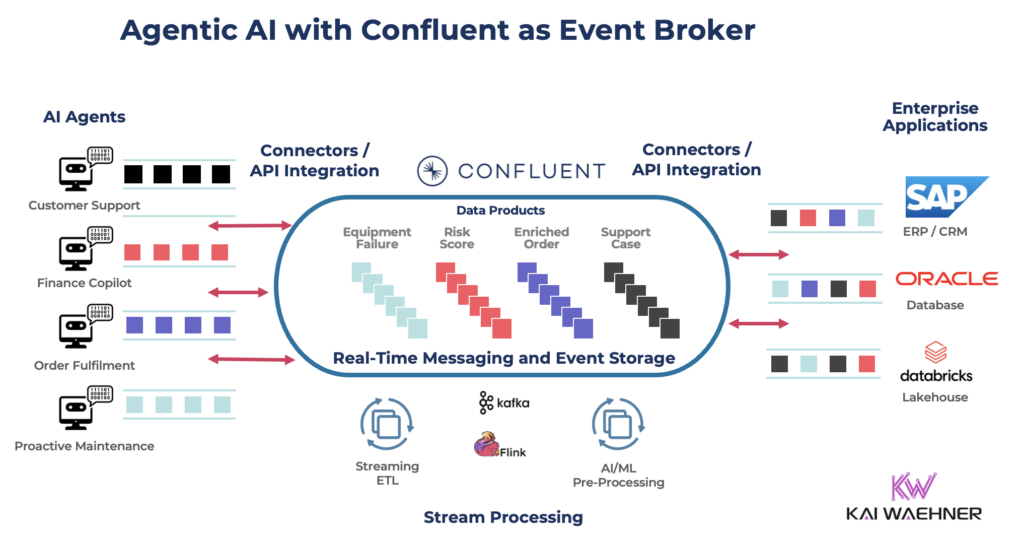

Agentic AI with Apache Kafka as Event Broker

Confluent provides the real-time data streaming platform that connects the operational world of SAP with the analytical and AI-driven world of Databricks, enabling the continuous movement, enrichment, and sharing of data across all layers of the stack.

The diagram above is a conceptual view of the architecture. The AI agents on the left side could be built with SAP Joule, Databricks, or any “outside” GenAI framework.

The data streaming platform helps connect the AI agents with the rest of the enterprise architecture, both within SAP and Databricks and beyond:

- Real-time data integration from non-SAP systems (e.g., mobile apps, IoT devices, mainframes, web logs) into SAP and Databricks

- True decoupling of services and agents via an event-driven architecture (EDA), replacing brittle RPC or point-to-point API calls

- Event replay and auditability—critical for traceable AI systems operating in regulated environments

- Streaming pipelines for feature engineering and inference: stream-based model triggering with low-latency SLAs

- Support for bi-directional flows: e.g., operational triggers in SAP can be enriched by AI agents running in Databricks and pushed back into SAP via Kafka events
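The decoupling and replay properties in the list above can be illustrated with a small sketch. This is a toy in-memory log written in plain Python, not the Kafka or Confluent API. The `EventLog` class, the agent group names, the event types, and the credit threshold are all hypothetical and exist only to show the pattern: agents read from a shared log at their own pace, react by publishing new events rather than calling each other, and any consumer can rewind to replay history.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log standing in for a Kafka topic (hypothetical, not a Kafka API)."""

    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=lambda: defaultdict(int))

    def publish(self, event: dict) -> int:
        """Append an event and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group: str) -> list:
        """Return events this consumer group has not seen yet and advance its offset."""
        start = self.offsets[group]
        batch = self.events[start:]
        self.offsets[group] = len(self.events)
        return batch

    def seek(self, group: str, offset: int) -> None:
        """Rewind a group so earlier events can be replayed, e.g. for an audit."""
        self.offsets[group] = offset


log = EventLog()
log.publish({"type": "OrderCreated", "order_id": "4711", "amount": 1200})
log.publish({"type": "OrderCreated", "order_id": "4712", "amount": 90000})

# Two independent agents read the same events without knowing about each other.
credit_agent_seen = log.poll("credit-agent")
supply_agent_seen = log.poll("supply-chain-agent")

# The credit agent reacts by publishing a new event instead of calling another agent.
for event in credit_agent_seen:
    if event["amount"] > 50000:  # illustrative threshold
        log.publish({"type": "CreditReviewRequested", "order_id": event["order_id"]})

# Replay: an auditor group reads the full history from offset 0.
log.seek("auditor", 0)
audit_trail = log.poll("auditor")
```

In a real deployment, the log would be a Kafka topic, the groups would be Kafka consumer groups with committed offsets, and retention settings would determine how far back a replay can reach.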

Without Confluent, SAP’s agentic architecture risks becoming a patchwork of stateless services bound by fragile REST endpoints—lacking the real-time responsiveness, observability, and scalability required to truly support next-generation AI orchestration.

Confluent turns the SAP + Databricks vision into a living, breathing ecosystem—where context flows continuously, agents act autonomously, and enterprises can build future-proof AI systems that scale.

Data Streaming Use Cases Across SAP Product Suites

With Confluent, organizations can support a wide range of use cases across SAP product suites, including:

- Real-Time Inventory Visibility: Live updates of stock levels across warehouses and stores by streaming material movements from SAP ERP and SAP EWM, enabling faster order fulfillment and reduced stockouts.

- Dynamic Pricing and Promotions: Stream sales orders and product availability in real time to trigger pricing adjustments or dynamic discounting via integration with SAP ERP and external commerce platforms.

- AI-Powered Supply Chain Optimization: Combine data from SAP ERP, SAP Ariba, and external logistics platforms to power ML models that predict delays, optimize routes, and automate replenishment.

- Shop Floor Event Processing: Stream sensor and machine data alongside order data from SAP MES, enabling real-time production monitoring, alerting, and throughput optimization.

- Employee Lifecycle Automation: Stream employee events (e.g., onboarding, role changes) from SAP SuccessFactors to downstream IT systems (e.g., Active Directory, badge systems), improving HR operations and compliance.

- Order-to-Cash Acceleration: Connect order intake (via web portals or Salesforce) to SAP ERP in real time, enabling faster order validation, invoicing, and cash flow.

- Procure-to-Pay Automation: Integrate procurement events from SAP Ariba and supplier portals with ERP and financial systems to streamline approvals and monitor supplier performance continuously.

- Customer 360 and CRM Synchronization: Synchronize customer master data and transactions between SAP ERP, SAP CX, and third-party CRMs like Salesforce to enable unified customer views.

- Real-Time Financial Reporting: Stream financial transactions from SAP S/4HANA into cloud-based lakehouses or BI tools for near-instant reporting and compliance dashboards.

- Cross-System Data Consistency: Ensure consistent master data and business events across SAP and non-SAP environments by treating SAP as a real-time event source—not just a system of record.
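To make the first use case in the list more concrete, here is a minimal sketch of how live stock levels could be derived from a stream of material movements. It is plain Python with made-up data. The field names (`material`, `warehouse`, `quantity`) and the low-stock threshold are assumptions for illustration, not an SAP or Confluent schema. In practice this kind of stateful aggregation would typically run in a stream processor such as Apache Flink.

```python
from collections import defaultdict


def apply_movement(stock: dict, movement: dict) -> dict:
    """Update the running stock level for one material in one warehouse."""
    key = (movement["material"], movement["warehouse"])
    stock[key] += movement["quantity"]
    return stock


# Hypothetical material movements; negative quantities are goods issues.
movements = [
    {"material": "M-100", "warehouse": "WH-1", "quantity": 50},   # goods receipt
    {"material": "M-100", "warehouse": "WH-1", "quantity": -20},  # transfer out
    {"material": "M-100", "warehouse": "WH-2", "quantity": 20},   # transfer in
    {"material": "M-200", "warehouse": "WH-1", "quantity": 5},    # goods receipt
]

stock = defaultdict(int)
for movement in movements:
    apply_movement(stock, movement)

# Flag material/warehouse pairs that fall below an illustrative threshold.
LOW_STOCK_THRESHOLD = 10
low_stock = sorted(key for key, qty in stock.items() if qty < LOW_STOCK_THRESHOLD)
```

Because the state is updated per event, every consumer of the resulting stream sees the current stock level as soon as a movement is recorded, rather than after the next batch run.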

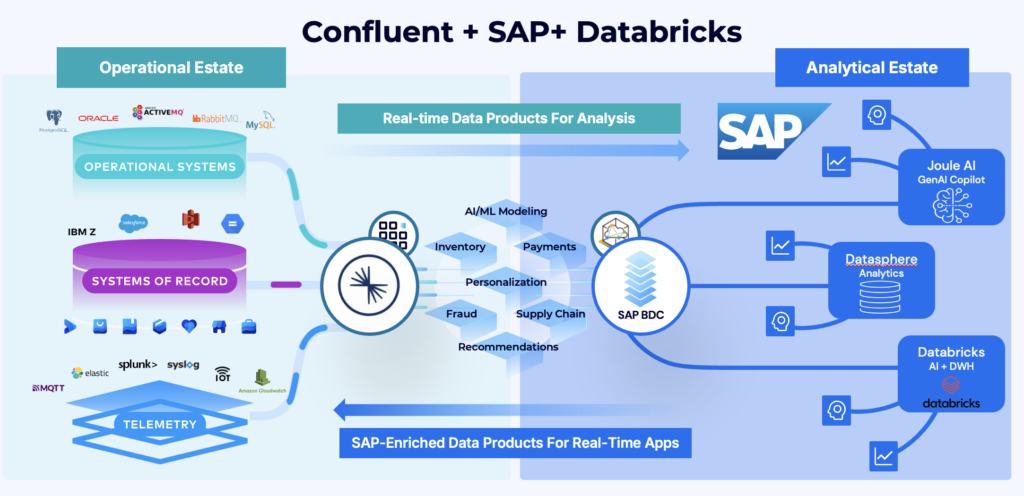

Example Use Case and Architecture with SAP, Databricks and Confluent

Consider a manufacturing company using SAP ERP for inventory management and Databricks for predictive maintenance. The combination of SAP Datasphere and Confluent enables seamless data integration from SAP systems, while the addition of Databricks supports advanced AI/ML applications—turning operational data into real-time, predictive insights.

With Confluent as the real-time backbone:

- Machine telemetry (via MQTT or OPC-UA) and ERP events (e.g., stock levels, work orders) are streamed in real time.

- Apache Flink enriches and filters the event streams—adding context like equipment metadata or location.

- Tableflow publishes clean, structured data to Databricks as Delta tables for analytics and ML processing.

- A predictive model hosted in Databricks detects potential equipment failure before it happens. A Flink application calls the remote model with low latency.

- The resulting prediction is streamed back to Kafka, triggering an automated work order in SAP via event integration.
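The steps above can be sketched as a chain of small functions. This is a simplified, in-memory illustration in plain Python, not Flink, Tableflow, or Databricks code. The equipment table, field names, event type, and the threshold rule that stands in for the remote model are all assumptions made for the example.

```python
from typing import Iterable, Iterator

# Hypothetical equipment master data, e.g. sourced from SAP ERP.
EQUIPMENT = {
    "press-01": {"plant": "Plant-A", "max_temp_c": 80.0},
    "press-02": {"plant": "Plant-B", "max_temp_c": 95.0},
}


def enrich(telemetry: Iterable[dict]) -> Iterator[dict]:
    """Filter out unknown machines and attach equipment metadata to each reading."""
    for reading in telemetry:
        meta = EQUIPMENT.get(reading["machine_id"])
        if meta is None:
            continue  # drop events that cannot be matched to equipment
        yield {**reading, **meta}


def predict_failure(event: dict) -> bool:
    """Stand-in for a remote model call: a simple threshold rule, not a trained model."""
    return event["temp_c"] > event["max_temp_c"]


def to_work_orders(events: Iterable[dict]) -> Iterator[dict]:
    """Emit a work-order event for every reading the stand-in model flags."""
    for event in events:
        if predict_failure(event):
            yield {
                "type": "MaintenanceWorkOrderRequested",
                "machine_id": event["machine_id"],
                "plant": event["plant"],
            }


# Hypothetical machine telemetry, as it might arrive via MQTT or OPC-UA.
telemetry_stream = [
    {"machine_id": "press-01", "temp_c": 72.5},
    {"machine_id": "press-02", "temp_c": 101.3},
    {"machine_id": "unknown-99", "temp_c": 150.0},
    {"machine_id": "press-01", "temp_c": 84.1},
]

work_orders = list(to_work_orders(enrich(telemetry_stream)))
```

Each stage only consumes and produces events, which mirrors the decoupling in the architecture: the enrichment logic, the model call, and the SAP work order integration can be changed or scaled independently.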

This bi-directional, event-driven pattern illustrates how Confluent enables seamless, real-time collaboration across SAP, Databricks, and IoT systems—supporting both operational and analytical use cases with a shared architecture.

Going Beyond SAP with Data Streaming

This pattern applies to other enterprise systems:

- Salesforce: Stream customer interactions for real-time personalization through Salesforce Data Cloud

- Oracle: Capture transactions via CDC (Change Data Capture)

- ServiceNow: Monitor incidents and automate operational responses

- Mainframe: Offload events from legacy applications without rewriting code

- MongoDB: Sync operational data in real time to support responsive apps

- Snowflake: Stream enriched operational data into Snowflake for near real-time analytics, dashboards, and data sharing across teams and partners

- OpenAI (or other GenAI platforms): Feed real-time context into LLMs for AI-assisted recommendations or automation

- “You name it”: Confluent’s prebuilt connectors and open APIs enable event-driven integration with virtually any enterprise system

Confluent provides the backbone for streaming data across all of these platforms—securely, reliably, and in real time.

Strategic Value of Event-Based Real-Time Integration with Data Streaming for the Enterprise

Enterprise software platforms are essential. But they are often closed, slow to change, and not designed for analytics or AI.

Confluent provides real-time access to operational data from platforms like SAP. SAP Datasphere and Databricks enable analytics and AI on that data. Together, they support modern, event-driven architectures.

- Use Confluent for real-time data streaming from SAP and other core systems

- Use SAP Datasphere and Databricks to build analytics, reports, and AI on that data

- Use Tableflow to connect the two platforms seamlessly

This modern approach to data integration delivers tangible business value, especially in complex enterprise environments. It enables real-time decision-making by allowing business logic to operate on live data instead of outdated reports. Data products become reusable assets, as a single stream can serve multiple teams and tools simultaneously. By reducing the need for batch layers and redundant processing, the total cost of ownership (TCO) is significantly lowered. The architecture is also future-proof, making it easy to integrate new systems, onboard additional consumers, and scale workflows as business needs evolve.

Beyond SAP: Enabling Agentic AI Across the Enterprise

The same architectural discussion applies across the enterprise software landscape. As vendors embed AI more deeply into their platforms, the effectiveness of these systems increasingly depends on real-time data access, continuous context propagation, and seamless interoperability.

Without an event-driven foundation, AI agents remain limited—trapped in siloed workflows and brittle API chains. Confluent provides the scalable, reliable backbone needed to enable true agentic AI in complex enterprise environments.

Examples of AI solutions driving this evolution include:

- SAP Joule / Business AI – Context-aware agents and embedded AI across ERP, finance, and supply chain

- Salesforce Einstein / Copilot Studio – Generative AI for CRM, service, and marketing automation built on top of Salesforce Data Cloud

- ServiceNow Now Assist – Intelligent workflows and predictive automation in ITSM and Ops

- Oracle Fusion AI / OCI AI Services – Embedded machine learning in ERP, HCM, and SCM

- Microsoft Copilot (Dynamics / Power Platform) – AI copilots across business and low-code apps

- Workday AI – Smart recommendations for finance, workforce, and HR planning

- Adobe Sensei GenAI – GenAI for content creation and digital experience optimization

- IBM watsonx – Governed AI foundation for enterprise use cases and data products

- Infor Coleman AI – Industry-specific AI for supply chain and manufacturing systems

- All the “traditional” cloud providers and data platforms such as Snowflake with Cortex, Microsoft Fabric, Amazon SageMaker, Amazon Bedrock, and Google Cloud Vertex AI

Each of these platforms benefits from a streaming-first architecture that enables real-time decisions, reusable data, and smarter automation across the business.
