The research company Forrester defines data streaming platforms as a new software category in a new Forrester Wave. Apache Kafka is the de facto standard used by over 100,000 organizations. Plenty of vendors offer Kafka platforms and cloud services. Many complementary open source stream processing frameworks like Apache Flink and related cloud offerings emerged. And competitive technologies like Pulsar, Redpanda, or WarpStream try to get market share leveraging the Kafka protocol. This blog post explores the data streaming landscape of 2024 to summarize existing solutions and market trends. The end of the article gives an outlook to potential new entrants in 2025.

Data Streaming is a New Software Category

Real-time data beats slow data. That’s true across almost all use cases in any industry. Event-driven applications powered by data streaming are the new black. The overall goal is business value: increasing revenue, reducing cost, reducing risk, or improving the customer experience.

Plenty of software categories and related data platforms exist to process and analyze data:

- Database: Store and execute transactional workloads.

- Data Warehouse: Process structured historical data to create recurring reports and unique insights.

- Data Lake: Process structured, semi-structured, or unstructured big data sets with batch processing to create recurring reports and unique insights.

- Lakehouse: A mix of data warehouse and data lake to process all data on one platform.

- Data Streaming: Continuously process data in motion and provide data consistency across communication paradigms (like real-time, batch, request-response) instead of storing and analyzing data only at rest.

Of course, these data platforms often overlap a bit. I did a complete blog series exploring the use cases and how they complement each other.

- Data Warehouse vs. Data Lake vs. Data Streaming – Friends, Enemies, Frenemies?

- Data Streaming for Data Ingestion into the Data Warehouse and Data Lake

- Data Warehouse Modernization: From Legacy On-Premise to Cloud-Native Infrastructure

- Case Studies: Cloud-native Data Streaming for Data Warehouse Modernization

- Lessons Learned from Building a Cloud-Native Data Warehouse

The Forrester Wave™: Streaming Data Platforms, Q4 2023

Forrester is a leading research and advisory company that provides insights and analysis on various aspects of technology, business, and market trends.

The company is known for its in-depth analysis, market research reports, and frameworks that help organizations navigate the rapidly changing landscape of technology and business. Businesses and IT leaders often use Forrester’s research to understand market trends, evaluate technology solutions, and develop strategies to stay competitive in their respective industries.

In December 2023, the research company published “The Forrester Wave™: Streaming Data Platforms, Q4 2023”. Get free access to the report here. The leaders are Microsoft, Google, and Confluent, followed by Oracle, Amazon, Cloudera, and a few others.

You might agree or disagree with the position of a specific vendor regarding the strength of its offering or strategy. But the emergence of this new wave is proof that data streaming is a new software category, not just yet another hype or next-generation ETL / ESB / iPaaS tool.

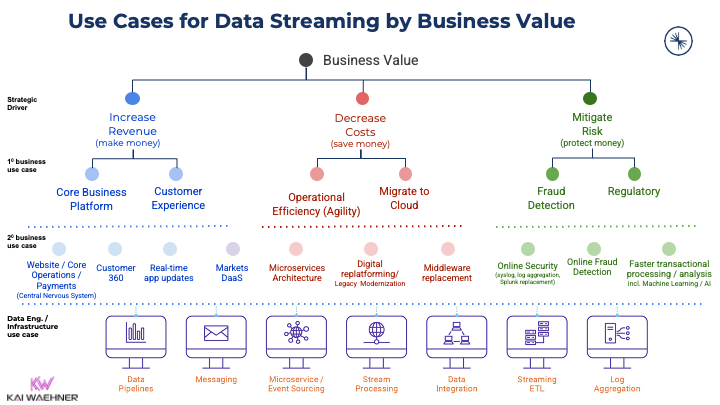

Data Streaming Use Cases by Business Value

A new software category opens use cases and adds business value across all industries:

Adding business value is crucial for any enterprise. With so many potential use cases, it is no surprise that more and more software vendors add Kafka support to their products. Search my blog for your favorite industry to find plenty of case studies and architectures. Or read about use cases for Apache Kafka across industries to get started.

The Data Streaming Landscape of 2024

Data Streaming is a separate software category of data platforms. Many software vendors built their entire businesses around this category. The data streaming landscape shows that most vendors use Kafka or implement its protocol because Apache Kafka has become the de facto standard for data streaming.

New software companies have emerged in this category in the last few years. And several mature players in the data market added support for data streaming in their platforms or cloud service ecosystem. Most software vendors use Kafka for their data streaming platforms. However, there is more than Kafka. Some vendors only implement the Kafka protocol (like Azure Event Hubs) or offer entirely different APIs (like Amazon Kinesis).

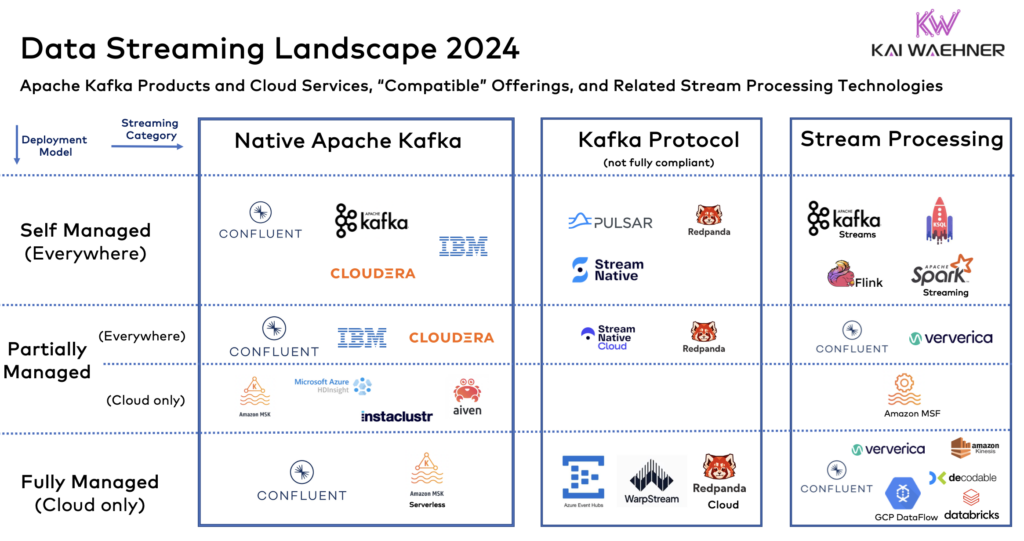

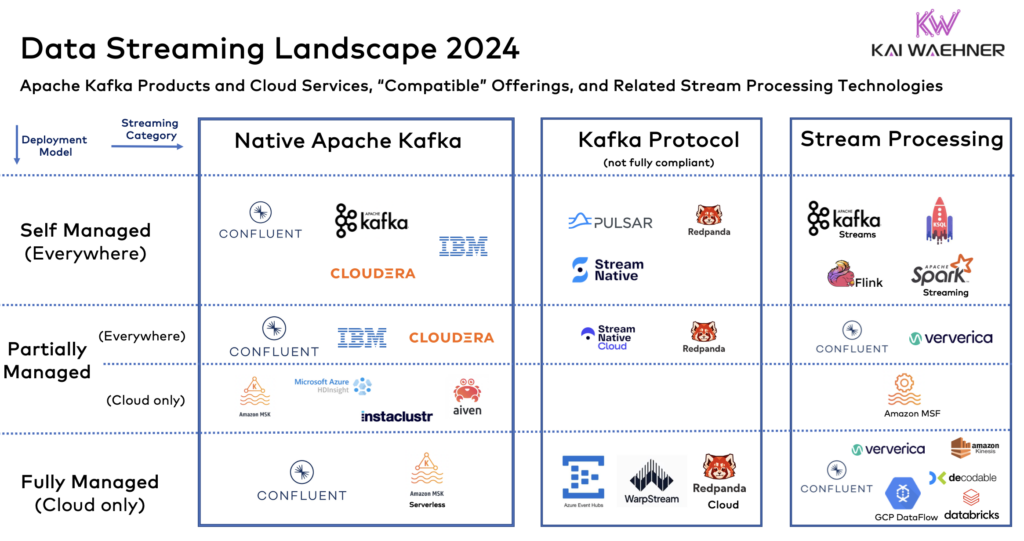

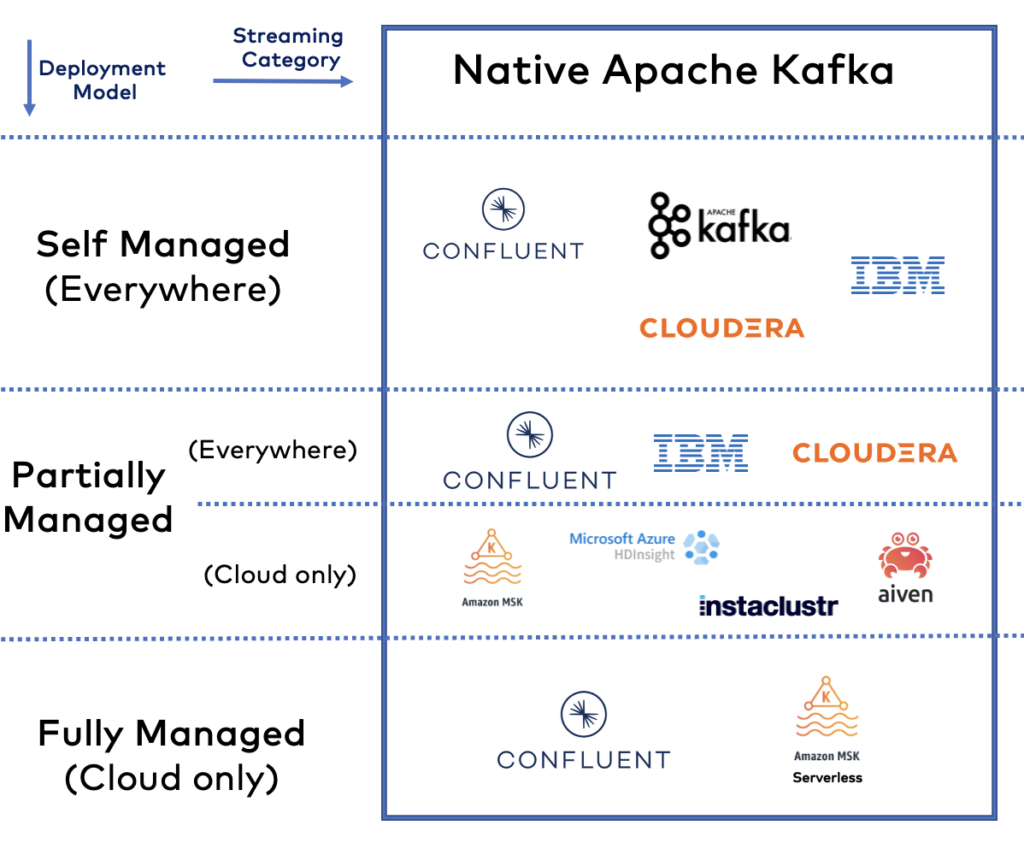

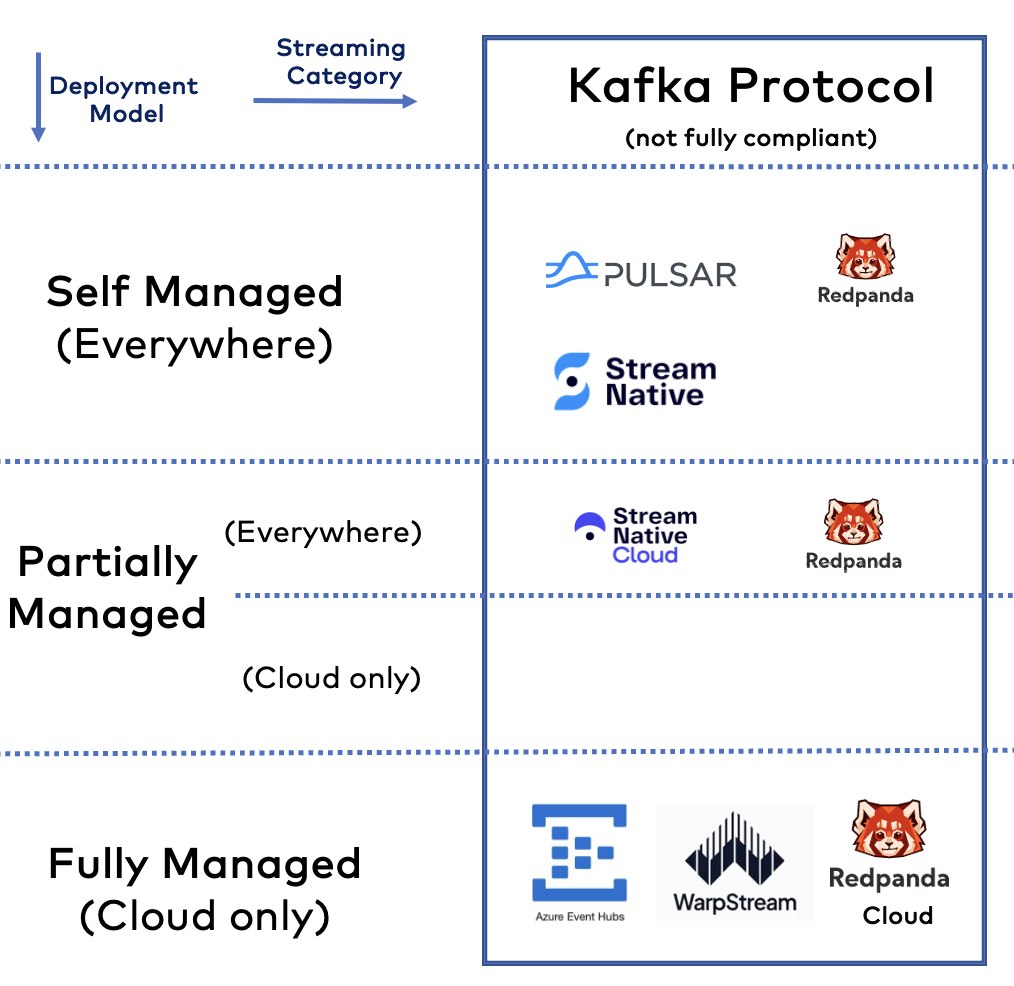

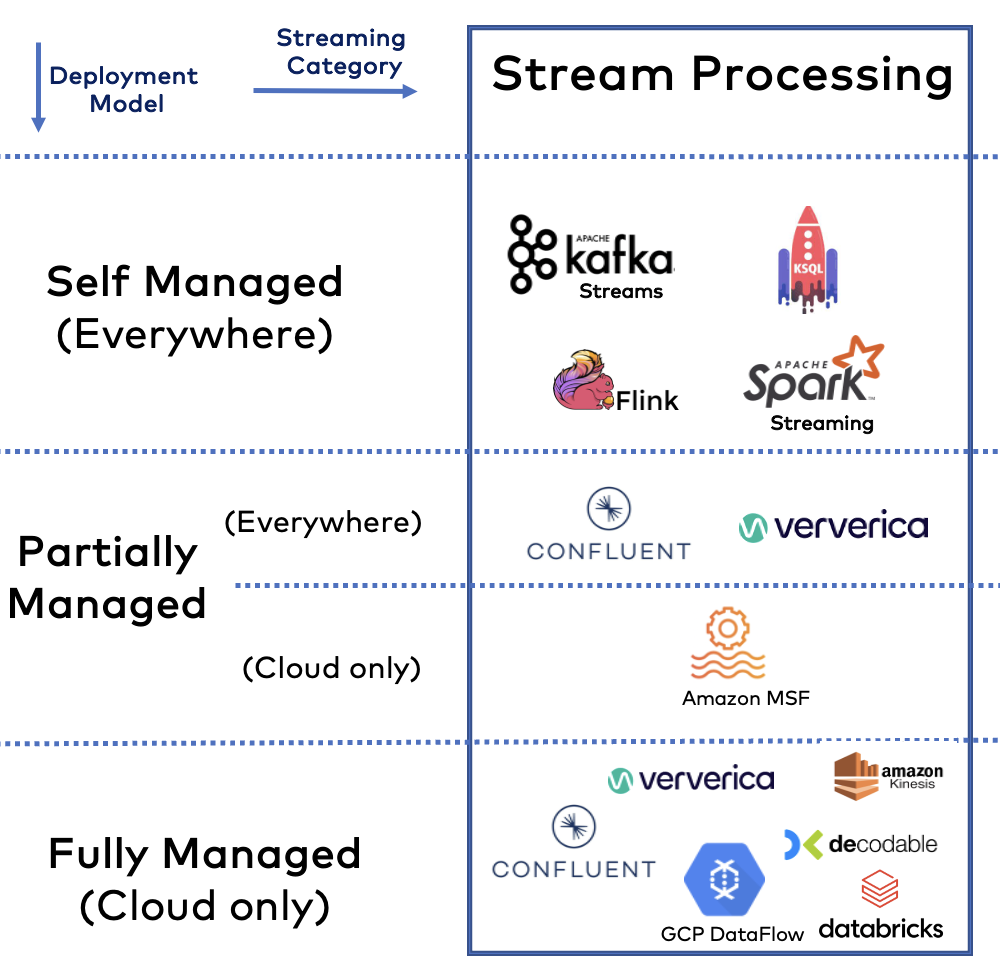

The following Data Streaming Landscape 2024 summarizes the current status of relevant products and cloud services.

Please note: Intentionally, this is not a complete list of frameworks, cloud services, or vendors. It is not an official research landscape. There is no statistical evidence. If your favorite technology is not in this diagram, then I did not see it in my conversations with customers, prospects, partners, analysts, or the broader data streaming community.

Also, note that I focus on general data streaming infrastructure. Brilliant solutions exist for using and analyzing streaming data for specific scenarios, like time series databases, machine learning engines, or observability platforms. These are complementary and often connected out of the box to a streaming cluster.

Deployment Models: Self Managed vs. Fully Managed

Different data streaming categories exist regarding the deployment model:

- Self Managed: Operate nodes like Kafka broker, Kafka Connect, Schema Registry by yourself with your favorite scripts and tools. This can be on-premise or in the public cloud in your VPC.

- Partially Managed: Reduce the operations burden via a cloud-native platform (usually Kubernetes) and related operator tools that automate operations tasks like rolling upgrades or rebalancing Kafka Partitions. This can be on-premise or in the public cloud either in your VPC or in the vendor’s VPC.

- Fully Managed: Leverage (truly) fully managed SaaS offerings that do 100 percent of the operations and provide critical SLAs and support to focus on integration and business logic.

What about Bring Your Own Cloud (BYOC)?

Some vendors offer a fourth deployment model: Bring Your Own Cloud (BYOC), an approach where the software vendor operates a cluster for you in your environment. BYOC is a deployment model which sits somewhere between a SaaS cloud service and a self-managed deployment.

I do NOT believe in this approach as too many questions and challenges exist with BYOC regarding security, support, and SLAs in the case of P1 and P2 tickets and outages. Hence, I put this in the category of self-managed. That is what it is, even though the vendor touches your infrastructure. In the end, it is your risk because you have to and want to control your environment.

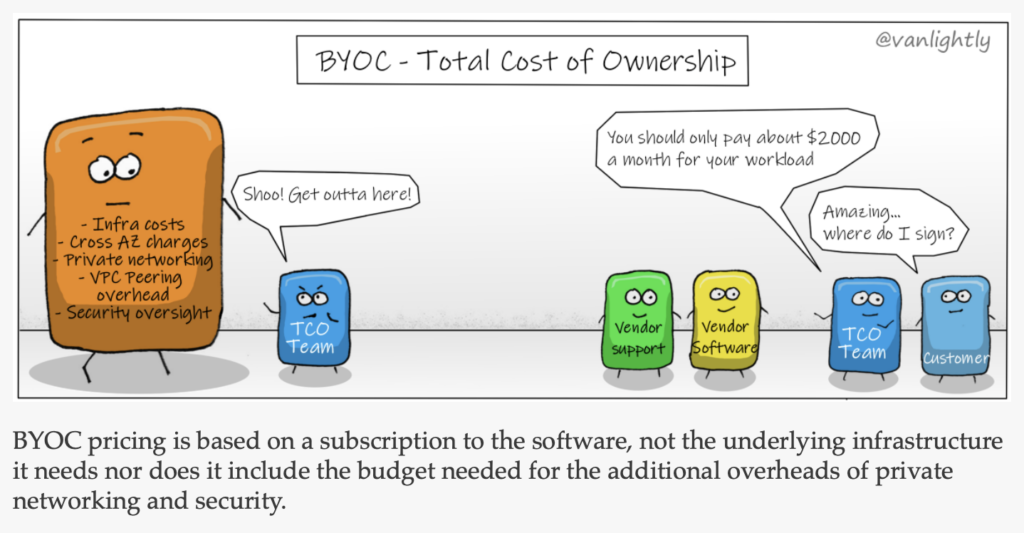

Jack Vanlightly wrote an excellent article “On The Future Of Cloud Services And BYOC”. Jack explores the following myths:

- Myth 1: BYOC enables better security by keeping the data in your account.

- Myth 2: BYOC is cheaper, with a lower total cost of ownership (TCO).

I summarize the story with these two drawings and highly recommend reading Jack’s detailed article about BYOC and its trade-offs:

Here is Jack’s conclusion: “Just as customers moved away from racking their own hardware to move to the cloud, those that try BYOC will similarly migrate away to SaaS for its simplicity, reliability, scalability and cost-effectiveness.” I fully agree.

Streaming Categories: Native Kafka vs. Protocol Compatibility vs. Stream Processing

Apache Kafka became the de facto standard for data streaming, like Amazon S3 is the de facto standard for object storage:

Read the detailed blog post to learn more about the differences between an open-source standard like Kafka and a proprietary protocol like S3. When you explore the data streaming world, there is no way not to look at the Apache Kafka ecosystem.

The data streaming landscape covers three streaming categories:

- Native Apache Kafka: The product or cloud service leverages the open source framework for real-time messaging and event store. The Kafka API is 100% compliant. Not included are Kafka Streams and Kafka Connect; many vendors exclude these Kafka features.

- Kafka protocol: The product or cloud service implements its own code but supports the Kafka API. These offerings are usually NOT 100% compliant. Kafka Connect and Kafka Streams are usually not part of the offerings.

- Stream processing: Frameworks and cloud services correlate data in a stateless or stateful manner. Solutions are either Kafka-native, work together with the Kafka protocol, or run completely independently.
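To make the difference between stateless and stateful processing concrete, here is a minimal Python sketch. It is plain Python, not the API of Kafka Streams, Flink, or any other framework, and the event shape and function names are assumptions for illustration only. The stateless operator looks at each event in isolation; the stateful operator remembers a running total per key.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shape for illustration: (key, amount)
Event = Tuple[str, float]


def stateless_filter(events: Iterable[Event], threshold: float) -> Iterator[Event]:
    """Stateless: each event is judged on its own, nothing is remembered."""
    for key, amount in events:
        if amount >= threshold:
            yield key, amount


def stateful_running_total(events: Iterable[Event]) -> Iterator[Event]:
    """Stateful: the operator keeps a per-key total across events."""
    totals: Dict[str, float] = defaultdict(float)
    for key, amount in events:
        totals[key] += amount
        yield key, totals[key]


payments = [("alice", 20.0), ("bob", 5.0), ("alice", 30.0), ("bob", 50.0)]
large = list(stateless_filter(payments, threshold=25.0))
totals = list(stateful_running_total(payments))
```

A real stream processor adds what this sketch leaves out: fault-tolerant state stores, windowing, and scaling the state across many instances.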

It is really tough to define these categories. For instance, I could add another section for Kafka Connect, or more generally, data integration. Another debate is how to clarify if a vendor supports the complete Kafka API (with or without Kafka Connect or Kafka Streams). But I do not want to have an endless list of solutions. Therefore, the focus is on the Kafka protocol (being the de facto standard for messaging and storage) and related stream processing with Kafka and non-Kafka technologies.

Changes in the Data Streaming Landscape from 2023 to 2024

My goal is NOT a growing landscape with tens or even hundreds of vendors and cloud services. Plenty of these pictures exist. Instead, I focus on a few technologies, vendors, and serverless offerings that I actually see used in the field and that excite the broader open source and cloud community. Therefore, the following changes were made compared to the data streaming landscape 2023 published a year ago:

Replaced

- Category: I changed “Non-Kafka” to “Stream Processing” to get the right focus on data streaming, not including core messaging solutions like Google Pub/Sub or Amazon Kinesis.

- Red Hat: Strategy shift at IBM (again). Red Hat shut down its cloud offering “Red Hat OpenShift Streams for Apache Kafka” and shifted conversations to IBM products. I replaced the logo with IBM, which still offers Kafka cloud products. But to be clear: Red Hat AMQ Streams (i.e., self-managed Kafka + Strimzi Kubernetes operator) is still sold.

Added

- WarpStream: A new entrant into fully managed cloud services using the Kafka protocol.

- Confluent: Added to the stream processing category with Kafka Streams, ksqlDB, and Apache Flink (via its Immerok acquisition).

- Ververica: Has provided a Flink platform for many years. Honestly, not sure why I missed them last year.

- Amazon Managed Service for Apache Flink (MSF): Formerly known as Amazon Kinesis Data Analytics (KDA). Added to partially managed stream processing.

- Google Cloud Dataflow: Added to fully managed stream processing.

Removed

This is a controversial section. Hence, once again, I emphasize that this is just what I see in the field, not statistical research or a survey.

- Google Pub/Sub: Focus on data streaming, not message brokers.

- TIBCO: Not used for data streaming (beyond TIBCO StreamBase in the financial services market for low-latency trading).

- DataStax: I do not see open source Apache Pulsar much in the field. And I did not see DataStax’s Pulsar offering, Luna Streaming, get any traction in the market at all. Also, it seems the vendor made another strategic shift and now focuses much more on vector databases and Generative AI (GenAI).

- Lenses + Conduktor: I did not remove them because they are unused. These are great tools. But this landscape focuses on data streaming platforms, not complementary management, monitoring, or proxy tools. So many tools exist around Kafka by now that this deserves its own landscape or comparison article.

- Pravega: I did not see this technology in the field a single time in 2023.

- Immerok: Acquired by Confluent.

- Hazelcast: I did not see it in the real world for data streaming scenarios. The technology is well-known as an in-memory data-grid, but not for stream processing.

As I mentioned the Forrester Wave above, you might realize that I did not include every “strong performer” from the report, e.g., TIBCO, SAS, or Hazelcast. The reason is that I don’t see any traction for these vendors in my conversations about event-driven architectures and stream processing. This is not statistical evidence, nor an attempt to discredit other tools.

Evaluation Criteria for Data Streaming Platforms

I often recommend using the following four aspects to look at different frameworks, platforms, and cloud services to evaluate a technology for your business project or enterprise architecture strategy:

- Cloud-native: Is the solution elastic to scale up and down? Is it fully managed / serverless, or just a bunch of server instances hosted in the cloud? Can you automate the development, operations, and testing process using DevOps, GitOps, test-driven development, and similar principles?

- Complete: Does the solution offer all required capabilities? Data streaming requires more than just messaging or data ingestion. Hence, does it provide connectors, data processing, governance, security, self-service, open APIs, and so on?

- Everywhere: Where can you use the solution? Cloud-only? Are all required cloud service providers supported? Is there an option to deploy in a data center or even at the edge (i.e., outside a data center), like a factory, cell tower, or retail store? How can you share data between regions, clouds or data centers? What use cases does it support (e.g., aggregation, disaster recovery, hybrid integration, migration, etc.)?

- Supported: Is the solution mature and battle-tested? Are public case studies available for your use case or industry? Does the vendor fully support the product? What are the SLAs? Are specific features excluded from commercial enterprise support? Some vendors offer data streaming cloud services (like a managed Kafka service) and exclude support in the terms and conditions (that many people don’t read in public cloud services, unfortunately).

Let’s take a deeper look into the different data streaming categories and start with the leading technology: Native Apache Kafka…

Native Apache Kafka for Data Streaming

Let’s start with the leading data streaming technology, Apache Kafka, and related vendors and SaaS offerings.

The growth of the Apache Kafka community in the last few years is impressive. Here are some statistics that Jay Kreps presented last year at the data streaming conference “Current – The Next Generation of Kafka Summit” in Austin, Texas:

- >100,000 organizations using Apache Kafka

- >41,000 Kafka meetup attendees

- >32,000 Stack Overflow questions

- >12,000 Jiras for Apache Kafka

- >31,000 open job listings requesting Kafka skills

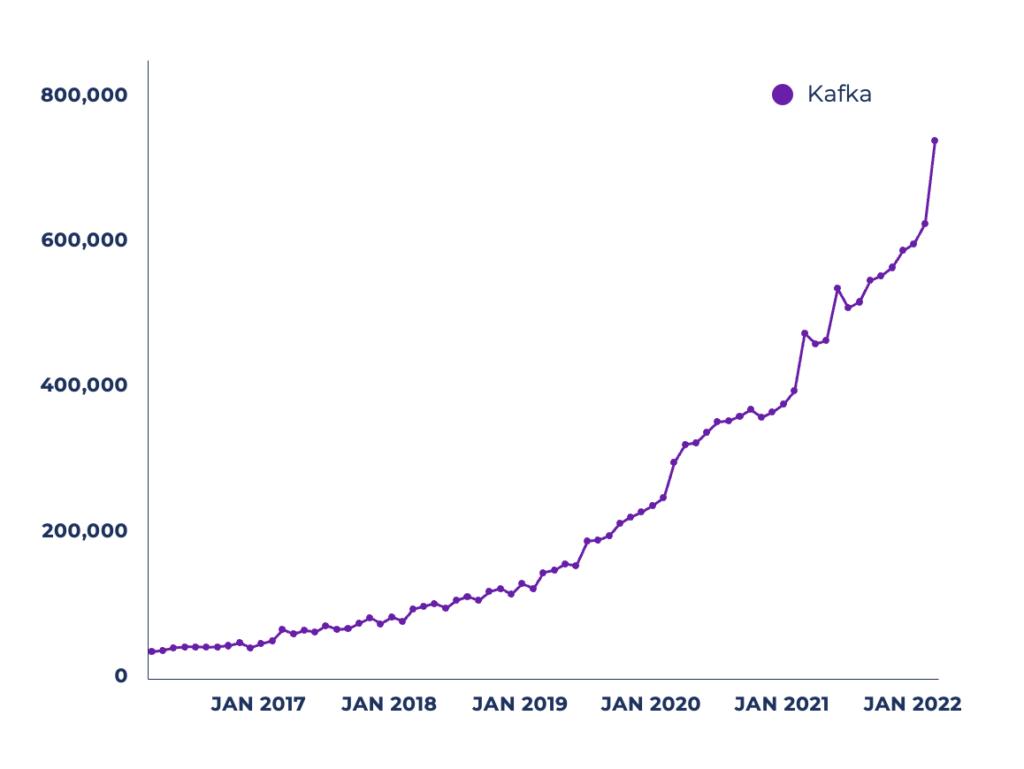

And look at the increased number of active monthly unique users downloading the Kafka Java client library with Maven:

Apache Kafka Vendors: Self-managed vs. Cloud Offerings

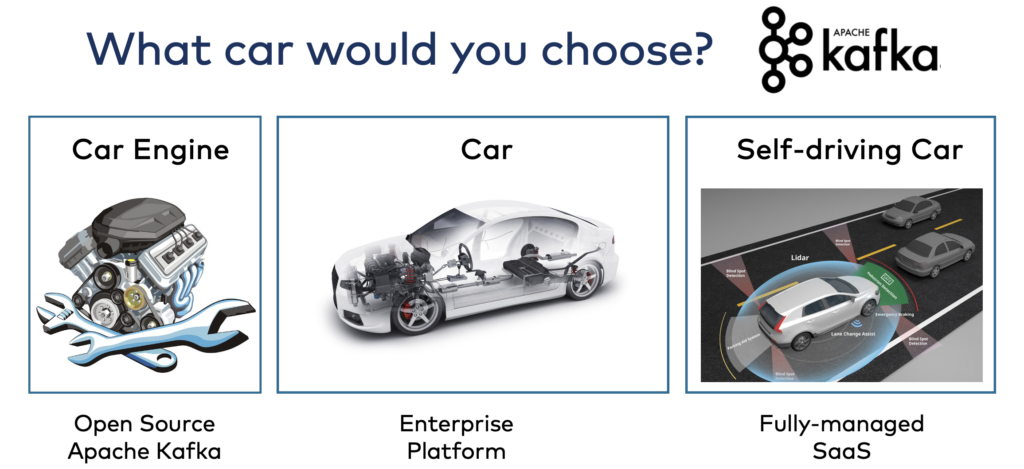

New software companies focus on data streaming. And even long-standing companies such as IBM and Oracle followed the trend in the past few years. On a top level – to keep it simple – three kinds of offerings exist for Apache Kafka:

I made a detailed comparison of on-premise Kafka vendors and cloud services using this car analogy. Only Amazon MSK Serverless (i.e., the fully managed service, not the partially managed MSK) was not yet available when I wrote this comparison. Hence, also read Confluent Cloud versus Amazon MSK Serverless.

Here are a few notes on each technology as a summary.

- Apache Kafka: The de facto standard for data streaming. Open source with a vast community. All the vendors in this list rely on (parts of) this project.

- Confluent: Provides data streaming everywhere with Confluent Platform (self-managed) and Confluent Cloud (fully managed and available across all major cloud providers).

- Cloudera: Provides Kafka as a self-managed offering. Focuses on combining many data technologies like Kafka, Hadoop, Spark, Flink, NiFi, and many more.

- IBM: Provides Kafka as a partially managed cloud offering and self-managed Kafka on Kubernetes via OpenShift (through Red Hat). Kafka is part of the integration portfolio that includes other open-source frameworks like Apache Camel.

- AWS: Provides two separate products with Amazon MSK (partially managed) and Amazon MSK Serverless (fully managed). Both products have very different functionalities and limitations. Both MSK offerings exclude Kafka support (read the terms and conditions). AWS has hundreds of cloud services, and Kafka is part of that broad spectrum. Only available in public AWS cloud regions; not on Outposts, Local Zones, Wavelength, etc.

- Instaclustr and Aiven: Partially managed Kafka cloud offerings across cloud providers. The product portfolios offer various hosted services of open-source technologies. Instaclustr also offers a (semi-)managed offering for on-premise infrastructure.

- Microsoft Azure HDInsight: A piece of Azure’s Hadoop infrastructure. Not intended for other use cases. Only available in public Azure cloud regions.

This is no comparison. Just a list with a few notes. Make your own evaluation of your favorite vendors. Check what you need: Cloud-native? Complete? Everywhere? Supported?

And keep in mind that many vendors exclude or do not focus on Kafka Streams and Kafka Connect and only offer incomplete Kafka; they want to sell their own integration and processing products instead. Don’t compare apples and oranges!

Open Source Frameworks and SaaS using the Kafka Protocol

A few vendors don’t rely on open-source Apache Kafka but built their own implementations on top of the Kafka protocol for different reasons.

Marketing will not tell you, but the Kafka protocol compatibility is limited. This can create risk in operating existing Kafka workloads against the cluster and differs in operations and execution (which can be good or bad).

Here are a few notes on each technology as a summary:

- Apache Pulsar: A competitor to Apache Kafka. Similar story and use cases, but different architecture. Kafka is a single distributed cluster (after removing the ZooKeeper dependency in 2022). Pulsar is three (!) distributed clusters: Pulsar brokers, ZooKeeper, and BookKeeper. I wrote about Pulsar vs. Kafka two years ago, and the status is still more or less the same: It is too late now to get more market traction. And Kafka catches up on some missing features like Queues for Kafka.

- StreamNative: The primary vendor behind Apache Pulsar. Offers self-managed and fully managed solutions. StreamNative Cloud for Kafka is still in a very early stage of maturity, and I am not sure whether it will ever mature. No surprise you can now also choose BYOC instead.

- Redpanda: A relatively new entrant into the data streaming market offering self-managed and fully managed products. Interesting approach to implementing the Kafka protocol with C++. It might take some market share if they can find the proper use cases and differentiators. Today, I don’t see Redpanda as an alternative to a Kafka-native offering because of its early stage in the maturity curve and no added value for solving business problems versus the added risk compared to Apache Kafka.

- Azure Event Hubs: A mature, fully managed cloud service. The service does one thing, and that is done very well: Data ingestion via the Kafka protocol. Hence, it is not a complete streaming platform, but is more comparable to Amazon Kinesis or Google Cloud Pub/Sub. Only available in public Azure cloud regions. The limited compatibility with the Kafka protocol and high cost of the service are the two blockers I hear regularly.

- WarpStream: A new entrant into the data streaming market. The cloud service is a Kafka-compatible data streaming platform built directly on top of S3. The higher latency is probably okay for some Kafka use cases. But only the future will show if this differentiating architecture plays a key role after other Kafka cloud services adopt Kafka’s KIP-405 for Tiered Storage (which has been available in early access since Kafka 3.6).

Be careful about statements of vendors that reimplement the Kafka protocol. Most of these vendors oversell the Kafka protocol compatibility. Additionally, “benchmarketing” (i.e., picking a sweet spot or niche scenario where you perform better than your competitor) is the favorite marketing technique to “prove” differentiators to the real Apache Kafka.

Stream Processing Technologies

While Apache Kafka is the de facto standard for message and event storage, many complementary and competitive technologies exist for stream processing:

Even more technologies emerge these days because of the growth of this software category across the globe and all industries. That’s excellent news. Data streaming is here to stay and grow.

The situation is challenging to explore as part of the data streaming landscape, as some products are complementary and competitive to the Apache Kafka ecosystem.

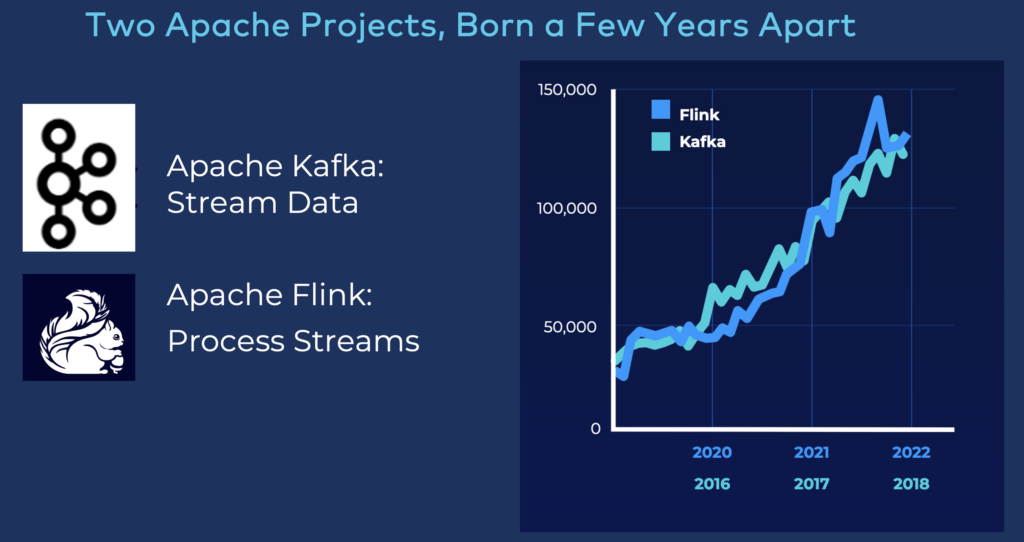

Apache Flink Adoption and Growth

Fun fact: The leading conference for Kafka was rebranded from “Kafka Summit” to “Current – The Next Generation of Kafka Summit” in 2022. Why? Because data streaming is more than Kafka. Many complementary and competitive technologies were present, including vendors, booths, demos, and customer case studies. That’s a remarkable evolution of data streaming for the community and enterprises across the globe!

Apache Flink is becoming the de facto standard for stream processing. The rise of Flink looks very similar to Kafka’s adoption a few years ago:

But don’t underestimate the power and use cases of Kafka-native stream processing with Kafka Streams. The adoption rate is massive, as Kafka Streams is easy to use. And it is part of Apache Kafka. I wrote a comparison of the benefits of Apache Flink and the differences between Flink and Kafka Streams.

Some Stream Processing Products are Complementary to Kafka

Each stream processing framework or cloud service has trade-offs. While Flink gets a lot of traction, there are others, too. No one size fits all use cases. Here are a few mature and emerging technologies that complement Apache Kafka.

Open Source Stream Processing Frameworks

- Kafka Streams: Part of open-source Apache Kafka. Hence, if you download Kafka from the Apache website, it always includes the library. You should always ask yourself if you need another framework besides Kafka Streams for stream processing. The significant benefit: One technology, one vendor, one infrastructure.

- ksqlDB (usually called KSQL, even after the rebranding): An abstraction layer on top of Kafka Streams to provide stream processing with streaming SQL. Hence, also Kafka-native. It comes with a Confluent Community License and is free to use. Sweet spot: Streaming ETL.

- Apache Flink: Leading open-source stream processing framework. Advanced features include a powerful scalable compute engine (separated from Kafka), freedom of choice for developers between ANSI SQL, Java and Python, APIs for Complex Event Processing (CEP), and unified APIs for stream and batch workloads.

- Spark Streaming: The streaming part of the open source big data processing framework Apache Spark. I am still not 100 percent convinced. Kafka Streams and Apache Flink are the better choices for stream processing. However, the enormous installed base of Spark clusters in enterprises broadens adoption.

Stream Processing Vendors and Cloud Services

- Ververica: Well-known Flink company. Acquired by Chinese giant Alibaba in 2019. Not much traction in Europe and the US, but definitely an expert player for Flink in Asia. Personally, I have never seen the vendor being used outside of Asia.

- Decodable: A new cloud service. Very early stage. I still added it, as I think it is an excellent strategic move to build a data streaming cloud service on top of Apache Flink. Huge potential if it is combined with existing Kafka infrastructures in enterprises. But also provides pre-built connectors for non-Kafka systems.

- Amazon Managed Service for Apache Flink (MSF): An (almost) fully managed service by AWS that allows customers to transform and analyse streaming data in real time with Apache Flink. It still has some (costly) gaps for auto-scaling and is not truly serverless. Supports all Flink interfaces, i.e., SQL, Java, and Python. And non-Kafka connectors, too. Only available on AWS.

- Confluent Cloud: A truly serverless Flink offering that is elastic and scales to zero if not used. Only supports SQL in the beginning. Java and Python support coming in 2024. Starts on AWS, but expected to be available on GCP and Azure in early 2024. Deeply integrated with fully managed Kafka, Schema Registry, Connectors, Data Governance, and other features of Confluent. Provides a seamless end-to-end developer experience for data streaming pipelines.

- Databricks: Was the leading vendor behind Apache Spark. Today, nobody talks about Spark anymore (even if it is a key technology piece of Databricks’ cloud platform). Trying to get much more into the business of real-time data. I like the platform for analytics, reporting, and AI / machine learning. But I am not convinced by the data lakehouse story around “doing everything within one big data lake”. Check out my blog series “Data Warehouse vs. Data Lake vs. Data Streaming – Friends, Enemies, Frenemies?”.

Most of these services work well with solutions of other vendors. For instance, Databricks integrates easily with any Kafka environment, or Amazon MSF connects directly to Confluent’s Kafka.

Apache Flink (or Spark Streaming) WITHOUT Kafka?

Most stream processing technologies complement Apache Kafka. But stream processing frameworks like Flink or cloud services like Databricks do NOT need Kafka as an ingestion layer. There are other options…

Flink, Spark, et al. can consume data from other streaming platforms or directly from data stores like a file or database. Be careful with the latter: If you use Flink or Spark Streaming for stream processing, that’s fine. But if the first thing to do is read the data from an S3 object store, well, that is data at rest.

BUT: A common trend in the data streaming market is long-term storage of (some) events within the event streaming platform. Especially, introducing Tiered Storage for Kafka changed the capabilities and use cases. The support for object storage by some vendors via the S3 interface can be an entire game changer for storing and processing events in real-time with the Kafka protocol or with other analytics engines and databases in near-real-time or batch. And Apache Iceberg might be the next trend we talk about for the 2025 streaming landscape.

Understand when to use Reverse ETL from a data lake and when it is an anti-pattern!

And understand that stream processing applications can also keep state: The backend of your Kafka Streams or Flink app can store state for your tasks like enrichment purposes. A stream processing application is not just about real-time data feeds. It also correlates these real-time feeds with (already ingested) historical data. This is a common approach for metadata or business data that is updated less frequently (like from an SAP ERP system).
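A minimal Python sketch of this enrichment pattern looks like the following. It is plain Python, not the Kafka Streams or Flink API, and the class, method, and field names are hypothetical. The processor keeps slowly changing reference data (e.g., customer master data from an ERP system) in local state and joins every incoming real-time event against it.

```python
from typing import Dict, Optional, Tuple


class EnrichmentProcessor:
    """Joins real-time order events with locally stored reference data."""

    def __init__(self) -> None:
        # Local state: customer_id -> region (hypothetical reference data)
        self._customers: Dict[str, str] = {}

    def on_reference_update(self, customer_id: str, region: str) -> None:
        """Called for each (infrequent) update of the reference data."""
        self._customers[customer_id] = region

    def on_order(self, customer_id: str, amount: float) -> Tuple[str, float, Optional[str]]:
        """Called for each real-time order; enriches it from local state."""
        return customer_id, amount, self._customers.get(customer_id)


processor = EnrichmentProcessor()
processor.on_reference_update("c1", "EMEA")
first = processor.on_order("c1", 10.0)    # region known from state
unknown = processor.on_order("c2", 5.0)   # no reference data ingested yet
processor.on_reference_update("c1", "APAC")
second = processor.on_order("c1", 7.5)    # sees the updated state
```

In a real framework, this state is backed by a fault-tolerant store and restored after a failure, which the sketch does not attempt.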

Some Stream Processing Products are Competitive to Kafka

In some situations, you must evaluate whether Apache Kafka or another technology is the right choice. Here are a few open-source and cloud competitors:

- Amazon Kinesis: Data ingestion into AWS data stores. Mature product for a specific problem. Only available on AWS.

- Google Cloud Dataflow: Fully managed service for executing Apache Beam pipelines within the Google Cloud Platform ecosystem. Mature product for a specific problem. Only available on GCP.

- Many competitive startups emerge around stream processing outside of the Kafka and Flink world. Let’s see if some get traction in 2024.

Amazon Kinesis and Google Cloud Dataflow are excellent cloud services if you “just” want to ingest data into a specific cloud storage. If there are no other use cases, these tools might be the right choice (if pricing at scale and other limitations work for you).

Apache Kafka is a much more flexible and strategic data streaming platform. Many projects still start with data ingestion and build the first pipeline. But providing access to the same stream of events to any other data sink or for powerful stream processing with tools like Kafka Streams or Apache Flink is a significant advantage.
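The idea of many consumers reading the same stream of events independently can be sketched in a few lines of Python. This is a toy in-memory model, not the Kafka client API; the class and the group names are hypothetical. The key point is that the log keeps the events and tracks a separate read position per consumer group, so adding a new sink never affects existing ones.

```python
from typing import Dict, List


class EventLog:
    """Toy append-only log with one read offset per consumer group."""

    def __init__(self) -> None:
        self._events: List[str] = []
        self._offsets: Dict[str, int] = {}

    def append(self, event: str) -> int:
        """Store the event and return its offset in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, group: str, max_events: int = 10) -> List[str]:
        """Return the next events for this group and advance only its offset."""
        start = self._offsets.get(group, 0)
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch


log = EventLog()
for event in ["order-1", "order-2", "order-3"]:
    log.append(event)

warehouse_batch = log.poll("warehouse-sink")             # reads everything so far
fraud_batch = log.poll("fraud-detection", max_events=2)  # reads at its own pace
log.append("order-4")
warehouse_next = log.poll("warehouse-sink")
fraud_next = log.poll("fraud-detection")
```

Real Kafka adds partitions, replication, retention, and explicit offset commits on top of this basic model.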

Potential for the Data Streaming Landscape 2025

Data streaming is a journey. So is the development of event streaming platforms and cloud services. Several established software and cloud vendors might get more traction with their data streaming offerings. And some startups might grow significantly. The following shows a few technologies that might evolve and see growing adoption in 2024:

- Additional Kafka cloud services like Digital Ocean or Oracle Cloud Infrastructure (OCI) Streaming might get more traction. I did not see these yet in the field. But e.g., combining Oracle GoldenGate with OCI Streaming might be interesting to some organizations for some use cases.

- Hazelcast rebrands significantly and is part of Forrester’s Streaming Data Wave. With both an on-premise and serverless cloud offering, the technology might get traction for data streaming and come back to my landscape next year.

- Streaming Databases like Materialize or RisingWave might become their own category. My feeling: Super early stage of the hype cycle. We will see in 2024 if and where this technology gets more broadly adopted and what the use cases are. It is hard to answer how these will compete with emerging real-time analytics databases like Apache Druid, Apache Pinot, ClickHouse, Rockset, Timeplus, et al. I know there are differences, but the broader community and companies need to a) understand these differences and b) find business problems for them.

- SaaS Startups like Quix and Bytewax (both stream processing with Python), DeltaStream (powered by Apache Flink), and many more emerge. Let’s see which of these gets traction in the market with an innovative product and business model.

- Existing multi-product vendors extend their offerings around Kafka with a separate Flink service. For instance, Aiven now also has a Flink product that might get traction like its Kafka offering.

- Traditional data management vendors like MongoDB or Snowflake try to get deeper into the data streaming business. I am still a fan of separation of concerns; so I think these should keep their sweet spot and (only) provide streaming ingestion and CDC as use cases, but not (try to) compete with data streaming vendors.

Fun fact: The business model of almost all emerging startups is fully managed cloud services, not selling licenses for on-premise deployments. Many are based on open-source or open-core, others only provide a proprietary implementation.

The Data Streaming Journey is a Long One…

Data Streaming is not a race, it is a journey! Event-driven architectures and technologies like Apache Kafka or Apache Flink require a mind shift in architecting, developing, deploying, and monitoring applications. Legacy integration, cloud-native microservices, and data sharing across hybrid and multi-cloud setups are the norm, not an exception.

The data streaming landscape 2024 shows how a new software category is emerging. We are still in an early stage. A new software category takes time to create. In most conversations with customers, partners, and the community, I hear statements like: “We see the value, but we are not there yet – we now start with building first data streaming pipelines and have a roadmap for the next years to add more advanced stream processing”.

The Forrester Wave: Streaming Data Platforms, Q4 2023 is first proof that the category is moving forward in the hype cycle.

Looking at the competitive data streaming market, one of my favorite real-world examples for choosing the right stream processing technologies comes from DoorDash: Why companies migrate from Amazon SQS and Kinesis to Apache Kafka and Flink. The article explores the trade-offs between cloud-specific solutions like Kinesis and an open ecosystem around open-source technologies like Kafka and Flink.

Last but not least, check out my Top 5 Data Streaming Trends for 2024 to understand how the data streaming landscape fits into emerging trends: data sharing, data contracts, serverless stream processing, multi-cloud architectures, and GenAI.

What are your most relevant and exciting trends for data streaming with Apache Kafka and Flink in 2024 to set data in motion? What does your enterprise landscape for data streaming look like? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.