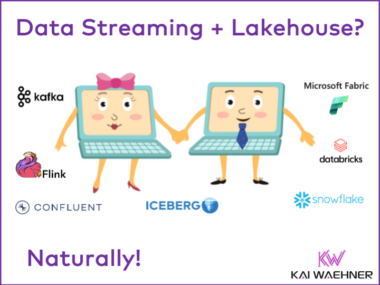

How Microsoft Fabric Lakehouse Complements Data Streaming (Apache Kafka, Flink, et al.)

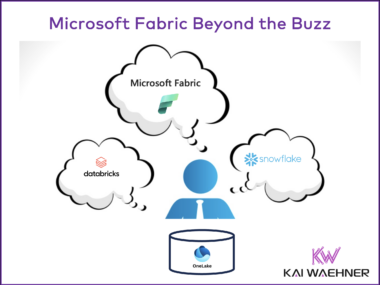

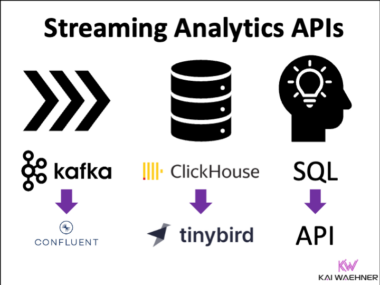

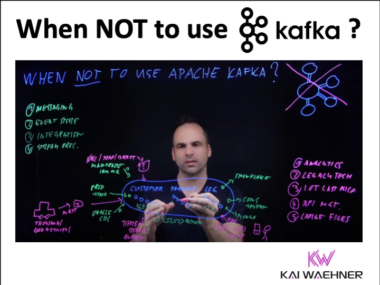

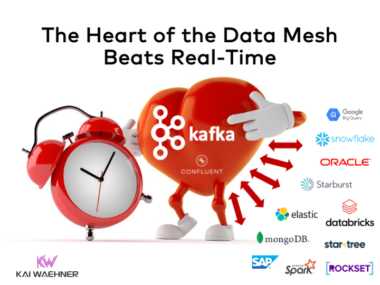

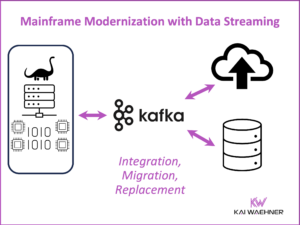

In today’s data-driven world, understanding data at rest versus data in motion is crucial for businesses. Data streaming frameworks like Apache Kafka and Apache Flink enable real-time data processing. Meanwhile, lakehouses like Snowflake, Databricks, and Microsoft Fabric excel in long-term data storage and detailed analysis, perfect for reports and AI training. This blog post explores how these technologies complement each other in enterprise architecture.